Web API and Microsoft Azure

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Lap Around the .NET Framework 4

Lap Around the .NET Framework 4. Marc Schweigert ([email protected]), Principal Developer Evangelist, DPE US Federal Government Team. http://blogs.msdn.com/devkeydet http://twitter.com/devkeydet .NET Framework 4.0: User Interface: ASP.NET (WebForms, MVC, Dynamic Data), Windows Presentation Foundation, WinForms. Services: Data Services, Windows Communication Foundation, Windows Workflow Foundation. Data Access: ADO.NET, Entity Framework, LINQ to SQL. Core: Managed Extensibility Framework, Dynamic Language Runtime, Parallel Extensions, Base Class Library, LINQ, Languages. Common Language Runtime. ASP.NET MVC 1.0 (Model View Controller): A new Web Application Project type. Simply an option. Not a replacement for WebForms. Builds on top of ASP.NET. Manual vs. Automatic Transmission. Supports a clear separation of concerns. Supports testability. Supports a “close to the metal” programming experience. ASP.NET MVC 2: Included in Visual Studio 2010. Download for Visual Studio 2008 (Service Pack 1). Both versions built against .NET 3.5. What’s New in MVC 2? Better Separation of Concerns (Maintainability): Html.RenderAction(), Areas. Easier Validation (Maintainability/Productivity): Data Annotations, Client Validation. Helper Improvements (Maintainability/Productivity): Strongly-Typed Helpers, Templated Helpers. ASP.NET 4 Web Forms? Support for SEO with URL Routing. Cleaner HTML. Client ID improvements. ViewState improvements. Dynamic Data Improvements. Chart Controls. Productivity and Extensibility. Rich Client Ajax supports both MVC & Web Forms. WPF 4: Calendar, Data Grid, DatePicker, Ribbon (separate download)

XAMARIN.FORMS for BEGINNERS ABOUT ME Tom Soderling Sr

XAMARIN.FORMS FOR BEGINNERS. ABOUT ME: Tom Soderling, Sr. Mobile Apps Developer @ Polaris Industries; Ride Command. Xamarin.Forms enthusiast. DevOps hobbyist & machine learning beginner. 4 year XCMD. Blog: https://tomsoderling.github.io GitHub: https://github.com/TomSoderling Twitter: @tomsoderling How Deep Pickster Spaniel Is It? THE PLAN • Introduction: Why, What, and When • Overview of Xamarin.Forms Building Blocks • Building a Xamarin.Forms UI in XAML • Data Binding • View Customization • Next Steps & Resources • Please ask any questions that come up! INTRODUCTION : WHY • WET: the soggy state of mobile app development • Write Everything Twice INTRODUCTION : WHAT • What is Xamarin.Forms? • Cross-platform UI framework • Platforms: • Mobile: iOS 8 and up, Android 4.0.3 (API 15) • Desktop: Windows 10 UWP, macOS, WPF • Samsung Smart Devices: Tizen INTRODUCTION : WHAT • Brief History: • May 2011, Xamarin founded • MonoTouch and Mono for Android using MonoDevelop IDE • February 2013, release of Xamarin 2.0 • Xamarin Studio IDE & integration with Visual Studio • Renamed to Xamarin.Android and Xamarin.iOS • May 2014, Xamarin.Forms released as part of Xamarin 3 • February 24 2016, Xamarin acquired by Microsoft • Owned, actively developed on, and supported by Microsoft • Free

A Field Guide to Web Apis by Kin Lane Contents

A field guide to web APIs. By Kin Lane. Contents: Executive summary. What are APIs used for? (Open data; Websites; Mobile; Automobiles; Homes and buildings). Why are web APIs different? (They build on existing web architecture; Intuitive resources; Simplicity rules; Easy to understand for developers and even nondevelopers; Self-service resources). History of web APIs (Commerce; Social; Cloud computing; Mobile). What technology goes into an API? (REST; JSON; Security; Keys; Basic auth; Open authorization; Webhooks). Deploying your web API (Do-it-yourself approaches; Cloud solutions; Enterprise gateways). Established practices for managing APIs (Self-service; Getting started; Documentation; Code samples; Support and feedback loops; The legal aspect; Developer dashboard). Marketing and API evangelism (Goals; User engagement; Blogging; Landscape analysis; GitHub; Social; Events). The future of web APIs (API aggregation; Real-time APIs; Backend as a Service (BaaS); Automation; Voice; Internet of things). Cloud trends (Maturity of IaaS layer; Opportunities in the PaaS layer). Key takeaways. About Kin Lane. Executive summary: A new breed of web API has emerged, delivering a vision of a lightweight, low-cost approach to connect devices and allowing applications to exchange data efficiently. This research report is a field guide for web API providers, developers, and even nondevelopers.

End to End Azure and Xamarin.Forms

End-to-End Native Mobile Apps with C# and Xamarin. James Montemagno, Developer Evangelist, Xamarin. [email protected] motzcod.es @JamesMontemagno DESIGN – DEVELOP – INTEGRATE, TEST, MONITOR, LEARN. iOS C# UI, Android C# UI, Windows C# UI, Shared C# Mobile. Shared C# codebase • 100% native API access • High performance. Windows: Microsoft.Phone, Microsoft.Networking, Windows.Storage, Windows.Foundation, Microsoft.Devices. iOS: MapKit, UIKit, iBeacon, CoreGraphics, CoreMotion. Android: Text-to-speech, ActionBar, Printing Framework, Renderscript, NFC. Listed alongside each platform: System, System.Net, System.IO, System.Linq, System.Xml, System.Data, System.Windows, System.Numerics, System.Core, System.ServiceModel. .NET IL + JIT. Compile C# and Link. .APK. Runs Natively. Bindings. Xamarin.iOS does full Ahead Of Time (AOT) compilation to produce an ARM binary for Apple’s App Store. Xamarin.Android takes advantage of Just In Time (JIT) compilation on the Android device. ✓ Always Up-to-Date. Traditional Xamarin Approach: iOS C# UI, Android C# UI, Windows C# UI, Shared C# Backend. With Xamarin.Forms: More code-sharing, all native. Shared UI Code, Shared C# Backend. ✓ 40+ Pages, layouts, and controls (Build from code behind or XAML) ✓ Two-way data binding ✓ Navigation ✓ Animation API ✓ Dependency Service ✓ Messaging Center. Pages: Content, MasterDetail

Microsoft Mobility and Security for Enterprise Architects

Microsoft Mobility and Security for Enterprise Architects: What IT architects need to know about mobility with Microsoft cloud services and platforms. This topic is 1 of 4 in a series. Enable productivity and collaboration from anywhere while protecting data and applications. Microsoft provides broad support for mobile productivity with support for applications and rich features for controlling access to your organization's assets. Microsoft mobile apps for business: Microsoft produces a number of business class mobile apps for productivity. See page two for featured applications. With an Office 365 for business subscription, you get mobile apps that help you get more done from your favorite device while keeping your data secured. Use Intune Mobile Application Management to apply additional security when using these mobile applications. Skype for Business, Yammer, OneNote, and more apps for business are also available. Get the full list of apps and learn how to set them up on your devices. Dynamics CRM also includes apps for phones and tablets. Developing your own mobile apps: Use the Mobile Apps feature of Azure App Service to build engaging iOS, Android, and Windows apps or cross-platform Xamarin or Cordova (Phonegap) apps for your customers and business. Broadcast push with customer segmentation. Enterprise single sign-on with Active Directory. Autoscale to support millions of devices. Apps can work offline and sync. Social integration with Facebook, Twitter, Google. Cloud App Security for SaaS apps: Microsoft Cloud App Security is a comprehensive service that provides deeper visibility, comprehensive controls, and improved protection for your cloud applications. Mobile access to on-prem applications: Microsoft Azure Active Directory Application Proxy lets you publish applications, such as web-based apps inside your private network and

Ios, Android, Windows Phone Xamarin, Xamarin.Forms Mvvmcross, Mvvm Light …

iOS, Android, Windows Phone. Xamarin, Xamarin.Forms. MvvmCross, Mvvm Light … Training – Audit – Consulting – Development. XAML (Windows Store, WPF, Silverlight, Windows Phone), C#. Cross-platform Windows / Android / iOS. UX Design. ALLDOT.BLOG, Volume 5, 3rd edition: Cross-Platform Development. All of Dot.Blog by theme, in the form of free PDF books! Reproduction, use, and distribution prohibited without the permission of the author. Olivier Dahan [email protected] Table of contents: Introduction. Presentation of the 2015 edition. 2015 edition. Mobile devices: focusing your development correctly (A complete infographic; The tablet / PC war; OS market shares; Mobile devices and the French; Mobile devices and the Web; Conclusion)

Release 1.13 Kal Ahmed, Graham Moore

BrightstarDB Documentation, Release 1.13. Kal Ahmed, Graham Moore. January 08, 2016. Contents: 1 Getting Started (1.1 Architect; 1.2 Data; 1.3 Developer). 2 Concepts (2.1 Architecture; 2.2 Data Model; 2.3 Storage Features; 2.4 Client APIs; 2.5 Supported RDF Syntaxes). 3 Why BrightstarDB? (3.1 An Associative Model; 3.2 Schema-less Data Store; 3.3 A Semantic Data Model; 3.4 Automatic Data caching; 3.5 Full Historical Capabilities; 3.6 Developer Friendly Toolset; 3.7 Native .NET Semantic Web Database; 3.8 RDF is great for powering Object Oriented solutions). 4 Developing With BrightstarDB. 5 Developer Quick Start (5.1 Create New Project; 5.2 Create the Model; 5.3 Generating the Context and Classes; 5.4 Using the Context)

ASP.Net Web API

ASP.Net Web API. [email protected] What is a Web API? A Web API is an API implemented by the server that returns only data, not a view, to the client. The request and the response use the HTTP/HTTPS protocol. A Web API can have many kinds of clients: a desktop application, a mobile app, or a web client. ASP.Net MVC can also be used to return data to the client, but Web API is better suited and more convenient. Examples of Web APIs. Well-known examples: https://jsonplaceholder.typicode.com/ ● https://openweathermap.org/api ● https://developers.google.com/maps/ ● https://developers.facebook.com/docs/graph-api ● and many more. ASP.Net Web API: Microsoft lets us write a server that implements a Web API so that clients can call it and receive information. The framework that enables this is called ASP.Net Web API. It is very similar to ASP.Net MVC. The main difference is that ASP.Net MVC can return either a View or Data, whereas ASP.Net Web API can return only Data. What is REST? REST stands for Representational State Transfer. REST is an architectural pattern for creating APIs that uses HTTP for communication. REST was invented in 2000. Most sites that expose a Web API use REST. Example of using REST for our blog site (resource, HTTP method, expected output, response code): /api/blogs, GET, return the list of all blogs, 200/OK; /api/blogs, POST, create a new blog, 201/Created; /api/blogs/73, PUT, update the existing blog whose id is 73, 204/No Content or 200/OK; /api/blogs/73, DELETE, delete the existing blog whose id is 73, 204/No Content or 200/OK; /api/blogs/73, GET, return the blog whose id is 73, 200/OK. This table can be used to implement CRUD. The api prefix comes from routing, with a controller named BlogsController. The data sent with PUT/POST can be passed as a query string appended to the URL or in the body of the request. When the id is not found, return 404/Not Found. Using ASP.Net Web API: we add an api folder under the Controllers folder of an existing ASP.Net MVC project.
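The REST resource table above for api/blogs maps each HTTP method to an outcome and a response code. As a minimal, framework-agnostic sketch of that mapping, the dispatch logic could look like the following Python. This is not ASP.Net Web API code: the `handle` function, the in-memory `blogs` store, the sample record, and the 405 fallback for unsupported methods are all assumptions added for illustration.

```python
from http import HTTPStatus

# Hypothetical in-memory store standing in for a real database.
blogs = {73: {"id": 73, "title": "First blog"}}


def handle(method, path, body=None):
    """Dispatch a request under /api/blogs and return (status, payload)."""
    parts = path.strip("/").split("/")
    if parts[:2] != ["api", "blogs"] or len(parts) > 3:
        return HTTPStatus.NOT_FOUND, None

    if len(parts) == 2:  # collection resource: /api/blogs
        if method == "GET":
            return HTTPStatus.OK, list(blogs.values())
        if method == "POST":
            new_id = max(blogs, default=0) + 1
            blogs[new_id] = {**(body or {}), "id": new_id}
            return HTTPStatus.CREATED, blogs[new_id]
        return HTTPStatus.METHOD_NOT_ALLOWED, None  # assumption, not in the table

    # single resource: /api/blogs/{id}
    if not parts[2].isdigit() or int(parts[2]) not in blogs:
        return HTTPStatus.NOT_FOUND, None  # unknown id -> 404
    blog_id = int(parts[2])
    if method == "GET":
        return HTTPStatus.OK, blogs[blog_id]
    if method == "PUT":
        blogs[blog_id] = {**(body or {}), "id": blog_id}
        return HTTPStatus.NO_CONTENT, None
    if method == "DELETE":
        del blogs[blog_id]
        return HTTPStatus.NO_CONTENT, None
    return HTTPStatus.METHOD_NOT_ALLOWED, None  # assumption, not in the table
```

For example, `handle("GET", "/api/blogs/73")` returns the stored record with `HTTPStatus.OK`, while the same call for an id that is not in the store returns `HTTPStatus.NOT_FOUND`. PUT and DELETE use 204/No Content here; the table allows 200/OK as an alternative.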

Scalability in Web Apis

Worcester Polytechnic Institute. Scalability in Web APIs. Ryan Baker, Mike Perrone. Advised by: George T. Heineman. Contents: 1 Introduction 2 Background 2.1 Problem Statement 2.2 Game Services and Tools 2.2.1 Graphics Engine 2.2.2 Map Editor 2.2.3 Friend Network 2.2.4 Achievements 2.2.5 Leaderboards 2.3 Our Service Definition 2.3.1 Leaderboards 2.4 Service Requirements 2.4.1 Administrative Ease 2.4.2 Security 2.4.3 Scalability 2.5 Internal Service Decisions 2.5.1 Application Framework 2.5.2 Cloud Computing 3 Methodology 3.1 Decisions of Design and Architecture 3.1.1 Leaderboards 3.1.2 API Documentation 3.1.3 Developer Console 3.1.4 Admin Console 3.1.5 Java Client Package 3.1.6 Logging 3.2 Decisions of Implementation 3.2.1 Enterprise vs Public 3.2.2 Front End Implementation 3.2.3 Cloud Computing Provider (AWS) 3.2.4 Web Application Framework Implementation (Flask) 3.2.5 Continuous Integration Service 3.2.6 API 3.2.7 Logging 3.2.8 Database Schema 4 Success Metrics 4.1 Resiliency 4.1.1 Simulated Traffic 4.1.2 Load Testing and Scalability 4.2 Design 4.2.1 Client Perspective 4.2.3 Admin Perspective 5 Conclusions & Future Work 5.1 Client Conclusions 5.2 Administrator Conclusions 5.3 The Future 6 References 7 Appendix A Why we chose Leaderboards B Facebook’s Game Development API C Playtomic’s API D Front End Tooling Decision E API Documentation Tool F Elastic Beanstalk. 1 Introduction: Game developers, especially those that make social games, undertake a large amount of work to create them.

Data Services

Data Services. Michael J. Carey, University of California, Irvine, 2091 Donald Bren Hall (DBH), Irvine, CA 92697-3435, [email protected] Nicola Onose, University of California, Irvine, 2091 Donald Bren Hall (DBH), Irvine, CA 92697-3435, [email protected] Michalis Petropoulos, University of California, San Diego, 9500 Gilman Drive, San Diego, CA 92093-0114, [email protected] ABSTRACT: Data services provide access to data drawn from one or more underlying information sources. Compared to traditional services, data services are model-based, providing richer and more data-centric views of the data they serve; they often provide both query-based and function-based access to data. In the enterprise world, data services play an important role in SOA architectures. They are also important when an enterprise wishes to controllably share data with its business partners via the Internet. In the emerging world of software-as-a-service (SaaS), data services enable enterprises to access their own externally stored information. [...] any schema information, the consumer is not sure if he/she can compose these two services to retrieve the orders of customers. Moreover, consumers of data services can utilize a query language and pass a query expression as a parameter to a data service; they can use metadata-guided knowledge of data services and their relationships to formulate queries and navigate between sets of entities. Modern data services are descendants of the stored procedure facilities provided by relational database systems, which allow a set of SQL statements and control logic to be parameterized, named, access-controlled, and then called

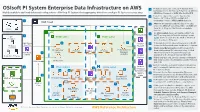

Osisoft PI System Enterprise Data Infrastructure On

OSIsoft PI System Enterprise Data Infrastructure on AWS. Highly available and load-balanced configuration of Roll-up PI System that aggregates data from multiple PI Systems across sites. (1) PI System Connector connects PI System from individual sites to the Roll-up PI System on AWS via AWS Direct Connect or AWS Site-to-Site VPN. (2) Data from edge devices can be ingested via Modbus, OPC UA or MQTT into AWS IoT Greengrass or with an AWS Lambda function. (3) AWS IoT Greengrass sends the data from edge devices to AWS IoT Core using MQTT. An AWS IoT Core rule is triggered that invokes a Lambda function. (4) An AWS Lambda function translates data from the MQTT message into OSIsoft Messaging Format (OMF) and then sends it to the PI Web API, which posts the data to PI System. (5) PI System data from on-premises is sent to the PI Connector Relay, which posts the data to PI System. (6) Elastic Load Balancing directs PI System traffic from the public subnet (PI Connector Relay, PI Web API, PI Vision and PI Integrator for Business Analytics) to the PI Asset Framework (PI AF). (7) Highly available SQL Servers running on Amazon EC2 instances store the metadata used by PI Vision and PI AF. [Architecture diagram labels: AWS Cloud, Users, Availability Zones, VPC, Public subnets, On-Premises, PI System Connector, PI Interface, PI Server, AWS Site-to-Site VPN, AWS Direct Connect, Elastic Load Balancing, PI Connector Relay, PI Web API, PI Vision, PI Integrator for Business Analytics, AWS Lambda, Amazon S3, Amazon Redshift, Amazon Kinesis]

Introducing Xuni & Xamarin.Forms

INTRODUCING XUNI & XAMARIN.FORMS. Hosted by Greg Lutz, Kelley Ricker & Ross Dederer. OVERVIEW • Brief background on mobile development • Introduction to Xamarin.Forms • Introduction to Xuni • Hands-on building a mobile app with Xamarin.Forms • Adding data visualization controls to an app using Xuni • Questions & Survey. This webcast will be recorded and available to watch again at any time. MOBILE DEVELOPMENT • Consumers and businesses alike are all going mobile • Bring Your Own Device (BYOD) is a common practice for businesses whose enterprise apps work on a variety of different devices • For mobile app development we have two choices, each with pros and cons: Web and Native. MOBILE WEB APPS • Mobile Web sites or Web Apps made to look and feel native (Hybrid Apps) • Tools like PhoneGap and Ionic help produce Hybrid apps • Written with JavaScript and HTML on client-side • Reach ~100% of mobile users because all smart phones can display a web page. NATIVE APPS • Native apps are built using OS-specific programming languages and tools (platform: programming language, popular tools): Android: Java, Eclipse; iOS: Objective-C, Xcode; Windows Phone: C#, Visual Studio • Reach ~99% of users. WEB VS NATIVE: Web: pro, write once (HTML5/JavaScript); con, not truly native. Native: pro, better user experience; con, write 3 times (Android, iOS, Windows Phone). What if we could have the best of both worlds: write once and get the best user experience? XAMARIN.FORMS: Xamarin.Forms is a cross-platform natively backed UI toolkit abstraction that allows developers to easily create user interfaces that can be shared across Android, iOS, and Windows Phone.
[Diagram labels: Xamarin.Forms; Xamarin.iOS; Xamarin.Android; Windows Phone; iOS; Android] XAMARIN.FORMS EXAMPLE: TABBED PAGE, Pivot. XAMARIN.FORMS VIEWS (CONTROLS) • ActivityIndicator • BoxView • Button • DatePicker • Editor • Entry • Image • Label • ListView • Picker • ProgressBar • SearchBar • Slider • Stepper • Switch • TableView • TimePicker • WebView. XAMARIN COMPONENT STORE http://components.xamarin.com/ XUNI • Xuni is a collection of native, cross-platform controls designed for Xamarin.Forms • Like Xamarin.Forms,