The Rise of Reactive and Interactive Video Game Audio

Recommended publications

1. Introduction

Latest Gaming Console

1. INTRODUCTION

Gaming consoles are among the best digital entertainment media now available. They were designed for the sole purpose of playing electronic games. A gaming console is a highly specialised piece of hardware that has evolved rapidly since its inception, incorporating the latest advancements in processor technology, memory, graphics, and sound, among others, to give the gamer the ultimate gaming experience. In PC gaming, the word "console" can also mean a command-line interface in which a game's settings and variables can be edited while the game is running. A gaming console, by contrast, is an interactive entertainment computer or electronic device that produces a video display signal which can be used with a display device to show a video game. The term "video game console" distinguishes a machine designed for consumers to buy and use solely for playing video games from a personal computer, which has many other functions, and from arcade machines, which are designed for businesses that buy them and then charge others to play.

1.1. Why are games so popular?

The answer to this question is to be found in real life. Essentially, most people spend much of their time playing games of one kind or another, such as making it through traffic lights before they turn red, attempting to catch the train or bus before it leaves, completing the crossword, or answering the questions correctly on Who Wants To Be A Millionaire before the contestants do. Office politics forms a continuous, real-life strategy game which many people play, whether they want to or not, with player-definable goals such as "increase salary to next level", "become the boss", "score points off a rival colleague and beat them to that promotion" or "get a better job elsewhere".

THIEF PS4 Manual

See important health and safety warnings in the system Settings menu.

GETTING STARTED: PlayStation®4 system

Starting a game: Before use, carefully read the instructions supplied with the PS4™ computer entertainment system. The documentation contains information on setting up and using your system as well as important safety information. Touch the (power) button of the PS4™ system to turn the system on. The power indicator blinks in blue, and then lights up in white. Insert the Thief disc with the label facing up into the disc slot. The game appears in the content area of the home screen. Select the software title in the PS4™ system's home screen, and then press the S button. Refer to this manual for information on using the software.

Quitting a game: Press and hold the p button, and then select [Close Application] on the screen that is displayed.

Returning to the home screen from a game: To return to the home screen without quitting a game, press the p button. To resume playing the game, select it from the content area.

Removing a disc: Touch the (eject) button after quitting the game.

Trophies: Earn, compare and share trophies that you earn by making specific in-game accomplishments. Trophies access requires a Sony Entertainment Network account.

Default controls:

• Xxxxxx xxxxxx: left stick
• Xxxxxx xxxxxx: R button
• Xxxxxx xxxxxx: E button
• Xxxxxx xxxxxx: W button
• Xxxxxx xxxxxx: K button
• Xxxxxx xxxxxx: right stick
• Xxxxxx xxxxxx: N button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): H button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): J button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Q button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Click touchpad

Video Games: Changing the Way We Think of Home Entertainment

Rochester Institute of Technology, RIT Scholar Works, Theses, 2005

Video games: Changing the way we think of home entertainment, by Eri Shulga

Follow this and additional works at: https://scholarworks.rit.edu/theses

Recommended Citation: Shulga, Eri, "Video games: Changing the way we think of home entertainment" (2005). Thesis. Rochester Institute of Technology. Accessed from

This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected].

Video Games: Changing The Way We Think Of Home Entertainment, by Eri Shulga. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Information Technology, Rochester Institute of Technology, B. Thomas Golisano College of Computing and Information Sciences. Copyright 2005.

Thesis Approval Form. Student Name: Eri Shulga. Thesis Title: Video Games: Changing the Way We Think of Home Entertainment. Thesis Committee: Evelyn Rozanski, Ph.D. (Chair); Prof. Andy Phelps (Committee Member); Anne Haake, Ph.D. (Committee Member).

Thesis Reproduction Permission Form. I, Eri Shulga, hereby grant permission to the Wallace Library of the Rochester Institute of Technology to reproduce my thesis in whole or in part.

A Neuroevolution Approach to Imitating Human-Like Play in Ms

A Neuroevolution Approach to Imitating Human-Like Play in Ms. Pac-Man Video Game

Maximiliano Miranda, Antonio A. Sánchez-Ruiz, and Federico Peinado. Departamento de Ingeniería del Software e Inteligencia Artificial, Universidad Complutense de Madrid, c/ Profesor José García Santesmases 9, 28040 Madrid (Spain). [email protected] - [email protected] - [email protected] http://www.narratech.com

Abstract. Simulating human behaviour when playing computer games has been recently proposed as a challenge for researchers on Artificial Intelligence. As part of our exploration of approaches that perform well both in terms of the instrumental similarity measure and the phenomenological evaluation by human spectators, we are developing virtual players using Neuroevolution. This is a form of Machine Learning that employs Evolutionary Algorithms to train Artificial Neural Networks by considering the weights of these networks as chromosomes for the application of genetic algorithms. Results strongly depend on the fitness function that is used, which tries to characterise the human-likeness of a machine-controlled player. Designing this function is complex and it must be implemented for specific games. In this work we use the classic game Ms. Pac-Man as a scenario for comparing two different methodologies. The first one uses raw data extracted directly from human traces, i.e. the set of movements executed by a real player and their corresponding time stamps. The second methodology adds more elaborate game-level parameters such as the final score, the average distance to the closest ghost, and the number of changes in the player's route. We assess the importance of these features for imitating human-like play, aiming to obtain findings that would be useful for many other games.
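The idea described in the abstract above, treating network weights as chromosomes and scoring them against human traces, can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the single-layer network, the three-feature game state, the toy trace, the fitness definition (fraction of recorded moves reproduced) and the genetic-algorithm settings are all hypothetical.

```python
import random

MOVES = ["up", "down", "left", "right"]
N_INPUTS = 3  # hypothetical number of game-state features per time step


def decide(weights, state):
    """Single-layer network: one weight row per move, pick the best score."""
    scores = []
    for m in range(len(MOVES)):
        row = weights[m * N_INPUTS:(m + 1) * N_INPUTS]
        scores.append(sum(w * x for w, x in zip(row, state)))
    return MOVES[scores.index(max(scores))]


def fitness(weights, trace):
    """Assumed fitness: fraction of recorded human moves that are reproduced."""
    hits = sum(1 for state, move in trace if decide(weights, state) == move)
    return hits / len(trace)


def evolve(trace, pop_size=30, generations=40, sigma=0.3, seed=0):
    """Evolve weight vectors (the chromosomes) with a simple genetic algorithm."""
    rng = random.Random(seed)
    n_genes = len(MOVES) * N_INPUTS
    population = [[rng.uniform(-1, 1) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        population.sort(key=lambda w: fitness(w, trace), reverse=True)
        history.append(fitness(population[0], trace))
        parents = population[:pop_size // 2]
        children = [population[0]]  # elitism: carry the best over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_genes)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Gaussian mutation applied to roughly 10% of the genes.
            child = [g + rng.gauss(0, sigma) if rng.random() < 0.1 else g
                     for g in child]
            children.append(child)
        population = children
    best = max(population, key=lambda w: fitness(w, trace))
    return best, history


# Synthetic toy trace (invented for this example): (state, human move) pairs.
toy_trace = [
    ((1.0, 0.0, 0.0), "up"),
    ((0.0, 1.0, 0.0), "left"),
    ((0.0, 0.0, 1.0), "right"),
    ((1.0, 1.0, 0.0), "up"),
]

best, history = evolve(toy_trace)
print("best fitness:", fitness(best, toy_trace))
```

Because the best individual is copied unchanged into each new generation, the best fitness never decreases from one generation to the next. A fitness built on game-level parameters, as in the second methodology the abstract mentions, would replace only the `fitness` function.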

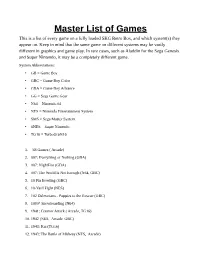

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:

• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (Arcade, TG16)
10. 1942 (NES, Arcade, GBC)
11. 1943: Kai (TG16)
12. 1943: The Battle of Midway (NES, Arcade)
13. 1944: The Loop Master (Arcade)
14. 1999: Hore, Mitakotoka! Seikimatsu (NES)
15. 19XX: The War Against Destiny (Arcade)
16. 2 on 2 Open Ice Challenge (Arcade)
17. 2010: The Graphic Action Game (Colecovision)
18. 2020 Super Baseball (Arcade, SNES)
19. 21-Emon (TG16)
20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
21. 3 Count Bout (Arcade)
22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
23. 3-D Tic-Tac-Toe (Atari 2600)
24. 3-D Ultra Pinball: Thrillride (GBC)
25. 3-D WorldRunner (NES)
26. 3D Asteroids (Atari 7800)

The Cross Over Talk

Programming Composers and Composing Programmers

Victoria Dorn, Sony Interactive Entertainment

About Me

• Berklee College of Music (2013): Sound Design/Composition
• Oregon State University (2018): Computer Science
• Audio Engineering Intern -> Audio Engineer -> Software Engineer
• Associate Software Engineer in Research and Development at PlayStation
• 3D Audio for PS4 (PlayStation VR, Platinum Wireless Headset)
• Testing, general research, recording, and developer support

Agenda

• Programming tips/tricks for the audio person
• Audio and sound tips/tricks for the programming person
• Creating a dialog and establishing vocabulary
• Raise the level of common understanding between sound people and programmers
• Q&A

Media Files Used in This Presentation

• Can be found here
• https://drive.google.com/drive/folders/1FdHR4e3R4p59t7ZxAU7pyMkCdaxPqbKl?usp=sharing

Programming Tips for the Audio Folks

"Binary Code" by Cncplayer is licensed under CC BY-SA 3.0

Music/Audio Programming

• DAWs = Programming Language(s)
• Musical Motives = Programming Logic
• Instruments = APIs or Libraries

Where to Start??

• Learning the Language
• Pseudocode
• Scripting

Learning the Language

• Programming Fundamentals
• Variables (a value with a name): soundVolume = 10
• Loops (works just like looping a sound actually): for (loopCount = 0; while loopCount < 10; increase loopCount by 1){ play audio file one time }
• If/else logic (if this is happening do this, else do something different): if (the sky is blue){ play bird sounds } else{ play rain sounds
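The three fundamentals named in the slides (a variable, a loop, and if/else logic) can be written as runnable Python. This is a minimal sketch and not code from the talk: `play` is a hypothetical stand-in that only records which sound would be played, and the sound names and the `sky_is_blue` flag are assumptions chosen to mirror the pseudocode.

```python
# Hypothetical stand-in for an audio engine call: it only logs the sound name.
played = []


def play(sound_name):
    """Record that a sound would be played (no real audio output)."""
    played.append(sound_name)


# Variable: a value with a name.
sound_volume = 10

# Loop: repeat the same action ten times, much like looping a sound.
for loop_count in range(10):
    play("audio_file")

# If/else: choose between two actions depending on a condition.
sky_is_blue = True  # assumed condition for this example
if sky_is_blue:
    play("bird_sounds")
else:
    play("rain_sounds")

print(len(played), played[-1])
```

Swapping the body of `play` for a call into a real audio library would leave the loop and the branch unchanged, which is the point the slides make about learning the language first.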

The Adam of Videogames. From Invention to Authorship through the Analysis of Primordial Games

The Adam of Videogames. From Invention to Authorship through the Analysis of Primordial Games

Marco Benôit Carbone

This paper approaches the issue of the 'invention' of video games (that is, the birth of a text that would mark the advent of a new medium) from a semiotic perspective, through the analysis of early games such as Spacewar! (Steve Russell et al., 1962), OXO (A. S. Douglas, 1952) and Tennis for Two (William Higinbotham, 1958). Research in this particular field may help to shed more light on much-debated issues about their nature as a medium, their characteristics as semiotic texts, and the role of the empirical authors in their relations with the semiotic processes of enunciation. In addition, this may improve our comprehension of the role of semiotics in the study of video games, as well as about just how much we should believe in a real need for new disciplines that are willing to focus exclusively on this medium. Inventorship will ultimately imply a reflection about the role authors play in video games, a crucial issue today for the definition of the artistic, expressive and cultural status of games. We shall focus on 'primordial' interactive texts that game historians and scholars have considered so far as the "first video games" ever. However, nostalgia doesn't play a role in this decision, nor is historical interest in such titles the only reason for choosing them.

1. OXO, Tennis for Two, Spacewar!: game texts as acts of bricolage

For years, the "very first video game" has been the label

Regulating Violence in Video Games: Virtually Everything

Journal of the National Association of Administrative Law Judiciary, Volume 31, Issue 1, Article 7, 3-15-2011

Regulating Violence in Video Games: Virtually Everything, by Alan Wilcox

Follow this and additional works at: https://digitalcommons.pepperdine.edu/naalj

Part of the Administrative Law Commons, Comparative and Foreign Law Commons, and the Entertainment, Arts, and Sports Law Commons

Recommended Citation: Alan Wilcox, Regulating Violence in Video Games: Virtually Everything, 31 J. Nat’l Ass’n Admin. L. Judiciary Iss. 1 (2011). Available at: https://digitalcommons.pepperdine.edu/naalj/vol31/iss1/7

This Comment is brought to you for free and open access by the Caruso School of Law at Pepperdine Digital Commons. It has been accepted for inclusion in Journal of the National Association of Administrative Law Judiciary by an authorized editor of Pepperdine Digital Commons. For more information, please contact [email protected], [email protected], [email protected].

TABLE OF CONTENTS

I. INTRODUCTION
II. PAST AND CURRENT RESTRICTIONS ON VIOLENCE IN VIDEO GAMES
A. The Origins of Video Game Regulation
B. The ESRB
III. RESTRICTIONS IMPOSED IN OTHER COUNTRIES
A. The European Union
1. PEGI
2. The United

Abstract the Goal of This Project Is Primarily to Establish a Collection of Video Games Developed by Companies Based Here In

Abstract

The goal of this project is primarily to establish a collection of video games developed by companies based here in Massachusetts. In preparation for a proposal to the companies, information was collected from each company concerning how, when, where, and why it was founded. A proposal was then written and submitted to each company requesting copies of its games. Both students and staff will be able to use this special collection as a tool for the IMGD program.

1 Introduction

WPI has established relationships with Massachusetts game companies since the Interactive Media and Game Development (IMGD) program’s beginning in 2005. With the growing popularity of game development, and the ever-increasing number of companies, it is difficult to establish and maintain solid relationships with each and every company. As part of this project, new relationships will be founded with a number of greater-Boston area companies in order to establish a repository of local video games. This project will not only bolster any previous relationships with companies, but establish new ones as well. With these donated materials, a special collection will be established at the WPI Library, and will include a number of retail video games. This collection should inspire more people to be interested in the IMGD program here at WPI. Knowing that there are many opportunities locally for graduates is an important part of deciding one’s major. I knew I wanted to do something with the library for this IQP, but I was not sure exactly what I wanted when I first went to establish a project.

Analyzing Modular Smoothness in Video Game Music

Elizabeth Medina-Gray

KEYWORDS: video game music, modular music, smoothness, probability, The Legend of Zelda: The Wind Waker, Portal 2

ABSTRACT: This article provides a detailed method for analyzing smoothness in the seams between musical modules in video games. Video game music is comprised of distinct modules that are triggered during gameplay to yield the real-time soundtracks that accompany players’ diverse experiences with a given game. Smoothness (a quality in which two convergent musical modules fit well together) and the opposite quality, disjunction, are integral products of modularity, and each quality can significantly contribute to a game. This article first highlights some modular music structures that are common in video games, and outlines the importance of smoothness and disjunction in video game music. Drawing from sources in the music theory, music perception, and video game music literature (including both scholarship and practical composition guides), this article then introduces a method for analyzing smoothness at modular seams through a particular focus on the musical aspects of meter, timbre, pitch, volume, and abruptness. The method allows for a comprehensive examination of smoothness in both sequential and simultaneous situations, and it includes a probabilistic approach so that an analyst can treat all of the many possible seams that might arise from a single modular system. Select analytical examples from The Legend of Zelda: The Wind Waker and Portal

Finding Aid to the Atari Coin-Op Division Corporate Records, 1969-2002

Brian Sutton-Smith Library and Archives of Play

Finding Aid to the Atari Coin-Op Division Corporate Records, 1969-2002

Summary Information

• Title: Atari Coin-Op Division corporate records
• Creator: Atari, Inc. coin-operated games division (primary)
• ID: 114.6238
• Date: 1969-2002 (inclusive); 1974-1998 (bulk)
• Extent: 600 linear feet (physical); 18.8 GB (digital)
• Language: The materials in this collection are primarily in English, although there are a few instances of Japanese.
• Abstract: The Atari Coin-Op records comprise 600 linear feet of game design documents, memos, focus group reports, market research reports, marketing materials, arcade cabinet drawings, schematics, artwork, photographs, videos, and publication material. Much of the material is oversized.
• Repository: Brian Sutton-Smith Library and Archives of Play at The Strong, One Manhattan Square, Rochester, New York 14607, 585.263.2700, [email protected]

Administrative Information

• Conditions Governing Use: This collection is open for research use by staff of The Strong and by users of its library and archives. Though intellectual property rights (including, but not limited to any copyright, trademark, and associated rights therein) have not been transferred, The Strong has permission to make copies in all media for museum, educational, and research purposes.
• Conditions Governing Access: At this time, audiovisual and digital files in this collection are limited to on-site researchers only. It is possible that certain formats may be inaccessible or restricted.
• Custodial History: The Atari Coin-Op Division corporate records were acquired by The Strong in June 2014 from Scott Evans. The records were accessioned by The Strong under Object ID 114.6238.

RUSQ Vol. 58, No. 4

THE ALERT COLLECTOR. Mark Shores, Editor

Game On to Game After: Sources for Video Game History

Kristen J. Nyitray

Kristen J. Nyitray is Director of Special Collections and University Archives, and University Archivist at Stony Brook University, State University of New York.

Kristen Nyitray began her immersion in video games with an Atari 2600 and ColecoVision console and checking out games from her local public library. Later in life, she had the opportunity to start building a video game studies collection in her professional career as an archivist and special collections librarian. While that project has since ended, you get the benefit of her expansive knowledge of video game sources in “Game On to Game After: Sources for Video Game History.” There is much in this column to help librarians wanting to support research in this important entertainment form. Ready player one? (Editor)

Video games have emerged as a ubiquitous and dominant form of entertainment as evidenced by statistics compiled in the United States and published by the Entertainment Software Association: 60 percent of Americans play video and/or computer games daily; 70 percent of gamers are 18 and older; the average age of a player is 34; adult women constitute 33 percent of players; and sales in the United States were estimated in 2017 at $36 billion. What constitutes a video game? This seemingly simple question has spurred much technical and philosophical debate. To this point, in 2010 I founded with Raiford Guins (professor of cinema and media studies, the Media School, Indiana University) the William A. Higinbotham Game Studies Collection (2010–2016), named in honor of physicist Higinbotham, developer of the analog computer game Tennis for Two (as it is most commonly known). This game