CS2110 Lecture 29 Mar. 31, 2021

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Coursenotes 4 Non-Adaptive Sorting Batcher's Algorithm

4. Non-Adaptive Sorting: Batcher’s Algorithm. 4.1 Introduction to Batcher’s Algorithm. Sorting has many important applications in daily life and, in particular, in computer science. Within computer science several sorting algorithms exist, such as “bubble sort,” “shell sort,” “comb sort,” “heap sort,” “bucket sort,” “merge sort,” etc. We have already encountered these in section 2 of the course notes. Many different sorting algorithms exist and they are implemented differently in each programming language. These sorting algorithms are relevant because they are used in a great many of the computer programs you run, ranging from the unimportant computer solitaire you play to the online checking account you own. Indeed, without sorting algorithms, many of the services we enjoy today would simply not be available. Batcher’s algorithm is a way to sort individual disorganized elements called “keys” into some desired order using a set number of comparisons. The keys that will be sorted for our purposes will be numbers, and the order that we will want them to be in will be from greatest to least or vice versa (whichever way you want it). The Batcher algorithm is non-adaptive in that it takes a fixed set of comparisons in order to sort the unsorted keys. In other words, the sequence of comparisons does not change during the sorting. Unlike other methods of sorting where you need to remember the outcomes of earlier comparisons (tournament sort), Batcher’s algorithm requires no such bookkeeping. Non-adaptive sorting is easy because outcomes of comparisons made at one point of the process do not affect which comparisons will be made in the future.
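To make the "fixed set of comparisons" idea concrete, here is a minimal Python sketch of Batcher's odd-even merge sort written as a comparator network. It assumes the number of keys is a power of two, and the names `batcher_network` and `apply_network` are illustrative rather than taken from the course notes. The list of index pairs depends only on `n`, never on the key values, which is what makes the method non-adaptive.

```python
def batcher_network(n):
    """Yield the fixed (i, j) comparator pairs for n keys (n a power of two)."""
    p = 1
    while p < n:
        k = p
        while k >= 1:
            for j in range(k % p, n - k, 2 * k):
                for i in range(min(k, n - j - k)):
                    # Only compare positions that lie in the same 2p-wide block.
                    if (i + j) // (2 * p) == (i + j + k) // (2 * p):
                        yield (i + j, i + j + k)
            k //= 2
        p *= 2


def apply_network(keys, network):
    """Run the comparators in order; each one swaps a pair if it is out of order."""
    out = list(keys)
    for i, j in network:
        if out[i] > out[j]:
            out[i], out[j] = out[j], out[i]
    return out


if __name__ == "__main__":
    # The comparator list is the same for every input of length 8.
    print(list(batcher_network(8)))
    print(apply_network([5, 2, 7, 1, 8, 3, 6, 4], batcher_network(8)))
```

Because the comparisons are fixed in advance, the same network can be checked once on all 0/1 inputs and then reused for any keys of that length.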

An Evolutionary Approach for Sorting Algorithms

ORIENTAL JOURNAL OF COMPUTER SCIENCE & TECHNOLOGY, ISSN: 0974-6471, December 2014, Vol. 7, No. (3): Pgs. 369-376. An International Open Free Access, Peer Reviewed Research Journal. Published By: Oriental Scientific Publishing Co., India. www.computerscijournal.org. Root to Fruit (2): An Evolutionary Approach for Sorting Algorithms. Pramod Kadam and Sachin Kadam, BVDU, IMED, Pune, India. (Received: November 10, 2014; Accepted: December 20, 2014)

Abstract: This paper continues the earlier thought of evolutionary study of sorting problem and sorting algorithms (Root to Fruit (1): An Evolutionary Study of Sorting Problem) [1] and concluded with the chronological list of early pioneers of sorting problem or algorithms. Later in the study graphical method has been used to present an evolution of sorting problem and sorting algorithm on the time line. Key words: Evolutionary study of sorting, History of sorting, Early Sorting algorithms, list of inventors for sorting.

Introduction: In spite of plentiful literature and research in sorting algorithmic domain there is mess found in documentation as far as credential concern [2]. Perhaps this problem found due to lack of coordination and unavailability of common platform or knowledge base in the same domain. Evolutionary study of sorting algorithm or sorting problem is foundation of futuristic knowledge base for sorting problem domain [1].

name and their contribution may skipped from the study. Therefore readers have all the rights to extent this study with the valid proofs. Ultimately our objective behind this research is very much clear, that to provide strength to the evolutionary study of sorting algorithms and shift towards a good knowledge base to preserve work of our forebear for upcoming generation. Otherwise coming generation could receive hardly information about sorting problems and syllabi may restrict with some

Investigating the Effect of Implementation Languages and Large Problem Sizes on the Tractability and Efficiency of Sorting Algorithms

International Journal of Engineering Research and Technology. ISSN 0974-3154, Volume 12, Number 2 (2019), pp. 196-203 © International Research Publication House. http://www.irphouse.com Investigating the Effect of Implementation Languages and Large Problem Sizes on the Tractability and Efficiency of Sorting Algorithms. Temitayo Matthew Fagbola and Surendra Colin Thakur, Department of Information Technology, Durban University of Technology, Durban 4000, South Africa. ORCID: 0000-0001-6631-1002 (Temitayo Fagbola)

Abstract: Sorting is a data structure operation involving a re-arrangement of an unordered set of elements with witnessed real life applications for load balancing and energy conservation in distributed, grid and cloud computing environments. However, the rearrangement procedure often used by sorting algorithms differs and significantly impacts on their computational efficiencies and tractability for varying problem sizes. Currently, which combination of sorting algorithm and implementation language is highly tractable and efficient for solving large sized-problems remains an open challenge. In this

[1],[2]. Theoretically, effective sorting of data allows for an efficient and simple searching process to take place. This is particularly important as most merge and search algorithms strictly depend on the correctness and efficiency of sorting algorithms [4]. It is important to note that the rearrangement procedure of each sorting algorithm differs and directly impacts on the execution time and complexity of such algorithm for varying problem sizes and type emanating from real-world situations [5]. For instance, the operational sorting procedures of Merge Sort, Quick Sort and Heap Sort follow a divide-and-conquer approach characterized by key comparison, recursion and binary heap key reordering requirements, respectively [9].

Sorting Partnership Unless You Sign Up! Brian Curless • Homework #5 Will Be Ready After Class, Spring 2008 Due in a Week

CSE 326: Data Structures
Sorting
Brian Curless, Spring 2008

Announcements (5/9/08)
• Project 3 is now assigned.
• Partnerships due by 3pm
– We will not assume you are in a partnership unless you sign up!
• Homework #5 will be ready after class, due in a week.
• Reading for this lecture: Chapter 7.

Sorting
• Input
– an array A of data records
– a key value in each data record
– a comparison function which imposes a consistent ordering on the keys
• Output
– reorganize the elements of A such that
• For any i and j, if i < j then A[i] ≤ A[j]

Consistent Ordering
• The comparison function must provide a consistent ordering on the set of possible keys
– You can compare any two keys and get back an indication of a < b, a > b, or a = b (trichotomy)
– The comparison functions must be consistent
• If compare(a,b) says a<b, then compare(b,a) must say b>a
• If compare(a,b) says a=b, then compare(b,a) must say b=a
• If compare(a,b) says a=b, then equals(a,b) and equals(b,a) must say a=b

Why Sort?
• Allows binary search of an N-element array in O(log N) time
• Allows O(1) time access to kth largest element in the array for any k
• Sorting algorithms are among the most

Space
• How much space does the sorting algorithm require in order to sort the collection of items?
– Is copying needed?
• In-place sorting algorithms: no copying or at most O(1) additional temp space.
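The consistency rules for compare(a,b) listed in these slides can be spot-checked mechanically. The following Python sketch is only an illustration: `is_consistent`, `good_compare`, and `bad_compare` are hypothetical names, and the check covers the pairwise rules (a<b must mirror b>a, and a=b must mirror b=a), not transitivity.

```python
from itertools import product


def sign(x):
    """Reduce a comparator result to -1, 0, or +1."""
    return (x > 0) - (x < 0)


def is_consistent(compare, keys):
    """Check that compare(a, b) and compare(b, a) always mirror each other."""
    for a, b in product(keys, repeat=2):
        if sign(compare(a, b)) != -sign(compare(b, a)):
            return False
    return True


def good_compare(a, b):
    # Negative if a < b, zero if a == b, positive if a > b.
    return (a > b) - (a < b)


def bad_compare(a, b):
    # Never reports equality, so compare(a, a) claims a < a.
    return -1 if a <= b else 1


if __name__ == "__main__":
    print(is_consistent(good_compare, [3, 1, 2]))
    print(is_consistent(bad_compare, [3, 1, 2]))
```

A comparator like `bad_compare` fails because it can never report a = b, so the trichotomy requirement breaks as soon as a key is compared with itself.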

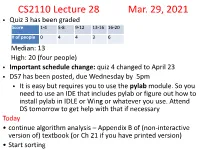

CS2110 Lecture 28 Mar. 29, 2021

• Quiz 3 has been graded
Score: 1-4, 5-8, 9-12, 13-16, 16-20
# of people: 0, 4, 4, 3, 6
Median: 13 High: 20 (four people)
• Important schedule change: quiz 4 changed to April 23
• DS7 has been posted, due Wednesday by 5pm
• It is easy but requires you to use the pylab module. So you need to use an IDE that includes pylab or figure out how to install pylab in IDLE or Wing or whatever you use. Attend DS tomorrow to get help with that if necessary.

Today
• Continue algorithm analysis
– Appendix B of (non-interactive version of) textbook (or Ch 21 if you have printed version)
• Start sorting

Last time discussed “RAM” model used to count steps of program execution. Considering again the 4 + 3n steps for foo(n):

    def foo(n):
        i = 0
        result = 0
        while i <= n:
            result = result + i
            i = i + 1
        return result

• I said that we usually ignore the 4. It turns out we are also usually happy to ignore the leading constant on the n. n is what's important: the number of steps required grows linearly with n.
• Throwing out those constants doesn't always make sense. At "tuning" time or other times, we might want/need to consider the constants. But in big picture comparisons, it's often helpful and valid to simplify things by ignoring them. We’ll look at two more examples before formalizing this throwing-away-stuff approach via Big-O notation.

From last time: when can we search quickly?
• When the input is sorted.
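As a rough illustration of the step-counting argument, the sketch below instruments a runnable variant of foo(n) with a counter. The name `foo_counted` and the counting convention (one step per assignment, per loop test, and per return) are assumptions made here, so the constant term will differ from the slide's depending on what is counted; the point is only that each increase of n by one adds the same fixed number of steps.

```python
def foo_counted(n):
    """Return (sum of 0..n, number of counted steps)."""
    steps = 0
    i = 0
    steps += 1          # assignment to i
    result = 0
    steps += 1          # assignment to result
    while True:
        steps += 1      # loop test: i <= n
        if not (i <= n):
            break
        result = result + i
        steps += 1      # assignment to result
        i = i + 1
        steps += 1      # assignment to i
    steps += 1          # return
    return result, steps


if __name__ == "__main__":
    for n in (10, 100, 1000):
        value, steps = foo_counted(n)
        print(n, value, steps)
```

Comparing the step counts for consecutive values of n shows a constant difference, which is the linear growth that Big-O notation summarizes as O(n).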

Sorting Algorithm 1 Sorting Algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation, or reordering, of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds. Classification: Sorting algorithms used in computer science are often classified by: • Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list (n). For typical sorting algorithms good behavior is O(n log n) and bad behavior is O(n²).
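The two formal conditions on a sorter's output (nondecreasing order, and being a permutation of the input) translate directly into a small checker. This is a minimal Python sketch; the function name `is_valid_sort` is illustrative and not part of the excerpt.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check both conditions: nondecreasing order and permutation of the input."""
    nondecreasing = all(output[i - 1] <= output[i] for i in range(1, len(output)))
    # Equal multisets means the output is a reordering of the input.
    permutation = Counter(original) == Counter(output)
    return nondecreasing and permutation


if __name__ == "__main__":
    data = [3, 1, 2, 3]
    print(is_valid_sort(data, sorted(data)))   # ordered and a permutation
    print(is_valid_sort(data, [1, 2, 3]))      # ordered, but an element is missing
    print(is_valid_sort(data, [3, 1, 2, 3]))   # a permutation, but not ordered
```

Checking the permutation condition matters because an output such as an empty list is trivially in nondecreasing order.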

Visvesvaraya Technological University a Project Report

VISVESVARAYA TECHNOLOGICAL UNIVERSITY, “Jnana Sangama”, Belagavi – 590 018. A PROJECT REPORT ON “PREDICTIVE SCHEDULING OF SORTING ALGORITHMS”. Submitted in partial fulfillment for the award of the degree of BACHELOR OF ENGINEERING IN COMPUTER SCIENCE AND ENGINEERING BY RANJIT KUMAR SHA (1NH13CS092), SANDIP SHAH (1NH13CS101), SAURABH RAI (1NH13CS104), GAURAV KUMAR (1NH13CS718). Under the guidance of Ms. Sridevi (Senior Assistant Professor, Dept. of CSE, NHCE). DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING, NEW HORIZON COLLEGE OF ENGINEERING (ISO-9001:2000 certified, Accredited by NAAC ‘A’, Permanently affiliated to VTU), Outer Ring Road, Panathur Post, Near Marathalli, Bangalore – 560103.

CERTIFICATE: Certified that the project work entitled “PREDICTIVE SCHEDULING OF SORTING ALGORITHMS” carried out by RANJIT KUMAR SHA (1NH13CS092), SANDIP SHAH (1NH13CS101), SAURABH RAI (1NH13CS104) and GAURAV KUMAR (1NH13CS718), bonafide students of NEW HORIZON COLLEGE OF ENGINEERING, in partial fulfillment for the award of Bachelor of Engineering in Computer Science and Engineering of the Visvesvaraya Technological University, Belagavi during the year 2016-2017. It is certified that all corrections/suggestions indicated for Internal Assessment have been incorporated in the report deposited in the department library. The project report has been approved as it satisfies the academic requirements in respect of Project work prescribed for the Degree. Name & Signature of Guide (Ms. Sridevi); Name Signature of HOD (Dr. Prashanth C.S.R.); Signature of Principal (Dr. Manjunatha). External Viva: Name of Examiner, Signature with date 1.

Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms

International Journal of Scientific & Engineering Research Volume 8, Issue 5, May-2017 86 ISSN 2229-5518. Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms. Sapram Choudaiah, P Chandu Chowdary, M Kavitha.

ABSTRACT: Sorting is an important data structure in many real life applications. A number of sorting algorithms are in existence till date. This paper continues the earlier thought of evolutionary study of sorting problem and sorting algorithms concluded with the chronological list of early pioneers of sorting problem or algorithms. Later in the study graphical method has been used to present an evolution of sorting problem and sorting algorithm on the time line. An extensive analysis has been done compared with the traditional mathematical methods of Bubble Sort, Selection Sort, Insertion Sort, Merge Sort, Quick Sort. Observations have been obtained on comparing with the existing approaches of All Sorts. An “Empirical Analysis” consists of rigorous complexity analysis by various sorting algorithms, in which comparison and real swapping of all the variables are calculated. All algorithms were tested on random data of various ranges from small to large. It is an attempt to compare the performance of various sorting algorithms, with the aim of comparing their speed when sorting integer inputs. The empirical data obtained by using the program reveals that Quick sort algorithm is fastest and Bubble sort is slowest. Keywords: Bubble Sort, Insertion sort, Quick Sort, Merge Sort, Selection Sort, Heap Sort, CPU Time.

Introduction: In spite of plentiful literature and research in sorting algorithmic domain there is mess found in documentation as far as credential concern [2].

more dimension to student for thinking [4]. Whereas, this thinking become a mark of respect to all our

Sorting Algorithm 1 Sorting Algorithm

A sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) which require input data to be in sorted lists; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation (reordering) of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2006). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and upper and lower bounds. Classification: Sorting algorithms are often classified by: • Computational complexity (worst, average and best behavior) of element comparisons in terms of the size of the list (n). For typical serial sorting algorithms good behavior is O(n log n), with parallel sort in O(log² n), and bad behavior is O(n²).

FDM SORT: an External and Distributed Sorting Chintha Sivakrishnaiah1*, Puttumbaku.Chittibabu2

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS) Web Site: www.ijettcs.org Email: [email protected] Volume 5, Issue 3, May-June 2016 ISSN 2278-6856. FDM SORT: An External and Distributed Sorting. Chintha SivaKrishnaiah1*, Puttumbaku.ChittiBabu2. 1Asst. Professor, Annmacharya PG College of Computer Studies, Rajampet, Kadapa (District), Andhra Pradesh, India; 2Professor, Annmacharya PG College of Computer Studies, Rajampet, Kadapa (District), Andhra Pradesh, India.

Abstract: Distribution is a competent method for sorting proving by many of them in terms of complexity with little comparisons. In this paper we put forward an approach to sort elements by distribution using Fibonacci Sequence and a small number of arithmetic calculations. It has no comparisons in case of distinctive set of data elements. If elements are repetitive then only comparison exist at the distributed position where repetitive element exists. The sorting technique uses array of four dimensions or sparse matrix or any other possible data structure that hold the distributed element externally. Spectral test prove that the distribution by dimensional approach is a proficient method for unique distributions.

uniformly distributed, then the address calculation sort degenerates into an O(N²) time complexity. Radix sort is one that the digits of each data value are used in the sort. Radix sort for M-sized data element with N elements has a time complexity of O(MN). If the sub data elements become very dense, then M becomes more approximately log N, then the Radix Sort degenerates to a O(N log N) algorithm. So hence, the Radix Sort depends on M much less than N by a sizable ratio. Hash Sort is a general purpose non-comparison based sorting algorithm, which has some features not found in
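The O(MN) cost quoted for radix sort (N keys of M digits each) can be seen in a least-significant-digit implementation: one bucketing pass over all N keys per digit position. The Python sketch below is an illustration only, not the paper's FDM sort; it assumes non-negative integer keys, and `lsd_radix_sort` is a name chosen here.

```python
def lsd_radix_sort(keys, base=10):
    """Sort non-negative integers with one stable bucketing pass per digit."""
    out = list(keys)
    if not out:
        return out
    largest = max(out)
    place = 1
    # One pass per digit of the largest key: M passes over N keys in total.
    while place <= largest:
        buckets = [[] for _ in range(base)]
        for key in out:
            buckets[(key // place) % base].append(key)
        # Concatenating buckets in order keeps each pass stable.
        out = [key for bucket in buckets for key in bucket]
        place *= base
    return out


if __name__ == "__main__":
    print(lsd_radix_sort([170, 45, 75, 90, 802, 24, 2, 66]))
```

No key is ever compared with another key; the only work is extracting digits, which is why the running time depends on the digit count M rather than on log N directly.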

Comparison Sorts Name Best Average Worst Memory Stable Method Other Notes Quicksort Is Usually Done in Place with O(Log N) Stack Space

Comparison sorts

| Name | Best | Average | Worst | Memory | Stable | Method | Other notes |
|---|---|---|---|---|---|---|---|
| Quicksort | | | | on average, worst case is ; Sedgewick variation is worst case | typical in-place sort is not stable; stable versions exist | Partitioning | Quicksort is usually done in place with O(log n) stack space. Most implementations are unstable, as stable in-place partitioning is more complex. Naïve variants use an O(n) space array to store the partition. Quicksort variant using three-way (fat) partitioning takes O(n) comparisons when sorting an array of equal keys. |
| Merge sort | | | | worst case | Yes | Merging | Highly parallelizable (up to O(log n) using the Three Hungarians' Algorithm or, more practically, Cole's parallel merge sort) for processing large amounts of data. |
| In-place merge sort | - | - | | | Yes | Merging | Can be implemented as a stable sort based on stable in-place merging. |
| Heapsort | | | | | No | Selection | |
| Insertion sort | | | | | Yes | Insertion | O(n + d), where d is the number of inversions. |
| Introsort | | | | | No | Partitioning & Selection | Used in several STL implementations. |
| Selection sort | | | | | No | Selection | Stable with O(n) extra space, for example using lists. |
| Timsort | | | | | Yes | Insertion & Merging | Makes n comparisons when the data is already sorted or reverse sorted. |
| Cubesort | | | | | Yes | Insertion | Makes n comparisons when the data is already sorted or reverse sorted. |
| Shell sort | | | Depends on gap sequence | | No | Insertion | Small code size, no use of call stack, reasonably fast, useful where memory is at a premium such as embedded and older mainframe applications. |
| Bubble sort | | | | | Yes | Exchanging | Tiny code size. |
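The quicksort note about three-way (fat) partitioning can be illustrated with a short Python sketch. It follows the standard Dijkstra-style partition into "less than", "equal to", and "greater than" regions; the function name `quicksort3` and the random pivot choice are assumptions for this example, not details from the table.

```python
import random


def quicksort3(a, lo=0, hi=None):
    """Sort list a in place using three-way partitioning around a random pivot."""
    if hi is None:
        hi = len(a) - 1
    if lo >= hi:
        return
    pivot = a[random.randint(lo, hi)]
    lt, i, gt = lo, lo, hi
    # Invariant: a[lo:lt] < pivot, a[lt:i] == pivot, a[gt+1:hi+1] > pivot.
    while i <= gt:
        if a[i] < pivot:
            a[lt], a[i] = a[i], a[lt]
            lt += 1
            i += 1
        elif a[i] > pivot:
            a[i], a[gt] = a[gt], a[i]
            gt -= 1
        else:
            i += 1
    # Keys equal to the pivot are already in place and are never revisited.
    quicksort3(a, lo, lt - 1)
    quicksort3(a, gt + 1, hi)


if __name__ == "__main__":
    data = [4, 1, 4, 3, 4, 2, 4]
    quicksort3(data)
    print(data)
```

When every key is equal, a single partitioning pass places the whole array in the "equal" region and both recursive calls return immediately, which is where the linear number of comparisons comes from.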

TJ IOI 2017 Written Round Solutions

Thomas Jefferson High School for Science and Technology. Saturday, January 13, 2018.

Contents: 1 Introduction; 2 Bead sort; 3 Elementary sorts (3.1 Selection sort, 3.2 Insertion sort); 4 Linearithmic sorts (4.1 Merge sort); 5 Radix Sort (5.1 Key-Indexed Sorting, 5.2 Sorting Strings, 5.3 Least Significant Digit Radix Sort, 5.4 Most Significant Digit Radix Sort).

1 Introduction. This document will outline possible solutions to the written round of TJ IOI 2017. At the time of the contest, grading was performed without formally written solutions, so these have been written after the fact. These solutions may be useful for teams preparing for future TJ IOI contests, and should be considered more relevant than written rounds from the TJ IOI contests in 2012 or 2013. It is worth noting that errors in the problem statements exist; we regret the presence of any such errors, and have tried to clarify the ones we have found in the solutions below. If more errors are found, please contact the TJ IOI officers so that this document can be updated appropriately.

2 Bead sort. This section was intended as a fun complement to the T-shirt designed, which prominently featured bead sort on the reverse. Though it wasn't very serious, we hope it was enjoyable to think about! Problem 2.1 (4 points). According to physics, the motion of a falling object that starts at rest is described by $\Delta y = -\frac{1}{2} g t^2$, where g is the acceleration of gravity relative to Earth's surface.