Development of Photogrammetric Methods for Landslide Analysis

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

Old Guys Merge ,.Put on Hold TERRACE -- One Northwest Imately Three Corona Common a Year -- Once the Deal Goes Area of Northern Ontario

m • i bu d get ) ' old guys merge ,.put on hold TERRACE -- One northwest imately three Corona common a year -- once the deal goes area of northern Ontario. TERRACE -- The city has municipalities now receive. gold mine and one under shares for each Homestake one. through. For its part Homestake is postponed finalizing its 1992 Reduction or withdrawal budget until the provincial development will find Analysts generally favour the International Corona now considered an expert in of any of those grants would government hands down its themselves under the same cor- deal, saying Homestake has the produces 600,000 ounces of autoclaving, the type of change the cost to the city of own budget tomorrow. porate roof if a merger goes kind of money needed for the gold a year. metallurgical process that'll be a number of projects which Alderman and finance through. Eskay Creek mine which is ex- used to extract gold at Eskay are in~,lded in the city's Homestake and International Creek. committee chairman Danny Involved is a shareswap bet- pected to cost $210 million. latest draft budget, said Corona have already approved This is the second major cor- Sheridan said the New Sheridan. ween Homestake Mining Co., A final feasibility study for the deal and it's expected to be porate event in the last while to Democrat government's con- He anticipated the city will which has the controlling in- that project is expected later this ready in time for a mid-May an- affect the Eskay Creek proper- tinual warnings about a shor- bring down its budget by the terest in the Golden Bear Mine year. -

Goodwill Message NABC Success Formula

Saturday, December 4, 2010 Volume 83, Number 9 Daily Bulletin 83rd North American Bridge Championships Editors: Brent Manley and Dave Smith NABC success formula: Nickell leads Reisinger, going for three straight hard work and good help The team captained by Nick Nickell, winners of the Reisinger Board-a-Match Teams in 2008 and 2009, took the lead after two qualifying sessions as they strive for a third straight title in one of the ACBL’s toughest contests. Nickell has won the event seven times with few changes in the roster. His current team is Ralph Katz, Bob Hamman, Zia Mahmood, Jeff Meckstroth and Eric Rodwell. The field was reduced to 20 teams for today’s two semifinal sessions. Nickell’s carryover of 11.47 is less than a board ahead of the second-place team: Magnus Eriksson, Sandra Rimstedt, Shane Blanchard and Bob Blanchard. Ten teams will play in the final two sessions on Sunday. Muriel Altus and Jayne Thomas Phil Altus Two tied for lead in When it comes to putting on an NABC, this trademarks of all Florida tournaments.” North America Swiss year’s chairs – Jayne Thomas, Muriel Altus and She also recognized the hard work of the Phil Altus – knew just what to do: recruit the right various volunteer chairs (see the list at the end of Teams headed by Betty Bloom and Jun Liu volunteers. this article). “I want to thank all the volunteers who enter today’s place in the Keohane North American At the 2010 Fall NABC in Orlando, the payoff put in countless hours to make the tournament a Swiss teams in a dead tie, both with carryover of was lots of compliments from the players. -

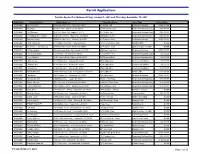

2021 Building Permits

Permit Applications Permits Applied For Between Friday, January 1, 2021 and Thursday, September 30, 2021 Application Property Owner Mailing Address Property Address Project Type Project Value 01/04/2021 Bennett Bixler 6424 Blue Water Drive Buford GA 30518- 91 Joshua Trail Residential Building $ 826,831.00 01/04/2021 DJ Wight PO Box 1327 Dahlonega GA 30533- 129 Hickory Nut Trail Residential Building Other $ 80,000.00 01/04/2021 Jeff Stevens 850 31ST Street NW Naples FL 34117- 476 Wildcat Trail Residential Building Other $ 50,000.00 01/04/2021 CHARLES MATHESON 210 Matheson Drive Dawsonville GA 30534- 210 Matheson Drive Residential Building Other $ 40,000.00 01/05/2021 Michael Vinson 575 Fawn Glen Court Roswell GA 30075- 32 Fieldstone Court Residential Building $ 600,000.00 01/06/2021 JBA Homes Inc 277 Indian Cave Road Ellijay GA 30536- 724 River Overlook Road Electrical RES $ 0.00 01/06/2021 Reminisce residential LLC 575 Fawn Glen Court Roswell GA 30075- 32 Fieldstone Court Land Development RES $ 0.00 01/06/2021 William Hobbs 76 Farmhouse Road Ball Ground GA 30107- 354 Bear Creek Drive Residential Building $ 750,000.00 01/06/2021 Jimmy Davenport Olivia Lane Ball Ground GA 30107- 178 Sunshine Drive Residential Building Other $ 2,400.00 01/06/2021 Troy Caldwell 1126 Yahoola Road Dahlonega GA 30533- 235 Dogwood Drive Residential Building Other $ 4,000.00 01/07/2021 Manor Lake 316 Hillside Drive Waleska GA 30183- 3769 Kallie Circle Commercial Building $ 2,000.00 01/07/2021 Manor Lake 316 Hillside Drive Waleska GA 30183- 3769 Kallie Circle Commercial -

Anchorage, AK 99515

Connor Williams Christopher Mahon Doug Jensen 2-26021 Twp Rd 544 PSC 123 Box 35r Cochrane, AB T4C 1E7 Sturgeon County, AB T8T 1M8 APO, AE 09719-0001 Jennifer Armstrong Antony Luesby Cecile Ferrell 1309 Sloan St # 2 1 , AK 99901 North Pole, AK 99705-5808 1, AK 12345 dogan ozkan Britton Kerin abbasagamahallesi yildiz vaddesi no Patricia Blank 232 Henderson Rd S 39/1 , AK 99827 Fairbanks, AK 99709-2345 besiktas istanbul turkey, AK 99701 Patti Lisenbee Carla Dummerauf Margaret McNeil 601 Cherry St Apt 2 4201 Davis St 841 75th Anchorage, AK 99504-2148 Anchorage, AK 90551 Anch, AK 99518 David Kreiss-Tomkins Courtney Johnson Gabriel Day 313 Islander Dr , AK , AK Sitka, AK 99835-9730 Derek Monroe Deborah Voves Gael Irvine 1705 Morningtide Ct 13231 Mountain Pl 8220 E Edgerton-Parks Rd Anchorage, AK 99501-5722 Anchorage, AK 99516-3150 Palmer, AK 99645 Hayden Kaden Jean James John Bennett PO Box 138 3526 Ida Ln , AK 90709 Gustavus, AK 99826-0138 Fairbanks, AK 99709-2803 James Mathewswon Joanne Rousculp Kray Van Kirk 314 N Tiffany Dr 9800 Tern Dr 1015 Arctic Cir Palmer, AK 99645-7739 Palmer, AK 99645-9103 Juneau, AK 99801-8754 Marie Pedraza Nathaniel Perry Mary Klippel 658 N Angus Loop PO Box 71002 , AK 99577 Palmer, AK 99645-9507 Shaktoolik, AK 99771-1002 Arlene Reber raymond pitka Pamela Minkemann 2311 W 48th Ave PO Box 71578 Anchorage, AK 99515 Anchorage, AK 99517-3173 Fairbanks, AK 99707-1578 Dirk Nelson Kevin Shaffer Marc Dumas PO Box 283 123 Post Office Dr 1166 Skyline Dr Ester, AK 99725-0283 Moose Pass, AK 99631 Fairbanks, AK 99712-1309 Samuel Molletti John S. -

Alone in the Dark 3

Not sold in cognito ALONE IN THE DARK 3 For starters, you can adjust the diffi Hartwood, whom you rescued from the culty level for combat. Alignment to use Derceto Mansion two years ago, in ne of the most innova an item at a location still presented Alone in the Dark. tive animated graphic minor problems, but it didn't seem to· be So, you remove your trusty .38 adventures of 1993 was as precise as Special Alone in the Dark. It set required in from your polygonal characters Alone in the . desk and set against a beautifully rendered back Darkand off for ground, music illustrating the mood, Alone in the Slaughter great sound effects and an absorbing sto Dark2. Gulch and ryline. Hitting whatever Utilizing a 3-D rendered, 360-degree the Tab key horrors it rotational view, Alone introduced us to a brings up a,n may hold. multitude of camera angles. These overhead 3- Upon ranged from top-down views to close D view of your arrival. ups, from floor level shots to reverse the game you cross a angles and "looking up" pans. Alone area and a ii&..----....1 bridge into went on to become one of the best hits flashing red arrow points to where you town, only to have it blow up behind of 1993. are. you. There is no going back now. You A year later we had Alone in the In this 3rd - and hopefully not last must forge ahead and solve the mystery Dark 2, again starring Edward Carnby. - chapter in the saga of of Slaughter Gulch. -

Regent Theatre Ulumbarra Theatre, Bendigo

melbourne opera Part One of e Ring of the Nibelung WAGNER’S Regent Theatre Ulumbarra Theatre, Bendigo Principal Sponsor: Henkell Brothers Investment Managers Sponsors: Richard Wagner Society (VIC) • Wagner Society (nsw) Dr Alastair Jackson AM • Lady Potter AC CMRI • Angior Family Foundation Robert Salzer Foundation • Dr Douglas & Mrs Monica Mitchell • Roy Morgan Restart Investment to Sustain and Expand (RISE) Fund melbourneopera.com Classic bistro wine – ethereal, aromatic, textural, sophisticated and so easy to pair with food. They’re wines with poise and charm. Enjoy with friends over a few plates of tapas. debortoli.com.au /DeBortoliWines melbourne opera WAGNER’S COMPOSER & LIBRETTIST RICHARD WAGNER First performed at Munich National Theatre, Munich, Germany on 22nd September 1869. Wotan BASS Eddie Muliaumaseali’i Alberich BARITONE Simon Meadows Loge TENOR James Egglestone Fricka MEZZO-SOPRANO Sarah Sweeting Freia SOPRANO Lee Abrahmsen Froh TENOR Jason Wasley Donner BASS-BARITONE Darcy Carroll Erda MEZZO-SOPRANO Roxane Hislop Mime TENOR Michael Lapina Fasolt BASS Adrian Tamburini Fafner BASS Steven Gallop Woglinde SOPRANO Rebecca Rashleigh Wellgunde SOPRANO Louise Keast Flosshilde MEZZO-SOPRANO Karen Van Spall Conductors Anthony Negus, David Kram FEB 5, 21 Director Suzanne Chaundy Head of Music Raymond Lawrence Set Design Andrew Bailey Video Designer Tobias Edwards Lighting Designer Rob Sowinski Costume Designer Harriet Oxley Set Build & Construction Manager Greg Carroll German Language Diction Coach Carmen Jakobi Company Manager Robbie McPhee Producer Greg Hocking AM MELBOURNE OPERA ORCHESTRA The performance lasts approximately 2 hours 25 minutes with no interval. 
Principal Sponsor: Henkell Brothers Investment Managers Sponsors: Dr Alastair Jackson AM • Lady Potter AC CMRI • Angior Family Foundation Robert Salzer Foundation • Dr Douglas & Mrs Monica Mitchell • Roy Morgan Restart Investment to Sustain and The role of Loge, performed by James Egglestone, is proudly supported by Expland (RISE) Fund – an Australian the Richard Wagner Society of Victoria. -

THE KINDEST PEOPLE: HEROES and GOOD SAMARITANS, VOLUME 4 by David Bruce Dedicated to Caleb, Hartley, and Michelle SMASHWORDS EDITION Copyright 2012 by Bruce D

THE KINDEST PEOPLE: HEROES AND GOOD SAMARITANS, VOLUME 4 By David Bruce Dedicated to Caleb, Hartley, and Michelle SMASHWORDS EDITION Copyright 2012 by Bruce D. Bruce Thank you for downloading this free ebook. You are welcome to share it with your friends. This book may be reproduced, copied and distributed for non-commercial purposes, provided the book remains in its complete original form. If you enjoyed this book, please return to Smashwords.com to discover other works by this author. Thank you for your support. ••• CHAPTER 1: STORIES 1-50 In a Hurry to be Born In January 2012 in New York, Rabita Sarkar, age 31, of Harrison, New Jersey, was commuting with her husband, Aditya Saurabh, on a PATH commuter train to Manhattan’s Roosevelt Hospital because she was feeling contractions although her baby was not yet due. They did not make it to the hospital in time; she gave birth to a boy on the train. Ms. Sarkar said, “It’s just that this guy had other plans, and he came out earlier.” On the train, her contractions came more quickly, and her husband looked and saw that the baby’s head was already coming out. After Ms. Sarkar gave birth, a little girl on the train offered her jacket to be used to keep the newborn warm. PATH officials directed the train to skip most of its stops, so that the couple and baby could get to the hospital quickly. Emergency personnel, including police officer Atiba Joseph-Cumberbatch, met the couple and newborn and took them to the hospital. -

Proposal Keeps Quarters, Adds Courses in 974 the Educational Policy Com- the Proposal Begins with a Parts of Multlunlt Modules

The College of Wooster Open Works The oV ice: 1971-1980 "The oV ice" Student Newspaper Collection 5-25-1973 The oW oster Voice (Wooster, OH), 1973-05-25 Wooster Voice Editors Follow this and additional works at: https://openworks.wooster.edu/voice1971-1980 Recommended Citation Editors, Wooster Voice, "The oosW ter Voice (Wooster, OH), 1973-05-25" (1973). The Voice: 1971-1980. 67. https://openworks.wooster.edu/voice1971-1980/67 This Book is brought to you for free and open access by the "The oV ice" Student Newspaper Collection at Open Works, a service of The oC llege of Wooster Libraries. It has been accepted for inclusion in The oV ice: 1971-1980 by an authorized administrator of Open Works. For more information, please contact [email protected]. Old Lady: Mr. Fields, W. C. Fields: Modem, why don't you drink I tish tornicato in water. , water? 1 1 I PUBLISHED BY THE STUDENTS OF THE COLLEGE OF. WOOSTER Volume LXXXIX Wooster, Ohio, Friday, May 25, 1973 Number 24 EPC suggests new curriculum Proposal keeps quarters, adds courses in 974 The Educational Policy Com- The proposal begins with a parts of multlunlt modules. This an oral examination. Freshman Colloquium program, mittee brought its long-await- ed recommendation to keep the effectively changes the grad- After reviewing the language the committee feels a strong need curriculum change proposal to course-quart- er calendar. Fur- uation requirement to 36 courses. requirement the committee to Insure freshmen at least one the faculty at Monday night's thermore, It suggests that the There were two major reasons suggests the offering of a number small seminar course that can faculty meeting. -

Results Book

q DALLASDALLAS MARATHONMARATHON 2004 Dallas White Rock Marathon Benefi tingTexas Scottish Rite Hospital for Children Sunday. December 11. 2005 a.-ooam RUN he ROCkl DALLAS whne R~k MARATHON :2.005 Amedc:on Alrlne, Center - VlctGly Plozo, Doll•, lexos Pu■ MGrathon • Hal M<lroltlon • 5-1'- 11-'<ly Lalla• 1'111• MOMY l'urs. - hit l!!q>o In 1M Soultlw..t Mote than ao land& Along a Scenic Coul'H www.runtherocllc.com !Mn.at, T II; X A ■ SCOTTISH RITE HOSPITAL Cl 11·.. lllllllllti 17> Salute to Pat Cheshier The runners and supporters of the Dallas White Rock Marathon owe a debt of gratitude to countless family, friends and volunteers who make behind-the-scenes contributions that help The Rock run. At the top of that 2 Dear Runners list is Pat Cheshier, recently retired 5 Dallas Police Association Senior Corporal from the Dallas Police Department. Pat is a 33-year veteran 6 The Course of the DPD and served as head of 8 Texas Shindig the Special Operations Division of the DPD since 1991. For the past 14 10 Hall of Fame years, Pat has been personally responsible for the safety of our runners, and 11 Top Finishers virtually every other running event in the City of Dallas. 13 Victory Award for Excellence Pat and his colleagues at the Dallas Police Department are heroes to those of us 14 For The Love of The Lake who run. We are particularly proud that the Dallas Police Association has, for the second year, donated their services to the Dallas White Rock Marathon so that 15 A Day at TSRH the Marathon could contribute more money to the Texas Scottish Rite Hospital for 16 Fitness Expo Children. -

Agenda of the Lee County Board June 17, 2021 6:00 P.M

County [[inois Agenda of the Lee County Board June 17, 2021 6:00 P.M. 3rd Floor Boardroom Old Lee County Courthouse 112 E. Second St. Dixon, IL 61021 Call to Order: Pledge of Allegiance: Roll Call: Announcements: a. Please mute or turn off cell phones. Approval of Board Minutes of: Regular County Board meeting. Resolution a. Two (2) Joseph E. Meyer Resolutions-Cancellation of Certificates-Certificate 2018-00348 PPN# 13-21-12-278-036 and Certificate 2018-00349 PPN# 13-21-12-278-037. To Zoning Board: a. Petition 21-P-1567, Petitioner: Lee County Zoning Office. PPN# 19-22-25-400-004 in Sublette Township. Petitioner is requesting a map amendment from 1-2 General Industrial District to Ag-1 Agricultural District. b. Petition 21-P-1568, Petitioner: Lee County Zoning Office. PPN# 20-11-14-400-008 in Viola Township. Petitioner is requesting a map amendment from C-3 General Business District to Ag-1 Agricultural District. c. Petition 21-P-1569, Petitioner: Lee County Zoning Office. PPN# 20-11-14-400-007 in Viola Township. Petitioner is requesting a map amendment from C-3 General Business District to Ag-1 Agricultural District. d. Petition 21-P-1570, Petitioner: Lee County Zoning Office. PPN# 20-11-14-300-009 in Viola Township. Petitioner is requesting a map amendment from C-3 General Business District to Ag-1 Agricultural District. e. Petition 21-P-1571, Petitioner: Lee County Zoning Office. PPN# 20-11-14-300-008 in Viola Township. Petitioner is requesting a map amendment from C-3 General Business District to Ag-1 Agricultural District. -

Graduation Link

MainThe Official Magazine of RAF HaltonpointAutumn 2015 Air cadet Graduation link Support Television personality and Air Cadet Ambassador, Honorary Group Captain Wing Carol Vorderman, attended the latest graduation parade at RAF Halton Special Families Welfare Day caravan opens The grand opening of the Halton An opportunity for the Station to give Lodge eight berth welfare caravan something back to the families who provide took place at Pagham near such wonderful support to its personnel Chichester in Sussex Mainpoint Autumn 2015 1 STATION SNIPPETS | COMMUNITY AND CHARITY | SPT WG SPECIAL MainThe Official Magazine of RAF HaltonpointAutumn 2015 Foreword Station Commander’s Foreword Air cadet OC Graduation link Support Television personality and Air Cadet Ambassador, Honorary Group Captain Carol Vorderman, attended the latest graduation parade at RAF Halton Wing Special Group Captain A S Burns MSc MA BSc RAF ED’S GARAGE One team, training people for Defence Your first stop Families Welfare Day caravan opens The grand opening of the Halton An opportunity for the Station to give Lodge eight berth welfare caravan something back to the families who provide took place at Pagham near such wonderful support to its personnel Chichester in Sussex Mainpoint Autumn 2015 1 STATION SNIPPETS | CO MMUNITY AND C H A R ITY | A RME D FO R CES DA Y for vehicle care Editorial Team in Watford and Hemel Hempstead & Aylesbury Editor FS Greg Saunders IMLC DS Airmen’s Command Squadron Beat the Credit Crunch at EDS Tel: 01296 656380 Email: [email protected] ALL MAKES & MODELS SERVICED Deputy Editor Cpl Laura Taylor • Brakes • Servicing • Batteries Welfare and Support Personnel Tel: 01296 656929 • Clutches • State of the • Tyres Email: [email protected] Distribution • Exhausts art diagnostic • State of the art Courtesy of the Central Registry and Fire Section • MOT’s equipment Tracking system Photography Support 20% Off Service Labour & Repair Kate Rutherford, Chris Yarrow & Luka Waycott. -

2013 Results

2013 Results Archery - Target Female Adult (18-54) Freestyle 7/28/2013 1. MISTY YOUNG (COLORADO SPRINGS, CO) 874 2. KAYLEE GEIST (COLORADO SPRINGS, CO) 873 3. ANNE GEIST (COLORADO SPRINGS, CO) 871 4. CHRISTINA STEVENS (FOUNTIAN, CO) 829 5. JEANNE SWAEBY (LAFAYETTE, CO) 792 6. BROOKE WARDRIP (AURORA, CO) 731 Female Adult (18-54) Freestyle Bowhunter 7/28/2013 1. BRENDA FLORES (JOHNSTOWN, CO) 797 Female Adult (18-54) Freestyle Limited 7/28/2013 1. REGINA MONTOYA (AURORA, CO) 692 2. CANDI BOYER (DENVER, CO) 662 3. REBECCA BOWEN (LAFAYETTE, CO) 648 4. SHELLY PINGEL (EVANS, CO) 577 Female Adult (18-54) Traditional 7/28/2013 1. GRETA KNIEVEL (LONGMONT, CO) 515 2. ALETHIA FENNEY (LONGMONT, CO) 475 3. ARIEL STEELE (LONGMONT, CO) 286 4. EMILY NAVA (LONGMONT, CO) 201 Female Cub Freestyle 7/28/2013 1. KEEGAN LEWIS (HIGHLANDS RANCH, CO) 852 Female Cub Freestyle Bowhunter 7/28/2013 1. ALINA HARPER (HIGHLANDS RANCH, CO) 672 2. JESSICA SIMS (PEYTON, CO) 653 Female Cub Freestyle Limited Recurve 7/28/2013 1. ZACHARY KESSLER (CENTENNIAL, CO) 238 Female Cub Freestyle Limited Recurve 7/28/2013 1. TATYANA LABLUE (COLORADO SPRINGS, CO) 806 2. ERIN FLORES (JOHNSTOWN, CO) 618 3. MADELYN GOLDBERG (HIGHLANDS RANCH, CO) 541 4. BETHANY HINMAN (COLORADO SPRINGS, CO) 469 Female Senior (55-64) Freestyle Bowhunter 7/28/2013 1. TAMMY BREDY (CEDAR CREST, NM) 846 Female Young Adult (15-17) Freestyle 7/28/2013 1. JOLIE BATY (ENGLEWOOD, CO) 853 2. SARAH BOYD (COLORADO SPRINGS, CO) 811 3. JENNIFER HEIMERMAN (COLORADO SPRINGS, CO) 796 4. CLAIRE LANDWENR (COLORADO SPRINGS, CO) 730 5.