Consumer Online Privacy Hearing Committee On

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

EPIC Statement 1 Social Media Privacy Senate Judiciary and Commerce Committees April 9, 2018

April 9, 2018 Senator Chuck Grassley, Chairman Senator Dianne Feinstein, Ranking Member Committee on the Judiciary 224 Dirksen Senate Office Building Washington, D.C. 20510 Senator John Thune, Chairman Senator Bill Nelson, Ranking Member Committee on Commerce, Science and Transportation 512 Dirksen Senate Building Washington, D.C. 20510 Dear Members of the Senate Judiciary Committee and the Senate Commerce Committee: We write to you regarding the joint hearing this week on “Facebook, Social Media Privacy, and the Use and Abuse of Data.”1 We appreciate your interest in this important issue. For many years, the Electronic Privacy Information Center (“EPIC”) has worked with both the Judiciary Committee and the Commerce Committee to help protect the privacy rights of Americans.2 In this statement from EPIC, we outline the history of Facebook’s 2011 Consent Order with the Federal Trade Commission, point to key developments (including the failure of the FTC to enforce the Order), and make a few preliminary recommendations. Our assessment is that the Cambridge Analytica breach, as well as a range of threats to consumer privacy and democratic institutions, could have been prevented if the Commission had enforced the Order. 1 Facebook, Social Media Privacy, and the Use and Abuse of Data: Hearing Before the S. Comm. on the Judiciary, 115th Cong. (2018), https://www.judiciary.senate.gov/meetings/facebook-social-media-privacy-and-the-use-and-abuse-of-data (April 10, 2018). 2 See, e.g., The Video Privacy Protection Act: Protecting Viewer Privacy in the 21st Century: Hearing Before the S. Comm. on the Judiciary, 112th Cong. -

An Examination of the Unanticipated Outcomes of Having a Fun Organizational Culture

University of Tennessee at Chattanooga UTC Scholar Student Research, Creative Works, and Honors Theses Publications 5-2018 An examination of the unanticipated outcomes of having a fun organizational culture Victoria Baltz University of Tennessee at Chattanooga, [email protected] Follow this and additional works at: https://scholar.utc.edu/honors-theses Part of the Business Administration, Management, and Operations Commons Recommended Citation Baltz, Victoria, "An examination of the unanticipated outcomes of having a fun organizational culture" (2018). Honors Theses. This Theses is brought to you for free and open access by the Student Research, Creative Works, and Publications at UTC Scholar. It has been accepted for inclusion in Honors Theses by an authorized administrator of UTC Scholar. For more information, please contact [email protected]. An Examination of the Unanticipated Outcomes of Having a Fun Organizational Culture Victoria Baltz Departmental Honors Thesis The University of Tennessee Chattanooga Management Examination Date: March 26th, 2018 Randy Evans, Ph.D. Philip Roundy, Ph.D. Associate Professor of Management, UC Foundation Assistant Professor of UC Foundation Associate Professor Entrepreneurship Thesis Advisor Department Advisor Richard Allen, Ph.D. UC Foundation Professor of Management Department Advisor Abstract Fun organizational cultures break the social constructs of the traditional work environment. Fun organizational cultures have been gaining popularity over the past couple decades. Since fun cultures are gaining attractiveness due to reports focused primarily on the positive aspects of fun cultures, this study examined if there were any unanticipated negative outcomes of having a fun organizational culture. Qualitative data was pulled from interviews conducted with various members of fun organizational cultures. -

Administration of Barack Obama, 2015 Remarks at the CEO Summit of the Americas in Panama City, Panama April 10, 2015

Administration of Barack Obama, 2015 Remarks at the CEO Summit of the Americas in Panama City, Panama April 10, 2015 Well, thank you, Luis. First of all, let me not only thank you but thank our host and the people of Panama, who have done an extraordinary job organizing this summit. It is a great pleasure to be joined by leaders who I think have done extraordinary work in their own countries. And I've had the opportunity to work with President Rousseff and Peña Nieto on a whole host of regional, international, and bilateral issues and very much appreciate their leadership. And clearly, President Varela is doing an outstanding job here in Panama as well. A lot of important points have already been made. Let me just say this. When I came into office, in 2009, obviously, we were all facing an enormous economic challenge globally. Since that time, both exports from the United States to Latin America and imports from Latin America to the United States have gone up over 50 percent. And it's an indication not only of the recovery that was initiated—in part by important policies that were taken and steps that were taken in each of the countries in coordination through mechanisms like the G–20—but also the continuing integration that's going to be taking place in this hemisphere as part of a global process of integration. And I'll just point out some trends that I think are inevitable. One has already been mentioned: that global commerce, because of technology, because of logistics, it is erasing the boundaries by which we think about businesses not just for large companies, but also for small and medium-sized companies as well. -

Vumber Gives You Throw-Away Phone Numbers for Dating, Work

Err, Call Me: Vumber Gives You Throw-Away Phone Numbers For Dating, Work Jason Kincaid By now you’ve probably heard of Google Voice, a service that lets you take one phone number and configure it to ring all of your phones (work, mobile, home, whatever) with plenty of settings to manage your inbound and outbound calls. But what if you wanted the opposite: a service that lets you spawn a multitude of phone numbers to be used and discarded at your leisure? That’s where Vumber comes in. The service has actually been around for four years, but it was originally marketed exclusively toward people on dating sites. The use case is obvious: instead of handing out your real phone number to strangers, Vumber lets you spin up a new phone number, which you then redirect to your real phone. -

Workshop on Venture Capital and Antitrust, February 12, 2020

Venture Capital and Antitrust Transcript of Proceedings at the Public Workshop Held by the Antitrust Division of the United States Department of Justice February 12, 2020 Paul Brest Hall Stanford University 555 Salvatierra Walk Stanford, CA 94305 Table of Contents: Opening Remarks; Fireside Chat with Michael Moritz: Trends in VC Investment: How did we get here?; Antitrust for VCs: A Discussion with Stanford Law Professor Doug Melamed; Panel 1: What explains the Kill Zones?; Afternoon Remarks; Panel 2: Monetizing data; Panel 3: Investing in platform-dominated markets; Roundtable: Is there a problem and what is the solution?; Closing Remarks. Public Workshop on Venture Capital and Antitrust, February 12, 2020 Opening Remarks • Makan Delrahim, Assistant Attorney General for Antitrust, Antitrust Division, U.S. Department of Justice MAKAN -

M&A @ Facebook: Strategy, Themes and Drivers

A Work Project, presented as part of the requirements for the Award of a Master Degree in Finance from NOVA – School of Business and Economics M&A @ FACEBOOK: STRATEGY, THEMES AND DRIVERS TOMÁS BRANCO GONÇALVES STUDENT NUMBER 3200 A Project carried out on the Masters in Finance Program, under the supervision of: Professor Pedro Carvalho January 2018 Abstract Most deals are motivated by the recognition of a strategic threat or opportunity in the firm’s competitive arena. These deals seek to improve the firm’s competitive position or even obtain resources and new capabilities that are vital to future prosperity, and improve the firm’s agility. The purpose of this work project is to make an analysis on Facebook’s acquisitions’ strategy going through the key acquisitions in the company’s history. More than understanding the economics of its most relevant acquisitions, the main research is aimed at understanding the strategic view and key drivers behind them, and trying to set a pattern through hypotheses testing, always bearing in mind the following question: Why does Facebook acquire emerging companies instead of replicating their key success factors? Keywords Facebook; Acquisitions; Strategy; M&A Drivers “The biggest risk is not taking any risk... In a world that is changing really quickly, the only strategy that is guaranteed to fail is not taking risks.” Mark Zuckerberg, founder and CEO of Facebook 2 Literature Review M&A activity has had peaks throughout the course of history and different key industry-related drivers triggered that same activity (Sudarsanam, 2003). Historically, the appearance of the first mergers and acquisitions coincides with the existence of the first companies and, since then, in the US market, there have been five major waves of M&A activity (as summarized by T.J.A. -

Mark Zuckerberg I'm Glad We Got a Chance to Talk Yesterday

Mark Zuckerberg I'm glad we got a chance to talk yesterday. I appreciate the open style you have for working through these issues. It makes me want to work with you even more. I was thinking about our conversation some more and wanted to share a few more thoughts. On the thread about Instagram joining Facebook, I'm really excited about what we can do to grow Instagram as an independent brand and product while also having you take on a major leadership role within Facebook that spans all of our photos products, including mobile photos, desktop photos, private photo sharing and photo searching and browsing. This would be a role where we'd be working closely together and you'd have a lot of space to shape the way that the vast majority of the world's photos are shared and accessed. We have ~300m photos added daily with tens of billions already in the system. We have almost 100m mobile photos a day as well and it's growing really quickly -- and that's without us releasing and promoting our mobile photos product yet. We also have a lot of our infrastructure built up around storing and serving photos, querying them, etc., which we can do some amazing things with. Overall I'm really excited about what you'd be able to do with this and what we could do together. One thought I had on this is that it might be worth you spending some time with.to get a sense for the impact you could have here and the value of using all of the infrastructure that we've built up rather than having to build everything from scratch at a startup. -

Social Network

FROM THE BLACK WE HEAR-- MARK (V.O.) Did you know there are more people with genius IQ’s living in China than there are people of any kind living in the United States? ERICA (V.O.) That can’t possibly be true. MARK (V.O.) It is. ERICA (V.O.) What would account for that? MARK (V.O.) Well, first, an awful lot of people live in China. But here’s my question: FADE IN: INT. CAMPUS BAR - NIGHT MARK ZUCKERBERG is a sweet looking 19 year old whose lack of any physically intimidating attributes masks a very complicated and dangerous anger. He has trouble making eye contact and sometimes it’s hard to tell if he’s talking to you or to himself. ERICA, also 19, is Mark’s date. She has a girl-next-door face that makes her easy to fall for. At this point in the conversation she already knows that she’d rather not be there and her politeness is about to be tested. The scene is stark and simple. MARK How do you distinguish yourself in a population of people who all got 1600 on their SAT’s? ERICA I didn’t know they take SAT’s in China. MARK They don’t. I wasn’t talking about China anymore, I was talking about me. ERICA You got 1600? MARK Yes. I could sing in an a Capella group, but I can’t sing. ERICA Does that mean you actually got nothing wrong? MARK I can row crew or invent a 25 dollar PC. -

Alternative Perspectives of African American Culture and Representation in the Works of Ishmael Reed

ALTERNATIVE PERSPECTIVES OF AFRICAN AMERICAN CULTURE AND REPRESENTATION IN THE WORKS OF ISHMAEL REED A thesis submitted to the faculty of San Francisco State University In partial fulfillment of The requirements for The Degree Master of Arts In English: Literature by Jason Andrew Jackl San Francisco, California May 2018 Copyright by Jason Andrew Jackl 2018 CERTIFICATION OF APPROVAL I certify that I have read Alternative Perspectives of African American Culture and Representation in the Works of Ishmael Reed by Jason Andrew Jackl, and that in my opinion this work meets the criteria for approving a thesis submitted in partial fulfillment of the requirement for the degree Master of Arts in English Literature at San Francisco State University. Geoffrey Grec/C Ph.D. Professor of English Sarita Cannon, Ph.D. Associate Professor of English ALTERNATIVE PERSPECTIVES OF AFRICAN AMERICAN CULTURE AND REPRESENTATION IN THE WORKS OF ISHMAEL REED Jason Andrew Jackl San Francisco, California 2018 This thesis demonstrates the ways in which Ishmael Reed proposes incisive counternarratives to the hegemonic master narratives that perpetuate degrading misportrayals of Afro American culture in the historical record and mainstream news and entertainment media of the United States. Many critics and readers have responded reductively to Reed’s work by hastily dismissing his proposals, thereby disallowing thoughtful critical engagement with Reed’s views as put forth in his fiction and non-fiction writing. The study that follows asserts that Reed’s corpus deserves more thoughtful critical and public recognition than it has received thus far. To that end, I argue that a critical re-exploration of his fiction and non-fiction writing would yield profound contributions to the ongoing national dialogue on race relations in America. -

Hammer Langdon Cv18.Pdf

LANGDON HAMMER Department of English [email protected] Yale University jamesmerrillweb.com New Haven CT 06520-8302 yale.edu bio page USA EDUCATION Ph.D., English Language and Literature, Yale University B.A., English Major, summa cum laude, Yale University ACADEMIC APPOINTMENT Niel Gray, Jr., Professor of English and American Studies, Yale University Appointments in the English Department at Yale: Lecturer Convertible, 1987; Assistant Professor, 1989; Associate Professor with tenure, 1996; Professor, 2001; Department Chair, 2005-fall 2008, Acting Department Chair, fall 2011 and fall 2013, Department Chair, 2014-17 and 2017-19 PUBLICATIONS Books In progress: Elizabeth Bishop: Life & Works, A Critical Biography (under contract to Farrar Straus Giroux) The Oxford History of Poetry in English (Oxford UP), 18 volumes, Patrick Cheney general editor; LH coordinating editor for Volumes 10-12 on American Poetry, and editor for Volume 12 The Oxford History of American Poetry Since 1939 The Selected Letters of James Merrill, edited by LH, J. D. McClatchy, and Stephen Yenser (under contract to Alfred A. Knopf) Published: James Merrill: Poems, Everyman’s Library Pocket Poets, selected and edited with a foreword by LH (Penguin RandomHouse, 2017), 256 pp James Merrill: Life and Art (Alfred A. Knopf, 2015), 944 pp, 32 pp images, and jamesmerrillweb.com, a website companion with more images, bibliography, documents, linked reviews, and blog Winner, Lambda Literary Award for Gay Memoir/Biography, 2016. Finalist for the Poetry Foundation’s Pegasus Award for Poetry Criticism, 2015. Named a Times Literary Supplement “Book of the Year, 2015” (two nominations, November 25). New York Times, “Top Books of 2015” (December 11). -

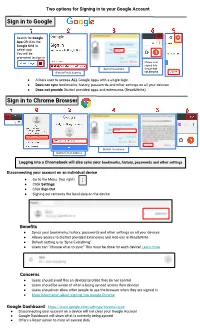

Signing in to Your Google Account File

Two options for Signing in to your Google Account

Sign in to Google
Search for Google App OR click the Google Grid to select app. You will be prompted to sign in with your District Username and District Email Address. Shows user signed into Google but not Chrome.
● Allows user to access ALL Google Apps with a single login
● Does not sync bookmarks, history, passwords and other settings on all your devices
● Does not provide District provided apps and extensions (Read&Write)

Sign in to Chrome Browser
Sign in with your District Username and District Email Address. Logging into a Chromebook will also sync your bookmarks, history, passwords and other settings.

Disconnecting your account on an individual device
● Go to the Menu (top right)
● Click Settings
● Click Sign Out
● Signing out removes the local data on the device

Benefits
● Syncs your bookmarks, history, passwords and other settings on all your devices
● Allows access to District provided Extensions and Add-ons, e.g. Read&Write
● Default setting is to “Sync Everything”
● Users can “Choose what to sync”. This must be done for each device! Learn more

Concerns
● Users should avoid this on devices/profiles they do not control
● Users should be aware of what is being synced across their devices
● Users should not allow other people to use the browser when they are signed in
● More information about signing into Google Chrome

Google Dashboard: https://www.google.com/settings/chrome/sync
● Disconnecting your account on a device will not clear your Google Account
● Google Dashboard will show what is currently being synced
● Offers a Reset option to clear all synced data. -

Noor Kharrat

Google and your Privacy By: Noor Kharrat Many take Google.com for granted. The number one most used search website and the number two most visited site has recently come under criticism from many individuals and groups over its privacy practices. Google is based in Mountain View, California and is regulated according to US laws. This means that Google has to follow the USA Patriot Act, a controversial Act of Congress that U.S. President George Bush signed into law on October 26, 2001. All applications and services that Google opens to the public are bound by that act. Google.ca, Google.co.uk, and Google.cn all fall under google.com. The servers that hold Google's international sites are still located on US soil. The rights group "Privacy International" rated the search giant as "hostile" to privacy in a report ranking web firms by how they handle personal data. The group said Google was leading a "race to the bottom" among Internet firms, many of whom had policies that did little to substantially protect users. (BBC news channel) Here are some of the reasons why Google was declared "hostile to privacy":1
• IP addresses are not considered personal information. They do not believe that they collect sensitive information. (privacyinternational.org, 2007)
• Google maintains records of all search strings and the associated IP addresses and time stamps for at least 18 to 24 months and does not provide users with an option. (privacyinternational.org, 2007)
• Google has access to additional personal information, including hobbies, employment, address, and phone number, contained within user profiles in Orkut, which is a social network similar to the very popular Facebook.