Lecture 18: How to Solve ODE? 18.1 Numerical

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Numerical Solution of Ordinary Differential Equations

NUMERICAL SOLUTION OF ORDINARY DIFFERENTIAL EQUATIONS Kendall Atkinson, Weimin Han, David Stewart University of Iowa Iowa City, Iowa A JOHN WILEY & SONS, INC., PUBLICATION Copyright © 2009 by John Wiley & Sons, Inc. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008. Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

Numerical Integration

Chapter 12 Numerical Integration Numerical differentiation methods compute approximations to the derivative of a function from known values of the function. Numerical integration uses the same information to compute numerical approximations to the integral of the function. An important use of both types of methods is estimation of derivatives and integrals for functions that are only known at isolated points, as is the case with, for example, measurement data. An important difference between differentiation and integration is that for most functions it is not possible to determine the integral via symbolic methods, but we can still compute numerical approximations to virtually any definite integral. Numerical integration methods are therefore more useful than numerical differentiation methods, and are essential in many practical situations. We use the same general strategy for deriving numerical integration methods as we did for numerical differentiation methods: We find the polynomial that interpolates the function at some suitable points, and use the integral of the polynomial as an approximation to the function. This means that the truncation error can be analysed in basically the same way as for numerical differentiation. However, when it comes to round-off error, integration behaves differently from differentiation: Numerical integration is very insensitive to round-off errors, so we will ignore round-off in our analysis. The mathematical definition of the integral is basically via a numerical integration method, and we therefore start by reviewing this definition. We then derive the simplest numerical integration method, and see how its error can be analysed. We then derive two other methods that are more accurate, but for these we just indicate how the error analysis can be done.
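As a minimal sketch of integrating a function that is only known at isolated points, the following applies the composite trapezoid rule to tabulated samples. The function name `trapezoid_tabulated` and the sample data are illustrative assumptions, not taken from the chapter.

```python
def trapezoid_tabulated(xs, ys):
    """Composite trapezoid rule for samples (xs[i], ys[i]).

    xs must be strictly increasing; the spacing does not have to be uniform.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two matching samples")
    total = 0.0
    for i in range(len(xs) - 1):
        # Area of the trapezoid spanned by two neighbouring samples.
        total += 0.5 * (xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1])
    return total


# Hypothetical "measurement data": samples of f(x) = 2x + 1 on [0, 2].
xs = [0.0, 0.5, 1.5, 2.0]
ys = [2 * x + 1 for x in xs]
print(trapezoid_tabulated(xs, ys))  # the rule is exact for linear data: 6.0
```

Each piece integrates the linear interpolant through two neighbouring samples, which is the lowest-degree case of the interpolate-then-integrate strategy described above.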

On the Numerical Solution of Equations Involving Differential Operators with Constant Coefficients 1

On the Numerical Solution of Equations Involving Differential Operators with Constant Coefficients. 1. The General Linear Differential Operator. Consider the differential equation of order n (1) $Ly + F(y, x) = 0$, where the operator L is defined by $Ly = \sum_{k=0}^{n} P_k(x)\,\frac{d^k y}{dx^k}$ and the functions $P_k(x)$ and $F(y, x)$ are such that a solution y and its first m derivatives exist in 0 < x < X. In the special case when (1) is linear the solution can be completely determined by the well known method of variation of parameters when n independent solutions of the associated homogeneous equations are known. Thus for the case when $F(y, x)$ is independent of y, the solution of the non-homogeneous equation can be obtained by mere quadratures, rather than by laborious stepwise integrations. It does not seem to have been observed, however, that even when $F(y, x)$ involves the dependent variable y, the numerical integrations can be so arranged that the contributions to the integral from the upper limit at each step of the integration, at the time when y is still unknown at the upper limit, drop out.

The Original Euler's Calculus-Of-Variations Method: Key

Submitted to EJP. Jozef Hanc, [email protected] The original Euler’s calculus-of-variations method: Key to Lagrangian mechanics for beginners. Jozef Hanc a) Technical University, Vysokoskolska 4, 042 00 Kosice, Slovakia. Leonhard Euler’s original version of the calculus of variations (1744) used elementary mathematics and was intuitive, geometric, and easily visualized. In 1755 Euler (1707-1783) abandoned his version and adopted instead the more rigorous and formal algebraic method of Lagrange. Lagrange’s elegant technique of variations not only bypassed the need for Euler’s intuitive use of a limit-taking process leading to the Euler-Lagrange equation but also eliminated Euler’s geometrical insight. More recently Euler’s method has been resurrected, shown to be rigorous, and applied as one of the direct variational methods important in analysis and in computer solutions of physical processes. In our classrooms, however, the study of advanced mechanics is still dominated by Lagrange’s analytic method, which students often apply uncritically using "variational recipes" because they have difficulty understanding it intuitively. The present paper describes an adaptation of Euler’s method that restores intuition and geometric visualization. This adaptation can be used as an introductory variational treatment in almost all of undergraduate physics and is especially powerful in modern physics. Finally, we present Euler’s method as a natural introduction to computer-executed numerical analysis of boundary value problems and the finite element method. I. INTRODUCTION In his pioneering 1744 work The method of finding plane curves that show some property of maximum and minimum,¹ Leonhard Euler introduced a general mathematical procedure or method for the systematic investigation of variational problems.

Improving Numerical Integration and Event Generation with Normalizing Flows — HET Brown Bag Seminar, University of Michigan —

Improving Numerical Integration and Event Generation with Normalizing Flows. HET Brown Bag Seminar, University of Michigan. Claudius Krause, Fermi National Accelerator Laboratory, September 25, 2019. In collaboration with: Christina Gao, Stefan Höche, Joshua Isaacson. arXiv: 191x.abcde. Claudius Krause (Fermilab) Machine Learning Phase Space September 25, 2019 1 / 27

Monte Carlo Simulations are increasingly important. https://twiki.cern.ch/twiki/bin/view/AtlasPublic/ComputingandSoftwarePublicResults MC event generation is needed for signal and background predictions. ⇒ The required CPU time will increase in the next years.

Monte Carlo Simulations are increasingly important. [Figure: two panels for W+jets, LHC@14TeV, pT,j > 20 GeV, |ηj| < 6. Left: frequency versus Ntrials at parton level and particle level for W+0j to W+9j. Right: CPUh / Mevt versus Njet for Sherpa MC @ NERSC and Sherpa / Pythia + DIY @ NERSC. Source: Stefan Höche, Stefan Prestel, Holger Schulz [1905.05120;PRD].] The bottlenecks for evaluating large final state multiplicities are a slow evaluation of the matrix element and a low unweighting efficiency.

A Brief Introduction to Numerical Methods for Differential Equations

A Brief Introduction to Numerical Methods for Differential Equations January 10, 2011 This tutorial introduces some basic numerical computation techniques that are useful for the simulation and analysis of complex systems modelled by differential equations. Such differential models, especially partial differential ones, have been extensively used in various areas from astronomy to biology, from meteorology to finance. However, if we ignore the differences caused by applications and focus on the mathematical equations only, a fundamental question will arise: Can we predict the future state of a system from a known initial state and the rules describing how it changes? If we can, how do we make the prediction? This problem, known as the Initial Value Problem (IVP), is one of the problems of greatest concern in numerical analysis for differential equations. In this tutorial, the Euler method is used to solve this problem and a concrete example of a differential equation, the heat diffusion equation, is given to demonstrate the techniques discussed. But before introducing the Euler method, numerical differentiation is discussed as a prelude to make you more comfortable with numerical methods. 1 Numerical Differentiation 1.1 Basic: Forward Difference Derivatives of some simple functions can be easily computed. However, if the function is too complicated, or we only know the values of the function at several discrete points, numerical differentiation is a tool we can rely on. Numerical differentiation follows an intuitive procedure. Recall what we know about the definition of differentiation: $\frac{df}{dx} = f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}$, which means that the derivative of function f(x) at point x is the difference between f(x + h) and f(x) divided by an infinitesimal h.
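A minimal sketch of the forward difference, obtained by replacing the limit in the definition with a small but finite step h. The test function (sin), the evaluation point, and the step sizes are illustrative choices, not taken from the tutorial.

```python
import math


def forward_difference(f, x, h):
    """Approximate f'(x) by the forward difference (f(x + h) - f(x)) / h."""
    return (f(x + h) - f(x)) / h


# The derivative of sin at x = 1 is cos(1); the error shrinks roughly in proportion to h.
exact = math.cos(1.0)
for h in (1e-1, 1e-2, 1e-3):
    approx = forward_difference(math.sin, 1.0, h)
    print(f"h={h:g}  approx={approx:.6f}  error={abs(approx - exact):.2e}")
```

For a smooth function the leading error term is (h/2)·f''(x), so each tenfold reduction of h should cut the error by about a factor of ten until round-off starts to dominate.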


Arxiv:1809.06300V1 [Hep-Ph] 17 Sep 2018

CERN-TH-2018-205, TTP18-034 Double-real contribution to the quark beam function at N³LO QCD. Kirill Melnikov,1,∗ Robbert Rietkerk,1,† Lorenzo Tancredi,2,‡ and Christopher Wever1,3,§ 1Institute for Theoretical Particle Physics, KIT, Karlsruhe, Germany 2Theoretical Physics Department, CERN, 1211 Geneva 23, Switzerland 3Institut für Kernphysik, KIT, 76344 Eggenstein-Leopoldshafen, Germany. Abstract We compute the master integrals required for the calculation of the double-real emission contributions to the matching coefficients of jettiness beam functions at next-to-next-to-next-to-leading order in perturbative QCD. As an application, we combine these integrals and derive the double-real emission contribution to the matching coefficient $I_{qq}(t, z)$ of the quark beam function. ∗ Electronic address: [email protected] † Electronic address: [email protected] ‡ Electronic address: [email protected] § Electronic address: [email protected] I. INTRODUCTION The absence of any evidence for physics beyond the Standard Model at the LHC implies a growing importance of indirect searches for new particles and interactions. An integral part of this complex endeavour are first-principles predictions for hard scattering processes in proton collisions with controllable perturbative accuracy. In recent years, we have seen a remarkable progress in an effort to provide such predictions. Indeed, robust methods for one-loop computations developed during the past decade, that allowed the theoretical description of a large number of processes with multi-particle final states through NLO QCD [1–6], were followed by the development of practical NNLO QCD subtraction and slicing schemes [7–17] and advances in computations of two-loop scattering amplitudes [18–29].

Further Results on the Dirac Delta Approximation and the Moment Generating Function Techniques for Error Probability Analysis in Fading Channels

International Journal of Computer Networks & Communications (IJCNC) Vol.5, No.1, January 2013 FURTHER RESULTS ON THE DIRAC DELTA APPROXIMATION AND THE MOMENT GENERATING FUNCTION TECHNIQUES FOR ERROR PROBABILITY ANALYSIS IN FADING CHANNELS Annamalai Annamalai 1, Eyidayo Adebola 2 and Oluwatobi Olabiyi 3 Center of Excellence for Communication Systems Technology Research Department of Electrical & Computer Engineering, Prairie View A&M University, Texas [email protected], [email protected], [email protected] ABSTRACT In this article, we employ two distinct methods to derive simple closed-form approximations for the statistical expectations of the positive integer powers of the Gaussian probability integral $E_{\gamma}[Q^{p}(\beta\Omega\sqrt{\gamma})]$ with respect to its fading signal-to-noise ratio (SNR) random variable γ. In the first approach, we utilize the shifting property of the Dirac delta function on three tight bounds/approximations for Q(.) to circumvent the need for integration. In the second method, tight exponential-type approximations for Q(.) are exploited to simplify the resulting integral in terms of only the weighted sum of the moment generating function (MGF) of γ. These results are of significant interest in the development of analytically tractable and simple closed-form approximations for the average bit/symbol/block error rate performance metrics of digital communications over fading channels. Numerical results reveal that the approximations based on the MGF method are much more versatile and can achieve better accuracy compared to the approximations derived via the asymptotic Dirac delta technique for a wide range of digital modulation schemes and wireless fading environments. KEYWORDS Moment generating function method, Dirac delta approximation, Gaussian quadrature approximation.

Numerical Integration of Functions with Logarithmic End Point

FACTA UNIVERSITATIS (NIŠ) Ser. Math. Inform. 17 (2002), 57–74 NUMERICAL INTEGRATION OF FUNCTIONS WITH LOGARITHMIC END POINT SINGULARITY∗ Gradimir V. Milovanović and Aleksandar S. Cvetković Abstract. In this paper we study some integration problems of functions involving logarithmic end point singularity. The basic idea of calculating integrals over algebras different from the standard algebra $\{1, x, x^2, \ldots\}$ is given and is applied to evaluation of integrals. Also, some convergence properties of quadrature rules over different algebras are investigated. 1. Introduction The basic motive for our work is a slow convergence of the Gauss-Legendre quadrature rule, transformed to (0, 1), (1.1) $I(f) = \int_0^1 f(x)\,dx \approx Q_n(f) = \sum_{\nu=1}^{n} A_\nu f(x_\nu)$, in the case when $f(x) = x^x$. It is obvious that this function is continuous (even uniformly continuous) and positive over the interval of integration, so that we can expect a convergence of (1.1) in this case. In Table 1.1 we give relative errors in Gauss-Legendre approximations (1.1), rel. err(f) = $|(Q_n(f) - I(f))/I(f)|$, for n = 30, 100, 200, 300 and 400 nodes. All calculations are performed in D- and Q-arithmetic, with machine precision ≈ $2.22 \times 10^{-16}$ and ≈ $1.93 \times 10^{-34}$, respectively. (Numbers in parentheses denote decimal exponents.) Received September 12, 2001. The paper was presented at the International Conference FILOMAT 2001 (Niš, August 26–30, 2001). 2000 Mathematics Subject Classification. Primary 65D30, 65D32. ∗The authors were supported in part by the Serbian Ministry of Science, Technology and Development (Project #2002: Applied Orthogonal Systems, Constructive Approximation and Numerical Methods).
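The experiment described above can be sketched in double precision as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code. The helper names (`legendre_and_derivative`, `gauss_legendre`, `integrate_01`, `reference_integral`) are hypothetical, the nodes are found by a plain Newton iteration, and the reference value uses the known series $\int_0^1 x^x\,dx = \sum_{k \ge 1} (-1)^{k+1} k^{-k}$. It makes no claim to reproduce the figures in Table 1.1.

```python
import math


def legendre_and_derivative(n, x):
    """Return P_n(x) and P_n'(x) via the three-term recurrence (n >= 1, |x| < 1)."""
    p_prev, p = 1.0, x
    for k in range(2, n + 1):
        p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
    dp = n * (x * p - p_prev) / (x * x - 1.0)
    return p, dp


def gauss_legendre(n):
    """Nodes and weights of the n-point Gauss-Legendre rule on [-1, 1]."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        x = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # standard initial guess
        for _ in range(100):  # Newton iteration on P_n
            p, dp = legendre_and_derivative(n, x)
            dx = p / dp
            x -= dx
            if abs(dx) < 1e-14:
                break
        _, dp = legendre_and_derivative(n, x)
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights


def integrate_01(f, n):
    """Q_n(f): the n-point Gauss-Legendre rule transformed from [-1, 1] to (0, 1)."""
    nodes, weights = gauss_legendre(n)
    return sum(0.5 * w * f(0.5 * (t + 1.0)) for t, w in zip(nodes, weights))


def reference_integral(terms=20):
    """I(f) for f(x) = x**x from the series sum_{k>=1} (-1)**(k+1) * k**(-k)."""
    return sum((-1) ** (k + 1) * k ** (-k) for k in range(1, terms + 1))


exact = reference_integral()
for n in (30, 100, 200):
    q = integrate_01(lambda x: x ** x, n)
    print(n, abs((q - exact) / exact))  # relative error |(Q_n(f) - I(f)) / I(f)|
```

Because x^x behaves like 1 + x log x near 0, the error should decay only algebraically in n rather than at the rapid rate seen for smooth integrands, which is the slow convergence the introduction refers to.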

1 Ordinary Differential Equations

1 Ordinary Differential Equations 1.0 Mathematical Background 1.0.1 Smoothness Definition 1.1 A function f defined on [a, b] is continuous at ξ ∈ [a, b] if $\lim_{x \to \xi} f(x) = f(\xi)$. Remark Note that this implies existence of the quantities on both sides of the equation. f is continuous on [a, b], i.e., f ∈ C[a, b], if f is continuous at every ξ ∈ [a, b]. If $f^{(\nu)}$ is continuous on [a, b], then $f \in C^{(\nu)}[a, b]$. Alternatively, one could start with the following ε-δ definition: Definition 1.2 A function f defined on [a, b] is continuous at ξ ∈ [a, b] if for every ε > 0 there exists a $\delta_\varepsilon > 0$ (that depends on ξ) such that $|f(x) - f(\xi)| < \varepsilon$ whenever $|x - \xi| < \delta_\varepsilon$. [Figure 1] Example (A function that is continuous, but not uniformly) f(x) = x with [figure]. Definition 1.3 A function f is uniformly continuous on [a, b] if it is continuous with a uniform $\delta_\varepsilon$ for all ξ ∈ [a, b], i.e., independent of ξ. Important for ordinary differential equations is Definition 1.4 A function f defined on [a, b] is Lipschitz continuous on [a, b] if there exists a number λ such that $|f(x) - f(y)| \le \lambda |x - y|$ for all x, y ∈ [a, b]. λ is called the Lipschitz constant. Remark 1. In fact, any Lipschitz continuous function is uniformly continuous, and therefore continuous. 2. For a differentiable function with bounded derivative we can take $\lambda = \max_{\xi \in [a,b]} |f'(\xi)|$, and we see that Lipschitz continuity is “between” continuity and differentiability.
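To make Definition 1.4 concrete, here is a small sketch that estimates a Lipschitz constant from difference quotients on a grid. The name `lipschitz_estimate`, the grid size, and the choice of sin on [0, π] are illustrative assumptions. A sampled maximum can only bound the true constant from below.

```python
import math


def lipschitz_estimate(f, a, b, samples=1000):
    """Largest |f(x) - f(y)| / |x - y| over neighbouring points of a uniform grid."""
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(
        abs(f(xs[i + 1]) - f(xs[i])) / (xs[i + 1] - xs[i])
        for i in range(samples)
    )


# For sin on [0, pi], max |f'| = 1, so the estimate should be just below 1.
print(lipschitz_estimate(math.sin, 0.0, math.pi))
```

By the mean value theorem every difference quotient of a differentiable function equals f' at some intermediate point, which is why the estimate cannot exceed max |f'| and approaches it as the grid is refined.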

Simplifying Integration for Logarithmic Singularities R.N.L. Smith

Transactions on Modelling and Simulation vol 12, © 1996 WIT Press, www.witpress.com, ISSN 1743-355X Simplifying integration for logarithmic singularities R.N.L. Smith Department of Applied Mathematics & OR, Cranfield University, RMCS, Shrivenham, Swindon, Wiltshire SN6 8LA, UK Introduction Any implementation of the boundary element method requires consideration of three types of integral: simple non-singular integrals, singular integrals of two kinds and those integrals which are 'nearly singular' (Brebbia and Dominguez 1989). If linear elements are used all integrals may be performed exactly (Jaswon and Symm 1977), but the additional complexity of quadratic or cubic element programs appears to give a significant gain in efficiency, and such elements are therefore often utilised. These elements may be curved and can represent complex shapes and changes in boundary variables more effectively. Unfortunately, more complex elements require more complex integration routines than the routines for straight elements. In dealing with BEM codes simplicity is clearly desirable, particularly when attempting to show others the value of boundary methods. Non-singular integrals are almost invariably dealt with by a simple Gauss-Legendre quadrature and problems with near-singular integrals may be overcome by using the same simple integration scheme given a sufficient number of integration points and some restriction on the minimum distance between a given element and the nearest off-element node. The strongly singular integrals which appear in the usual BEM direct method may be evaluated using the row sum technique which may not be the most efficient available but has the virtue of being easy to explain and implement.

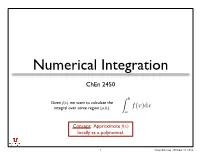

Numerical Integration

Numerical Integration ChEn 2450. Given f(x), we want to calculate the integral over some region [a,b]: $\int_a^b f(x)\,dx$. Concept: Approximate f(x) locally as a polynomial. Integration.key - October 31, 2014

Midpoint Rule. Concept: Approximate f(x) as a constant on the interval [a,b]. Rectangle area: $\int_a^b f(x)\,dx \approx (b - a)\, f\!\left(\frac{b + a}{2}\right)$.
• Requires function value at the midpoint (can be a problem for tabular/discrete data).

Trapezoid Rule. Concept: Approximate f(x) as a linear function on the interval [a,b]. Triangle area plus rectangle area: $\int_a^b f(x)\,dx \approx \frac{b - a}{2}\,(f(b) - f(a)) + f(a)\,(b - a) = \frac{b - a}{2}\,[f(a) + f(b)]$.
• Convenient form for tabular (discrete) data.
• Doesn’t require equally spaced data.
• ∆x = b-a

Simpson’s 1/3 Rule. Concept: Approximate f(x) as a quadratic on the interval [a,b]. $\int_a^b f(x)\,dx \approx \frac{\Delta x}{3}\left[f(a) + 4 f\!\left(\frac{a+b}{2}\right) + f(b)\right]$.
• Requires three equally spaced points on interval [a,b].
• ∆x = (b-a)/2

Simpson’s 3/8 Rule. Concept: Approximate f(x) as a cubic on the interval [a,b]. $\int_a^b f(x)\,dx \approx \frac{3\Delta x}{8}\,(f(x_0) + 3 f(x_1) + 3 f(x_2) + f(x_3))$.
• Requires four equally spaced points on interval [a,b].
• ∆x = (b-a)/3
• $x_i = a + i\Delta x$

Example: Discrete Data Integration. CO2 Emissions in US from electrical power generation. How much CO2 was emitted from electrical
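The four single-interval rules above can be written directly from their formulas. The following is a minimal sketch. The function names and the cubic test integrand are illustrative choices, not part of the slides.

```python
def midpoint(f, a, b):
    """Midpoint rule: f is treated as constant on [a, b]."""
    return (b - a) * f((a + b) / 2)


def trapezoid(f, a, b):
    """Trapezoid rule: f is treated as linear on [a, b]."""
    return (b - a) / 2 * (f(a) + f(b))


def simpson_13(f, a, b):
    """Simpson's 1/3 rule: quadratic through three equally spaced points."""
    dx = (b - a) / 2
    return dx / 3 * (f(a) + 4 * f((a + b) / 2) + f(b))


def simpson_38(f, a, b):
    """Simpson's 3/8 rule: cubic through four equally spaced points."""
    dx = (b - a) / 3
    x = [a + i * dx for i in range(4)]
    return 3 * dx / 8 * (f(x[0]) + 3 * f(x[1]) + 3 * f(x[2]) + f(x[3]))


def cube(x):
    return x ** 3


# The exact integral of x^3 over [0, 2] is 4.
for rule in (midpoint, trapezoid, simpson_13, simpson_38):
    print(rule.__name__, rule(cube, 0.0, 2.0))
```

On this integrand the midpoint and trapezoid rules give 2 and 8, while both Simpson rules recover the exact value up to round-off, since both are exact for polynomials of degree three or lower.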