Propositional Logic: Syntax and Semantics

Recommended publications

CAS LX 522 Syntax I

CAS LX 522 Syntax I, Week 14b. PRO and control. It is likely… This satisfies the EPP in both clauses. The main clause has Mary in SpecIP. The embedded clause has the trace in SpecIP. This specific instance of A-movement, where we move a subject from an embedded clause to a higher clause, is generally called subject raising. Reluctance to leave. Now, consider: Mary is reluctant to leave. This looks very similar to Mary is likely to leave. Can we draw the same kind of tree for it? How many θ-roles does reluctant assign? Reluctant has two θ-roles to assign: one to the one feeling the reluctance (Experiencer), and one to the proposition about which the reluctance holds (Proposition). Leave has one θ-role to assign: to the one doing the leaving (Agent). In Mary is reluctant to leave, what θ-role does Mary get? Mary is doing the leaving, so she gets Agent from leave. Mary is showing the reluctance, so she gets Experiencer from reluctant. And we have a problem: Mary appears to be getting two θ-roles, in violation of the θ-criterion. Reluctant assigns its θ-roles within AP as required, and Mary moves up to SpecIP in the main clause by Spellout. But what gets the θ-role from leave, and what…

The Validities of Pep and Some Characteristic Formulas in Modal Logic

THE VALIDITIES OF PEP AND SOME CHARACTERISTIC FORMULAS IN MODAL LOGIC. Hong Zhang, Huacan He. School of Computer Science, Northwestern Polytechnical University, Xi'an, Shaanxi 710032, P.R. China. Abstract: In this paper, we discuss the relationships between some characteristic formulas in normal modal logic and their frames. We find that the validities of the modal formulas are conditional even though some of them are intuitively valid. Finally, we prove the validities of two formulas of the Position-Exchange-Principle (PEP) proposed by papers 1 to 3 by using modal logic and Kripke's semantics of possible worlds. Key words: Characteristic Formulas, Validity, PEP, Frame. 1. INTRODUCTION Reasoning about knowledge and belief is an important issue in the research of multi-agent systems, and reasoning about other agents' states and actions, which is set up from the main ideas of situational calculus, is one of the most important directions in AI. Papers [1-3] put forward an axiom schema for reasoning about others (RAO) in multi-agent systems, from which a rule called the Position-Exchange-Principle (PEP) was abstracted: C(φ → ψ) → (Cφ → Cψ), where C is a sequence of the agents' belief operators. When the length of C is at least 2, it reflects the mechanism of an agent reasoning about the knowledge of others. For example, if C is replaced with B_i B_j, then the schema becomes B_i B_j(φ → ψ) → (B_i B_j φ → B_i B_j ψ). The axiom schema plays a useful role, like that of modus ponens and axiom K, when simplifying proofs. Though examples were demonstrated as proofs in papers [1-3], from the perspectives of both semantics and syntax the rule is not so compelling.

Syntax and Semantics of Propositional Logic

INTRODUCTION TO LOGIC, Lecture 2: Syntax and Semantics of Propositional Logic. Dr. James Studd. "Logic is the beginning of wisdom." (Thomas Aquinas) Outline: 1 Syntax vs Semantics. 2 Syntax of L1. 3 Semantics of L1. 4 Truth-table methods. Syntax vs. Semantics. Syntax is all about expressions: words and sentences. Examples of syntactic claims: ‘Bertrand Russell’ is a proper noun. ‘likes logic’ is a verb phrase. ‘Bertrand Russell likes logic’ is a sentence. Combining a proper noun and a verb phrase in this way makes a sentence.

The Substitutional Analysis of Logical Consequence

THE SUBSTITUTIONAL ANALYSIS OF LOGICAL CONSEQUENCE. Volker Halbach∗. Draft version · please don’t quote. 2nd June 2016. Consequentia ‘formalis’ vocatur quae in omnibus terminis valet retenta forma consimili. Vel si vis expresse loqui de vi sermonis, consequentia formalis est cui omnis propositio similis in forma quae formaretur esset bona consequentia [...] Iohannes Buridanus, Tractatus de Consequentiis (Hubien 1976, I.3, p. 22f). Abstract. A substitutional account of logical truth and consequence is developed and defended. Roughly, a substitution instance of a sentence is defined to be the result of uniformly substituting nonlogical expressions in the sentence with expressions of the same grammatical category. In particular atomic formulae can be replaced with any formulae containing. The definition of logical truth is then as follows: A sentence is logically true iff all its substitution instances are always satisfied. Logical consequence is defined analogously. The substitutional definition of validity is put forward as a conceptual analysis of logical validity at least for sufficiently rich first-order settings. In Kreisel’s squeezing argument the formal notion of substitutional validity naturally slots in to the place of informal intuitive validity. ∗I am grateful to Beau Mount, Albert Visser, and Timothy Williamson for discussions about the themes of this paper. 1 Formal validity. At the origin of logic is the observation that arguments sharing certain forms never have true premisses and a false conclusion. Similarly, all sentences of certain forms are always true. Arguments and sentences of this kind are formally valid. From the outset logicians have been concerned with the study and systematization of these arguments, sentences and their forms.

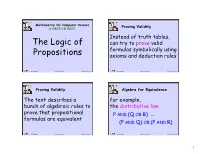

Propositional Logic (PDF)

Mathematics for Computer Science, 6.042J/18.062J. The Logic of Propositions. Albert R Meyer, February 14, 2014. Proving Validity: Instead of truth tables, can try to prove valid formulas symbolically using axioms and deduction rules. The text describes a bunch of algebraic rules to prove that propositional formulas are equivalent. Algebra for Equivalence: for example, the distributive law, P AND (Q OR R) ≡ (P AND Q) OR (P AND R); for example, DeMorgan’s law, NOT(P AND Q) ≡ NOT(P) OR NOT(Q). The set of rules for ≡ in the text are complete: if two formulas are ≡, these rules can prove it. A Proof System: Another approach is to start with some valid formulas (axioms) and deduce more valid formulas using proof rules. Lukasiewicz’ proof system is a particularly elegant example of this idea. It covers formulas whose only logical operators are IMPLIES (→) and NOT. Lukasiewicz’ Proof System: Axioms: 1) (¬P → P) → P; 2) P → (¬P → Q); 3) (P → Q) → ((Q → R) → (P → R)). The only rule: modus ponens. Prove formulas by starting with axioms and repeatedly applying the inference rule.
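
The equivalences and axioms quoted in this excerpt can be checked by brute force for small formulas. The following is a minimal Python sketch, not taken from the course text: it enumerates every truth assignment to confirm the distributive and De Morgan equivalences, the validity of the three Lukasiewicz axioms, and the soundness of modus ponens. The helper names `implies`, `is_valid` and `equivalent` are hypothetical and chosen only for illustration.

```python
from itertools import product

def implies(a, b):
    """Material implication: a -> b."""
    return (not a) or b

def is_valid(formula, arity):
    """True if the formula holds under every assignment to its `arity` variables."""
    return all(formula(*values) for values in product([False, True], repeat=arity))

def equivalent(f, g, arity):
    """True if f and g agree under every truth assignment."""
    return all(f(*v) == g(*v) for v in product([False, True], repeat=arity))

# Distributive law: P AND (Q OR R) == (P AND Q) OR (P AND R)
distributive = equivalent(
    lambda p, q, r: p and (q or r),
    lambda p, q, r: (p and q) or (p and r),
    3,
)

# De Morgan's law: NOT(P AND Q) == NOT(P) OR NOT(Q)
de_morgan = equivalent(
    lambda p, q: not (p and q),
    lambda p, q: (not p) or (not q),
    2,
)

# The three axioms as quoted in the slides.
axiom1 = is_valid(lambda p: implies(implies(not p, p), p), 1)
axiom2 = is_valid(lambda p, q: implies(p, implies(not p, q)), 2)
axiom3 = is_valid(
    lambda p, q, r: implies(implies(p, q), implies(implies(q, r), implies(p, r))),
    3,
)

# Modus ponens is sound: whenever P and P -> Q are true, so is Q.
modus_ponens_sound = is_valid(lambda p, q: implies(p and implies(p, q), q), 2)

print(distributive, de_morgan, axiom1, axiom2, axiom3, modus_ponens_sound)
```

A truth-table check like this only confirms validity; it does not produce a derivation in the proof system, which is the separate task the slides go on to describe.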

Tarski's Threat to the T-Schema

Tarski’s Threat to the T-Schema. MSc Thesis (Afstudeerscriptie) written by Cian Chartier (born November 10th, 1986 in Montréal, Canada) under the supervision of Prof. dr. Frank Veltman, and submitted to the Board of Examiners in partial fulfillment of the requirements for the degree of MSc in Logic at the Universiteit van Amsterdam. Date of the public defense: August 30, 2011. Members of the Thesis Committee: Prof. Dr. Frank Veltman, Prof. Dr. Michiel van Lambalgen, Prof. Dr. Benedikt Löwe, Dr. Robert van Rooij. Abstract: This thesis examines truth theories. First, four relevant programs in philosophy are considered. Second, four truth theories are compared according to a range of criteria. The truth theories are categorised according to Leitgeb’s eight criteria for a truth theory. The four truth theories are then compared with each other based on three new criteria. In this way their relative usefulness in pursuing some of the aims of the four programs is evaluated. This presents a springboard for future work on truth: proposing ideas for different truth theories that advance unambiguously different programs on the basis of the four truth theories proposed. Acknowledgements: I first thank my supervisor Prof. Dr. Frank Veltman for regularly reading and providing constructive feedback, recommending some papers along the way. This thesis has been much improved by his corrections and input, including extensive discussions and suggestions of the ideas proposed here. His patience in working with me on the thesis has been much appreciated. Among the other professors in the ILLC, Dr. Robert van Rooij also provided helpful discussion.

A Validity Framework for the Use and Development of Exported Assessments

A Validity Framework for the Use and Development of Exported Assessments. By María Elena Oliveri, René Lawless, and John W. Young. The authors would like to acknowledge Bob Mislevy, Michael Kane, Kadriye Ercikan, and Maurice Hauck for their valuable input and expertise in reviewing various iterations of the framework as well as Priya Kannan, Don Powers, and James Carlson for their input throughout the technical review process. Copyright © 2015 Educational Testing Service. All Rights Reserved. ETS, the ETS logo, GRADUATE RECORD EXAMINATIONS, GRE, LISTENING. LEARNING. LEADING., TOEIC, and TOEFL are registered trademarks of Educational Testing Service (ETS). EXADEP and EXAMEN DE ADMISIÓN A ESTUDIOS DE POSGRADO are trademarks of ETS. Abstract: In this document, we present a framework that outlines the key considerations relevant to the fair development and use of exported assessments. Exported assessments are developed in one country and are used in countries with a population that differs from the one for which the assessment was developed. Examples of these assessments include the Graduate Record Examinations® (GRE®) and el Examen de Admisión a Estudios de Posgrado™ (EXADEP™), among others. Exported assessments can be used to make inferences about performance in the exporting country or in the receiving country. To illustrate, the GRE can be administered in India and be used to predict success at a graduate school in the United States, or similarly it can be administered in India to predict success at a graduate school in India.

Syntax: Recursion, Conjunction, and Constituency

Syntax: Recursion, Conjunction, and Constituency. Outline: Course Readings; Recursion; Conjunction; Constituency Tests; Auxiliary Verbs. Course Readings: The following readings have been posted to the Moodle course site: Language Files: Chapter 5 (pp. 204-215, 216-220); Language Instinct: Chapter 4 (pp. 74-99). An Interesting Property of our PS Rules. Our Current PS Rules: S → {NP, CP} VP; NP → (D) (A*) N (CP) (PP*); VP → V (NP) {(NP) (CP)} (PP*); PP → P (NP); CP → C S. An Interesting Feature of These Rules: As we saw last time, these rules allow sentences to contain other sentences. A sentence must have a VP in it. A VP can have a CP in it. A CP must have an S in it. Example: [S [NP [N Dave]] [VP [V thinks] [CP [C that] [S he is cool]]]]. Another Interesting Feature of These Rules: These rules also allow noun phrases to contain other noun phrases.

Lecture 1: Propositional Logic

Lecture 1: Propositional Logic. Outline: Syntax; Semantics; Truth tables; Implications and Equivalences; Valid and Invalid arguments; Normal forms; Davis-Putnam Algorithm. Atomic propositions and logical connectives: An atomic proposition is a statement or assertion that must be true or false. Examples of atomic propositions are: “5 is a prime” and “program terminates”. Propositional formulas are constructed from atomic propositions by using logical connectives. Connectives: false, true, not, and, or, conditional (implies), biconditional (equivalent). A typical propositional formula is … The truth value of a propositional formula can be calculated from the truth values of the atomic propositions it contains. Well-formed propositional formulas: The well-formed formulas of propositional logic are obtained by using the construction rules below: An atomic proposition is a well-formed formula. If φ is a well-formed formula, then so is ¬φ. If φ and ψ are well-formed formulas, then so are φ ∧ ψ, φ ∨ ψ, φ → ψ, and φ ↔ ψ. If φ is a well-formed formula, then so is (φ). Alternatively, one can use Backus-Naur Form (BNF): formula ::= Atomic Proposition | ¬ formula | formula ∧ formula | formula ∨ formula | formula → formula | formula ↔ formula | ( formula ). Truth functions: The truth of a propositional formula is a function of the truth values of the atomic propositions it contains. A truth assignment is a mapping that associates a truth value with each of the atomic propositions. Let v be a truth assignment for φ. If we identify 0 with false and 1 with true, we can easily determine the truth value of φ under v. The other logical connectives can be handled in a similar manner. Truth functions are sometimes called Boolean functions. Truth tables for basic logical connectives: A truth table shows whether a propositional formula is true or false for each possible truth assignment.
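
The construction rules and the notion of a truth assignment in this excerpt translate directly into a small recursive evaluator. The following is a minimal Python sketch under stated assumptions: formulas are represented as nested tuples such as `("and", "p", "q")`, atomic propositions are plain strings, and the connective names `not`, `and`, `or`, `implies` and `iff` are labels chosen here, not notation from the lecture. Tuple nesting plays the role of the parenthesis rule.

```python
from itertools import product

def evaluate(formula, assignment):
    """Evaluate a formula (nested tuples) under a truth assignment (dict of bools)."""
    if isinstance(formula, str):              # atomic proposition
        return assignment[formula]
    op, *args = formula
    if op == "not":
        return not evaluate(args[0], assignment)
    left, right = (evaluate(arg, assignment) for arg in args)
    if op == "and":
        return left and right
    if op == "or":
        return left or right
    if op == "implies":
        return (not left) or right
    if op == "iff":
        return left == right
    raise ValueError(f"unknown connective: {op}")

def atoms(formula):
    """Collect the atomic propositions occurring in a formula."""
    if isinstance(formula, str):
        return {formula}
    return set().union(*(atoms(arg) for arg in formula[1:]))

def truth_table(formula):
    """Return one (assignment, value) row per possible truth assignment."""
    names = sorted(atoms(formula))
    rows = []
    for values in product([False, True], repeat=len(names)):
        assignment = dict(zip(names, values))
        rows.append((assignment, evaluate(formula, assignment)))
    return rows

# Hypothetical example formula: (p and q) -> p
example = ("implies", ("and", "p", "q"), "p")
table = truth_table(example)
for assignment, value in table:
    print(assignment, value)
```

A formula with n distinct atomic propositions yields 2^n rows, which is why truth tables are practical only for small formulas.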

1 Logic, Language and Meaning 2 Syntax and Semantics of Predicate

Semantics and Pragmatics 2, Winter 2011, University of Chicago, Handout 1. 1 Logic, language and meaning • A formal system is a set of primitives, some statements about the primitives (axioms), and some method of deriving further statements about the primitives from the axioms. • Predicate logic (calculus) is a formal system consisting of: 1. A syntax defining the expressions of a language. 2. A set of axioms (formulae of the language assumed to be true). 3. A set of rules of inference for deriving further formulas from the axioms. • We use this formal system as a tool for analyzing relevant aspects of the meanings of natural languages. • A formal system is a syntactic object, a set of expressions and rules of combination and derivation. However, we can talk about the relation between this system and the models that can be used to interpret it, i.e. to assign extra-linguistic entities as the meanings of expressions. • Logic is useful when it is possible to translate natural languages into a logical language, thereby learning about the properties of natural language meaning from the properties of the things that can act as meanings for a logical language. 2 Syntax and semantics of Predicate Logic 2.1 The vocabulary of Predicate Logic 1. Individual constants: {d, n, j, ...} 2. Individual variables: {x, y, z, ...} The individual variables and constants are the terms. 3. Predicate constants: {P, Q, R, ...} Each predicate has a fixed and finite number of arguments called its arity or valence. As we will see, this corresponds closely to argument positions for natural language predicates.

Lambda Calculus and the Decision Problem

Lambda Calculus and the Decision Problem. William Gunther. November 8, 2010. Abstract: In 1928, Alonzo Church formalized the λ-calculus, which was an attempt at providing a foundation for mathematics. At the heart of this system was the idea of function abstraction and application. In this talk, I will outline the early history and motivation for λ-calculus, especially in recursion theory. I will discuss the basic syntax and the primary rule: β-reduction. Then I will discuss how one would represent certain structures (numbers, pairs, boolean values) in this system. This will lead into some theorems about fixed points which will allow us to have some fun and (if there is time) to supply a negative answer to Hilbert's Entscheidungsproblem: is there an effective way to give a yes or no answer to any mathematical statement? 1 History: In 1928 Alonzo Church began work on his system. There were two goals of this system: to investigate the notion of 'function' and to create a powerful logical system sufficient for the foundation of mathematics. His main logical system was later (1935) found to be inconsistent by his students Stephen Kleene and J. B. Rosser, but the 'pure' system was proven consistent in 1936 with the Church-Rosser Theorem (discussed in more detail later). An application of λ-calculus is in the area of computability theory. There are three main notions of computability: Herbrand-Gödel-Kleene recursive functions, Turing Machines, and λ-definable functions. Church's Thesis (1935) states that λ-definable functions are exactly the functions that can be "effectively computed" in an intuitive way.
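
The abstract mentions representing numbers, pairs and boolean values in the λ-calculus. The following is a minimal Python sketch of the standard Church encodings, using Python's own `lambda` as a stand-in for λ-abstraction; it is an illustration under that assumption, not the construction from the talk itself. Python evaluates applications eagerly, so function calls here play the role of β-reduction under a strict evaluation order. The helpers `to_int` and `to_bool` are hypothetical names added only to inspect results.

```python
# Church booleans: a boolean selects one of two arguments.
TRUE = lambda x: lambda y: x
FALSE = lambda x: lambda y: y
AND = lambda p: lambda q: p(q)(p)
NOT = lambda p: p(FALSE)(TRUE)

# Church pairs: a pair hands both components to a selector function.
PAIR = lambda a: lambda b: lambda f: f(a)(b)
FST = lambda p: p(TRUE)
SND = lambda p: p(FALSE)

# Church numerals: the numeral n applies a function f exactly n times.
ZERO = lambda f: lambda x: x
SUCC = lambda n: lambda f: lambda x: f(n(f)(x))
ADD = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))

def to_int(n):
    """Decode a Church numeral by counting applications of +1 starting from 0."""
    return n(lambda k: k + 1)(0)

def to_bool(b):
    """Decode a Church boolean into a Python bool."""
    return b(True)(False)

TWO = SUCC(SUCC(ZERO))
THREE = SUCC(TWO)

print(to_int(ADD(TWO)(THREE)))        # decoded sum of the numerals two and three
print(to_bool(AND(TRUE)(FALSE)))      # decoded conjunction
print(to_int(FST(PAIR(TWO)(THREE))))  # decoded first component of the pair
```

Every value above is a function of one argument, which mirrors the point that abstraction and application alone suffice to represent data.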

A Logical Approach to Grammar Description Lionel Clément, Jérôme Kirman, Sylvain Salvati

A logical approach to grammar description. Lionel Clément, Jérôme Kirman, Sylvain Salvati. To cite this version: Lionel Clément, Jérôme Kirman, Sylvain Salvati. A logical approach to grammar description. Journal of Language Modelling, Institute of Computer Science, Polish Academy of Sciences, Poland, 2015, Special issue on High-level methodologies for grammar engineering, 3 (1), pp. 87-143. 10.15398/jlm.v3i1.94. hal-01251222. HAL Id: hal-01251222, https://hal.archives-ouvertes.fr/hal-01251222. Submitted on 19 Jan 2016. Lionel Clément, Jérôme Kirman, and Sylvain Salvati, Université de Bordeaux, LaBRI, France. Abstract: In the tradition of Model Theoretic Syntax, we propose a logical approach to the description of grammars. We combine in one formalism several tools that are used throughout computer science for their power of abstraction: logic and lambda calculus. We propose then a high-level formalism for describing mildly context sensitive grammars and their semantic interpretation. As we rely on the correspondence between logic and finite state automata, our method combines conciseness with effectivity. Keywords: grammar description, logic, Finite State Automata, logical transduction, …