Overview of Mathematical Tools for Intermediate Microeconomics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Principles of MICROECONOMICS an Open Text by Douglas Curtis and Ian Irvine

with Open Texts Principles of MICROECONOMICS an Open Text by Douglas Curtis and Ian Irvine VERSION 2017 – REVISION B ADAPTABLE | ACCESSIBLE | AFFORDABLE Creative Commons License (CC BY-NC-SA) advancing learning Champions of Access to Knowledge OPEN TEXT ONLINE ASSESSMENT All digital forms of access to our high- We have been developing superior on- quality open texts are entirely FREE! All line formative assessment for more than content is reviewed for excellence and is 15 years. Our questions are continuously wholly adaptable; custom editions are pro- adapted with the content and reviewed for duced by Lyryx for those adopting Lyryx as- quality and sound pedagogy. To enhance sessment. Access to the original source files learning, students receive immediate per- is also open to anyone! sonalized feedback. Student grade reports and performance statistics are also provided. SUPPORT INSTRUCTOR SUPPLEMENTS Access to our in-house support team is avail- Additional instructor resources are also able 7 days/week to provide prompt resolu- freely accessible. Product dependent, these tion to both student and instructor inquiries. supplements include: full sets of adaptable In addition, we work one-on-one with in- slides and lecture notes, solutions manuals, structors to provide a comprehensive sys- and multiple choice question banks with an tem, customized for their course. This can exam building tool. include adapting the text, managing multi- ple sections, and more! Contact Lyryx Today! [email protected] advancing learning Principles of Microeconomics an Open Text by Douglas Curtis and Ian Irvine Version 2017 — Revision B BE A CHAMPION OF OER! Contribute suggestions for improvements, new content, or errata: A new topic A new example An interesting new question Any other suggestions to improve the material Contact Lyryx at [email protected] with your ideas. -

Microeconomics III Oligopoly — Preface to Game Theory

Microeconomics III Oligopoly — prefacetogametheory (Mar 11, 2012) School of Economics The Interdisciplinary Center (IDC), Herzliya Oligopoly is a market in which only a few firms compete with one another, • and entry of new firmsisimpeded. The situation is known as the Cournot model after Antoine Augustin • Cournot, a French economist, philosopher and mathematician (1801-1877). In the basic example, a single good is produced by two firms (the industry • is a “duopoly”). Cournot’s oligopoly model (1838) — A single good is produced by two firms (the industry is a “duopoly”). — The cost for firm =1 2 for producing units of the good is given by (“unit cost” is constant equal to 0). — If the firms’ total output is = 1 + 2 then the market price is = − if and zero otherwise (linear inverse demand function). We ≥ also assume that . The inverse demand function P A P=A-Q A Q To find the Nash equilibria of the Cournot’s game, we can use the proce- dures based on the firms’ best response functions. But first we need the firms payoffs(profits): 1 = 1 11 − =( )1 11 − − =( 1 2)1 11 − − − =( 1 2 1)1 − − − and similarly, 2 =( 1 2 2)2 − − − Firm 1’s profit as a function of its output (given firm 2’s output) Profit 1 q'2 q2 q2 A c q A c q' Output 1 1 2 1 2 2 2 To find firm 1’s best response to any given output 2 of firm 2, we need to study firm 1’s profit as a function of its output 1 for given values of 2. -

ECON - Economics ECON - Economics

ECON - Economics ECON - Economics Global Citizenship Program ECON 3020 Intermediate Microeconomics (3) Knowledge Areas (....) This course covers advanced theory and applications in microeconomics. Topics include utility theory, consumer and ARTS Arts Appreciation firm choice, optimization, goods and services markets, resource GLBL Global Understanding markets, strategic behavior, and market equilibrium. Prerequisite: ECON 2000 and ECON 3000. PNW Physical & Natural World ECON 3030 Intermediate Macroeconomics (3) QL Quantitative Literacy This course covers advanced theory and applications in ROC Roots of Cultures macroeconomics. Topics include growth, determination of income, employment and output, aggregate demand and supply, the SSHB Social Systems & Human business cycle, monetary and fiscal policies, and international Behavior macroeconomic modeling. Prerequisite: ECON 2000 and ECON 3000. Global Citizenship Program ECON 3100 Issues in Economics (3) Skill Areas (....) Analyzes current economic issues in terms of historical CRI Critical Thinking background, present status, and possible solutions. May be repeated for credit if content differs. Prerequisite: ECON 2000. ETH Ethical Reasoning INTC Intercultural Competence ECON 3150 Digital Economy (3) Course Descriptions The digital economy has generated the creation of a large range OCOM Oral Communication of significant dedicated businesses. But, it has also forced WCOM Written Communication traditional businesses to revise their own approach and their own value chains. The pervasiveness can be observable in ** Course fulfills two skill areas commerce, marketing, distribution and sales, but also in supply logistics, energy management, finance and human resources. This class introduces the main actors, the ecosystem in which they operate, the new rules of this game, the impacts on existing ECON 2000 Survey of Economics (3) structures and the required expertise in those areas. -

Notes for Math 136: Review of Calculus

Notes for Math 136: Review of Calculus Gyu Eun Lee These notes were written for my personal use for teaching purposes for the course Math 136 running in the Spring of 2016 at UCLA. They are also intended for use as a general calculus reference for this course. In these notes we will briefly review the results of the calculus of several variables most frequently used in partial differential equations. The selection of topics is inspired by the list of topics given by Strauss at the end of section 1.1, but we will not cover everything. Certain topics are covered in the Appendix to the textbook, and we will not deal with them here. The results of single-variable calculus are assumed to be familiar. Practicality, not rigor, is the aim. This document will be updated throughout the quarter as we encounter material involving additional topics. We will focus on functions of two real variables, u = u(x;t). Most definitions and results listed will have generalizations to higher numbers of variables, and we hope the generalizations will be reasonably straightforward to the reader if they become necessary. Occasionally (notably for the chain rule in its many forms) it will be convenient to work with functions of an arbitrary number of variables. We will be rather loose about the issues of differentiability and integrability: unless otherwise stated, we will assume all derivatives and integrals exist, and if we require it we will assume derivatives are continuous up to the required order. (This is also generally the assumption in Strauss.) 1 Differential calculus of several variables 1.1 Partial derivatives Given a scalar function u = u(x;t) of two variables, we may hold one of the variables constant and regard it as a function of one variable only: vx(t) = u(x;t) = wt(x): Then the partial derivatives of u with respect of x and with respect to t are defined as the familiar derivatives from single variable calculus: ¶u dv ¶u dw = x ; = t : ¶t dt ¶x dx c 2016, Gyu Eun Lee. -

FALL 2021 COURSE SCHEDULE August 23 –– December 9, 2021

9/15/21 DEPARTMENT OF ECONOMICS FALL 2021 COURSE SCHEDULE August 23 –– December 9, 2021 Course # Class # Units Course Title Day Time Location Instruction Mode Instructor Limit 1078-001 20457 3 Mathematical Tools for Economists 1 MWF 8:00-8:50 ECON 117 IN PERSON Bentz 47 1078-002 20200 3 Mathematical Tools for Economists 1 MWF 6:30-7:20 ECON 119 IN PERSON Hurt 47 1078-004 13409 3 Mathematical Tools for Economists 1 0 ONLINE ONLINE ONLINE Marein 71 1088-001 18745 3 Mathematical Tools for Economists 2 MWF 6:30-7:20 HLMS 141 IN PERSON Zhou, S 47 1088-002 20748 3 Mathematical Tools for Economists 2 MWF 8:00-8:50 HLMS 211 IN PERSON Flynn 47 2010-100 19679 4 Principles of Microeconomics 0 ONLINE ONLINE ONLINE Keller 500 2010-200 14353 4 Principles of Microeconomics 0 ONLINE ONLINE ONLINE Carballo 500 2010-300 14354 4 Principles of Microeconomics 0 ONLINE ONLINE ONLINE Bottan 500 2010-600 14355 4 Principles of Microeconomics 0 ONLINE ONLINE ONLINE Klein 200 2010-700 19138 4 Principles of Microeconomics 0 ONLINE ONLINE ONLINE Gruber 200 2020-100 14356 4 Principles of Macroeconomics 0 ONLINE ONLINE ONLINE Valkovci 400 3070-010 13420 4 Intermediate Microeconomic Theory MWF 9:10-10:00 ECON 119 IN PERSON Barham 47 3070-020 21070 4 Intermediate Microeconomic Theory TTH 2:20-3:35 ECON 117 IN PERSON Chen 47 3070-030 20758 4 Intermediate Microeconomic Theory TTH 11:10-12:25 ECON 119 IN PERSON Choi 47 3070-040 21443 4 Intermediate Microeconomic Theory MWF 1:50-2:40 ECON 119 IN PERSON Bottan 47 3080-001 22180 3 Intermediate Macroeconomic Theory MWF 11:30-12:20 -

Product Differentiation

Product differentiation Industrial Organization Bernard Caillaud Master APE - Paris School of Economics September 22, 2016 Bernard Caillaud Product differentiation Motivation The Bertrand paradox relies on the fact buyers choose the cheap- est firm, even for very small price differences. In practice, some buyers may continue to buy from the most expensive firms because they have an intrinsic preference for the product sold by that firm: Notion of differentiation. Indeed, assuming an homogeneous product is not realistic: rarely exist two identical goods in this sense For objective reasons: products differ in their physical char- acteristics, in their design, ... For subjective reasons: even when physical differences are hard to see for consumers, branding may well make two prod- ucts appear differently in the consumers' eyes Bernard Caillaud Product differentiation Motivation Differentiation among products is above all a property of con- sumers' preferences: Taste for diversity Heterogeneity of consumers' taste But it has major consequences in terms of imperfectly competi- tive behavior: so, the analysis of differentiation allows for a richer discussion and comparison of price competition models vs quan- tity competition models. Also related to the practical question (for competition authori- ties) of market definition: set of goods highly substitutable among themselves and poorly substitutable with goods outside this set Bernard Caillaud Product differentiation Motivation Firms have in general an incentive to affect the degree of differ- entiation of their products compared to rivals'. Hence, differen- tiation is related to other aspects of firms’ strategies. Choice of products: firms choose how to differentiate from rivals, this impacts the type of products that they choose to offer and the diversity of products that consumers face. -

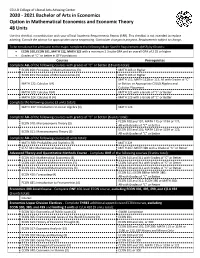

2020-2021 Bachelor of Arts in Economics Option in Mathematical

CSULB College of Liberal Arts Advising Center 2020 - 2021 Bachelor of Arts in Economics Option in Mathematical Economics and Economic Theory 48 Units Use this checklist in combination with your official Academic Requirements Report (ARR). This checklist is not intended to replace advising. Consult the advisor for appropriate course sequencing. Curriculum changes in progress. Requirements subject to change. To be considered for admission to the major, complete the following Major Specific Requirements (MSR) by 60 units: • ECON 100, ECON 101, MATH 122, MATH 123 with a minimum 2.3 suite GPA and an overall GPA of 2.25 or higher • Grades of “C” or better in GE Foundations Courses Prerequisites Complete ALL of the following courses with grades of “C” or better (18 units total): ECON 100: Principles of Macroeconomics (3) MATH 103 or Higher ECON 101: Principles of Microeconomics (3) MATH 103 or Higher MATH 111; MATH 112B or 113; All with Grades of “C” MATH 122: Calculus I (4) or Better; or Appropriate CSULB Algebra and Calculus Placement MATH 123: Calculus II (4) MATH 122 with a Grade of “C” or Better MATH 224: Calculus III (4) MATH 123 with a Grade of “C” or Better Complete the following course (3 units total): MATH 247: Introduction to Linear Algebra (3) MATH 123 Complete ALL of the following courses with grades of “C” or better (6 units total): ECON 100 and 101; MATH 115 or 119A or 122; ECON 310: Microeconomic Theory (3) All with Grades of “C” or Better ECON 100 and 101; MATH 115 or 119A or 122; ECON 311: Macroeconomic Theory (3) All with -

I. Externalities

Economics 1410 Fall 2017 Harvard University SECTION 8 I. Externalities 1. Consider a factory that emits pollution. The inverse demand for the good is Pd = 24 − Q and the inverse supply curve is Ps = 4 + Q. The marginal cost of the pollution is given by MC = 0:5Q. (a) What are the equilibrium price and quantity when there is no government intervention? (b) How much should the factory produce at the social optimum? (c) How large is the deadweight loss from the externality? (d) How large of a per-unit tax should the government impose to achieve the social optimum? 2. In Karro, Kansas, population 1,001, the only source of entertainment available is driving around in your car. The 1,001 Karraokers are all identical. They all like to drive, but hate congestion and pollution, resulting in the following utility function: Ui(f; d; t) = f + 16d − d2 − 6t=1000, where f is consumption of all goods but driving, d is the number of hours of driving Karraoker i does per day, and t is the total number of hours of driving all other Karraokers do per day. Assume that driving is free, that the unit price of food is $1, and that daily income is $40. (a) If an individual believes that the amount of driving he does wont affect the amount that others drive, how many hours per day will he choose to drive? (b) If everybody chooses this number of hours, then what is the total amount t of driving by other persons? (c) What will the utility of each resident be? (d) If everybody drives 6 hours a day, what will the utility level of each Karraoker be? (e) Suppose that the residents decided to pass a law restricting the total number of hours that anyone is allowed to drive. -

Utility with Decreasing Risk Aversion

144 UTILITY WITH DECREASING RISK AVERSION GARY G. VENTER Abstract Utility theory is discussed as a basis for premium calculation. Desirable features of utility functions are enumerated, including decreasing absolute risk aversion. Examples are given of functions meeting this requirement. Calculating premiums for simplified risk situations is advanced as a step towards selecting a specific utility function. An example of a more typical portfolio pricing problem is included. “The large rattling dice exhilarate me as torrents borne on a precipice flowing in a desert. To the winning player they are tipped with honey, slaying hirri in return by taking away the gambler’s all. Giving serious attention to my advice, play not with dice: pursue agriculture: delight in wealth so acquired.” KAVASHA Rig Veda X.3:5 Avoidance of risk situations has been regarded as prudent throughout history, but individuals with a preference for risk are also known. For many decision makers, the value of different potential levels of wealth is apparently not strictly proportional to the wealth level itself. A mathematical device to treat this is the utility function, which assigns a value to each wealth level. Thus, a 50-50 chance at double or nothing on your wealth level may or may not be felt equivalent to maintaining your present level; however, a 50-50 chance at nothing or the value of wealth that would double your utility (if such a value existed) would be equivalent to maintaining the present level, assuming that the utility of zero wealth is zero. This is more or less by definition, as the utility function is set up to make such comparisons possible. -

Nine Lives of Neoliberalism

A Service of Leibniz-Informationszentrum econstor Wirtschaft Leibniz Information Centre Make Your Publications Visible. zbw for Economics Plehwe, Dieter (Ed.); Slobodian, Quinn (Ed.); Mirowski, Philip (Ed.) Book — Published Version Nine Lives of Neoliberalism Provided in Cooperation with: WZB Berlin Social Science Center Suggested Citation: Plehwe, Dieter (Ed.); Slobodian, Quinn (Ed.); Mirowski, Philip (Ed.) (2020) : Nine Lives of Neoliberalism, ISBN 978-1-78873-255-0, Verso, London, New York, NY, https://www.versobooks.com/books/3075-nine-lives-of-neoliberalism This Version is available at: http://hdl.handle.net/10419/215796 Standard-Nutzungsbedingungen: Terms of use: Die Dokumente auf EconStor dürfen zu eigenen wissenschaftlichen Documents in EconStor may be saved and copied for your Zwecken und zum Privatgebrauch gespeichert und kopiert werden. personal and scholarly purposes. Sie dürfen die Dokumente nicht für öffentliche oder kommerzielle You are not to copy documents for public or commercial Zwecke vervielfältigen, öffentlich ausstellen, öffentlich zugänglich purposes, to exhibit the documents publicly, to make them machen, vertreiben oder anderweitig nutzen. publicly available on the internet, or to distribute or otherwise use the documents in public. Sofern die Verfasser die Dokumente unter Open-Content-Lizenzen (insbesondere CC-Lizenzen) zur Verfügung gestellt haben sollten, If the documents have been made available under an Open gelten abweichend von diesen Nutzungsbedingungen die in der dort Content Licence (especially Creative -

M.Sc. (Mathematics), SEM- I Paper - II ANALYSIS – I

M.Sc. (Mathematics), SEM- I Paper - II ANALYSIS – I PSMT102 Note- There will be some addition to this study material. You should download it again after few weeks. CONTENT Unit No. Title 1. Differentiation of Functions of Several Variables 2. Derivatives of Higher Orders 3. Applications of Derivatives 4. Inverse and Implicit Function Theorems 5. Riemann Integral - I 6. Measure Zero Set *** SYLLABUS Unit I. Euclidean space Euclidean space : inner product < and properties, norm Cauchy-Schwarz inequality, properties of the norm function . (Ref. W. Rudin or M. Spivak). Standard topology on : open subsets of , closed subsets of , interior and boundary of a subset of . (ref. M. Spivak) Operator norm of a linear transformation ( : v & }) and its properties such as: For all linear maps and 1. 2. ,and 3. (Ref. C. C. Pugh or A. Browder) Compactness: Open cover of a subset of , Compact subsets of (A subset K of is compact if every open cover of K contains a finite subover), Heine-Borel theorem (statement only), the Cartesian product of two compact subsets of compact (statement only), every closed and bounded subset of is compact. Bolzano-Weierstrass theorem: Any bounded sequence in has a converging subsequence. Brief review of following three topics: 1. Functions and Continuity Notation: arbitary non-empty set. A function f : A → and its component functions, continuity of a function( , δ definition). A function f : A → is continuous if and only if for every open subset V there is an open subset U of such that 2. Continuity and compactness: Let K be a compact subset and f : K → be any continuous function. -

Economic Evaluation Glossary of Terms

Economic Evaluation Glossary of Terms A Attributable fraction: indirect health expenditures associated with a given diagnosis through other diseases or conditions (Prevented fraction: indicates the proportion of an outcome averted by the presence of an exposure that decreases the likelihood of the outcome; indicates the number or proportion of an outcome prevented by the “exposure”) Average cost: total resource cost, including all support and overhead costs, divided by the total units of output B Benefit-cost analysis (BCA): (or cost-benefit analysis) a type of economic analysis in which all costs and benefits are converted into monetary (dollar) values and results are expressed as either the net present value or the dollars of benefits per dollars expended Benefit-cost ratio: a mathematical comparison of the benefits divided by the costs of a project or intervention. When the benefit-cost ratio is greater than 1, benefits exceed costs C Comorbidity: presence of one or more serious conditions in addition to the primary disease or disorder Cost analysis: the process of estimating the cost of prevention activities; also called cost identification, programmatic cost analysis, cost outcome analysis, cost minimization analysis, or cost consequence analysis Cost effectiveness analysis (CEA): an economic analysis in which all costs are related to a single, common effect. Results are usually stated as additional cost expended per additional health outcome achieved. Results can be categorized as average cost-effectiveness, marginal cost-effectiveness,