Sensor and Sensor Fusion Technology in Autonomous Vehicles: a Review

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

Control in Robotics

Mark W. Spong and Masayuki Fujita. Introduction. The interplay between robotics and control theory has a rich history extending back over half a century. We begin this section of the report by briefly reviewing the history of this interplay, focusing on fundamentals: how control theory has enabled solutions to fundamental problems in robotics and how problems in robotics have motivated the development of new control theory. We focus primarily on the early years, as the importance of new results often takes considerable time to be fully appreciated and to have an impact on practical applications. Progress in robotics has been especially rapid in the last decade or two, and the future continues to look bright.

Robotics was dominated early on by the machine tool industry. As such, the early philosophy in the design of robots was to design mechanisms to be as stiff as possible with each axis (joint) controlled independently as a single-input/single-output (SISO) linear system. Point-to-point control enabled simple tasks such as materials transfer and spot welding. Continuous-path tracking enabled more complex tasks such as arc welding and spray painting. Sensing of the external environment was limited or nonexistent. Consideration of more advanced tasks such as assembly required regulation of contact forces and moments. Higher speed operation and higher payload-to-weight ratios required an increased understanding of the complex, interconnected nonlinear dynamics of robots. This requirement motivated the development of new theoretical results in nonlinear, robust, and adaptive control, which in turn enabled more sophisticated applications. Today, robot control systems are highly advanced with integrated force and vision systems.

An Abstract of the Dissertation Of

An abstract of the dissertation of Austin Nicolai for the degree of Doctor of Philosophy in Robotics, presented on September 11, 2019. Title: Augmented Deep Learning Techniques for Robotic State Estimation. Abstract approved: Geoffrey A. Hollinger.

While robotic systems may have once been relegated to structured environments and automation style tasks, in recent years these boundaries have begun to erode. As robots begin to operate in largely unstructured environments, it becomes more difficult for them to effectively interpret their surroundings. As sensor technology improves, the amount of data these robots must utilize can quickly become intractable. Additional challenges include environmental noise, dynamic obstacles, and inherent sensor non-linearities. Deep learning techniques have emerged as a way to efficiently deal with these challenges. While end-to-end deep learning can be convenient, challenges such as validation and training requirements can be prohibitive to its use. In order to address these issues, we propose augmenting the power of deep learning techniques with tools such as optimization methods, physics based models, and human expertise. In this work, we present a principled framework for approaching a problem that allows a user to identify the types of augmentation methods and deep learning techniques best suited to their problem. To validate our framework, we consider three different domains: LIDAR based odometry estimation, hybrid soft robotic control, and sonar based underwater mapping. First, we investigate LIDAR based odometry estimation which can be characterized with both high data precision and availability; ideal for augmenting with optimization methods. We propose using denoising autoencoders (DAEs) to address the challenges presented by modern LIDARs.

Cognitive Radar (STO-TR-SET-227)

NORTH ATLANTIC TREATY ORGANIZATION, SCIENCE AND TECHNOLOGY ORGANIZATION. AC/323(SET-227)TP/947. www.sto.nato.int. STO TECHNICAL REPORT TR-SET-227: Cognitive Radar (Radar cognitif). Final Report of Task Group SET-227. Published October 2020. Distribution and Availability on Back Cover.

The NATO Science and Technology Organization. Science & Technology (S&T) in the NATO context is defined as the selective and rigorous generation and application of state-of-the-art, validated knowledge for defence and security purposes. S&T activities embrace scientific research, technology development, transition, application and field-testing, experimentation and a range of related scientific activities that include systems engineering, operational research and analysis, synthesis, integration and validation of knowledge derived through the scientific method. In NATO, S&T is addressed using different business models, namely a collaborative business model where NATO provides a forum where NATO Nations and partner Nations elect to use their national resources to define, conduct and promote cooperative research and information exchange, and secondly an in-house delivery business model where S&T activities are conducted in a NATO dedicated executive body, having its own personnel, capabilities and infrastructure. The mission of the NATO Science & Technology Organization

Massive Open Online Courses – Anyone Can Access Anywhere at Anytime

Shanlax International Journal of Education (Open Access). K. Suresh, ICSSR Post Doctoral Fellow, Department of Education, Central University of Tamil Nadu, Thiruvarur, Tamil Nadu, India. P. Srinivasan, Professor and Head, Department of Education, Central University of Tamil Nadu, Thiruvarur, Tamil Nadu, India. Manuscript ID: EDU-2020-08032458. Volume: 8. Issue: 3. Month: June. Year: 2020. P-ISSN: 2320-2653. E-ISSN: 2582-1334. Received: 06.04.2020.

Abstract. Information Communication Technology influences all dimensions of education. Especially in distance education, rapid change occurs. Based on the huge availability of online resources like open education resources, distance education can be accessed openly at a low cost. In the 21st century, teaching and learning, Massive Open Online Course (MOOC) is a new trend of learning for digital learners. MOOC is an open education system available on the web. It is a low-cost courseware, and the participants can access the learning content from anywhere and anytime. The MOOCs are categorized cMOOCs, xMOOCs, and Quasi MOOC models. India is a developing country, and it is making steps ahead to digitalization on its processes. e-panchayat, a-district, e-hospital, e-greetings, e-office, e-visa, e-NAM (National Agricultural Market), e-pathshala are the examples for India stood on digitalization. India tried to ensure access, equity, and quality education to the massive. With this aim, the SWAYAM portal initiated, and it was the milestone of Indian distance education and providing open education to massive through MOOCs.

Moocs: a Comparative Analysis Between Indian Scenario and Global Scenario

International Journal of Engineering & Technology, 7 (4.39) (2018) 854-857. Website: www.sciencepubco.com/index.php/IJET. Research paper. MOOCs: A Comparative analysis between Indian scenario and Global scenario. Dr. Gomathi Sankar Jaganathan (1), Dr. N. Sugundan (2), Mr. Siranjeevi Sivakumar (3). (1) Assistant Professor, Saveetha School of Management, Saveetha Institute of Medical and Technical Sciences (SIMATS). (2) Associate Professor, Saveetha School of Management, Saveetha Institute of Medical and Technical Sciences (SIMATS). (3) Assistant Professor, Saveetha School of Management, Saveetha Institute of Medical and Technical Sciences (SIMATS). *Corresponding author E-mail: [email protected]

Abstract: Massive open online courses (MOOCs) are most recent and prominent trends in higher education. MOOCs has witnessed tremendous growth with huge enrolment. Having recorded large enrolment, India has initiated various projects such as NPTEL, IITBX, and SWAYAM. Many online platforms have been developed to provide online courses to encourage continuing education. This study detailed the characteristics of MOOCs along with various online platforms across the world. The authors compare the growth of Indian MOOCs with global scenario and list of providers who develop and deliver online courses and possible challenges of MOOCs implementation in India. Keywords: MOOC, SWAYAM, Online education, NPTEL, Coursera, and edX.

1. Introduction: MOOCs – Massive Open Online Courses are quite new and most conspicuous trends in higher education. It represents learning phenomenon where learners access online educational multimedia materials, and get associated with enormous numbers of other

2. Characteristics of Moocs: • Massive: MOOCs can have enormous of participants. It witnessed more than 81 million registration across the world. It recorded around 23 million new registration in the year 2017 (Class central, 2018).

Learning New Lessons Worksheet

Intelligent Business. Learning new lessons. Worksheet.

A. Before you read – Discuss. Discuss the questions below. Share your knowledge, experience and opinions.

1) Have you attended, or do you intend to attend a university?

2) If so, was it a traditional university with a campus or was it an on-line university?

3) Have you ever tried any form of on-line learning? Describe your experiences.

4) Do you think on-line learning is as effective as traditional lectures, assignments and seminars? Do you think on-line learning is more effective?

5) What are the advantages of on-line learning for governments, university tutors and learners? What are the disadvantages?

B. Comprehension. Read the first three paragraphs and answer the questions.

1) What do the top universities offer? [Find three elements]

2) What do lesser universities offer? [Find four elements]

3) Why does online provision threaten doom for the laggard and mediocre?

4) How did the Open University provide distance teaching in 1971?

5) What are MOOCs?

6) Are Udacity, edX and Coursera MOOCs?

Read paragraphs 4, 5, 6. (The last word of paragraph 6 is rivals.) Mark these statements TRUE or FALSE.

7) Coursera works with several universities in the USA and elsewhere. T/F

8) Futurelearn will not charge for carrying courses on the platform. T/F

9) Marginal Revolution University offers courses in blogging. T/F

10) Universities using online courses can offer cheaper degrees. T/F

Read paragraphs 7, 8, and 9. Which advantages do MOOCs offer? Choose from the list below.

11) a) interactive coursework b) no pause, rewind (or fast forward) buttons c) students learn at their own pace d) universities can offer courses to larger numbers of students e) students in different countries can work together without leaving their homes f) students are all from rich countries

Read paragraphs 10, 11, 12.

Moocs and Google

UNCTAD Advisory Group on Developing skills, knowledge and capacities through innovation: ELearning, MLearning, cloudLearning. Geneva, 10/12/2013. MOOCs and Google. Michel Benard, University Relations Manager, Google.

The first MOOC (Massive Open Online Course) was launched late 2011 by Profs. Peter Norvig and Sebastian Thrun from Stanford University. In the TED video relating his unique experience (http://www.ted.com/talks/peter_norvig_the_100_000_student_classroom.html), Peter Norvig addresses many opportunities and open questions that this introduction has raised. Since then many MOOCs have been created by a large number of universities and institutions worldwide, hosted by platforms like Coursera, Udacity, EdX, Iversity, FutureLearn and many other private or public organizations. MOOCs are now used for teaching science, technology, humanities and many other fields, such as sport, vocational topics or general public interest topics.

In summer 2012, at Google we ran a MOOC on Power Searching. Soon after, we open sourced Course Builder, the platform that we developed on Google technologies to present the course. Since then, we have released four versions of Course Builder adding features such as user-friendly content development, administrative support, dashboards on student performance and behavior, new assessment types including peer review, accessibility, internationalization, etc. A large number of courses have been hosted on Course Builder, with many more in the pipeline. This work started with the observation that we have all the component technology one needs to create a platform for delivering a learning experience similar to other MOOCs that were being offered on Coursera and Udacity. So we set about wiring together these components (YouTube, App Engine, Groups, Apps, Google+ and Hangouts, etc.) to create the first version of Course Builder.

Curriculum Reinforcement Learning for Goal-Oriented Robot Control

MEng Individual Project. Imperial College London, Department of Computing. CuRL: Curriculum Reinforcement Learning for Goal-Oriented Robot Control. Author: Harry Uglow. Supervisor: Dr. Ed Johns. Second Marker: Dr. Marc Deisenroth. June 17, 2019.

Abstract. Deep Reinforcement Learning has risen to prominence over the last few years as a field making strong progress tackling continuous control problems, in particular robotic control which has numerous potential applications in industry. However, Deep RL algorithms alone struggle on complex robotic control tasks where obstacles need to be avoided in order to complete a task. We present Curriculum Reinforcement Learning (CuRL) as a method to help solve these complex tasks by guided training on a curriculum of simpler tasks. We train in simulation, manipulating a task environment in ways not possible in the real world to create that curriculum, and use domain randomisation in an attempt to train pose estimators and end-to-end controllers for sim-to-real transfer. To the best of our knowledge this work represents the first example of reinforcement learning with a curriculum of simpler tasks on robotic control problems.

Acknowledgements. I would like to thank: • Dr. Ed Johns for his advice and support as supervisor. Our discussions helped inform many of the project’s key decisions. • My parents, Mike and Lyndsey Uglow, whose love and support has made the last four years possible.

Contents. 1 Introduction; 1.1 Objectives; 1.2 Contributions; 1.3 Report Structure; 2 Background; 2.1 Machine learning (ML); 2.2 Artificial Neural Networks (ANNs); 2.2.1 Strengths of ANNs

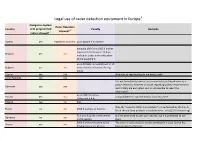

Legal Use of Radar Detection Equipment in Europe

Legal use of radar detection equipment in Europe¹

| Country | Navigation system with programmed radars allowed?² | Radar detectors allowed?³ | Penalty | Remarks |
|---|---|---|---|---|
| Austria | yes | legislation not clear | up to 4000 € if no license | |
| Belgium | yes | no | between 100 € and 1000 € and/or imprisonment between 15 days and up to 1 year and confiscation of the equipment | |
| Bulgaria | yes | no | up to 50 BGN and withdrawal of 10 control points of control driving ticket | |
| Cyprus | yes | yes | | Attempts to regulate legally are being made |
| Czech Republic | yes | yes | | |
| Denmark | yes | yes | | It is not forbidden to own or use a radar detector (also known as a police detector). However all canals regarding police, rescue services and military are encrypted, so it is not possible to reach the information. |
| Estonia | yes | no | up to 100 fine unites (1 fine unit = 4 €) | not prohibited to own the device, but so to use it |
| Finland | yes | no | fine | |
| France | no | no | 1500 € and loss of 3 points | Only the "assistant d'aide à la conduite" are authorized by the law. A list of the conform products is available here: http://a2c.infocert.org/ |
| Germany | no | no | 75 € and 4 points in the central traffic registry | it is not prohibited to own such devices, but it is prohibited to use them |
| Greece | yes | no | 2000 € and confiscation of the driving licence for 30 days | The use of a radar detector can be permitted if a state licence has been granted to the user |
| Hungary | yes | yes | | |
| Iceland | yes | yes | | |
| Ireland | no | no | no specific penalty | |

yes (the Police also provides a map of from €761,00 to €3.047,00 (Art.

LONG RANGE Radar/Laser Detector User's Manual R7

R7 LONG RANGE Radar/Laser Detector User’s Manual. © 2019 Uniden America Corporation, Irving, Texas. Issue 1, March 2019. Printed in Korea.

CUSTOMER CARE. At Uniden®, we care about you! If you need assistance, please do NOT return this product to your place of purchase. Save your receipt/proof of purchase for warranty. Quickly find answers to your questions by: • Reading this User’s Manual. • Visiting our customer support website at www.uniden.com. Images in this manual may differ slightly from your actual product.

DISCLAIMER: Radar detectors are illegal in some states. Some states prohibit mounting any object on your windshield. Check applicable law in your state and any state in which you use the product to verify that using and mounting a radar detector is legal. Uniden radar detectors are not manufactured and/or sold with the intent to be used for illegal purposes. Drive safely and exercise caution while using this product. Do not change settings of the product while driving. Uniden expects consumer’s use of these products to be in compliance with all local, state, and federal law. Uniden expressly disclaims any liability arising out of or related to your use of this product.

CONTENTS. CUSTOMER CARE; R7 OVERVIEW; FEATURES

Getting Started with Motionfx Sensor Fusion Library in X-CUBE-MEMS1 Expansion for Stm32cube

UM2220 User manual. Getting started with MotionFX sensor fusion library in X-CUBE-MEMS1 expansion for STM32Cube.

Introduction. The MotionFX is a middleware library component of the X-CUBE-MEMS1 software and runs on STM32. It provides real-time motion-sensor data fusion. It also performs gyroscope bias and magnetometer hard iron calibration. This library is intended to work with ST MEMS only. The algorithm is provided in static library format and is designed to be used on STM32 microcontrollers based on the ARM® Cortex®-M0+, ARM® Cortex®-M3, ARM® Cortex®-M4 or ARM® Cortex®-M7 architectures. It is built on top of STM32Cube software technology to ease portability across different STM32 microcontrollers. The software comes with sample implementation running on an X-NUCLEO-IKS01A2, X-NUCLEO-IKS01A3 or X-NUCLEO-IKS02A1 expansion board on a NUCLEO-F401RE, NUCLEO-L476RG, NUCLEO-L152RE or NUCLEO-L073RZ development board.

UM2220 - Rev 8 - April 2021. www.st.com. For further information contact your local STMicroelectronics sales office.

1 Acronyms and abbreviations

Table 1. List of acronyms

| Acronym | Description |
|---|---|
| API | Application programming interface |
| BSP | Board support package |
| GUI | Graphical user interface |
| HAL | Hardware abstraction layer |
| IDE | Integrated development environment |

2 MotionFX middleware library in X-CUBE-MEMS1 software expansion for STM32Cube

2.1 MotionFX overview. The MotionFX library expands the functionality of the X-CUBE-MEMS1 software. The library acquires data from the accelerometer, gyroscope (6-axis fusion) and magnetometer (9-axis fusion) and provides real-time motion-sensor data fusion.

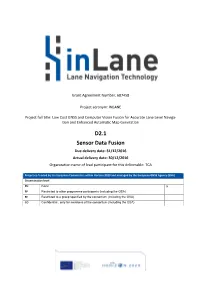

D2.1 Sensor Data Fusion V1

Grant Agreement Number: 687458. Project acronym: INLANE. Project full title: Low Cost GNSS and Computer Vision Fusion for Accurate Lane Level Navigation and Enhanced Automatic Map Generation. D2.1 Sensor Data Fusion. Due delivery date: 31/12/2016. Actual delivery date: 30/12/2016. Organization name of lead participant for this deliverable: TCA. Project co-funded by the European Commission within Horizon 2020 and managed by the European GNSS Agency (GSA).

Dissemination level: PU, Public (x); PP, Restricted to other programme participants (including the GSA); RE, Restricted to a group specified by the consortium (including the GSA); CO, Confidential, only for members of the consortium (including the GSA).

Document Control Sheet. Deliverable number: D2.1. Deliverable responsible: TeleConsult Austria. Workpackage: 2. Editor: Axel Koppert. Author(s), in alphabetical order: Axel Koppert (Organisation: TCA, E-mail: [email protected]).

Document Revision History (modifications introduced)

| Version | Date | Modification Reason | Modified by |
|---|---|---|---|
| V0.1 | 18/11/2016 | Table of Contents | Axel Koppert |
| V0.2 | 05/12/2016 | Filling the document with content | Axel Koppert |
| V0.3 | 19/12/2016 | Internal Review | François Fischer |
| V1.0 | 23/12/2016 | First final version after internal review | Claudia Fösleitner |

Abstract. This Deliverable is the first release of the Report on the development of the sensor fusion and vision based software modules. The report presents the INLANE sensor-data fusion and GNSS processing approach. This first report release presents the status of the development (tasks 2.1 and 2.5) at M12.

Legal Disclaimer. The information in this document is provided “as is”, and no guarantee or warranty is given that the information is fit for any particular purpose.