Two-Stage Dimension Reduction for Noisy High-Dimensional Images and Application to Cryogenic Electron Microscopy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Relatively Recent Case

Why do we call the Cinderella of research “fundamental”? (by M. Brunori) Not even in the PNRR are there hopes of change or courageous initiatives for basic research. Yet in the life sciences, discoveries born of curiosity-driven research projects, which need time to deliver results, are not rare. Fellows of the Accademia dei Lincei. (edited by Maurizio Brunori, Professor Emeritus of Chemistry and Biochemistry, Sapienza Università di Roma, President Emeritus of the Classe di Scienze FMN of the Accademia dei Lincei) In the life sciences, innovative discoveries born of basic research projects are not infrequent: projects begun in an attempt to understand some important property of a living being, mysterious but evidently necessary if it has been conserved over the course of evolution. These are projects started out of intellectual curiosity, but they require freedom of initiative, a multi-year commitment and a great deal of courage, because they are hard to solve. An extraordinary success known to many is the one achieved ten years ago by two extraordinary researchers, Emmanuelle Charpentier and Jennifer Doudna, who in December received from the King of Sweden the Nobel Prize in Chemistry with the following citation: “for the development of a new method for genome editing”. In 2018, on the occasion of a keynote lecture that Charpentier gave at the Accademia Nazionale dei Lincei, I had published a piece on the HuffPost Blog commenting on the importance of the discovery of CRISPR/Cas9, a molecular cut-and-sew kit that makes it possible to modify, with unprecedented precision and efficiency, the genome of any living being: bacteria, plants, animals, including humans. NOBEL PRIZE Nobel Chemistry Never before had two women won the Nobel Prize together.

The Pharmacologist 2006 December

Vol. 48 Number 4 The Pharmacologist December 2006. 2006 YEAR IN REVIEW: The Presidential Torch is passed from James E. Barrett to Elaine Sanders-Bush; Experimental Biology 2006 in San Francisco; ASPET Members attend the 15th World Congress in China; Young Scientists at EB 2006; ASPET Awards Winners at EB 2006. Inside this Issue: ASPET Election Online; EB ’07 Program Grid; Neuropharmacology Division Mixer at SFN 2006; New England Chapter Meeting Summary; SEPS Meeting Summary and Abstracts; MAPS Meeting Summary and Abstracts; Call for Late-Breaking Abstracts for EB ’07. A Publication of the American Society for Pharmacology and Experimental Therapeutics - ASPET. Volume 48 Number 4, 2006. The Pharmacologist is published and distributed by the American Society for Pharmacology and Experimental Therapeutics. Editor: Suzie Thompson. Editorial Advisory Board: Bryan F. Cox, Ph.D.; Ronald N. Hines, Ph.D.; Terrence J. Monks, Ph.D. Council: President Elaine Sanders-Bush, Ph.D.; President-Elect Kenneth P. Minneman, Ph.D.; Past President James E. Barrett, Ph.D.; Secretary/Treasurer Lynn Wecker, Ph.D.; Secretary/Treasurer-Elect Annette E. Fleckenstein, Ph.D.; Past Secretary/Treasurer Patricia K. Sonsalla, Ph.D.; Councilors Bryan F. Cox, Ph.D., Ronald N. Hines, Ph.D., Terrence J. Monks, Ph.D.; Chair, Board of Publications Trustees. Contents: News: 2006 Year in Review; Contributors for 2006; Election 2007; EB 2007 Program Grid. Features: Journals; Public Affairs & Government Relations; Division News; Division for Neuropharmacology; Centennial Update; Members in the News.

Jacques Dubochet (75): It Is Currently Not Easy to Get Hold of Jacques Dubochet

Nobel Prize. Text: Mathias Plüss. Photos: Anoush Abrar. JACQUES DUBOCHET (75) from the canton of Vaud is a person like you and me. And perhaps for that very reason the most unusual Nobel laureate imaginable. He took a short train ride with our author. It is currently not easy to get hold of Jacques Dubochet. But once you are with him, you have him entirely to yourself. Not only does he not let himself be distracted for a second; he also takes an interest in the person in front of him. He likes to invite colleagues from every possible discipline to his home for a meal and ply them with questions. As we walk to the metro in Lausanne, he says to me: «And what kind of person are you?» Dubochet was born in 1942 in Aigle VD. He grew up in Valais and Vaud. He struggled at school but, thanks to understanding teachers, was able to earn his Matura. He studied physics and then switched to biology. The stations of his career were Lausanne, Geneva, Basel and Heidelberg. From 1987 to 2007 he was Professor of Biophysics at the University of Lausanne. He is married to an artist from Basel and has two adult children. Since becoming emeritus he has remained active: for example in the study programme «Biology and Society», which he developed, and as a local politician for the SP in Morges VD, where he lives. Das Magazin N° 47, 2017. «I UNDERSTAND NOTHING ABOUT CHEMISTRY» Trouble at school, Nobel laureate today: Jacques Dubochet of Morges VD. In early October the Nobel Committee in Stockholm announced that Jacques Dubochet [...] the 2017 Nobel Prize in Chemistry … Luc Montagnier, the discoverer of the AIDS virus, also developed very bizarre ideas.

October 2017 Current Affairs

Unique IAS Academy – October 2017 Current Affairs (0422 4204182, 9884267599; 1st Street, Gandhipuram, Coimbatore) 1. Which state to host the 36th edition of National Games of India in 2018? [A] Goa [B] Assam [C] Kerala [D] Jharkhand 2. Which Indian entrepreneur has won the prestigious International Business Person of the Year award in London for innovative IT solutions? [A] Birendra Sasmal [B] Uday Lanje [C] Madhira Srinivasu [D] Ranjan Kumar 3. The United Nations’ (UN) International Day of Non-Violence is observed on which date? [A] October 4 [B] October 1 [C] October 2 [D] October 3 4. Which country to host the 6th edition of World Government Summit (WGS)? [A] Israel [B] United States [C] India [D] UAE 5. Who of the following has/have won the Nobel Prize in Physiology or Medicine 2017? [A] Jeffrey C. Hall [B] Michael Rosbash [C] Michael W. Young [D] All of the above 6. Which state government has launched a state-wide campaign against child marriage and dowry on the occasion of Mahatma Gandhi’s birth anniversary? [A] Odisha [B] Bihar [C] Uttar Pradesh [D] Rajasthan 7. The 8th Conference of Association of SAARC Speakers and Parliamentarians to be held in which country? [A] China [B] India [C] Sri Lanka [D] Nepal 8. Which committee has drafted the 3rd National Wildlife Action Plan (NWAP) for 2017-2031? [A] Krishna Murthy committee [B] JC Kala committee [C] Prabhakar Reddy committee [D] K C Patan committee 9.

Molecular Geometry and Molecular Graphics: Natta's Polypropylene And

Molecular geometry and molecular graphics: Natta's polypropylene and beyond Guido Raos Dip. di Chimica, Materiali e Ing. Chimica “G. Natta”, Politecnico di Milano Via L. Mancinelli 7, 20131 Milano, Italy [email protected] Abstract. In this introductory lecture I will try to summarize Natta's contribution to chemistry and materials science. The research by his group, which earned him the Nobel Prize in 1963, provided unprecedented control over the synthesis of macromolecules with well-defined three-dimensional structures. I will emphasize how this structure is the key for the properties of these materials, or for that matter for any molecular object. More generally, I will put Natta's research in a historical context, by discussing the pervasive importance of molecular geometry in chemistry, from the 19th century up to the present day. Advances in molecular graphics, alongside those in experimental and computational methods, are allowing chemists, materials scientists and biologists to appreciate the structure and properties of ever more complex materials. Keywords: molecular geometry, stereochemistry, chirality, polymers, self-assembly, Giulio Natta To be presented at the 18th International Conference on Geometry and Graphics, Politecnico di Milano, August 2018: http://www.icgg2018.polimi.it/ 1 Introduction: the birth of stereochemistry Modern chemistry was born in the years spanning the transition from the 18th to the 19th century. Two key figures were Antoine Lavoisier (1743-1794), whose emphasis on quantitative measurements helped to transform alchemy into a science on an equal footing with physics, and John Dalton (1766-1844), whose atomic theory provided a simple rationalization for the way chemical elements combine with each other to form compounds.

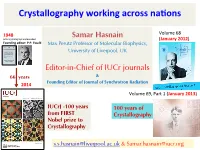

Samar Hasnain

Crystallography working across nations. Samar Hasnain, Max Perutz Professor of Molecular Biophysics, University of Liverpool, UK; Editor-in-Chief of IUCr journals & Founding Editor of Journal of Synchrotron Radiation. [email protected] & [email protected] 1948: Acta Crystallographica launched (founding editor: P.P. Ewald). 66 years: Volume 68 (January 2012); Volume 69, Part 1 (January 2013). 2014: IUCrJ. 100 years of Crystallography, from FIRST Nobel prize to Crystallography. IUCr facts: 53 member countries; 42 Adhering Bodies (including 4 Regional Associates: ACA, AsCA, ECA, LACA); 23 Commissions; 9 Journals (including IUCrJ launched in 2014); IUCr Congress every 3 years (24th IUCr Congress, Hyderabad, August 2017). Michele Zema, Project Manager for IYCr2014. The IUCr is a member of since 1947. EMC5, Oujda, Oct 2013. IUCr Journal milestones: 1948: Acta Crystallographica launched (founding editor: P.P. Ewald). 1968: Acta Crystallographica split into Section A: Foundations of Crystallography and Section B: Structural Science. 1968: Journal of Applied Crystallography launched. 1983: Acta Crystallographica Section C: Crystal Structure Communications launched. 1991: Adoption of CIF. 1993: Acta Crystallographica Section D: Biological Crystallography launched. 1994: Journal of Synchrotron Radiation launched (founding editors: S.S. Hasnain, J.R. Helliwell and H. Kamitsubo). 1999: Online access. Other founding editors named: A.J.C. Wilson, A. Guinier, S.C. Abrahams, J.P. Glusker.

Marie Skłodowska-Curie Actions: Over 20 Years of European Support for Researchers’ Work

Marie Skłodowska-Curie Actions: Over 20 years of European support for researchers’ work Since 1994, the Marie Skłodowska-Curie Actions have provided grants to train excellent researchers at all stages of their careers, be they doctoral candidates or highly experienced researchers, while encouraging transnational, inter-sectoral and interdisciplinary mobility. In 1996, the programme was named after the double Nobel Prize winner Marie Skłodowska-Curie to honour and spread the values she stood for. To date, more than 120 000 researchers have participated in the programme with many more benefiting from it, among them nine Nobel laureates and an Oscar winner. Marie Skłodowska-Curie Actions in the future Building on the success of the programme over more than twenty years, the Marie Skłodowska-Curie Actions will continue to fund a new generation of outstanding, early-career researchers under Horizon Europe, the new European research and innovation programme for 2021-2027. The Commission has proposed a budget of EUR 6.8 billion for Marie Skłodowska-Curie Actions under Horizon Europe which will now be the subject of negotiations with the European Parliament and Council. Stakeholders will have an opportunity to have their say in autumn 2018 to help shape the specific Marie Skłodowska-Curie Actions funding schemes for the period 2021-2027. WHY WERE THE MARIE SKŁODOWSKA-CURIE ACTIONS CREATED? Research and innovation are the backbone of the economy. Scientific discoveries drive the development of new products and services, boosting economic growth [...] as organisations involved in research: academic institutions, international research organisations, private businesses and NGOs. The Marie Skłodowska-Curie Actions are open to excellent researchers in all disciplines, from fundamental research to market take-up and innovation services.

NFAP Policy Brief » October 2019

NATIONAL FOUNDATION FOR AMERICAN POLICY NFAP POLICY BRIEF » OCTOBER 2019 IMMIGRANTS AND NOBEL PRIZES: 1901-2019 EXECUTIVE SUMMARY Immigrants have been awarded 38%, or 36 of 95, of the Nobel Prizes won by Americans in Chemistry, Medicine and Physics since 2000. In 2019, the U.S. winner of the Nobel Prize in Physics (James Peebles) and one of the two American winners of the Nobel Prize in Chemistry (M. Stanley Whittingham) were immigrants to the United States. This showing by immigrants in 2019 is consistent with recent history and illustrates the contributions of immigrants to America. In 2018, Gérard Mourou, an immigrant from France, won the Nobel Prize in Physics. In 2017, the sole American winner of the Nobel Prize in Chemistry was an immigrant, Joachim Frank, a Columbia University professor born in Germany. Immigrant Rainer Weiss, who was born in Germany and came to the United States as a teenager, was awarded the 2017 Nobel Prize in Physics, sharing it with two other Americans, Kip S. Thorne and Barry C. Barish. In 2016, all 6 American winners of the Nobel Prize in economics and scientific fields were immigrants.

Table 1. U.S. Nobel Prize Winners in Chemistry, Medicine and Physics: 2000-2019

| Category | Immigrant | Native-Born | Percentage of Immigrant Winners |
|---|---|---|---|
| Physics | 14 | 19 | 42% |
| Chemistry | 12 | 21 | 36% |
| Medicine | 10 | 19 | 35% |
| TOTAL | 36 | 59 | 38% |

Source: National Foundation for American Policy, Royal Swedish Academy of Sciences, George Mason University Institute for Immigration Research.

Between 1901 and 2019, immigrants have been awarded 35%, or 105 of 302, of the Nobel Prizes won by Americans in Chemistry, Medicine and Physics.

Structural Studies of the β2-Adrenergic Receptor Gs Complex

Structural Studies of the β2-Adrenergic Receptor Gs Complex Gerwin Westfield,1,2 Soren Rasmussen,3 Min Su,1 Brian Kobilka,3 and Georgios Skiniotis 1,2 1 Life Sciences Institute, University of Michigan, 210 Washtenaw Ave., Ann Arbor, MI 48109-2216 2 Department of Biological Chemistry, University of Michigan, 210 Washtenaw Ave., Ann Arbor, MI 48109-2216 3 Department of Molecular and Cellular Physiology and Medicine, Stanford University, 279 Campus Drive, Stanford, CA 94305-5345 G-protein-coupled receptors (GPCRs) are seven-helix transmembrane proteins responsible for integrating extracellular stimuli into an intracellular response [1]. When an extracellular ligand binds to the receptor, a conformational change occurs that is transmitted to the intracellularly bound G-protein trimer. G-proteins regulate many cellular functions including metabolic enzymes, ion channels, and transporters. Due to their central role in physiological responses, GPCRs constitute ~30% of all prescription drug targets. Determining the structure of these important receptors in complex with their coupled G-protein will help to understand how they regulate cellular signals, as well as help to create new therapeutics for the treatment of several diseases. Although several crystal structures of G-proteins and GPCRs have been solved independently [2], the structure of these proteins together in a complex has defied researchers for the last 50 years [3]. In collaboration with the lab of Brian Kobilka we are trying to resolve the architecture of a GPCR-G-protein complex with the application of single-particle EM. The β2-adrenergic receptor Gs complex (β2AR-Gs) is approximately 140 kDa, consisting of the transmembrane receptor and the G-protein heterotrimer of Gsα and βγ.

SHALOM NWODO CHINEDU from Evolution to Revolution

Covenant University, Km. 10 Idiroko Road, Canaan Land, P.M.B 1023, Ota, Ogun State, Nigeria. Website: www.covenantuniversity.edu.ng. 18TH INAUGURAL LECTURE. From Evolution to Revolution: Biochemical Disruptions and Emerging Pathways for Securing Africa's Future. SHALOM NWODO CHINEDU. INAUGURAL LECTURE SERIES Vol. 9, No. 1, March, 2019. Shalom Nwodo Chinedu, Ph.D, Professor of Biochemistry (Enzymology & Molecular Genetics), Department of Biochemistry, Covenant University, Ota. Media & Corporate Affairs, Covenant University. Tel: +234-8115762473, 08171613173, 07066553463. Covenant University Press. ISSN: 2006-0327. Public Lecture Series. Vol. 9, No.1, March, 2019. THE FOUNDATION 1. PROTOCOL The Chancellor and Chairman, Board of Regents of Covenant University, Dr David O. Oyedepo; the Vice-President (Education), Living Faith Church World-Wide (LFCWW), Pastor (Mrs) Faith A. Oyedepo; esteemed members of the Board of Regents; the Vice-Chancellor, Professor AAA. Atayero; the Deputy Vice-Chancellor; the

Kobilka GPCR

NIH Public Access Author Manuscript. Biochim Biophys Acta. Author manuscript; available in PMC 2007 May 24. Published in final edited form as: Biochim Biophys Acta. 2007 April; 1768(4): 794–807. G Protein Coupled Receptor Structure and Activation. Brian K. Kobilka, Department of Molecular and Cellular Physiology, Stanford University School of Medicine. Abstract: G protein coupled receptors (GPCRs) are remarkably versatile signaling molecules. The members of this large family of membrane proteins are activated by a spectrum of structurally diverse ligands, and have been shown to modulate the activity of different signaling pathways in a ligand specific manner. In this manuscript I will review what is known about the structure and mechanism of activation of GPCRs focusing primarily on two model systems, rhodopsin and the β2 adrenoceptor. Keywords: GPCR; 7TM; structure; conformational changes; efficacy. INTRODUCTION G protein coupled receptors (GPCRs) represent the largest family of membrane proteins in the human genome and the richest source of targets for the pharmaceutical industry. There has been remarkable progress in the field of GPCR biology during the past two decades. Notable milestones include the cloning of the first GPCR genes, and the sequencing of the human genome revealing the size of the GPCR family and the number of orphan GPCRs. Moreover, there is a growing appreciation that GPCR regulation and signaling is much more complex than originally envisioned, and includes signaling through G protein independent pathways [2–4]. Consequently, it has been proposed that the term GPCR be abandoned in favor of 7 transmembrane or 7TM receptors.

They Captured Life in Atomic Detail

THE NOBEL PRIZE IN CHEMISTRY 2017 POPULAR SCIENCE BACKGROUND They captured life in atomic detail Jacques Dubochet, Joachim Frank and Richard Henderson are awarded the Nobel Prize in Chemistry 2017 for their development of an effective method for generating three-dimensional images of the molecules of life. Using cryo-electron microscopy, researchers can now freeze biomolecules mid-movement and portray them at atomic resolution. This technology has taken biochemistry into a new era. Over the last few years, numerous astonishing structures of life’s molecular machinery have filled the scientific literature (figure 1): Salmonella’s injection needle for attacking cells; proteins that confer resistance to chemotherapy and antibiotics; molecular complexes that govern circadian rhythms; light-capturing reaction complexes for photosynthesis and a pressure sensor of the type that allows us to hear. These are just a few examples of the hundreds of biomolecules that have now been imaged using cryo-electron microscopy (cryo-EM). When researchers began to suspect that the Zika virus was causing the epidemic of brain-damaged newborns in Brazil, they turned to cryo-EM to visualise the virus. Over a few months, three-dimensional (3D) images of the virus at atomic resolution were generated and researchers could start searching for potential targets for pharmaceuticals. Figure 1. Over the last few years, researchers have published atomic structures of numerous complicated protein complexes. a. A protein complex that governs the circadian rhythm. b. A sensor of the type that reads pressure changes in the ear and allows us to hear. c. The Zika virus. Jacques Dubochet, Joachim Frank and Richard Henderson have made ground-breaking discoveries that have enabled the development of cryo-EM.