UNIX Unleashed Table of Contents Unix Unleashed

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Fundamentals of UNIX Lab 5.4.6 – Listing Directory Information (Estimated Time: 30 Min.)

Objectives:
• Learn to display directory and file information
• Use the ls (list files) command with various options
• Display hidden files
• Display files and file types
• Examine and interpret the results of a long file listing
• List individual directories
• List directories recursively

Background: In this lab, the student will use the ls command, which displays the contents of a directory. The command lists all files and directories within the current directory or within the specified directory or directories. If no pathname is given as an argument, ls displays the contents of the current directory. If a pathname is specified, ls lists the subdirectories and files in that directory. By default, ls produces a wide listing that shows only file and directory names. The many options available make ls one of the more flexible and useful UNIX commands.

Command Format: ls [-option(s)] [pathname[s]]

Tools / Preparation:
a) Before starting this lab, the student should review Chapter 5, Section 4 – Listing Directory Contents.
b) The student will need the following:
1. A login user ID, for example user2, and a password assigned by their instructor.
2. A computer running the UNIX operating system with CDE.
3. Networked computers in classroom.

Use the diagram of the sample Class File system directory tree to assist with this lab.

Fundamentals UNIX 2.0, Lab 5.4.6. Copyright 2002, Cisco Systems, Inc.
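The listing behavior described in the lab can be approximated in a few lines of Python. This is only a sketch, not how ls itself is implemented: the function name `list_dir` and its parameters `show_hidden` and `classify` are made up for illustration and loosely mirror `ls -a` and `ls -F`. Unlike `ls -a`, the sketch does not report the `.` and `..` entries.

```python
import os
import tempfile


def list_dir(path=".", show_hidden=False, classify=False):
    """Return sorted entry names, loosely like `ls`, `ls -a`, and `ls -F`."""
    names = []
    with os.scandir(path) as entries:
        for entry in entries:
            # Plain `ls` skips names that start with a dot (hidden files).
            if entry.name.startswith(".") and not show_hidden:
                continue
            # `ls -F` marks directories with a trailing slash.
            suffix = "/" if classify and entry.is_dir() else ""
            names.append(entry.name + suffix)
    return sorted(names)


# Demo on a throwaway directory so the contents are known in advance.
with tempfile.TemporaryDirectory() as demo:
    os.mkdir(os.path.join(demo, "dir1"))
    open(os.path.join(demo, "file1"), "w").close()
    open(os.path.join(demo, ".profile"), "w").close()

    plain = list_dir(demo)
    with_hidden = list_dir(demo, show_hidden=True)
    classified = list_dir(demo, classify=True)

print(plain)        # ['dir1', 'file1']
print(with_hidden)  # ['.profile', 'dir1', 'file1']
print(classified)   # ['dir1/', 'file1']
```

Calling `list_dir()` with no arguments lists the current directory, which matches the default described in the Background section.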

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation Sakis Kasampalis Kongens Lyngby 2010 IMM-MSC-2010-63 Technical University of Denmark Department Of Informatics Building 321, DK-2800 Kongens Lyngby, Denmark Phone +45 45253351, Fax +45 45882673 [email protected] www.imm.dtu.dk

Abstract

In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks. Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

Administering UniData on UNIX Platforms

UniData: Administering UniData on UNIX Platforms (UDT-720-ADMU-1)

Notices

Edition
Publication date: July, 2008
Book number: UDT-720-ADMU-1
Product version: UniData 7.2

Copyright © Rocket Software, Inc. 1988-2010. All Rights Reserved.

Trademarks
The following trademarks appear in this publication, grouped by trademark owner:
Rocket Software, Inc.: Rocket Software™, Dynamic Connect®, RedBack®, SystemBuilder™, UniData®, UniVerse™, U2™, U2.NET™, U2 Web Development Environment™, wIntegrate®
Microsoft Corporation: Microsoft® .NET, Microsoft® Office Excel®, Outlook®, Word, Windows®, Windows® 7, Windows Vista®
Sun Microsystems, Inc.: Java™ and all Java-based trademarks and logos
X/Open Company Limited: UNIX®

The above trademarks are property of the specified companies in the United States, other countries, or both. All other products or services mentioned in this document may be covered by the trademarks, service marks, or product names as designated by the companies who own or market them.

License agreement
This software and the associated documentation are proprietary and confidential to Rocket Software, Inc., are furnished under license, and may be used and copied only in accordance with the terms of such license and with the inclusion of the copyright notice.

System Calls

System calls

We will investigate several issues related to system calls. Read chapter 12 of the book.

Linux system call categories:
• file management
• process management
• error handling
Note that these categories are loosely defined and much is behind included, e.g. communication. Why?

File management system call hierarchy: you may not see some topics as part of “file management”, e.g., sockets.
Process management system call hierarchy.
Error handling hierarchy.

Error Handling

Anything can fail, and system calls are no exception. Try to read a file that does not exist!

Error number: errno
• every process contains a global variable errno
• errno is set to 0 when the process is created
• when an error occurs, errno is set to a specific code associated with the cause of the error
• trying to open a file that does not exist sets errno to 2

Error constants are defined in errno.h. Here are the first few entries of errno.h on OS X 10.6.4:

#define EPERM 1 /* Operation not permitted */
#define ENOENT 2 /* No such file or directory */
#define ESRCH 3 /* No such process */
#define EINTR 4 /* Interrupted system call */
#define EIO 5 /* Input/output error */
#define ENXIO 6 /* Device not configured */
#define E2BIG 7 /* Argument list too long */
#define ENOEXEC 8 /* Exec format error */
#define EBADF 9 /* Bad file descriptor */
#define ECHILD 10 /* No child processes */
#define EDEADLK 11 /* Resource deadlock avoided */

Common mistake for displaying errno, from the Linux errno man page:

Description of the perror() library function.
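The claim that opening a nonexistent file sets errno to 2 (ENOENT) can be checked from Python, which exposes the same error codes through its standard `errno` module. This is a small sketch rather than part of the original slides; the helper name `try_open` and the path used are hypothetical.

```python
import errno
import os


def try_open(path):
    """Return (code, symbolic name, message) if opening fails, else None."""
    try:
        with open(path):
            return None
    except OSError as exc:
        # exc.errno carries the same numeric code a C program reads from errno.
        return exc.errno, errno.errorcode[exc.errno], os.strerror(exc.errno)


# Hypothetical path that is assumed not to exist on the machine.
result = try_open("/no/such/dir/no_such_file.txt")
print(result)
```

`os.strerror` plays roughly the role that `perror()` or `strerror()` plays in C: it turns the numeric code into a human-readable message.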

MultiView Terminal Emulator User Guide © 2008 by FutureSoft, Inc.

MultiView Terminal Emulator User Guide © 2008 by FutureSoft, Inc. All rights reserved.

MultiView User Guide

This manual, and the software described in it, is furnished under a license agreement. Information in this document is subject to change without notice and does not represent a commitment on the part of FutureSoft. FutureSoft assumes no responsibility or liability for any errors or inaccuracies that may appear in this manual. No part of this manual may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or otherwise, without the prior, written permission of FutureSoft, Inc. MultiView 2007, MultiView 2000 Server Edition, MultiView 2008 Server Edition, MultiView Catalyst, MultiView License Manager, MultiView DeskTop and Host Support Server are tradenames of FutureSoft, Inc.

Edition 1 May 2008 Document #E-MVUG-MV2007-P053108 Last Updated: 102308
FutureSoft, Inc. 12012 Wickchester Lane, Suite 600 Houston, Texas 77079 USA Printed in the USA 1.800.989.8908 [email protected] http://www.futuresoft.com

Table of Contents
Chapter 1 Introduction
Introduction to MultiView 2007
Minimum Requirements
Contacting FutureSoft Support
Chapter 2 Installation and Configuration
Installing MultiView

AVR244: AVR UART as ANSI Terminal Interface

8-bit Microcontroller Application Note

Features
• Make use of standard terminal software as user interface to your application.
• Enables use of a PC keyboard as input and ASCII graphics to display status and control information.
• Drivers for ANSI/VT100 Terminal Control included.
• Interactive menu interface included.

Introduction
This application note describes some basic routines to interface the AVR to a terminal window using the UART (hardware or software). The routines use a subset of the ANSI Color Standard to position the cursor and choose text modes and colors. Routines for simple menu handling are also implemented. The routines can be used to implement a human interface through an ordinary terminal window, using interactive menus and selections. This is particularly useful for debugging and diagnostics purposes. The routines can be used as a basic interface for implementing more complex terminal user interfaces. To better understand the code, an introduction to ‘escape sequences’ is given below.

Escape Sequences
The special terminal functions mentioned (e.g. text modes and colors) are selected using ANSI escape sequences. The AVR sends these sequences to the connected terminal, which in turn executes the associated commands. The escape sequences are strings of bytes starting with an escape character (ASCII code 27) followed by a left bracket ('['). The rest of the string decides the specific operation. For instance, the command '1m' selects bold text, and the full escape sequence thus becomes 'ESC[1m'. There must be no spaces between the characters, and the commands are case sensitive. The various operations used in this application note are described below.
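The structure of an escape sequence described above (ESC, then '[', then the command) can be illustrated with a short Python sketch. This is not the application note's AVR driver code. The helper names `csi` and `move_cursor` are invented for this example, and the reset ('0m') and cursor-position ('row;colH') commands are taken from the general ANSI/VT100 convention rather than from the excerpt, which only names '1m'.

```python
ESC = "\x1b"  # the escape character, ASCII code 27


def csi(command):
    """Build a full escape sequence: ESC, a left bracket, then the command."""
    return f"{ESC}[{command}"


BOLD = csi("1m")   # the 'ESC[1m' example from the text
RESET = csi("0m")  # assumption: standard ANSI "all attributes off"


def move_cursor(row, col):
    """Assumption: standard ANSI cursor-position command (1-based row;col)."""
    return csi(f"{row};{col}H")


# On an ANSI-capable terminal this prints "status" in bold, then resets.
print(f"{BOLD}status{RESET}")

# repr() shows the raw bytes that would be sent over the UART.
print(repr(BOLD))
print(repr(move_cursor(5, 10)))
```

Note that the sequences contain no spaces and that the final letter is case sensitive, as the text points out.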

Filesystems HOWTO

Filesystems HOWTO

Table of Contents
Filesystems HOWTO
Martin Hinner < [email protected]>, http://martin.hinner.info
1. Introduction
2. Volumes
3. DOS FAT 12/16/32, VFAT
4. High Performance FileSystem (HPFS)
5. New Technology FileSystem (NTFS)
6. Extended filesystems (Ext, Ext2, Ext3)
7. Macintosh Hierarchical Filesystem - HFS
8. ISO 9660 - CD-ROM filesystem
9. Other filesystems

Serial (RS-232) Commands

Chapter 8: Serial (RS-232) Commands

Overview
The 7330 Controller has two serial port connectors on the rear panel of the controller, labeled RS232-1 and RS232-2. Either port can be configured as the Console port, the port that you use to enter commands to the repeater controller and to perform firmware updates. Whichever port is not being used as the Console port can be used as the Auxiliary port. The 7330 Repeater firmware accepts commands on the Console port. This serial port has a dedicated command queue so that commands can be processed without being delayed by user commands from the DTMF decoders. Commands entered via the serial port have the same format as commands entered via DTMF. The Auxiliary port is currently unused. This chapter describes the uses of the Console port, the command formats, sending a text file of commands, managing files in your controller, and configuring the serial ports.

Using the Console Port
The Console port has a number of different uses and sets of commands depending on what firmware is running in the 7330 Controller. By default, the 7330 Repeater firmware is controlling the radio equipment attached to the controller. Other firmware installed in the controller, called SBOOT, allows you to manage the files stored in the flash memory of the controller. When power is first applied to the controller, the firmware outputs the following message on the Console port:

S-COM 7330 Repeater V3.3

This message tells you what firmware is running and its version.

Interfacing the ESP8266 Wireless Terminal

Interfacing the ESP8266 Wireless Terminal
Ondřej Hruška, Katedra měření, FEL ČVUT
March 2, 2017

Contents
1 Introduction
2 Feature overview
2.1 Terminal implementation
3 Interfacing the terminal
3.1 UART connection
3.2 Debug port
3.3 Control codes and escape sequences
3.3.1 Escape sequences
3.3.2 Colors and attributes
3.3.3 Cursor movement
3.3.4 Clearing commands
3.3.5 Screen scrolling
3.3.6 Cursor memory
3.4 System commands
3.4.1 Query commands
3.4.2 Changing screen size
3.4.3 Factory reset
3.5 User input
4 WiFi configuration
5 Useful links

1 Introduction
The purpose of this document is to present the ESP8266 Wireless Terminal firmware and describe how the module can be interfaced by an external microcontroller. This document is divided into three sections: the first part explains the internal makeup of the module and its possibilities, then we move on to the supported control sequences and details of the communication protocol, and in the last part the wireless settings are discussed.

2 Feature overview
The module implements a simple, VT100-compatible terminal emulator with a screen of up to 25x80 characters, controlled by ANSI escape sequences for colors, cursor movement and screen manipulation. It’s capable of displaying received characters, as well as receiving input from the keyboard or mouse and sending those back over the serial line. The user can access the terminal screen using their web browser thanks to a tiny built-in webserver, after connecting to the module over WiFi.

Introduction to UNIX Manual

UNIX: Introduction to UNIX Manual
Michael Gribskov
V2018.3, 24 August 2018

Contents
Connecting to servers
Windows
MobaXterm (recommended)
PUTTY
OS X/MacOS/Linux
SSH
Cyberduck
Edit server files
Transferring files
Purdue University Specific

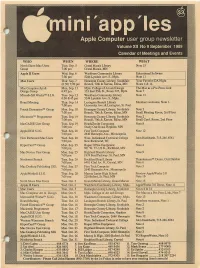

Apple Computer User Group Newsletter, Volume XII No 9, September 1989

Apple Computer user group newsletter, Volume XII No 9, September 1989

Calendar of Meetings and Events

| WHO | WHEN | WHERE | WHAT |
| --- | --- | --- | --- |
| North Shore Mac Users Group | Tues. Sep. 5, 7:00 pm | Grand Marais Library, Grand Marais, MN | Note 15 |
| Apple II Users | Wed. Sep. 6, 7:30 pm | Washburn Community Library, 5244 Lyndale Ave. S., Mpls. | Educational Software, Note 11 |
| Mac Users | Thur. Sep. 7, 7:00 pm | Hennepin County Library, Southdale Branch, 70th & Xerxes, Edina, MN | Your Favorite DA Night (6:30), Notes 4 & 14 |
| Mac Computer Art & Design Group | Mon. Sep. 11, 6:45 pm | Mpls. College of Art and Design, 133 East 25th St., Room 325, Mpls. | The Mac as a Pre-Press Link, Note 7 |
| MicroSoft® Works™ S.I.G. | Tues. Sep. 12, 6:30-8:45 pm | Washburn Community Library, 5244 Lyndale Ave. S., Mpls. | Note 13 |
| Board Meeting | Thur. Sep. 14, 7:00 pm | Lexington Branch Library, University Ave. & Lexington, St. Paul | Members welcome, Note 1 |
| Fourth Dimension™ Group | Mon. Sep. 18, 7:00 pm | Hennepin County Library, Southdale Branch, 70th & Xerxes, Edina, MN, Small Meeting Room, 2nd Floor | Note 2 |
| Macintosh™ Programmer | Tues. Sep. 19, 7:00 pm | Hennepin County Library, Southdale Branch, 70th & Xerxes, Edina, MN, Small Conf. Room, 2nd Floor | Note 2 |
| MacCAD/E User Group | Tues. Sep. 19, 7:00 pm | Heath/Zenith Computers, Shady Oak Road, Hopkins, MN | Note 8 |
| Apple IIGS S.I.G. | | | |

Automatic Detection of Interesting Cellular Automata

Automatic Detection of Interesting Cellular Automata Qitian Liao Electrical Engineering and Computer Sciences University of California, Berkeley Technical Report No. UCB/EECS-2021-150 http://www2.eecs.berkeley.edu/Pubs/TechRpts/2021/EECS-2021-150.html May 21, 2021 Copyright © 2021, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Acknowledgement First I would like to thank my faculty advisor, professor Dan Garcia, the best mentor I could ask for, who graciously accepted me to his research team and constantly motivated me to be the best scholar I could. I am also grateful to my technical advisor and mentor in the field of machine learning, professor Gerald Friedland, for the opportunities he has given me. I also want to thank my friend, Randy Fan, who gave me the inspiration to write about the topic. This report would not have been possible without his contributions. I am further grateful to my girlfriend, Yanran Chen, who cared for me deeply. Lastly, I am forever grateful to my parents, Faqiang Liao and Lei Qu: their love, support, and encouragement are the foundation upon which all my past and future achievements are built. Automatic Detection of Interesting Cellular Automata by Qitian Liao Research Project Submitted to the Department of Electrical Engineering and Computer Sciences, University of California at Berkeley, in partial satisfaction of the requirements for the degree of Master of Science, Plan II.