Towards NEXP Versus BPP?


Recommended publications


Notes for Lecture 2

Notes on Complexity Theory. Last updated: December, 2011. Lecture 2. Jonathan Katz. 1 Review. The running time of a Turing machine M on input x is the number of "steps" M takes before it halts. Machine M is said to run in time T(·) if for every input x the running time of M(x) is at most T(|x|). (In particular, this means it halts on all inputs.) The space used by M on input x is the number of cells written to by M on all its work tapes (a cell that is written to multiple times is only counted once); M is said to use space T(·) if for every input x the space used during the computation of M(x) is at most T(|x|). We remark that these time and space measures are worst-case notions; i.e., even if M runs in time T(n) for only a fraction of the inputs of length n (and uses less time for all other inputs of length n), the running time of M is still T. (Average-case notions of complexity have also been considered, but are somewhat more difficult to reason about. We may cover this later in the semester; or see [1, Chap. 18].) Recall that a Turing machine M computes a function f : {0,1}* → {0,1}* if M(x) = f(x) for all x. We will focus most of our attention on boolean functions, a context in which it is more convenient to phrase computation in terms of languages. A language is simply a subset of {0,1}*.

Interactive Proof Systems and Alternating Time-Space Complexity

Theoretical Computer Science 113 (1993) 55-73, Elsevier. Interactive proof systems and alternating time-space complexity. Lance Fortnow* and Carsten Lund**, Department of Computer Science, University of Chicago, 1100 E. 58th Street, Chicago, IL 60637, USA. Abstract. Fortnow, L. and C. Lund, Interactive proof systems and alternating time-space complexity, Theoretical Computer Science 113 (1993) 55-73. We show a rough equivalence between alternating time-space complexity and a public-coin interactive proof system with the verifier having a polynomial-related time-space complexity. Special cases include the following: • All of NC has interactive proofs, with a log-space polynomial-time public-coin verifier, vastly improving the best previous lower bound of LOGCFL for this model (Fortnow and Sipser, 1988). • All languages in P have interactive proofs with a polynomial-time public-coin verifier using o(log² n) space. • All exponential-time languages have interactive proof systems with public-coin polynomial-space exponential-time verifiers. To achieve better bounds, we show how to reduce a k-tape alternating Turing machine to a 1-tape alternating Turing machine with only a constant factor increase in time and space. 1. Introduction. In 1981, Chandra et al. [4] introduced alternating Turing machines, an extension of nondeterministic computation where the Turing machine can make both existential and universal moves. In 1985, Goldwasser et al. [10] and Babai [1] introduced interactive proof systems, an extension of nondeterministic computation consisting of two players, an infinitely powerful prover and a probabilistic polynomial-time verifier. The prover will try to convince the verifier of the validity of some statement.

The Complexity Zoo

The Complexity Zoo. Scott Aaronson, www.ScottAaronson.com. LaTeX Translation by Chris Bourke [email protected]. 417 classes and counting. Contents: 1 About This Document; 2 Introductory Essay; 2.1 Recommended Further Reading; 2.2 Other Theory Compendia; 2.3 Errors?; 3 Pronunciation Guide; 4 Complexity Classes; 5 Special Zoo Exhibit: Classes of Quantum States and Probability Distributions; 6 Acknowledgements; 7 Bibliography. 1 About This Document. What is this? Well, it's a PDF version of the website www.ComplexityZoo.com typeset in LaTeX using the complexity package. Well, what's that? The original Complexity Zoo is a website created by Scott Aaronson which contains a (more or less) comprehensive list of Complexity Classes studied in the area of theoretical computer science known as Computational Complexity. I took on the (mostly painless, thank god for regular expressions) task of translating the Zoo's HTML code to LaTeX for two reasons. First, as a regular Zoo patron, I thought, "what better way to honor such an endeavor than to spruce up the cages a bit and typeset them all in beautiful LaTeX." Second, I thought it would be a perfect project to develop complexity, a LaTeX package I've created that defines commands to typeset (almost) all of the complexity classes you'll find here (along with some handy options that allow you to conveniently change the fonts with a single option parameter). To get the package, visit my own home page at http://www.cse.unl.edu/~cbourke/.

When Subgraph Isomorphism Is Really Hard, and Why This Matters for Graph Databases (Ciaran McCreesh, Patrick Prosser, Christine Solnon, James Trimble)

When Subgraph Isomorphism is Really Hard, and Why This Matters for Graph Databases. Ciaran McCreesh, Patrick Prosser, Christine Solnon, James Trimble. To cite this version: Ciaran McCreesh, Patrick Prosser, Christine Solnon, James Trimble. When Subgraph Isomorphism is Really Hard, and Why This Matters for Graph Databases. Journal of Artificial Intelligence Research, Association for the Advancement of Artificial Intelligence, 2018, 61, pp. 723-759. 10.1613/jair.5768. hal-01741928. HAL Id: hal-01741928, https://hal.archives-ouvertes.fr/hal-01741928. Submitted on 26 Mar 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Journal of Artificial Intelligence Research 61 (2018) 723-759. Submitted 11/17; published 03/18. Ciaran McCreesh [email protected], Patrick Prosser [email protected], University of Glasgow, Glasgow, Scotland. Christine Solnon [email protected], INSA-Lyon, LIRIS, UMR5205, F-69621, France. James Trimble [email protected], University of Glasgow, Glasgow, Scotland. Abstract. The subgraph isomorphism problem involves deciding whether a copy of a pattern graph occurs inside a larger target graph.

The Polynomial Hierarchy

THE POLYNOMIAL HIERARCHY. Although the complexity classes we shall study now are in one sense byproducts of our definition of NP, they have a remarkable life of their own. 17.1 OPTIMIZATION PROBLEMS. Optimization problems have not been classified in a satisfactory way within the theory of P and NP; it is these problems that motivate the immediate extensions of this theory beyond NP. Let us take the traveling salesman problem as our working example. In the problem TSP we are given the distance matrix of a set of cities; we want to find the shortest tour of the cities. We have studied the complexity of the TSP within the framework of P and NP only indirectly: We defined the decision version TSP (D), and proved it NP-complete (corollary to Theorem 9.7). For the purpose of understanding better the complexity of the traveling salesman problem, we now introduce two more variants. EXACT TSP: Given a distance matrix and an integer B, is the length of the shortest tour equal to B? Also, TSP COST: Given a distance matrix, compute the length of the shortest tour. The four variants can be ordered in "increasing complexity" as follows: TSP (D); EXACT TSP; TSP COST; TSP. Each problem in this progression can be reduced to the next. For the last three problems this is trivial; for the first two one has to notice that the reduction in the corollary to Theorem 9.7 proving that TSP (D) is NP-complete can be used with DP.
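The "trivial" reductions among the last three variants above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are hypothetical, the TSP solver is a brute-force search that is usable only on tiny inputs, and `tsp_decision` goes straight through TSP COST, which is a shortcut and not the TSP (D) to EXACT TSP reduction the excerpt attributes to the corollary of Theorem 9.7.

```python
from itertools import permutations


def tour_length(dist, tour):
    """Length of the closed tour visiting the cities in the given order."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def tsp(dist):
    """TSP: return a shortest tour (brute force, exponential time)."""
    n = len(dist)
    # Fix city 0 as the start so rotations of one tour are not enumerated twice.
    candidates = ([0, *rest] for rest in permutations(range(1, n)))
    return min(candidates, key=lambda tour: tour_length(dist, tour))


def tsp_cost(dist):
    """TSP COST via TSP: find a shortest tour, then measure it."""
    return tour_length(dist, tsp(dist))


def exact_tsp(dist, bound):
    """EXACT TSP via TSP COST: is the optimum exactly `bound`?"""
    return tsp_cost(dist) == bound


def tsp_decision(dist, bound):
    """TSP (D) answered directly from TSP COST (a shortcut, see lead-in)."""
    return tsp_cost(dist) <= bound


if __name__ == "__main__":
    # Hypothetical 4-city symmetric instance, chosen only for illustration.
    dist = [
        [0, 1, 9, 2],
        [1, 0, 3, 9],
        [9, 3, 0, 4],
        [2, 9, 4, 0],
    ]
    print(tsp(dist), tsp_cost(dist), exact_tsp(dist, 10), tsp_decision(dist, 9))
```

Each function calls only the variant one step further along the progression, which is the sense in which a solver for a later problem immediately yields a solver for an earlier one.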

Delegating Computation: Interactive Proofs for Muggles∗

Electronic Colloquium on Computational Complexity, Revision 1 of Report No. 108 (2017). Delegating Computation: Interactive Proofs for Muggles∗. Shafi Goldwasser (MIT and Weizmann Institute, [email protected]), Yael Tauman Kalai (Microsoft Research, [email protected]), Guy N. Rothblum (Weizmann Institute, [email protected]). Abstract. In this work we study interactive proofs for tractable languages. The (honest) prover should be efficient and run in polynomial time, or in other words a "muggle". The verifier should be super-efficient and run in nearly-linear time. These proof systems can be used for delegating computation: a server can run a computation for a client and interactively prove the correctness of the result. The client can verify the result's correctness in nearly-linear time (instead of running the entire computation itself). Previously, related questions were considered in the Holographic Proof setting by Babai, Fortnow, Levin and Szegedy, in the argument setting under computational assumptions by Kilian, and in the random oracle model by Micali. Our focus, however, is on the original interactive proof model where no assumptions are made on the computational power or adaptiveness of dishonest provers. Our main technical theorem gives a public coin interactive proof for any language computable by a log-space uniform boolean circuit with depth d and input length n. The verifier runs in time n · poly(d, log(n)) and space O(log(n)), the communication complexity is poly(d, log(n)), and the prover runs in time poly(n). In particular, for languages computable by log-space uniform NC (circuits of polylog(n) depth), the prover is efficient, the verifier runs in time n · polylog(n) and space O(log(n)), and the communication complexity is polylog(n).

Interactive Proofs

Interactive proofs. April 12, 2014.
[72] László Babai. Trading group theory for randomness. In Proc. 17th STOC, pages 421–429. ACM Press, 1985. doi:10.1145/22145.22192.
[89] László Babai and Shlomo Moran. Arthur-Merlin games: A randomized proof system and a hierarchy of complexity classes. J. Comput. System Sci., 36(2):254–276, 1988. doi:10.1016/0022-0000(88)90028-1.
[99] László Babai, Lance Fortnow, and Carsten Lund. Nondeterministic exponential time has two-prover interactive protocols. In Proc. 31st FOCS, pages 16–25. IEEE Comp. Soc. Press, 1990. doi:10.1109/FSCS.1990.89520. See item 1991.108.
[108] László Babai, Lance Fortnow, and Carsten Lund. Nondeterministic exponential time has two-prover interactive protocols. Comput. Complexity, 1(1):3–40, 1991. doi:10.1007/BF01200056. Full version of 1990.99.
[136] Sanjeev Arora, László Babai, Jacques Stern, and Z. (Elizabeth) Sweedyk. The hardness of approximate optima in lattices, codes, and systems of linear equations. In Proc. 34th FOCS, pages 724–733, Palo Alto CA, 1993. IEEE Comp. Soc. Press. doi:10.1109/SFCS.1993.366815. Conference version of item 1997.160.
[160] Sanjeev Arora, László Babai, Jacques Stern, and Z. (Elizabeth) Sweedyk. The hardness of approximate optima in lattices, codes, and systems of linear equations. J. Comput. System Sci., 54(2):317–331, 1997. doi:10.1006/jcss.1997.1472. Full version of 1993.136.
[111] László Babai, Lance Fortnow, Noam Nisan, and Avi Wigderson. BPP has subexponential time simulations unless EXPTIME has publishable proofs. In Proc.

Ex. 8 Complexity 1. By Savitch's Theorem NL ⊆ DSPACE(log² n)

Ex. 8 Complexity. 1. By Savitch's theorem NL ⊆ DSPACE(log² n) ⊆ DSPACE(n). We saw in one of the previous exercises that DSPACE(n² f(n)) ≠ DSPACE(f(n)). We also saw NL ⊂ PSPACE, so we conclude NL ⊂ DSPACE(n) ⊂ DSPACE(n³) ⊂ PSPACE but DSPACE(n) ≠ DSPACE(n³), as needed. 2. (a) We emulate the running of the s-t-conn algorithm in parallel for two paths (that is, we maintain two pointers, both start at s, and we guess separately the next step for each of them). We raise a flag as soon as the two pointers differ. We stop updating each of the two pointers as soon as it gets to t. If both got to t and the flag is raised, we return true. (b) As A ∈ NL, by the Immerman–Szelepcsényi theorem (NL = co-NL) we know that Ā ∈ NL. As C = Ā ∩ s-t-conn and s-t-conn ∈ NL, we get that C ∈ NL. 3. If L ∈ NICE, then taking "quit" as reject we get an NP machine for L (when x ∉ L we always reject), and by taking "quit" as accept we get a coNP machine for L (if x ∈ L we always accept), and so NICE ⊆ NP ∩ coNP. For the other direction, if L is in NP ∩ coNP, then there is one NP machine M1(x, y) solving L, and another NP machine M2(x, z) solving coL. We can therefore define a new machine that guesses the guess y for M1, and the guess z for M2.
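The chain of inclusions in solution 1 can be written out explicitly. The sketch below assumes the earlier exercise's separation is being applied with f(n) = n, and that the claim being proved is NL ≠ PSPACE; neither is stated in the excerpt itself.

```latex
\begin{align*}
\mathrm{NL} &\subseteq \mathrm{DSPACE}(\log^2 n)
  && \text{(Savitch's theorem)} \\
 &\subseteq \mathrm{DSPACE}(n)
  && (\log^2 n = O(n)) \\
 &\subsetneq \mathrm{DSPACE}(n^3)
  && \bigl(\mathrm{DSPACE}(n^2 f(n)) \neq \mathrm{DSPACE}(f(n)) \text{ with } f(n) = n\bigr) \\
 &\subseteq \mathrm{PSPACE}.
\end{align*}
% One strict step in the chain is enough to conclude NL \subsetneq PSPACE.
```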

Simple Doubly-Efficient Interactive Proof Systems for Locally

Electronic Colloquium on Computational Complexity, Revision 3 of Report No. 18 (2017). Simple doubly-efficient interactive proof systems for locally-characterizable sets. Oded Goldreich∗, Guy N. Rothblum†. September 8, 2017. Abstract. A proof system is called doubly-efficient if the prescribed prover strategy can be implemented in polynomial-time and the verifier's strategy can be implemented in almost-linear-time. We present direct constructions of doubly-efficient interactive proof systems for problems in P that are believed to have relatively high complexity. Specifically, such constructions are presented for t-CLIQUE and t-SUM. In addition, we present a generic construction of such proof systems for a natural class that contains both problems and is in NC (and also in SC). The proof systems presented by us are significantly simpler than the proof systems presented by Goldwasser, Kalai and Rothblum (JACM, 2015), let alone those presented by Reingold, Rothblum, and Rothblum (STOC, 2016), and can be implemented using a smaller number of rounds. Contents: 1 Introduction; 1.1 The current work; 1.2 Relation to prior work; 1.3 Organization and conventions; 2 Preliminaries: The sum-check protocol; 3 The case of t-CLIQUE; 4 The general result; 4.1 A natural class: locally-characterizable sets; 4.2 Proof of Theorem 1; 4.3 Generalization: round versus computation trade-off; 4.4 Extension to a wider class; 5 The case of t-SUM; References; Appendix: An MA proof system for locally-characterizable sets. ∗Department of Computer Science, Weizmann Institute of Science, Rehovot, Israel.

Glossary of Complexity Classes

Appendix A: Glossary of Complexity Classes. Summary: This glossary includes self-contained definitions of most complexity classes mentioned in the book. Needless to say, the glossary offers a very minimal discussion of these classes, and the reader is referred to the main text for further discussion. The items are organized by topics rather than by alphabetic order. Specifically, the glossary is partitioned into two parts, dealing separately with complexity classes that are defined in terms of algorithms and their resources (i.e., time and space complexity of Turing machines) and complexity classes defined in terms of non-uniform circuits (and referring to their size and depth). The algorithmic classes include time-complexity based classes (such as P, NP, coNP, BPP, RP, coRP, PH, E, EXP, and NEXP) and the space complexity classes L, NL, RL, and PSPACE. The non-uniform classes include the circuit classes P/poly as well as NC^k and AC^k. Definitions and basic results regarding many other complexity classes are available at the constantly evolving Complexity Zoo. A Preliminaries. Complexity classes are sets of computational problems, where each class contains problems that can be solved with specific computational resources. To define a complexity class one specifies a model of computation, a complexity measure (like time or space), which is always measured as a function of the input length, and a bound on the complexity of problems in the class. We follow the tradition of focusing on decision problems, but refer to these problems using the terminology of promise problems

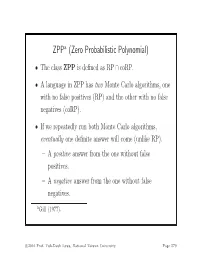

ZPP (Zero Probabilistic Polynomial)

ZPP (Zero Probabilistic Polynomial) [Gill (1977)]
• The class ZPP is defined as RP ∩ coRP.
• A language in ZPP has two Monte Carlo algorithms, one with no false positives (RP) and the other with no false negatives (coRP).
• If we repeatedly run both Monte Carlo algorithms, eventually one definite answer will come (unlike RP).
  – A positive answer from the one without false positives.
  – A negative answer from the one without false negatives.
© 2016 Prof. Yuh-Dauh Lyuu, National Taiwan University, Page 579

The ZPP Algorithm (Las Vegas)
    {Suppose L ∈ ZPP.}
    {N1 has no false positives, and N2 has no false negatives.}
    while true do
        if N1(x) = "yes" then
            return "yes";
        end if
        if N2(x) = "no" then
            return "no";
        end if
    end while

ZPP (concluded)
• The expected running time for the correct answer to emerge is polynomial.
  – The probability that a run of the 2 algorithms does not generate a definite answer is 0.5 (why?).
  – Let p(n) be the running time of each run of the while-loop.
  – The expected running time for a definite answer is ∑_{i=1}^{∞} 0.5^i · i · p(n) = 2p(n).
• Essentially, ZPP is the class of problems that can be solved, without errors, in expected polynomial time.

Large Deviations
• Suppose you have a biased coin.
• One side has probability 0.5 + ε to appear and the other 0.5 − ε, for some 0 < ε < 0.5.
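The Las Vegas loop from the ZPP slides above can be tried out on a toy instance. The sketch below is an illustration, not the slides' own code: the language (even integers) and the two one-sided testers `n1` and `n2` are hypothetical stand-ins, built so that each round fails to give a definite answer with probability exactly 0.5.

```python
import random


def in_language(x: int) -> bool:
    """Hypothetical toy language L: the even integers."""
    return x % 2 == 0


def n1(x: int, rng: random.Random) -> str:
    """RP-style tester: never says "yes" when x is not in L (no false positives)."""
    return "yes" if in_language(x) and rng.random() < 0.5 else "no"


def n2(x: int, rng: random.Random) -> str:
    """coRP-style tester: never says "no" when x is in L (no false negatives)."""
    return "no" if (not in_language(x)) and rng.random() < 0.5 else "yes"


def zpp_decide(x: int, rng: random.Random):
    """Repeat both testers until one gives a definite answer.

    Returns the answer together with the number of rounds used.
    """
    rounds = 0
    while True:
        rounds += 1
        if n1(x, rng) == "yes":   # trustworthy, since N1 has no false positives
            return "yes", rounds
        if n2(x, rng) == "no":    # trustworthy, since N2 has no false negatives
            return "no", rounds


if __name__ == "__main__":
    rng = random.Random(0)
    trials = [zpp_decide(x, rng) for x in range(10_000)]
    print(sum(r for _, r in trials) / len(trials))  # average rounds per input
```

The answer is always correct, and only the running time is random. With a per-round failure probability of 0.5, the average number of rounds should come out close to 2, in line with the sum ∑ 0.5^i · i = 2 on the slide.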

The Weakness of CTC Qubits and the Power of Approximate Counting

The weakness of CTC qubits and the power of approximate counting. Ryan O'Donnell∗, A. C. Cem Say†. April 7, 2015. Abstract. We present results in structural complexity theory concerned with the following interrelated topics: computation with postselection/restarting, closed timelike curves (CTCs), and approximate counting. The first result is a new characterization of the lesser known complexity class BPPpath in terms of more familiar concepts. Precisely, BPPpath is the class of problems that can be efficiently solved with a nonadaptive oracle for the Approximate Counting problem. Similarly, PP equals the class of problems that can be solved efficiently with nonadaptive queries for the related Approximate Difference problem. Another result is concerned with the computational power conferred by CTCs; or equivalently, the computational complexity of finding stationary distributions for quantum channels. Using the above-mentioned characterization of PP, we show that any poly(n)-time quantum computation using a CTC of O(log n) qubits may as well just use a CTC of 1 classical bit. This result essentially amounts to showing that one can find a stationary distribution for a poly(n)-dimensional quantum channel in PP. ∗Department of Computer Science, Carnegie Mellon University. Work performed while the author was at the Boğaziçi University Computer Engineering Department, supported by Marie Curie International Incoming Fellowship project number 626373. †Boğaziçi University Computer Engineering Department. 1 Introduction. It is well known that studying "non-realistic" augmentations of computational models can shed a great deal of light on the power of more standard models. The study of nondeterminism and the study of relativization (i.e., oracle computation) are famous examples of this phenomenon.