Vector and SIMD Processors

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Data-Level Parallelism

Fall 2015 :: CSE 610 – Parallel Computer Architectures Data-Level Parallelism Nima Honarmand Fall 2015 :: CSE 610 – Parallel Computer Architectures Overview • Data Parallelism vs. Control Parallelism – Data Parallelism: parallelism arises from executing essentially the same code on a large number of objects – Control Parallelism: parallelism arises from executing different threads of control concurrently • Hypothesis: applications that use massively parallel machines will mostly exploit data parallelism – Common in the Scientific Computing domain • DLP originally linked with SIMD machines; now SIMT is more common – SIMD: Single Instruction Multiple Data – SIMT: Single Instruction Multiple Threads Fall 2015 :: CSE 610 – Parallel Computer Architectures Overview • Many incarnations of DLP architectures over decades – Old vector processors • Cray processors: Cray-1, Cray-2, …, Cray X1 – SIMD extensions • Intel SSE and AVX units • Alpha Tarantula (didn’t see light of day ) – Old massively parallel computers • Connection Machines • MasPar machines – Modern GPUs • NVIDIA, AMD, Qualcomm, … • Focus of throughput rather than latency Vector Processors 4 SCALAR VECTOR (1 operation) (N operations) r1 r2 v1 v2 + + r3 v3 vector length add r3, r1, r2 vadd.vv v3, v1, v2 Scalar processors operate on single numbers (scalars) Vector processors operate on linear sequences of numbers (vectors) 6.888 Spring 2013 - Sanchez and Emer - L14 What’s in a Vector Processor? 5 A scalar processor (e.g. a MIPS processor) Scalar register file (32 registers) Scalar functional units (arithmetic, load/store, etc) A vector register file (a 2D register array) Each register is an array of elements E.g. 32 registers with 32 64-bit elements per register MVL = maximum vector length = max # of elements per register A set of vector functional units Integer, FP, load/store, etc Some times vector and scalar units are combined (share ALUs) 6.888 Spring 2013 - Sanchez and Emer - L14 Example of Simple Vector Processor 6 6.888 Spring 2013 - Sanchez and Emer - L14 Basic Vector ISA 7 Instr. -

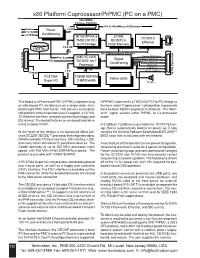

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

2.5 Classification of Parallel Computers

52 // Architectures 2.5 Classification of Parallel Computers 2.5 Classification of Parallel Computers 2.5.1 Granularity In parallel computing, granularity means the amount of computation in relation to communication or synchronisation Periods of computation are typically separated from periods of communication by synchronization events. • fine level (same operations with different data) ◦ vector processors ◦ instruction level parallelism ◦ fine-grain parallelism: – Relatively small amounts of computational work are done between communication events – Low computation to communication ratio – Facilitates load balancing 53 // Architectures 2.5 Classification of Parallel Computers – Implies high communication overhead and less opportunity for per- formance enhancement – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation. • operation level (different operations simultaneously) • problem level (independent subtasks) ◦ coarse-grain parallelism: – Relatively large amounts of computational work are done between communication/synchronization events – High computation to communication ratio – Implies more opportunity for performance increase – Harder to load balance efficiently 54 // Architectures 2.5 Classification of Parallel Computers 2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1) In N elements in pipeline and for 8 element L clock cycles =) for calculation it would take L + N cycles; without pipeline L ∗ N cycles Example of good code for pipelineing: §doi =1 ,k ¤ z ( i ) =x ( i ) +y ( i ) end do ¦ 55 // Architectures 2.5 Classification of Parallel Computers Vector processors, fast vector operations (operations on arrays). Previous example good also for vector processor (vector addition) , but, e.g. recursion – hard to optimise for vector processors Example: IntelMMX – simple vector processor. -

Vector-Thread Architecture and Implementation by Ronny Meir Krashinsky B.S

Vector-Thread Architecture And Implementation by Ronny Meir Krashinsky B.S. Electrical Engineering and Computer Science University of California at Berkeley, 1999 S.M. Electrical Engineering and Computer Science Massachusetts Institute of Technology, 2001 Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical Engineering and Computer Science at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY June 2007 c Massachusetts Institute of Technology 2007. All rights reserved. Author........................................................................... Department of Electrical Engineering and Computer Science May 25, 2007 Certified by . Krste Asanovic´ Associate Professor Thesis Supervisor Accepted by . Arthur C. Smith Chairman, Department Committee on Graduate Students 2 Vector-Thread Architecture And Implementation by Ronny Meir Krashinsky Submitted to the Department of Electrical Engineering and Computer Science on May 25, 2007, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical Engineering and Computer Science Abstract This thesis proposes vector-thread architectures as a performance-efficient solution for all-purpose computing. The VT architectural paradigm unifies the vector and multithreaded compute models. VT provides the programmer with a control processor and a vector of virtual processors. The control processor can use vector-fetch commands to broadcast instructions to all the VPs or each VP can use thread-fetches to direct its own control flow. A seamless intermixing of the vector and threaded control mechanisms allows a VT architecture to flexibly and compactly encode application paral- lelism and locality. VT architectures can efficiently exploit a wide variety of loop-level parallelism, including non-vectorizable loops with cross-iteration dependencies or internal control flow. -

Lecture 14: Vector Processors

Lecture 14: Vector Processors Department of Electrical Engineering Stanford University http://eeclass.stanford.edu/ee382a EE382A – Autumn 2009 Lecture 14- 1 Christos Kozyrakis Announcements • Readings for this lecture – H&P 4th edition, Appendix F – Required paper • HW3 available on online – Due on Wed 11/11th • Exam on Fri 11/13, 9am - noon, room 200-305 – All lectures + required papers – Closed books, 1 page of notes, calculator – Review session on Friday 11/6, 2-3pm, Gates Hall Room 498 EE382A – Autumn 2009 Lecture 14 - 2 Christos Kozyrakis Review: Multi-core Processors • Use Moore’s law to place more cores per chip – 2x cores/chip with each CMOS generation – Roughly same clock frequency – Known as multi-core chips or chip-multiprocessors (CMP) • Shared-memory multi-core – All cores access a unified physical address space – Implicit communication through loads and stores – Caches and OOO cores lead to coherence and consistency issues EE382A – Autumn 2009 Lecture 14 - 3 Christos Kozyrakis Review: Memory Consistency Problem P1 P2 /*Assume initial value of A and flag is 0*/ A = 1; while (flag == 0); /*spin idly*/ flag = 1; print A; • Intuitively, you expect to print A=1 – But can you think of a case where you will print A=0? – Even if cache coherence is available • Coherence talks about accesses to a single location • Consistency is about ordering for accesses to difference locations • Alternatively – Coherence determines what value is returned by a read – Consistency determines when a write value becomes visible EE382A – Autumn 2009 Lecture 14 - 4 Christos Kozyrakis Sequential Consistency (What the Programmers Often Assume) • Definition by L. -

SIMD Extensions

SIMD Extensions PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 12 May 2012 17:14:46 UTC Contents Articles SIMD 1 MMX (instruction set) 6 3DNow! 8 Streaming SIMD Extensions 12 SSE2 16 SSE3 18 SSSE3 20 SSE4 22 SSE5 26 Advanced Vector Extensions 28 CVT16 instruction set 31 XOP instruction set 31 References Article Sources and Contributors 33 Image Sources, Licenses and Contributors 34 Article Licenses License 35 SIMD 1 SIMD Single instruction Multiple instruction Single data SISD MISD Multiple data SIMD MIMD Single instruction, multiple data (SIMD), is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data level parallelism. History The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1] The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel. -

Computer Hardware Architecture Lecture 4

Computer Hardware Architecture Lecture 4 Manfred Liebmann Technische Universit¨atM¨unchen Chair of Optimal Control Center for Mathematical Sciences, M17 [email protected] November 10, 2015 Manfred Liebmann November 10, 2015 Reading List • Pacheco - An Introduction to Parallel Programming (Chapter 1 - 2) { Introduction to computer hardware architecture from the parallel programming angle • Hennessy-Patterson - Computer Architecture - A Quantitative Approach { Reference book for computer hardware architecture All books are available on the Moodle platform! Computer Hardware Architecture 1 Manfred Liebmann November 10, 2015 UMA Architecture Figure 1: A uniform memory access (UMA) multicore system Access times to main memory is the same for all cores in the system! Computer Hardware Architecture 2 Manfred Liebmann November 10, 2015 NUMA Architecture Figure 2: A nonuniform memory access (UMA) multicore system Access times to main memory differs form core to core depending on the proximity of the main memory. This architecture is often used in dual and quad socket servers, due to improved memory bandwidth. Computer Hardware Architecture 3 Manfred Liebmann November 10, 2015 Cache Coherence Figure 3: A shared memory system with two cores and two caches What happens if the same data element z1 is manipulated in two different caches? The hardware enforces cache coherence, i.e. consistency between the caches. Expensive! Computer Hardware Architecture 4 Manfred Liebmann November 10, 2015 False Sharing The cache coherence protocol works on the granularity of a cache line. If two threads manipulate different element within a single cache line, the cache coherency protocol is activated to ensure consistency, even if every thread is only manipulating its own data. -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

An Introduction to Gpus, CUDA and Opencl

An Introduction to GPUs, CUDA and OpenCL Bryan Catanzaro, NVIDIA Research Overview ¡ Heterogeneous parallel computing ¡ The CUDA and OpenCL programming models ¡ Writing efficient CUDA code ¡ Thrust: making CUDA C++ productive 2/54 Heterogeneous Parallel Computing Latency-Optimized Throughput- CPU Optimized GPU Fast Serial Scalable Parallel Processing Processing 3/54 Why do we need heterogeneity? ¡ Why not just use latency optimized processors? § Once you decide to go parallel, why not go all the way § And reap more benefits ¡ For many applications, throughput optimized processors are more efficient: faster and use less power § Advantages can be fairly significant 4/54 Why Heterogeneity? ¡ Different goals produce different designs § Throughput optimized: assume work load is highly parallel § Latency optimized: assume work load is mostly sequential ¡ To minimize latency eXperienced by 1 thread: § lots of big on-chip caches § sophisticated control ¡ To maXimize throughput of all threads: § multithreading can hide latency … so skip the big caches § simpler control, cost amortized over ALUs via SIMD 5/54 Latency vs. Throughput Specificaons Westmere-EP Fermi (Tesla C2050) 6 cores, 2 issue, 14 SMs, 2 issue, 16 Processing Elements 4 way SIMD way SIMD @3.46 GHz @1.15 GHz 6 cores, 2 threads, 4 14 SMs, 48 SIMD Resident Strands/ way SIMD: vectors, 32 way Westmere-EP (32nm) Threads (max) SIMD: 48 strands 21504 threads SP GFLOP/s 166 1030 Memory Bandwidth 32 GB/s 144 GB/s Register File ~6 kB 1.75 MB Local Store/L1 Cache 192 kB 896 kB L2 Cache 1.5 MB 0.75 MB -

Cuda C Programming Guide

CUDA C PROGRAMMING GUIDE PG-02829-001_v10.0 | October 2018 Design Guide CHANGES FROM VERSION 9.0 ‣ Documented restriction that operator-overloads cannot be __global__ functions in Operator Function. ‣ Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions. ‣ Passing __restrict__ references to __global__ functions is now supported. Updated comment in __global__ functions and function templates. ‣ Documented CUDA_ENABLE_CRC_CHECK in CUDA Environment Variables. ‣ Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16. www.nvidia.com CUDA C Programming Guide PG-02829-001_v10.0 | ii TABLE OF CONTENTS Chapter 1. Introduction.........................................................................................1 1.1. From Graphics Processing to General Purpose Parallel Computing............................... 1 1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model.............3 1.3. A Scalable Programming Model.........................................................................4 1.4. Document Structure...................................................................................... 5 Chapter 2. Programming Model............................................................................... 7 2.1. Kernels......................................................................................................7 2.2. Thread Hierarchy........................................................................................