NL4DV: a Toolkit for Generating Analytic Specifications for Data Visualization from Natural Language Queries

Total pages: 16

Recommended publications

Organized Crime and Terrorist Activity in Mexico, 1999-2002

ORGANIZED CRIME AND TERRORIST ACTIVITY IN MEXICO, 1999-2002 A Report Prepared by the Federal Research Division, Library of Congress under an Interagency Agreement with the United States Government February 2003 Researcher: Ramón J. Miró Project Manager: Glenn E. Curtis Federal Research Division Library of Congress Washington, D.C. 20540−4840 Tel: 202−707−3900 Fax: 202−707−3920 E-Mail: [email protected] Homepage: http://loc.gov/rr/frd/ Library of Congress – Federal Research Division Criminal and Terrorist Activity in Mexico PREFACE This study is based on open source research into the scope of organized crime and terrorist activity in the Republic of Mexico during the period 1999 to 2002, and the extent of cooperation and possible overlap between criminal and terrorist activity in that country. The analyst examined those organized crime syndicates that direct their criminal activities at the United States, namely Mexican narcotics trafficking and human smuggling networks, as well as a range of smaller organizations that specialize in trans-border crime. The presence in Mexico of transnational criminal organizations, such as Russian and Asian organized crime, was also examined. In order to assess the extent of terrorist activity in Mexico, several of the country’s domestic guerrilla groups, as well as foreign terrorist organizations believed to have a presence in Mexico, are described. The report extensively cites from Spanish-language print media sources that contain coverage of criminal and terrorist organizations and their activities in Mexico. -

Annotated Bibliography of Non-Fiction Dvds

Resources for Teachers: Nonfiction DVDs to Use in the Classroom Famous Historical Figures Edison - DVD 621.3 Edi Documentary about Thomas Edison that explores the complex alchemy that accounts for the enduring celebrity of America's most famous inventor. It offers new perspectives on the man and his milieu, and illuminates not only the true nature of invention, but its role in turn-of-the-century America's rush into the future. Rating: TV-PG In Search of Shakespeare - DVD 822.3 In An intimate look at William Shakespeare and his world. Rating: Not Rated Johann Sebastian Bach - DVD 921 Bach, J. One of the greatest composers in Western musical history, Bach created masterpieces of choral and instrumental music. More than 1,000 of his works survive from every musical form and genre in use in 18th century Germany. During his lifetime, he was appreciated more as an organist than a composer. It was not until nearly a century after his death that a musical public came to appreciate his body of work. Rating: Not Rated Ludwig Van Beethoven - DVD 921 Beethoven, L. One of the greatest masters of music, Beethoven is particularly admired for his orchestral works. The highly expressive music Beethoven produced inspired composers like Brahms, Mendelssohn, and Wagner. Rating: Not Rated George Fredric Handel 1685-1759 - DVD 921 Handel, G. Presents a factual outline of Handel's life and an overview of his work. Handel's greatest gift to posterity was undoubtedly the creation of the dramatic oratorio genre, partly out of existing operatic traditions and partly by force of his own musical imagination. -

Austria: Jewish Family History Research Guide

Courtesy of the Ackman & Ziff Family Genealogy Institute Revised June 2012 Updated March 12, 2013 Austria: Jewish Family History Research Guide Austria Like most European countries, Austria’s borders have changed considerably over time. In 1690 the Austrian Hapsburgs completed the reconquest of Hungary and Transylvania from the Ottoman Turks. From 1867 to 1918, Hungary achieved autonomy within the “Dual Monarchy,” or Austro-Hungarian Empire, as well as full control over Transylvania. After World War I, Austria-Hungary was split up among various other countries, so that areas formerly under Austro-Hungarian jurisdiction are today located within the borders of Austria, Bosnia, Croatia, Czech Republic, Hungary, Italy, Poland, Romania, Serbia, Slovakia, Slovenia and the Ukraine. The primary focus of this fact sheet is Austria within its post-World War II borders. Fact sheets for other countries formerly part of the Austro-Hungarian Empire are also available. How to Begin Follow the general guidelines in our fact sheets on starting your family history research, immigration records, naturalization records, and finding your ancestral town. Determine whether your town is still within modern-day Austria, and in which county and district it is located. A good resource for starting your research is “Beginner’s Guide to Austrian Jewish Genealogy” by E. Randol Schoenberg. This manual is accessible on the JewishGen Austria-Czech Special Interest Group (SIG) website, www.jewishgen.org/AustriaCzech. Records • Depending on the time period, records may be in several languages: German, Hungarian, Hebrew, or Latin. • By decree of the Austrian Emperor, in 1787 all Jews within the Empire were required to adopt German surnames. -

Outstanding Warrants

Ignacio Lopez Hernandez Wanted for Murder Hispanic Male Date of Birth: 5/11/1977 Height: 5’8 Weight: 165 lbs Birth Place: Actopan, Hidalgo, Mexico Black Hair Brown Eyes Tattoos: N/A Ignacio Hernandez Alias: “Nacho” He has possibly returned to Actopan, Hidalgo, Mexico. Report # 07088934 Place of occurrence: 1604 Chambers Year of occurrence: 2007 Salvador Eder Garcia Wanted for Murder Hispanic Male Date of Birth: 6/20/1982 Height: 6’4 Weight: 165 lbs Birth Place: Michoacan, Mexico Black Hair Brown Eyes Tattoos: “LK” on left hand Dots on right knuckles & left wrist “Garcia” on back Cross on left hand Salvador Eder Garcia Alias: “Flaco” Sandoval Garcia Report # 06065575 Place of occurrence: 2309 Ross Year of occurrence: 2006 Mitchell Walters Rainey Wanted for Murder Black Male Date of Birth: 5/27/1986 Height: 5’10 Weight: 160 Birth Place: Bedford, TX Black Hair Brown Eyes Tattoos: N/A Mitchell Walters Rainey Alias: N/A Report # 06053095 Place of occurrence: 5813 Whittlesey Road Year of occurrence: 2006 Simon Ibarra Wanted for Murder Hispanic Male Date of Birth: N/A Approximately 23 to 28 years old today (2009) Arrest Warrant 06-F-0153-VC Height: 5’9 Weight: 165 Birth Place: Unknown Last known to be in Mexico Black Hair Simon Ibarra Brown eyes Tattoos: N/A Alias: N/A Report # 06002994 Place of occurrence: 1314 Denver Year of occurrence: 2006 Raul Lopez Garcia Wanted for Murder Hispanic Male Date of Birth: 11/27/1985 Height: 5’11 Weight: 190 Birth Place: Mexico Black Hair Brown Eyes Tattoos: N/A Raul Lopez Garcia Alias: N/A Report # 05042701 Place of occurrence: 3105 Roosevelt Year of occurrence: 2005 Victor J. -

A Report to the Assistant Attorney General, Criminal Division, U.S

Robert Jan Verbelen and the United States Government A Report to the Assistant Attorney General, Criminal Division, U.S. Department of Justice NEAL M. SHER, Director Office of Special Investigations ARON A. GOLBERG, Attorney Office of Special Investigations ELIZABETH B. WHITE, Historian Office of Special Investigations June 16, 1988 TABLE OF CONTENTS I. Introduction: A. Background of Verbelen Investigation (p. 1); B. Scope of Investigation (p. 2); C. Conduct of Investigation (p. 4). II. Early Life Through World War II (p. 7). III. War Crimes Trial in Belgium (p. 11). IV. The 430th Counter Intelligence Corps Detachment in Austria (p. 12): A. Mission, Organization, and Personnel (p. 12); B. Use of Former Nazis and Nazi Collaborators (p. 15). V. Verbelen's Versions of His Work for the CIC (p. 20): A. Explanation to the 66th CIC Group (p. 20); B. Testimony at War Crimes Trial (p. 21); C. Flemish Interview (p. 23); D. Statement to Austrian Journalist (p. 24); E. Version Told to OSI (p. 26). VI. Verbelen's Employment with the 430th CIC Detachment (p. 28): A. Work for Harris (p. 28); B. Project Newton (p. 35); C. Change of Alias from Mayer to Schwab (p. 44); D. The CIC Ignores Verbelen's Change of Identity (p. 52); E. Verbelen's Work for the 430th CIC from 1950 to 1955 (p. 54): 1. Work for Ekstrom (p. 54); 2. Work for Paulson (p. 55); 3. The 430th CIC Refuses to Conduct Checks on Verbelen and His Informants (p. 56); 4. Work for Giles (p. 60). Verbelen's Employment with the 66th CIC Group (p. 62): A. Work for Wood (p. 62); B. Verbelen Reveals His True Identity (p. 63); C. A Western European Intelligence Agency Recruits Verbelen -

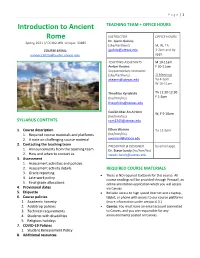

Introduction to Ancient Rome

P a g e | 1 Introduction to Ancient TEACHING TEAM + OFFICE HOURS Rome INSTRUCTOR OFFICE HOURS Dr. Joann Gulizio Spring 2021 // CC302-WB, Unique: 33885 (she/her/hers) M, W, Th COURSE EMAIL: [email protected] 1-2pm and by [email protected] appt. TEACHING ASSISTANTS M 10-11am Amber Kearns F 10-11am Supplementary Instructor (she/her/hers) SI Meetings [email protected] Tu 4-5pm W 10-11am Theofilos Kyriakidis Th 11:30-12:30 (he/him/his) F 1-2pm [email protected] Caolán Mac An Aircinn W, F 9-10am (he/him/his) SYLLABUS CONTENTS [email protected] 1. Course description Ethan Warren Tu 12-2pm 1. Required course materials and platforms (he/him/his) 2. A note on challenging course material [email protected] 2. Contacting the teaching team PRESENTER & DESIGNER by email appt. 1. Announcements from the teaching team Dr. Steve Lundy (he/him/his) 2. How and when to contact us [email protected] 3. Assessment 1. Assessment activities and policies 2. Assessment activity details REQUIRED COURSE MATERIALS 3. Grade reporting • There is NO required textbook for this course. All 4. Late work policy course readings will be provided through Perusall, an 5. Final grade allocations online annotation application which you will access 4. Provisional dates via Canvas 5. Etiquette • Reliable access to high speed Internet and a laptop, 6. Course policies tablet, or phone with access to our course platforms 1. Academic honesty (more information under section 6.3.) 2. Add/drop policies • Canvas: You must have an email account connected 3. -

7/20/20 Time:15:51:12 Drug Court/4 7/27/20 Page 1 Court Notes Subjects Name/Alias Bondsman In

PGM:(PRTDOC) DATE: 7/20/20 TIME:15:51:12 DRUG COURT/4 7/27/20 PAGE 1 COURT NOTES SUBJECTS NAME/ALIAS BONDSMAN INDICT I.D.# DAYS PURPOSE STATUS OFFENSE DEFENSE AT PROSECUTOR DIXON ROBERT LANGSTON KEITH A DAY DIXON ROBERT LEE 19 32570 042600 377 DESIGNATION OF ATTY BOND POSS C/S PEN GRP 1 NO ATTORNEY DP2 LUCAS NAISHIA STAN STANLEY 20 34434 350321 152 INITIAL SETTING BOND POSS C/S PEN GRP 1 NO ATTORNEY DP2 MCELROY DAVID AL REED, SURETY MCELROY DAVID LEONARD 20 34436 217927 152 INITIAL SETTING BOND POSS C/S PEN GRP 1 NO ATTORNEY DP3 RAMIREZ VICTORIA ALICIA KEITH A DAY 20 34468 273519 255 INITIAL SETTING BOND POSS C/S PEN GRP 1 *DAVID BARLOW DP1 **** MISDEMEANOR CASE ***COUNTY COURT AT LAW #3 ,JUDGE CLINT WOODS 7/27/20 325615 0 273519 165 TRIAL THEFT-CLASS B CC3-B PGM:(PRTDOC) DATE: 7/20/20 TIME:15:51:12 DRUG COURT/4 7/27/20 PAGE 2 COURT NOTES SUBJECTS NAME/ALIAS BONDSMAN INDICT I.D.# DAYS PURPOSE STATUS OFFENSE DEFENSE AT PROSECUTOR GARCIA TERESA ANNA ON TIME BAIL BONDING ******** FILE NOTES: WAS TRANSF TO HARRIS CO MOTION TO REVOKE PROBATION 17 27756 337785 564 PUNISHMENT/SENTENCE BOND POSS C/S PEN GRP 1 MIKE VANZANDT DP1 ODNEAL LAUREN JANE HAILEY ON TIME BAIL BONDING MOTION TO REVOKE PROBATION 17 28356 337264 279 PUNISHMENT/SENTENCE BOND POSS C/S PEN GRP 1 CHARLES MCINTOSH DP3 GRAHAM ALFRED DAVID KEITH A DAY 18 30132 143902 698 ANNOUNCMENT PLEA/TRL BOND POSS C/S PEN GRP 1 DAVID BARLOW DP2 GRAHAM ALFRED DAVID KEITH A DAY 19 32827 143902 355 ANNOUNCMENT PLEA/TRL BOND POSS C/S PEN GRP 1 DAVID BARLOW DP2 **** MISDEMEANOR CASE ***COUNTY COURT -

Alias Grace by Margaret Atwood Adapted for the Stage by Jennifer Blackmer Directed by RTE Co-Founder Karen Kessler

Contact: Cathy Taylor / Kelsey Moorhouse Cathy Taylor Public Relations, Inc. For Immediate Release [email protected] June 28, 2017 773-564-9564 Rivendell Theatre Ensemble in association with Brian Nitzkin announces cast for the World Premiere of Alias Grace By Margaret Atwood Adapted for the Stage by Jennifer Blackmer Directed by RTE Co-Founder Karen Kessler Cast features RTE members Ashley Neal and Jane Baxter Miller with Steve Haggard, Maura Kidwell, Ayssette Muñoz, David Raymond, Amro Salama and Drew Vidal September 1 – October 14, 2017 Chicago, IL—Rivendell Theatre Ensemble (RTE), Chicago’s only Equity theatre dedicated to producing new work with women at the core, in association with Brian Nitzkin, announces casting for the world premiere of Alias Grace by Margaret Atwood, adapted for the stage by Jennifer Blackmer, and directed by RTE Co-Founder Karen Kessler. Alias Grace runs September 1 – October 14, 2017, at Rivendell Theatre Ensemble, 5779 N. Ridge Avenue in Chicago. The press opening is Wednesday, September 13 at 7:00pm. This production of Alias Grace replaces the previously announced Cal in Camo, which will now be presented in January 2018. The cast includes RTE members Ashley Neal (Grace Marks) and Jane Baxter Miller (Mrs. Humphrey), with Steve Haggard (Simon Jordan), Maura Kidwell (Nancy Montgomery), Ayssette Muñoz (Mary Whitney), David Raymond (James McDermott), Amro Salama (Jerimiah /Jerome Dupont) and Drew Vidal (Thomas Kinnear). The designers include RTE member Elvia Moreno (scenic), RTE member Janice Pytel (costumes) and Michael Mahlum (lighting). A world premiere adaptation of Margaret Atwood's acclaimed novel Alias Grace takes a look at one of Canada's most notorious murderers. -

ACM 372 EDITING, Instructor: George Wang ASSIGNMENT GUIDELINES & AGREEMENT Assignment #2 Narrative Editing (A): EDITING A

ACM 372 EDITING, Instructor: George Wang ASSIGNMENT GUIDELINES & AGREEMENT Assignment #2 Narrative Editing (A): EDITING A PRIMETIME DRAMA (20 points = 20% of final grade) OBJECTIVES: You will have the rare opportunity to edit a selected segment of a network primetime drama. Your goals: Creatively, as the editor, you view all the footage, take notes, select the best takes and angles and make all the crucial decisions for the best way to construct and tell the story. Technically, your final edit should appear as professional as possible. REQUIREMENTS: 1. There are no limitations on how you can use the footage provided. You may use the filmed plot, or you may alter the structure and story if you wish. If incomplete or extra footage from other scenes is included, you do not have to use it unless you want to experiment with ways to incorporate it. 2. You must add appropriate Music and/or Sound Effects (or –1 point). Please include Music/Sound Effects source information in the credits (during title or end). If you are the composer, or you recorded the effects on your own, please use your alias in the credits. 3. If there's burnt-in time/keycode info on the footage, you must cover such info with a letterbox matte (widescreen matte filter, at 16:9 ratio or 1.78:1), and when necessary, adjust the letterbox offset for proper composition (or –1 point). 4. You must add title and credits (or –2 points). You may invent your own show title, but don't alter the names of cast/crew. Please obtain the cast/crew information of the provided footage from IMDB.com or from other resources. -

Gender Politics and Social Class in Atwood's Alias Grace Through a Lens of Pronominal Reference

European Scientific Journal December 2018 edition Vol.14, No.35 ISSN: 1857 – 7881 (Print) e - ISSN 1857- 7431 Gender Politics and Social Class in Atwood’s Alias Grace Through a Lens of Pronominal Reference Prof. Claudia Monacelli (PhD) Faculty of Interpreting and Translation, UNINT University, Rome, Italy Doi:10.19044/esj.2018.v14n35p150 URL:http://dx.doi.org/10.19044/esj.2018.v14n35p150 Abstract In 1843, a 16-year-old Canadian housemaid named Grace Marks was tried for the murder of her employer and his mistress. The jury delivered a guilty verdict and the trial made headlines throughout the world. Nevertheless, opinion remained resolutely divided about Marks in terms of considering her a scorned woman who had taken out her rage on two innocent victims, or an unwitting victim herself, implicated in a crime she was too young to understand. In 1996 Canadian author Margaret Atwood reconstructs Grace’s story in her novel Alias Grace. Our analysis probes the story of Grace Marks as it appears in the Canadian television miniseries Alias Grace, consisting of 6 episodes, directed by Mary Harron and based on Margaret Atwood’s novel, adapted by Sarah Polley. The series premiered on CBC on 25 September 2017 and also appeared on Netflix on 3 November 2017. We apply a quantitative (corpus-driven) and qualitative (discourse analytical) approach to examine pronominal reference for what it might reveal about the gender politics and social class in the language of the miniseries. Findings reveal pronouns ‘I’, ‘their’, and ‘he’ in episode 5 of the miniseries highly correlate with both the distinction of gender and social class. -

Mexico's Security Dilemma: Michoacán's Militias

Mexico’s Security Dilemma: Michoacán’s Militias The Rise of Vigilantism in Mexico and Its Implications Going Forward Dudley Althaus and Steven Dudley ABSTRACT This working paper explores the rise of citizens' self-defense groups in Mexico’s western state of Michoacán. It is based on extensive field research. The militias arguably mark the most significant social and political development in Mexico's seven years of criminal hyper-violence. Their surprisingly effective response to a large criminal organization has put the government in a dilemma of if, and how, it plans to permanently incorporate the volatile organizations into the government’s security strategy. Executive Summary Since 2006, violence and criminality in Mexico have reached new heights. Battles amongst criminal organizations and between them have led to an unprecedented spike in homicides and other crimes. Large criminal groups have fragmented and their remnants have diversified their criminal portfolios to include widespread and systematic extortion of the civilian population. The state has not provided a satisfactory answer to this issue. In fact, government actors and security forces have frequently sought to take part in the pillaging. Frustrated and desperate, many community leaders, farmers and business elites have armed themselves and created so-called “self-defense” groups. Self-defense groups have a long history in Mexico, but they have traditionally been used to deal with petty crime in mostly indigenous communities. These efforts are recognized by the constitution as legitimate and legal. But the new challenges to security by criminal organizations have led to the emergence of this new generation of militias. The strongest of these vigilante organizations are in Michoacán, an embattled western state where a criminal group called the Knights Templar had been victimizing locals for years and had co-opted local political power. -

Daily Calendar by Att for DM & PR

09/22/2021 18th Judicial District Court Probate and Family Law Dockets By Attorney for Wednesday, September 22, 2021 CASE PLAINTIFF(S) DEFENDANT(S) HEARING TYPE Adams Jones Law Firm, PA Locke Susan M 9/14/21 1:30 pm Kraft Garrett Kraft Lyndsey Family Law Motion Docket 2021-DM-004876-DS Judge: Linda D Kirby, Div. 17 Hearing result for Family Law Motion Docket held on 09/14/2021 01:30 PM: DATA ENTRY ERROR. Reason: ATTORNEY DID NOT FOLLOW THE MAY 2020 MOTION PROCEDURE FOR JUDGE KIRBY 2021097 Notice of Intent to Appear to seek modification of the TO (SUSAN M. LOCKE) CASE PLAINTIFF(S) DEFENDANT(S) HEARING TYPE Adrian & Pankratz Schodorf Kelly J 9/21/21 3:40 pm Hernandez Elizabeth Fabela Mario Family Law Motion Docket 2018-DM-002242-PA Judge: Phillip B Journey, Div. 1 Hearing result for Family Law Motion Docket held on 09/21/2021 03:40 pm: Continued by Agreement REVIEW20210405 Motion to Modify parenting time(WHITNEY HOBSON) CASE PLAINTIFF(S) DEFENDANT(S) HEARING TYPE Alexander & Casey Casey Paula Kidd 9/14/21 1:30 pm Thompson Kimberly Thompson Charles Family Law Motion Docket 2015-DM-002029-DS Judge: Jeff Dewey, Div. 21 Hearing result for Family Law Motion Docket held on 09/14/2021 01:30 PM: Judgment 20210906 Motion to Withdraw (LARRY L MARCZYNSKI) 9/17/21 1:30 pm State of Kansas Department for YankeyChildren Gabriel & Contempt Hearing Families 2015-DM-006031-PA Judge: Michael B Roach, Hearing Officer Hearing result for Contempt Hearing held on 09/17/2021 01:30 PM: Hearing Held BWH (Gabriel Yankey Jr) 9/20/21 1:30 pm Shirley Don Shirley Catherine Family Law Motion Docket 2017-DM-003816-DS Judge: Kellie E Hogan, Div.