Macroeconomic Forecasting in Times of Crises

Recommended publications

Top Incomes During Wars, Communism and Capitalism: Poland 1892-2015

WID.world Working Paper Series N° 2017/22. Top Incomes during Wars, Communism and Capitalism: Poland 1892-2015. Pawel Bukowski (Centre for Economic Performance, London School of Economics) and Filip Novokmet (Paris School of Economics). November 2017. Abstract. This study presents the history of top incomes in Poland. We document a U-shaped evolution of top income shares from the end of the 19th century until today. The initial high level, during the period of Partitions, was due to the strong concentration of capital income at the top of the distribution. The long-run downward trend in top incomes was primarily induced by shocks to capital income, from destructions of world wars to changed political and ideological environment. The Great Depression, however, led to a rise in top shares as the richest were less adversely affected than the majority of population consisting of smallholding farmers. The introduction of communism abruptly reduced inequalities by eliminating private capital income and compressing earnings. Top incomes stagnated at low levels during the whole communist period. Yet, after the fall of communism, the Polish top incomes experienced a substantial and steady rise and today are at the level of more unequal European countries. While the initial upward adjustment during the transition in the 1990s was induced both by the rise of top labour and capital incomes, the strong rise of top income shares in 2000s was driven solely by the increase in top capital incomes, which make the dominant income source at the top.

Macroeconomic and Financial Risks: a Tale of Mean and Volatility

Board of Governors of the Federal Reserve System. International Finance Discussion Papers, Number 1326, August 2021. Macroeconomic and Financial Risks: A Tale of Mean and Volatility. Dario Caldara, Chiara Scotti and Molin Zhong. Please cite this paper as: Caldara, Dario, Chiara Scotti and Molin Zhong (2021). "Macroeconomic and Financial Risks: A Tale of Mean and Volatility," International Finance Discussion Papers 1326. Washington: Board of Governors of the Federal Reserve System, https://doi.org/10.17016/IFDP.2021.1326. NOTE: International Finance Discussion Papers (IFDPs) are preliminary materials circulated to stimulate discussion and critical comment. The analysis and conclusions set forth are those of the authors and do not indicate concurrence by other members of the research staff or the Board of Governors. References in publications to the International Finance Discussion Papers Series (other than acknowledgement) should be cleared with the author(s) to protect the tentative character of these papers. Recent IFDPs are available on the Web at www.federalreserve.gov/pubs/ifdp/. This paper can be downloaded without charge from the Social Science Research Network electronic library at www.ssrn.com. August 18, 2021. Abstract. We study the joint conditional distribution of GDP growth and corporate credit spreads using a stochastic volatility VAR. Our estimates display significant cyclical co-movement in uncertainty (the volatility implied by the conditional distributions), and risk (the probability of tail events) between the two variables. We also find that the interaction between two shocks, a main business cycle shock as in Angeletos et al.

The Shape of the Coming Recovery

The Shape of the Coming Recovery. Mark Zandi. July 9, 2009. The Great Recession continues. After 18 months of economic contraction, 6.5 million jobs have been lost, and the unemployment rate is fast approaching double digits. Many with jobs are seeing their hours cut (the length of the average workweek fell to a record low in June) and are taking cuts in pay as overall wage growth stalls. Balance sheets have also been hit hard: Some $15 trillion in household wealth has evaporated, the federal government has run up deficits approaching $1.5 trillion, and many state and local governments have borrowed heavily to fill gaping budget holes. Given these prospects, a heated debate has broken out over the strength of the coming recovery, with the back-and-forth simplifying into which letter of the alphabet best describes its shape. Optimists argue for a V-shaped recovery, with a vigorous economic revival beginning soon. History is on their side. The one and only regularity of past business cycles is that severe downturns have been followed by strong recoveries, and shallow downturns by shallow recoveries. The 2001 recession was the mildest since World War II; real GDP [...] will resume spending more aggressively. Businesses may also have slashed too deeply and, once confidence is restored, will ramp up investment and hiring. Pessimists dismiss this possibility, arguing instead for a W-shaped or double-dip business cycle, with the economy sliding back into recession after a brief recovery, or an L-shaped cycle in which the recession is followed by measurably weaker growth for an extended period. A W-shaped cycle seems more likely if policymakers misstep. The last time the economy suffered a double-dip was

Supporting Documents for DSGE Models Update

Authorized for public release by the FOMC Secretariat on 02/09/2018. BOARD OF GOVERNORS OF THE FEDERAL RESERVE SYSTEM, Division of Monetary Affairs, FOMC SECRETARIAT. Date: June 11, 2012. To: Research Directors. From: Deborah J. Danker. Subject: Supporting Documents for DSGE Models Update. The attached documents support the update on the projections of the DSGE models. System DSGE Project: Research Directors Drafts, June 8, 2012. Projections from EDO: Current Outlook, June FOMC Meeting. Hess Chung, Michael T. Kiley, and Jean-Philippe Laforte. June 8, 2012. 1 The Outlook for 2012 to 2015. The EDO model projects economic growth modestly below trend through 2013 while the policy rate is pegged to its effective lower bound until late 2014. Growth picks up noticeably in 2014 and 2015 to around 3.25 percent on average, but inflation remains below target at 1.7 percent. In the current forecast, unemployment declines slowly from 8.25 percent in the third quarter of 2012 to around 7.75 percent at the end of 2014 and 7.25 percent by the end of 2015. The slow decline in unemployment reflects both the inertial behavior of unemployment following shocks to risk-premia and the elevated level of the aggregate risk premium over the forecast. By the end of the forecast horizon, however, around 1 percentage point of unemployment is attributable to shifts in household labor supply.

FPI Pulling Apart Nov 15 Final

Pulling Apart: The Continuing Impact of Income Polarization in New York State. Embargoed for 12:01 AM Thursday, Nov. 15, 2012. A Fiscal Policy Institute Report, www.fiscalpolicy.org, November 15, 2012. Acknowledgements. The primary author of this report is Dr. James Parrott, the Fiscal Policy Institute’s Deputy Director and Chief Economist. Frank Mauro, David Dyssegaard Kallick, Carolyn Boldiston, Dr. Brent Kramer, and Dr. Hee-Young Shin assisted Dr. Parrott in the analysis of the data presented in the report and in the editing of the final report. Special thanks are due to Elizabeth C. McNichol of the Center on Budget and Policy Priorities and Dr. Douglas Hall of the Economic Policy Institute for sharing with us the data from the state-by-state analysis of income trends that they completed for the new national Pulling Apart report being published by their two organizations. This report would not have been possible without the support of the Ford and Charles Stewart Mott Foundations and the many labor, religious, human services, community and other organizations that support and disseminate the Fiscal Policy Institute’s analytical work. For more information on the Fiscal Policy Institute and its work, please visit our web site at www.fiscalpolicy.org. Highlights. In the three decades following World War II, broadly shared prosperity drew millions up from low-wage work into the middle class and lifted living standards for the vast majority of Americans.

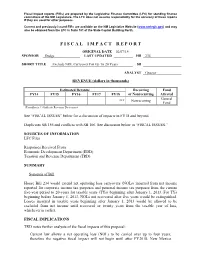

Fiscal Impact Report

Fiscal impact reports (FIRs) are prepared by the Legislative Finance Committee (LFC) for standing finance committees of the NM Legislature. The LFC does not assume responsibility for the accuracy of these reports if they are used for other purposes. Current and previously issued FIRs are available on the NM Legislative Website (www.nmlegis.gov) and may also be obtained from the LFC in Suite 101 of the State Capitol Building North. FISCAL IMPACT REPORT. Original date: 02/07/14. Sponsor: Dodge. Bill: HB 234. Short title: Exclude NOL Carryover For Up To 20 Years. Analyst: Graeser. REVENUE (dollars in thousands). Estimated Revenue, FY14 through FY18: ***. Recurring or Nonrecurring: Nonrecurring. Fund Affected: General Fund. (Parentheses indicate revenue decreases.) See “FISCAL ISSUES” below for a discussion of impacts in FY18 and beyond. Duplicates SB 156 and conflicts with SB 106. See discussion below in “FISCAL ISSUES.” SOURCES OF INFORMATION: LFC Files. Responses Received From: Economic Development Department (EDD); Taxation and Revenue Department (TRD). SUMMARY. Synopsis of Bill. House Bill 234 would extend net operating loss carryovers (NOLs) incurred from net income reported for corporate income tax purposes and personal income tax purposes from the current five-year period to 20 years for taxable years (TYs) beginning after January 1, 2013. For TYs beginning before January 1, 2013, NOLs not recovered after five years would be extinguished. Losses incurred in taxable years beginning after January 1, 2013 would be allowed to be excluded from net income until recovered or twenty years from the taxable year of loss, whichever is earlier.

Essays on Time Inconsistent Policy

Essays on Time Inconsistent Policy. By Sijun Yu (©2016). DPhil, University of Kansas, 2016; M.A., University of Kansas, 2013; B.Sc., University of Kansas, 2011. Submitted to the graduate degree program in Department of Economics and the Graduate Faculty of the University of Kansas in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Chair: William Barnett. Committee: Professor Zongwu Cai, Professor John Keating, Professor Jianbo Zhang, Professor Paul Johnson. Date Defended: 19 September 2016. The dissertation committee for Sijun Yu certifies that this is the approved version of the following dissertation: Essays on Time Inconsistent Policy. Date Approved: 19 September 2016. Abstract. This research focuses on time inconsistency in the policy-making process. It contains three chapters. Chapter One studies the time-inconsistency problem in policy making using extensive-form games. The paper divides the game into four scenarios: a one-stage independent game, a one-stage forecasting game, a finite-stage forecasting game and an infinite-period game. The first two games show that neither the government nor the public wants to be type 1 and that the government always has an incentive to deviate from announced policy. The last two games focus on the possibility that both players want to randomize their strategies. The games are able to conclude

Supporting Documents for DSGE Models Update

Authorized for public release by the FOMC Secretariat on 02/09/2018. BOARD OF GOVERNORS OF THE FEDERAL RESERVE SYSTEM, Division of Monetary Affairs, FOMC SECRETARIAT. Date: December 3, 2012. To: Research Directors. From: Deborah J. Danker. Subject: Supporting Documents for DSGE Models Update. The attached documents support the update on the projections of the DSGE models. The Current Outlook and Alternative Policy Rule Simulations in EDO: December FOMC Meeting (Internal FR). Hess Chung. November 27, 2012. 1 Introduction. This note describes the EDO forecast for 2012 to 2015, as well as a number of alternative monetary policy simulations in EDO, most undertaken as part of a special topic for the December Federal Reserve System DSGE project. Specifically, given the EDO baseline forecast, we consider several alternative dates for the departure of the federal funds rate from the zero lower bound. Taking as given the initial conditions underlying the EDO forecast, we then simulate the results of switching to three alternative rules: a representative Taylor-type rule and two level-targeting rules (nominal income and price level targeting). Finally, we repeat these exercises taking the October Tealbook projection as the baseline. To summarize our qualitative results from these alternative rules simulations, we find that: 1. Extensions of the period at the zero lower bound to periods where there is a considerable gap between the baseline and the effective lower bound can have strongly stimulative effects on both real activity, including unemployment, and inflation, even in the very short term.

Family Adjustments to Large Scale Financial Crises US and Malaysian Cases

Family Adjustments to Large Scale Financial Crises: US and Malaysian Cases. Kareem Alazem. A thesis submitted in partial fulfillment of the requirements for the degree of Bachelor of Science with Honors, Department of Economics, University of Michigan. April 13, 2011. Advised by: Dr. Frank Stafford. Table of contents: Acknowledgements; Case 1: 2000/2001 US Recession; Case 2: 1990/1991 US Recession; Case 3: 1997/1998 Asian Financial Crisis in Malaysia; Bibliography. Acknowledgements. I would like to show my gratitude to my thesis adviser, Dr. Frank Stafford, whose encouragement, guidance, and support from the initial to the final stages enabled me to develop an understanding of the subject. Professor Stafford’s recommendations and suggestions have been invaluable for the development of my thesis. I also wish to thank Professors Cheong Kee Cheok and Fatimah Kari at the University of Malaya in Kuala Lumpur, Malaysia, who helped guide me in my project during my eight weeks in Malaysia. Thanks are also due to Salem Ghandour, Nani Abdul Rahman, Muzaffar

Changing Cyclical Contours of the US Economy

The Changing Cyclical Contours of the U.S. Economy. March 2010. ©2011 Economic Cycle Research Institute. It was over nine months ago that I last presented to you and I think it’s fair to say that at the time ECRI was far more optimistic than the consensus; we had already been predicting an end to recession for a couple months. It now looks as though the recession may have ended in June, around the time we were meeting. Let me say upfront, we’re not economists. Rather, we are students of the business cycle and we do not use econometric forecasting models. The reason why has become more evident in the wake of the Great Recession. Instead ECRI uses leading indexes which correctly anticipate both recessions and the recovery. Let’s begin with where we stand in this business cycle with regard to perhaps the most important dimension, and that is jobs… [Figure: Job Growth, Household Survey, Showing Margin of Error; monthly, Jan-08 to Feb-10] For the first time since the recession began, job growth, as measured by the household survey, turned positive in January, even taking the margin of error of 400,000 jobs into account. This jobs number has now been positive for two months, which is a key reason the jobless rate has been trending down.

Nominal GDP Targeting for a Speedier Economic Recovery

Munich Personal RePEc Archive. Nominal GDP targeting for a speedier economic recovery. Eagle, David M., Eastern Washington University. 8 March 2012. Online at https://mpra.ub.uni-muenchen.de/39821/ MPRA Paper No. 39821, posted 04 Jul 2012 12:20 UTC. By David Eagle, Associate Professor of Finance, Eastern Washington University. Phone: (509) 828-1228. Abstract: For U.S. recessions since 1948, we study paneled time series of (i) ExUR, the excess of the unemployment rate over the prerecession rate, and (ii) NGAP, the percent deviation of nominal GDP from its prerecession trend. Excluding the 1969-70 and 1973-75 recessions, a regression of ExUR on current and past values of NGAP has an R² of 75%. Simulations indicate that NGDP targeting could have eliminated 84% of the average ExUR during the period from 1.5 years and 4 years after the recessions began. The maximum effect of NGAP on unemployment occurs with a lag of 2 to 3 quarters. Revised: March 8, 2012. © Copyright 2012, by David Eagle. All rights reserved. “Major institution change occurs only at times of crisis. … I hope no crises will occur that will necessitate a drastic change in domestic monetary institutions. … Yet, it would be burying one’s head in the sand to fail to recognize that such a development is a real possibility. … If it does, the best way to cut it short, to minimize the harm it would do, is to be ready not with Band-Aids but with a real cure for the basic illness.” Milton Friedman, 1984. Figure 1.

The Critical Importance of an Independent Central Bank

820 First Street NE, Suite 510, Washington, DC 20002. Tel: 202-408-1080. Fax: 202-408-1056. www.cbpp.org. July 20, 2017. The Critical Importance of an Independent Central Bank. Testimony by Jared Bernstein, Senior Fellow, Before the House Committee on Financial Services: Subcommittee on Monetary Policy and Trade. Chairman Barr and Ranking Member Moore, thank you for the opportunity to testify today. My testimony begins with a discussion of Title X of the Financial CHOICE Act, which would undermine the independence and flexibility of the Federal Reserve, one of the few national institutions that has, in recent years, worked systematically and transparently to improve the economic lives of working Americans. By aggressively rolling back necessary financial oversight, much of the rest of the CHOICE Act would be an act of economic amnesia, one that would raise the likelihood of a return to underpriced risk, bubbles, bailouts, and recession, while Title X of the Act would hamstring the central bank’s ability to respond to the problems engendered by the rest of the Act. My testimony also makes the following points: • The evidence shows that monetary policy as practiced by the Federal Reserve, while not perfect, significantly boosted jobs and growth in the Great Recession and the recovery that followed, without generating market distortions. • While monetary policy was often helpful in terms of pulling forward the current expansion, fiscal policy, starting around 2010, was uniquely austere and counter-productive, leading to job loss and a weaker expansion. • There are policy measures that could be pursued to help those left behind in the current economy, including both monetary and fiscal policies.