Ginkgo: a High Performance Numerical Linear Algebra Library

Recommended publications

1. INTRODUCTION 1.1. Introductory Remarks on Supersymmetry. 1.2

1. INTRODUCTION 1.1. Introductory remarks on supersymmetry. 1.2. Classical mechanics, the electromagnetic, and gravitational fields. 1.3. Principles of quantum mechanics. 1.4. Symmetries and projective unitary representations. 1.5. Poincaré symmetry and particle classification. 1.6. Vector bundles and wave equations. The Maxwell, Dirac, and Weyl equations. 1.7. Bosons and fermions. 1.8. Supersymmetry as the symmetry of Z2-graded geometry. 1.1. Introductory remarks on supersymmetry. The subject of supersymmetry (SUSY) is a part of the theory of elementary particles and their interactions and the still unfinished quest of obtaining a unified view of all the elementary forces in a manner compatible with quantum theory and general relativity. Supersymmetry was discovered in the early 1970's, and in the intervening years has become a major component of theoretical physics. Its novel mathematical features have led to a deeper understanding of the geometrical structure of spacetime, a theme to which great thinkers like Riemann, Poincaré, Einstein, Weyl, and many others have contributed. Symmetry has always played a fundamental role in quantum theory: rotational symmetry in the theory of spin, Poincaré symmetry in the classification of elementary particles, and permutation symmetry in the treatment of systems of identical particles. Supersymmetry is a new kind of symmetry which was discovered by the physicists in the early 1970's. However, it is different from all other discoveries in physics in the sense that there has been no experimental evidence supporting it so far. Nevertheless an enormous effort has been expended by many physicists in developing it because of its many unique features and also because of its beauty and coherence.

Of Operator Algebras Vern I

Proceedings of the American Mathematical Society, Volume 92, Number 2, October 1984. COMPLETELY BOUNDED HOMOMORPHISMS OF OPERATOR ALGEBRAS. VERN I. PAULSEN. ABSTRACT. Let A be a unital operator algebra. We prove that if $\rho$ is a completely bounded, unital homomorphism of A into the algebra of bounded operators on a Hilbert space, then there exists a similarity S, with $\|S^{-1}\| \cdot \|S\| = \|\rho\|_{cb}$, such that $S^{-1}\rho(\cdot)S$ is a completely contractive homomorphism. We also show how Rota's theorem on operators similar to contractions and the result of Sz.-Nagy and Foias on the similarity of $\rho$-dilations to contractions can be deduced from this result. 1. Introduction. In [6] we proved that a homomorphism $\rho$ of an operator algebra is similar to a completely contractive homomorphism if and only if $\rho$ is completely bounded. It was known that if S is such a similarity, then $\|S\| \cdot \|S^{-1}\| \geq \|\rho\|_{cb}$. However, at the time we were unable to determine if one could choose the similarity such that $\|S\| \cdot \|S^{-1}\| = \|\rho\|_{cb}$. When the operator algebra is a C*-algebra then Haagerup had shown [3] that such a similarity could be chosen. The purpose of the present note is to prove that for a general operator algebra, there exists a similarity S such that $\|S\| \cdot \|S^{-1}\| = \|\rho\|_{cb}$. Completely contractive homomorphisms are central to the study of the representation theory of operator algebras, since they are precisely the homomorphisms that can be dilated to a *-representation on some larger Hilbert space of any C*-algebra which contains the operator algebra.

Robot and Multibody Dynamics

Robot and Multibody Dynamics: Analysis and Algorithms. Abhinandan Jain, Ph.D., Jet Propulsion Laboratory, 4800 Oak Grove Drive, Pasadena, California 91109, USA. [email protected] ISBN 978-1-4419-7266-8 e-ISBN 978-1-4419-7267-5 DOI 10.1007/978-1-4419-7267-5 Springer New York Dordrecht Heidelberg London. Library of Congress Control Number: 2010938443. © Springer Science+Business Media, LLC 2011. All rights reserved. This work may not be translated or copied in whole or in part without the written permission of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York, NY 10013, USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in connection with any form of information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed is forbidden. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. Printed on acid-free paper. Springer is part of Springer Science+Business Media (www.springer.com). In memory of Guillermo Rodriguez, an exceptional scholar and a gentleman. To my parents, and to my wife, Karen. Preface “It is a profoundly erroneous truism, repeated by copybooks and by eminent people when they are making speeches, that we should cultivate the habit of thinking of what we are doing. The precise opposite is the case.

From String Theory and Moonshine to Vertex Algebras

Preamble. From string theory and Moonshine to vertex algebras. Bong H. Lian, Department of Mathematics, Brandeis University, [email protected] Harvard University, May 22, 2020. Dedicated to the memory of John Horton Conway, December 26, 1937 – April 11, 2020. Acknowledgements: Speaker’s collaborators on the theory of vertex algebras: Andy Linshaw (Denver University), Bailin Song (University of Science and Technology of China), Gregg Zuckerman (Yale University). For their helpful input to this lecture, special thanks to An Huang (Brandeis University), Tsung-Ju Lee (Harvard CMSA), Andy Linshaw (Denver University). Disclaimers: This lecture includes a brief survey of the period prior to and soon after the creation of the theory of vertex algebras, and makes no claim of completeness – the survey is intended to highlight developments that reflect the speaker’s own views (and biases) about the subject. As a short survey of early history, it will inevitably miss many of the more recent important or even towering results, e.g. geometric Langlands, braided tensor categories, conformal nets, applications to mirror symmetry, deformations of VAs, .... Emphases are placed on the mutually beneficial cross-influences between physics and vertex algebras in their concurrent early developments, and the lecture is aimed at a general audience. Outline: 1 Early History 1970s – 90s: two parallel universes 2 A fruitful perspective: vertex algebras as higher commutative algebras 3 Classification: cousins of the Moonshine VOA 4 Speculations. The String Theory Universe. 1968: Veneziano proposed a model (using the Euler beta function) to explain the ‘st-channel crossing’ symmetry in 4-meson scattering, and the Regge trajectory (an angular momentum vs binding energy plot for the Coulomb potential).

Operator Algebras: an Informal Overview 3

OPERATOR ALGEBRAS: AN INFORMAL OVERVIEW. FERNANDO LLEDÓ. arXiv:0901.0232v1 [math.OA] 2 Jan 2009. Contents: 1. Introduction 2. Operator algebras 2.1. What are operator algebras? 2.2. Differences and analogies between C*- and von Neumann algebras 2.3. Relevance of operator algebras 3. Different ways to think about operator algebras 3.1. Operator algebras as non-commutative spaces 3.2. Operator algebras as a natural universe for spectral theory 3.3. Von Neumann algebras as symmetry algebras 4. Some classical results 4.1. Operator algebras in functional analysis 4.2. Operator algebras in harmonic analysis 4.3. Operator algebras in quantum physics References. Abstract. In this article we give a short and informal overview of some aspects of the theory of C*- and von Neumann algebras. We also mention some classical results and applications of these families of operator algebras. 1. Introduction. Any introduction to the theory of operator algebras, a subject that has deep interrelations with many mathematical and physical disciplines, will miss out important elements of the theory, and this introduction is no exception. The purpose of this article is to give a brief and informal overview on C*- and von Neumann algebras which are the main actors of this summer school. We will also mention some of the classical results in the theory of operator algebras that have been crucial for the development of several areas in mathematics and mathematical physics. Being an overview we cannot provide details. Precise definitions, statements and examples can be found in [1] and references cited therein.

Intertwining Operator Superalgebras and Vertex Tensor Categories for Superconformal Algebras, Ii

TRANSACTIONS OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 354, Number 1, Pages 363–385, S 0002-9947(01)02869-0. Article electronically published on August 21, 2001. INTERTWINING OPERATOR SUPERALGEBRAS AND VERTEX TENSOR CATEGORIES FOR SUPERCONFORMAL ALGEBRAS, II. YI-ZHI HUANG AND ANTUN MILAS. Abstract. We construct the intertwining operator superalgebras and vertex tensor categories for the N = 2 superconformal unitary minimal models and other related models. 0. Introduction. It has been known that the N = 2 Neveu-Schwarz superalgebra is one of the most important algebraic objects realized in superstring theory. The N = 2 superconformal field theories constructed from its discrete unitary representations of central charge c < 3 are among the so-called “minimal models.” In the physics literature, there have been many conjectural connections among Calabi-Yau manifolds, Landau-Ginzburg models and these N = 2 unitary minimal models. In fact, the physical construction of mirror manifolds [GP] used the conjectured relations [Ge1] [Ge2] between certain particular Calabi-Yau manifolds and certain N = 2 superconformal field theories (Gepner models) constructed from unitary minimal models (see [Gr] for a survey). To establish these conjectures as mathematical theorems, it is necessary to construct the N = 2 unitary minimal models mathematically and to study their structures in detail. In the present paper, we apply the theory of intertwining operator algebras developed by the first author in [H3], [H5] and [H6] and the tensor product theory for modules for a vertex operator algebra developed by Lepowsky and the first author in [HL1]–[HL6], [HL8] and [H1] to construct the intertwining operator algebras and vertex tensor categories for N = 2 superconformal unitary minimal models.

A Spatial Operator Algebra

International Journal of Robotics Research, vol. 10, pp. 371-381, Aug. 1991. A Spatial Operator Algebra for Manipulator Modeling and Control. G. Rodriguez (1), K. Kreutz-Delgado (2), and A. Jain (1). (1) Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, Pasadena, CA 91109. (2) AMES Department, R-011, Univ. of California, San Diego, La Jolla, CA 92093. Abstract. A recently developed spatial operator algebra for manipulator modeling, control and trajectory design is discussed. The elements of this algebra are linear operators whose domain and range spaces consist of forces, moments, velocities, and accelerations. The effect of these operators is equivalent to a spatial recursion along the span of a manipulator. Inversion of operators can be efficiently obtained via techniques of recursive filtering and smoothing. The operator algebra provides a high-level framework for describing the dynamic and kinematic behavior of a manipulator and for control and trajectory design algorithms. The interpretation of expressions within the algebraic framework leads to enhanced conceptual and physical understanding of manipulator dynamics and kinematics. Furthermore, implementable recursive algorithms can be immediately derived from the abstract operator expressions by inspection. Thus, the transition from an abstract problem formulation and solution to the detailed mechanization of specific algorithms is greatly simplified. 1 Introduction: A Spatial Operator Algebra. A new approach to the modeling and analysis of systems of rigid bodies interacting among themselves and their environment has recently been developed in Rodriguez 1987a and Rodriguez and Kreutz-Delgado 1992b.

Clifford Algebra Analogue of the Hopf–Koszul–Samelson Theorem

Advances in Mathematics 125, 275–350 (1997), article no. AI971608. Clifford Algebra Analogue of the Hopf–Koszul–Samelson Theorem, the $\rho$-Decomposition $C(\mathfrak{g}) = \operatorname{End} V_\rho \otimes C(P)$, and the $\mathfrak{g}$-Module Structure of $\wedge\mathfrak{g}$. Bertram Kostant, Department of Mathematics, Massachusetts Institute of Technology, Cambridge, Massachusetts 02139. Received October 18, 1996. 1. INTRODUCTION 1.1. Let $\mathfrak{g}$ be a complex semisimple Lie algebra and let $\mathfrak{h} \subset \mathfrak{b}$ be, respectively, a Cartan subalgebra and a Borel subalgebra of $\mathfrak{g}$. Let $n = \dim \mathfrak{g}$ and $l = \dim \mathfrak{h}$ so that $n = l + 2r$ where $r = \dim \mathfrak{g}/\mathfrak{b}$. Let $\rho$ be one-half the sum of the roots of $\mathfrak{h}$ in $\mathfrak{b}$. The irreducible representation $\pi_\rho : \mathfrak{g} \to \operatorname{End} V_\rho$ of highest weight $\rho$ is distinguished among all finite-dimensional irreducible representations of $\mathfrak{g}$ for a number of reasons. One has $\dim V_\rho = 2^r$ and, via the Borel–Weil theorem, $\operatorname{Proj} V_\rho$ is the ambient variety for the minimal projective embedding of the flag manifold associated to $\mathfrak{g}$. In addition, like the adjoint representation, $\pi_\rho$ admits a uniform construction for all $\mathfrak{g}$. Indeed let $SO(\mathfrak{g})$ be defined with respect to the Killing form $B_{\mathfrak{g}}$ on $\mathfrak{g}$. Then if $\operatorname{Spin} \operatorname{ad} : \mathfrak{g} \to \operatorname{End} S$ is the composite of the adjoint representation $\operatorname{ad} : \mathfrak{g} \to \operatorname{Lie} SO(\mathfrak{g})$ with the spin representation $\operatorname{Spin} : \operatorname{Lie} SO(\mathfrak{g}) \to \operatorname{End} S$, it is shown in [9] that $\operatorname{Spin} \operatorname{ad}$ is primary of type $\pi_\rho$. Given the well-known relation between $S \otimes S$ and exterior algebras, one immediately deduces that the $\mathfrak{g}$-module structure of the exterior algebra $\wedge\mathfrak{g}$, with respect to the derivation extension, $\theta$, of the adjoint representation, is given by $\wedge\mathfrak{g} = 2^l\, V_\rho \otimes V_\rho$. (a) The equation (a) is only skimming the surface of a much richer structure.

Descriptive Set Theory and Operator Algebras

Descriptive Set Theory and Operator Algebras. Edward Effros (UCLA), George Elliott (Toronto), Ilijas Farah (York), Andrew Toms (Purdue). June 18, 2012–June 22, 2012. 1 Overview of the Field. Descriptive set theory is the theory of definable sets and functions in Polish spaces. Its roots lie in the Polish and Russian schools of mathematics from the early 20th century. A central theme is the study of regularity properties of well-behaved (say Borel, or at least analytic/co-analytic) sets in Polish spaces, such as measurability and selection. In the recent past, equivalence relations and their classification have become a central focus of the field, and this was one of the central topics of this workshop. One of the original co-organizers of the workshop, Greg Hjorth, died suddenly in January 2011. His work, and in particular the notion of a turbulent group action, played a key role in many of the discussions during the workshop. Functional analysis is of course a tremendously broad field. In large part, it is the study of Banach spaces and the linear operators which act upon them. A one paragraph summary of this field is simply impossible. Let us say instead that our proposal will be concerned mainly with the interaction between set theory, operator algebras, and Banach spaces, with perhaps an emphasis on the first two items. An operator algebra can be interpreted quite broadly ([4]), but is frequently taken to be an algebra of bounded linear operators on a Hilbert space closed under the formation of adjoints, and furthermore closed under either the norm topology (C∗-algebras) or the strong operator topology (W∗-algebras).

Vertex Operator Algebras and 3D N = 4 Gauge Theories

Prepared for submission to JHEP. Vertex Operator Algebras and 3d N = 4 gauge theories. Kevin Costello, Davide Gaiotto. Perimeter Institute for Theoretical Physics, Waterloo, Ontario, Canada N2L 2Y5. Abstract: We introduce two mirror constructions of Vertex Operator Algebras associated to special boundary conditions in 3d N = 4 gauge theories. We conjecture various relations between these boundary VOA's and properties of the (topologically twisted) bulk theories. We discuss applications to the Symplectic Duality and Geometric Langlands programs. arXiv:1804.06460v1 [hep-th] 17 Apr 2018. Contents: 1 Introduction; 1.1 Structure of the paper; 2 Deformable (0, 4) boundary conditions; 2.1 Generalities; 2.2 Nilpotent supercharges; 2.3 Deformations of boundary conditions; 2.4 Example: free hypermultiplet; 2.5 Example: free vectormultiplet; 2.6 Index calculations; 2.6.1 Example: hypermultiplet indices; 2.6.2 Example: vectormultiplet half-indices; 3 Boundary conditions and bulk observables; 3.1 Boundary VOA and conformal blocks; 3.2 Bulk operator algebra and Ext groups; 3.3 The free hypermultiplet in the SU(2)_H-twist; 3.4 The free hypermultiplet in the SU(2)_C-twist; 3.5 A computation for a U(1) gauge field; 3.6 Bulk lines and modules; 3.7 Topological boundary conditions; 4 The H-twist of standard N = 4 gauge theories; 4.1 Symmetries of H-twistable boundaries; 4.2 Conformal blocks and Ext groups; 4.3 U(1) gauge theory with one flavor; 4.4 A detailed verification of the duality; 5 More elaborate examples of H-twist VOAs; 5.1 U(1) gauge theory with N flavors; 5.1.1 The T[SU(2)] theory.

Title a New Stage of Vertex Operator Algebra (Algebraic Combinatorics

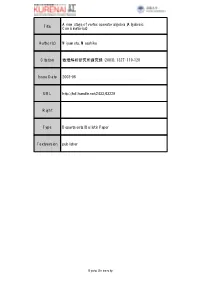

Title: A new stage of vertex operator algebra (Algebraic Combinatorics). Author(s): Miyamoto, Masahiko. Citation: 数理解析研究所講究録 (2003), 1327: 119-128. Issue Date: 2003-06. URL: http://hdl.handle.net/2433/43229. Type: Departmental Bulletin Paper. Textversion: publisher. Kyoto University. A new stage of vertex operator algebra. Masahiko Miyamoto, Department of Mathematics, University of Tsukuba, at RIMS, Dec. 18, 2002. 1 Introduction. Historically, a conformal field theory is a mathematical method for physical phenomena, for example, a string theory. In a string theory, a particle (or a string) is expressed by a simple module. Physicists constructed many examples of conformal field theories whose simple modules were explicitly determined. Such conformal field theories are called solvable models. It is natural to divide theories into two types. One has infinitely many kinds of particles or simple modules, the other has only finitely many simple modules. For example, a conformal field theory for free bosons has infinitely many simple modules and lattice theories are of finite type. In 1988, in connection with the moonshine conjecture (a mysterious relation between the largest sporadic finite simple group “Monster” and the classical elliptic modular function $j(\tau)=q^{-1}+744+196884q+\cdots$), a concept of vertex operator algebra was introduced as a conformal field theory with a rigorous axiom. In this paper, we will treat a vertex operator algebra of finite type. Until 1992, in the known theories of finite type, all modules were completely reducible. This fact sounds natural for physicists, because a module in the string theory was thought of as a bunch of strings, which should be a direct sum of simple modules.

Physics © Springer-Verlag 1983

Communications in Mathematical Physics. Commun. Math. Phys. 92, 163-178 (1983). © Springer-Verlag 1983. Pseudodifferential Operators on Supermanifolds and the Atiyah-Singer Index Theorem. Ezra Getzler, Department of Mathematics, Harvard University, Cambridge, MA 02138, USA. Abstract. Fermionic quantization, or Clifford algebra, is combined with pseudodifferential operators to simplify the proof of the Atiyah-Singer index theorem for the Dirac operator on a spin manifold. Introduction. Recently, an outline of a new proof of the Atiyah-Singer index theorem has been proposed by Alvarez-Gaumé [1], extending unpublished work of Witten. He makes use of a path integral representation for the heat kernel of a Hamiltonian that involves both bosonic and fermionic degrees of freedom. In effect, a certain democracy is created between the manifold and the fermionic variables corresponding to the exterior algebra Λ*T*M at each point x ∈ M. In this paper, this idea is pursued within the context of Hamiltonian quantum mechanics, for which the powerful calculus of pseudodifferential operators exists, permitting a rigorous treatment of Alvarez-Gaumé's ideas. Most of the work will be to unify pseudodifferential operators with their fermionic equivalent, the sections of a Clifford algebra bundle over the manifold. Using a pseudodifferential calculus based on the papers of Bokobza-Haggiag [7] and Widom [17,18], but incorporating a symbol calculus for the Clifford algebra as well, an explicit formula for composition of principal symbols is derived (0.7). This permits a complete calculation of the index of the Dirac operator to be performed, modeled on a proof of Weyl's theorem on a compact Riemannian manifold M.