And Metacritic Scores?

Recommended publications

Frank G. Bosman and Archibald L. H. M. van Wieringen Reading The Book of Joseph A Communication-Oriented Analysis of Far Cry 5 Abstract In the game Far Cry 5, a book called The Book of Joseph plays an important role. It is the confession, autobiography and sermon compilation of Joseph Seed, the leader of the fundamentalist, Christian-inspired violent Doomsday cult called “Project at Eden’s Gate”. In the game, the player is tasked to defeat Seed’s grip on – fictional – Hope County, Montana (USA). The Book of Joseph is not only found in the game, where its content is kept hidden from the player, but is also featured in a live-action trailer, called The Baptism. Most importantly, Joseph Seed’s book has also been published as a physical object and was distributed to the first 2,000 buyers of the Mondo edition of the game. In this article, the authors argue that the communicative function of The Book of Joseph differs significantly from one medial object to the next (game, trailer, book), influenced by the intertextual and intermedial relationships between those medial objects and by their exclusive characteristics. Using a communication-oriented method of text analysis, the authors investigate the various communicative processes within the different “texts”, in order to establish the narrative loci of the book’s materiality. Keywords Far Cry 5, The Book of Joseph, Communication-Oriented Method, Intertextuality, Cult, Intermediality, Materiality Biographies Frank G. Bosman is a theologian of culture and a senior researcher at the Tilburg School of Catholic Theology, Tilburg University, the Netherlands. -

Far Cry 5 Editor for PC Download

Far Cry 5's Awesome Map Editor Will Let You Create Just About Anything. Far Cry 5 is one game I'm unabashedly looking forward to in 2018. For one thing, I think the entire Far Cry series is a blast to play. Even if this one keeps the exact same format as the past two (big open world filled with wildlife, a crazy but charismatic villain, etc.), I'll be a happy camper. For another, the game's wacky DLC takes you to outer space and back in time, and pits you against zombies, in three separate adventures. And now we've learned about Far Cry 5: Arcade, a map maker that allows players to create their own maps using assets from Far Cry 5, "Far Cry 4 (including yetis) and Far Cry Primal, as well as Assassin's Creed IV: Black Flag, Assassin's Creed Unity, and Watch Dogs," according to Ubisoft. -

Economic Model of Microtransactions in Video Games

Journal of Economic Science Research | Volume 01 | Issue 01 | 2018 http://ojs.bilpublishing.com/index.php/jesr REVIEW Economic Model of Microtransactions in Video Games Nenad Zoran Tomić* Faculty of Economics - University of Kragujevac, Serbia ARTICLE INFO Article history: Received: 29 December 2018 Accepted: 2 January 2019 Published: 4 January 2019 Keywords: Microtransactions, Freemium games, Pay-to-win, Mobile games, Video games ABSTRACT Microtransactions denote a payment made for purchase of mobile phone application or additional content in video games. The basic revenue principle for publisher was to sell the entire content at once, with the buyer having to pay the game as a whole. Trends from mobile applications and mobile games market have slowly transferred to PC games and console video games. About one half of total revenue of big video games publishers comes from microtransactions. The topics of this paper are the economic effects of microtransactions on video game industry business model. The scope of microtransactions and different implementing procedures will be discussed in paper. The goal is to determine the change of the position of all stakeholders involved in the process of creating and publishing video games. 1. Introduction After initial success, the video game industry has undergone a great expansion in the last two decades of the 20th century. [Second column:] Due to the high cost of equipment and unavailability of professional staff, the number of companies on the supply side was initially very small. With increasing demand, more and more development studios and publishers have -

Norton 360 for Gamers Game Optimization Testing Windows 10

Norton 360 for Gamers Game Optimization Testing, Windows 10, June 2021. Document: Norton 360 for Gamers Game Optimization Testing (Edition 1). Authors: J. Han, D. Wren. Company: PassMark Software. Date: 22 June 2021. Edition: 1. File: Norton_Game_Optimization_Testing_2021_Ed1.docx. Table of Contents: Revision History; Executive Summary; Overall Score; Products List; Test Results (Phase 1, Phase 2); Conclusion -

Far Cry 4 for Xbox 360 LT 3.0 Torrent Download

far cry 4 for xbox 360 lt 3.0 torrent download - All results. Download Far Cry 4 for Xbox 360 LT 30 - Pristavkitutru pristavkitutru/tcatalog_elem/95 Far Cry 4 is the continuation of the well-known series of computer games, an open-world first-person shooter. The action takes place in a region called Far Cry 4 [REGION FREE/RUSSOUND] (LT+30) » Download games on xbox-torrentru/xbox_360/xbox360/120-far-cry-4- region-free-russound-lt-30html Download torrent Far Cry 4 [REGION FREE/RUSSOUND] ( LT + 30 ) its famous predecessor, Far Cry 4 on Xbox 360 offers the signature Far Cry 4 (2014) XBOX360 - Download the game via torrent torrent4gamescom › Action Similar Publisher: Ubisoft Region: PAL/NTSC-J Edition type: Licensed Firmware: LT+ 30 (XGD3/16537) Interface language: Russian Translation type: Text+ Videos 5:57 How to download Far Cry 4 on Xbox 360 (LT 30, LT 20) Z Gameplays YouTube - 19 Dec 2016 2:00 Far Cry 4 XBOX 360 LT JTAG RGH Download Torrent MULTI [ITA] XBOX 360 TORRENT YouTube - 16 Sep 2016 4:04 Free Download Far Cry 3 And Far Cry 4 Game Torrent For Xbox Learning Xbox 360 YouTube - 2 Nov 2017 6:53 FAR CRY 4 XBOX 360 DOWNLOAD TORRENT GM GM YouTube - 27 Jul 2015 15:09 how to download far cry 4 xbox 360 jtag only XxicenoxX gaming YouTube - 14 Oct 2015 4:25 List of games for XBOX 360 Freeboot/LT 30 500Gb GameStore YouTube - 27 Feb 2015 7:08 How to run Far Cry 4 on a dual-core processor!!! BEIFA YouTube - 26 Mar 2015 4:43 HOW TO GET THE FAR CRY DLC -

The Sacred and the Digital. Critical Depictions of Religions in Digital Games

religions Editorial The Sacred and the Digital. Critical Depictions of Religions in Digital Games Frank G. Bosman Department of Systematic Theology and Philosophy, Tilburg University, 5037 AB Tilburg, The Netherlands; [email protected] Received: 17 January 2019; Accepted: 21 February 2019; Published: 22 February 2019 Abstract: In this editorial, guest editor Frank Bosman introduces the theme of the special issue on critical depictions of religion in video games. He does so by giving a tentative oversight of the academic field of religion and video game research up until present day, and by presenting different ways in which game developers critically approach (institutionalized, fictional and non-fictional) religions in-game, of which many are discussed by individual authors later in the special issue. In this editorial, Bosman will also introduce all articles of the special issue at hand. Keywords: game studies; religion studies; games and religion studies; religion criticism 1. Introduction Video game studies are a relative young but flourishing academic discipline. But within game studies, however, the perspective of religion and spirituality is rather neglected, both by game scholars and religion scholars. Although some fine studies have appeared, like Halos & Avatars (Detweiler 2010), Godwired. Religion, ritual, and virtual reality (Wagner 2012), eGods. Faith versus fantasy in computer gaming (Bainbridge 2013), Of Games and God (Schut 2013), Playing with Religion in digital games (Campbell and Grieve 2014), Methods for Studying Video Games and Religion (Sisler et al. 2018), and Gaming and the Divine (Bosman 2019), still little attention has been given to the depiction of religion, both institutionalized and privatized, both fantasy and non-fictional, deployed by game developers for their games’ stories, aesthetics, and lore. -

DCM Case Study

UBISOFT Far Cry 5 - 2018 Campaign Details - Sector: Gaming; Target Audience: 16-34 Men; Package: Film Packs; Media Agency: Wavemaker; Creative Agency: DDB. Background - In 2018, Ubisoft launched its latest instalment of the Far Cry franchise – a first-person shooter game. Amongst stern competition from a wide range of publishers, Ubisoft needed the launch campaign for Far Cry 5 to drive awareness, message takeout and ultimately purchase intent. Plan - Cinema’s young, male and highly engaged audience made it a brilliant fit for Ubisoft – it is the ultimate immersive environment. Moreover, cinema provided Ubisoft with a brilliant opportunity to increase the impact of its campaign by aligning Far Cry 5 with similar entertainment content and engaging cinema trailers. - Ubisoft’s cinema campaign was twofold. Firstly, they ran a standard 30” solus copy in Cineworld screenings of Tomb Raider but wanted to take a different innovative approach for the rest of the campaign. Before screenings of Ready Player One, Pacific Rim: Uprising, and Tomb Raider in other cinema chains, Ubisoft ran 4x5” blips at various points in the reel preceding the 30” ad. Ubisoft wanted to measure the effectiveness of using short blips before a 30” advert, hoping to increase the impact and recall of the 30” ad and the campaign as a whole. Each blip contained no branding but a 5” live-action replication of the story mode; they teased at the storyline and intrigued the audience, drawing their attention before the 30” ad. - The 30” ad put the gamer in the game, featuring real-life actors replicating the Far Cry 5 story mode. -

Far Cry 4 Free Download Fast Download Far Cry 5 Torrent Full PC Game Free Download

Far Cry 5 Torrent Full PC Game Free Download. Far Cry 5 Torrent for PC, Highly Compressed Game. Far Cry 5 for PC is a high-grade shooter game, announced and developed by Ubisoft Montreal. It is the fifth installment in the Far Cry series and offers both single-player and multiplayer gameplay. The player controls an unnamed character and explores the game's open world, Hope County. The game's map is divided into three parts, and these sections display problem settings. The player can assemble a force of villagers to attack the enemy, which may include skilled characters or even non-player characters. Players can also use wild animals against the enemy; these animals attack on the player's order. In-game hunting essentials provide a variety of panfish to use in battle. The player also has a hired companion for security, who can be used to start a dispute with another friend. The game is set in Hope County, the game's open world, under the sway of a man named Joseph. He claims that God called him to provide security to the people of Hope County. In fact, he wants the Hope County community to be in his thrall. He created a scheme under which the residents of the area could not go out for help. He takes the title "Father" and a good relationship with them. -

Death Narratives: a Typology of Narratological Embeddings of Player's Death in Digital Games by Frank G

Untitled. Photographer: Pawel Kadysz (https://stocksnap.io/photo/OZ4IBMDS8E). Special Issue: Video Gaming and Death, edited by John W. Borchert. Issue 09 (2018). Articles: Introduction to a Special Issue on Video Gaming and Death by John W. Borchert, 1; Death Narratives: A Typology of Narratological Embeddings of Player's Death in Digital Games by Frank G. Bosman, 12; No Sympathy for Devils: What Christian Video Games Can Teach Us About Violence in Family-Friendly Entertainment by Vincent Gonzalez, 53; Perilous and Peril-Less Gaming: Representations of Death with Nintendo’s Wolf Link Amiibo by Rex Barnes, 107; “You Shouldn’t Have Done That”: “Ben Drowned” and the Uncanny Horror of the Haunted Cartridge by John Sanders, 135; Win to Exit: Perma-Death and Resurrection in Sword Art Online and Log Horizon by David McConeghy, 170; Death, Fabulation, and Virtual Reality Gaming by Jordan Brady Loewen, 202; The Self Across the Gap of Death: Some Christian Constructions of Continued Identity from Athenagoras to Ratzinger and Their Relevance to Digital Reconstitutions by Joshua Wise, 222. Reviews: Graveyard Keeper. A Review by Kathrin Trattner, 250. Interviews: Interview with Dr. Beverley Foulks McGuire on Video-Gaming, Buddhism, and Death by John W. Borchert, 259. Reports: Dying in the Game: A Perceptive of Life, Death and Rebirth Through World of Warcraft by Wanda Gregory, 265. Death Narratives: A Typology of Narratological Embeddings of Player's Death in Digital Games. Frank G. Bosman. Abstract: Ludologically, the death of the game’s protagonist (also known as player’s death or avatar death) is one of the most prominent feedback systems of almost all digital games. -

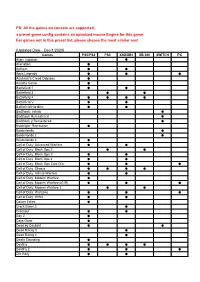

The Games on Console Are Supported, a Preset Game Config Contains an Optimized Mouse Engine for This Game

PS: All games on console are supported; each preset game config contains a mouse engine optimized for that game. For games not in this preset list, please choose the most similar one! (Updated: Dec/1/2020) Games PS5/PS4 PS3 XSX/XB1 XB 360 SWITCH PC Alien: Isolation ● Alienation ● Anthem ● ● Apex Legends ● ● ● Assassin's Creed Odyssey ● Assetto Corsa ● Battlefield 1 ● ● Battlefield 3 ● ● Battlefield 4 ● ● ● ● Battlefield V ● ● Battlefield Hardline ● ● BioShock: Infinite ● BioShock Remastered ● BioShock 2 Remastered ● Blacklight: Retribution ● Borderlands ● Borderlands 2 ● Borderlands 3 ● Call of Duty: Advanced Warfare ● ● Call of Duty: Black Ops 2 ● ● Call of Duty: Black Ops 3 ● ● Call of Duty: Black Ops 4 ● ● Call of Duty: Black Ops Cold War ● ● ● Call of Duty: Ghosts ● ● ● ● Call of Duty: Infinite Warfare ● ● Call of Duty: Modern Warfare ● Call of Duty: Modern Warfare (2019) ● ● ● Call of Duty: Modern Warfare 3 ● ● Call of Duty: Warzone ● ● ● Call of Duty: WWII ● ● Conan Exiles ● Crack Down 3 ● Crossout ● ● Day Z ● Days Gone ● Dead by Daylight ● ● Dead Rising 3 ● Dead Rising 4 ● Death Stranding ● Destiny ● ● ● ● Destiny 2 ● ● ● Dirt Rally ● ● DIRT 4 ● DJMax Respect ● Doom ● ● ● Doom: Eternal ● DriveClub ● Dying Light ● ● Evolve ● ● F1 2015~2020 ● Fallout 4 ● ● Far Cry 3 ● Far Cry 4 ● Far Cry 5 ● ● Far Cry Primal ● ● For Honor ● Fortnite: Battle Royale ● ● ● Forza 5~7 ● Forza Horizon 2~4 ● Gears of War 3 ● Gears of War 4 ● Gears of War 5 ● Gears of War: Ultimate Edition ● Ghost Recon: Breakpoint ● Ghost Recon: Wildlands ● ● Grand Theft -

Always Already Monsters—Bioshock's (2007) 'Splicers

ALWAYS ALREADY MONSTERS—BIOSHOCK’S (2007) ‘SPLICERS’ AS COMPUTATIONAL OTHERS Jaroslav Švelch (Charles University and University of Bergen) Abstract: The article explores the manufacturing of monsters in video games, using the case of the influential 2007 first-person shooter BioShock, and ‘splicers’—its most numerous, zombie-like enemies. I combine two methodological perspectives on the ‘manufacturing’ of splicers by analyzing [a] the title’s developer commentary and other official paratexts to trace the design of splicers, and [b] the game’s embedded narrative to reconstruct the diegetic backstory of splicers. I argue that video game enemies, including splicers, are ‘computational others’, who may appear human on the level of representation, but whose behavior is machinic, and driven by computational algorithms. To justify the paradoxical relationship between their human-like representation and machinic behavior, BioShock includes an elaborate narrative that explains how the citizens of the underwater city of Rapture were dehumanized and transformed into hostile splicers. The narrative of dehumanization, explored following Haslam’s dehumanization theory (2006), includes [a] transforming splicers into atomized creatures by depriving them of political power and social bonds, [b] creating fungible and interchangeable enemies through splicers’ masks and bodily disintegration, [c] justifying splicers’ blindness to context and their simplistic behavior by portraying them as mentally unstable addicts. The article concludes that all video game enemies are inherently monstrous, and that critique of video game representation should focus on how games fail to make monsters human, rather than how games render humans monstrous or dehumanized. Keywords: monsters; video games; first-person shooter; BioShock (2007); zombies; otherness; computational other; hauntology. -

The Sacred & the Digital

Tilburg University. The Sacred & the Digital. Bosman, Frank. DOI: 978-3-03897-831-2. Publication date: 2019. Document Version: Publisher's PDF, also known as Version of record. Link to publication in Tilburg University Research Portal. Citation for published version (APA): Bosman, F. (Ed.) (2019). The Sacred & the Digital: Critical Depictions of Religions in Video Games. MDPI AG. https://doi.org/978-3-03897-831-2 Download date: 27. sep. 2021. The Sacred & the Digital: Critical Depictions of Religions in Video Games. Edited by Frank G. Bosman. Printed Edition of the Special Issue Published in Religions, www.mdpi.com/journal/religions. Special Issue Editor Frank G. Bosman. MDPI • Basel • Beijing • Wuhan • Barcelona • Belgrade. Special Issue Editor Frank G.