Two-Dimensional Gesture Mapping for Interactive Real-Time Electronic Music Instruments

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

A Guide for Creating Virtual Video/Audio Ensembles Written By

A guide for creating virtual video/audio ensembles

Written by Ben Sellers, with contributions from Wiltshire Music Connect Associate Luke Pickett and Lee Hextall from Lincolnshire Music Service.

This document guides you through the process of creating an audio or video ‘virtual ensemble’ performance. The process takes time and may require you to learn some new skills, but it is worth it: your pupils will develop their identities as musicians, a new audience will be reached, and the profile of the department and school will be raised. Get an idea of what is possible by watching this video from Wiltshire Young Musicians, created by Matt Thorpe.

To create an audio recording you will need:
• Sibelius, Musescore (free) or alternative sheet music software
• Audio editing software (see below)
• A Google Drive or Dropbox account

To create a music video you will also need:
• Video editing software (see below)
• A YouTube or Vimeo account to publish the videos online
• Appropriate pupil filming consent

Each of the following steps is detailed below:
1. Choose your piece of music
2. Decide if you want to create an audio recording or a music video
3. Create an arrangement
4. Send instructions, sheet music and backing track(s) to pupils
5. Edit together the recordings
6. Publish

1. Choose your piece of music
Your chosen piece should be:
a. Fairly easy to play, with no tricky rhythms
b. Something popular that the musicians already know
c. The same tempo throughout
d. Less than 4 minutes in length

2. Decide if you want to create an audio recording or a music video.

Pro Tools 10 Torrent Download

These standard Pro Tools Essential drivers can be found inside of %%os%%, or are available for download from Windows® Update. While these Audio Controller drivers are basic, they support the primary hardware functions. Visit this link to learn how to install these M-AUDIO drivers.

How to Automatically Download and Update: Recommendation: Download DriverDoc, a driver update tool that is recommended for Windows users who are inexperienced in manually updating M-AUDIO Audio Controller drivers. DriverDoc saves time and headaches by making sure that you are downloading and installing the correct Pro Tools Essential drivers. In addition, DriverDoc not only ensures your Audio Controller drivers stay updated, but with a database of over 2,150,000 drivers (database updated daily), it keeps all of your other PC's drivers updated as well.

Pro Tools Essential Update FAQ

Why Are Pro Tools Essential Driver Updates Avoided?
Mainly, users avoid updating Pro Tools Essential drivers because they don't want to risk damaging their hardware.

What's the Process for Updating Pro Tools Essential Drivers?
Device drivers for Pro Tools Essential can be updated manually using the Windows Device Manager, or automatically with a driver scan and update tool.

Which Operating Systems Work with Pro Tools Essential Drivers?
Currently, Pro Tools Essential has device drivers for Windows.

What's the Role of M-AUDIO Audio Controller Drivers?
Drivers are mini software programs created by M-AUDIO that allow your Pro Tools Essential hardware to communicate effectively with your operating system.

Schwachstellen der kostenfreien Digital Audio Workstations (DAWs)

Schwachstellen der kostenfreien Digital Audio Workstations (DAWs) / Disadvantages of using free Digital Audio Workstations (DAWs)

BACHELOR’S THESIS submitted in partial fulfillment of the requirements for the degree of Bachelor of Science in Media Informatics and Visual Computing by Filip Petkoski, Registration Number 0727881, to the Faculty of Informatics at the Vienna University of Technology.

Advisor: Associate Prof. Dipl.-Ing. Dr.techn Hilda Tellioglu
Assistance: Univ.Lektor Dipl.-Mus. Gerald Golka

Vienna, 14th April, 2016. Filip Petkoski, Hilda Tellioglu.

Technische Universität Wien, A-1040 Wien, Karlsplatz 13, Tel. +43-1-58801-0, www.tuwien.ac.at

Declaration of Authorship

Filip Petkoski, Wienerbergstrasse 16-20/33/18, 1120 Wien

I hereby declare that I have written this thesis independently, that I have fully specified the sources and aids used, and that I have marked as borrowed, in every case citing the source, all parts of the thesis (including tables, maps and figures) that are taken from other works or from the internet, whether verbatim or in substance.

Vienna, 14th April, 2016. Filip Petkoski.

Abstract

Modern professional music production today is unthinkable without the use of Digital Audio Workstations (DAWs).

Recording, Mixing, and Composing with Elementary Students NAfME Nashville 2014

Learn how to use technology tools to teach and incorporate recording, composing, and mixing music with elementary students. Many tools exist for students at all levels to make music and create professional quality recordings while learning the building blocks of music and getting excited about composing.

Catherine Dwinal: elementary music technology blogger and online personality, national clinician, former teacher, Quaver Music team member. www.celticnovelist.com www.cdwinal.com

Websites:
• Noteflight: $49/yr, or $195 for a site license through Music First (www.noteflight.com). Online cloud-based music notation software.
• Audacity: Free (http://audacity.sourceforge.net/download/). Downloadable audio editing software.
• Mixcraft: $74.95 (http://www.acoustica.com/mixcraft/download.htm). A powerful music production and multi-track recording workstation that comes packed with thousands of music loops and dozens of audio effects and virtual instruments.
• GarageBand: Free (http://www.apple.com/mac/garageband/). A full-featured recording studio. Record vocals and instruments and place professionally recorded loops.
• Vocaroo: Free (http://vocaroo.com). Free online recorder that allows you to share recordings afterwards.
• Soundcloud: Free (https://soundcloud.com). An audio platform that enables sound creators to upload, record, promote and share their originally-created sounds.
• Soundation: $199.00 (http://www.musicfirst.com/soundation-4-education). A cloud-based online music studio with recording effects, virtual instruments and over 700 free loops and sounds.
• Incredibox: Free (http://www.incredibox.com). Online beat boxing generator.
• Isle of Tune: Free (www.isleoftune.com). Build neighborhoods and create music.
• Button Beats: Free (www.buttonbeats.com). A collection of virtual instruments and mixers to get the dance party started.

A NIME Reader Fifteen Years of New Interfaces for Musical Expression

Current Research in Systematic Musicology, Volume 3

A NIME Reader: Fifteen Years of New Interfaces for Musical Expression

Editors: Alexander Refsum Jensenius (Department of Musicology, University of Oslo, Oslo, Norway) and Michael J. Lyons (Department of Image Arts and Sciences, Ritsumeikan University, Kyoto, Japan)

Series editors: Rolf Bader, Musikwissenschaftliches Institut, Universität Hamburg, Hamburg, Germany; Marc Leman, University of Ghent, Ghent, Belgium; Rolf Inge Godoy, Blindern, University of Oslo, Oslo, Norway

More information about this series at http://www.springer.com/series/11684

ISSN 2196-6966, ISSN 2196-6974 (electronic). ISBN 978-3-319-47213-3, ISBN 978-3-319-47214-0 (eBook). DOI 10.1007/978-3-319-47214-0. Library of Congress Control Number: 2016953639.

© Springer International Publishing AG 2017. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use.

Fl Studio 12.4 Reg Key Free Download Fl Studio 12 Premium Crack

Oct 27, 2017. FL Studio Producer Edition 12.5. This time ReddSoft is sharing FL Studio version 12.4 together with its crack, meaning that the crack file and the installer are bundled in a single file (.rar) that you only need to download and install. FL Studio 12 Key Plus Crack & Serial Number Full Download Latest Version is here. It is a very powerful and famous music software. This is the best software to manage and control music files.

A subtractive synthesizer plugin. Exclusive to FL Studio 12.3 and up! Get a bit of acid house into your songs. €74.00. Bundle offer: FX Plugin Bundle. This bundle pack gives you 6 plugins at a 30% discount!

Over 400 free VST plugins and VST instruments to use with FL Studio, Ableton Live, and Pro Tools. Includes Bass, Synths, Pianos, Strings. These are the best free VST plugins and free VST effect plugins that you can download online. There are actually several ways to create a ‘tape stop' effect in FL Studio (Grossbeat, WaveTraveller, etc), but I think the easiest route is to use. Free Download Dada Life's Sausage Fattener VST Plugin.

FL Studio Soundpacks: Flstudiosoundpacks.com is a great resource for Beat Makers and Musicians. Our Sound Packs and Hip Hop Loops are some of the best production tools on the internet and at a. Download all plugins for FL Studio 11. Download free VST plugins, free synth VST, autotune VST, Drum sound VST, choir VST, Orchestra VST, and much more free VST plugins.

Unit 4 Sound Editing and Mixing

UNIT 4 SOUND EDITING AND MIXING

Structure
4.0 Introduction
4.1 Learning Outcomes
4.2 Why Post-Production
4.3 Concept of Sound Editing
4.4 The Process of Editing
4.5 Difference Between Destructive and Non-Destructive Editing
4.6 Digital Audio Workstation (DAW)
4.6.1 Components of DAW
4.6.2 Functionality
4.7 Open Source Softwares
4.7.1 Software Interface
4.7.2 Editing Audio
4.7.3 Basic Edit Tools
4.8 Proprietary Software
4.8.1 Different Tool Bars
4.8.2 Editing Features
4.9 Equalising and Sound Mixing
4.10 Audio Output
4.11 Metadata Tagging
4.12 Let Us Sum-Up
4.13 References and Further Reading
4.14 Check Your Progress: Possible Answers

4.0 INTRODUCTION
In the first unit of this Block, you went through the planning and production processes of a radio programme. After that, you also learnt about the recording process. In this Unit, let us understand the editing and mixing of sound, the two processes which together go by the name ‘Post-Production’. They are called post-production because these two stages invariably come after the production stage, i.e. the recording of a programme. Except in very simple cases, post-production is a necessary stage in radio programme production to give your programmes the necessary completeness, technical quality, richness and professional finesse. In this unit you will understand the various software options available in the market. For the purposes of this unit, we shall use the open source audio editing/recording software called Audacity.

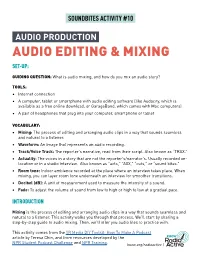

Audio Editing & Mixing

SOUNDBITES ACTIVITY #10: AUDIO PRODUCTION
AUDIO EDITING & MIXING

SET-UP

GUIDING QUESTION: What is audio mixing, and how do you mix an audio story?

TOOLS:
• Internet connection
• A computer, tablet or smartphone with audio editing software (like Audacity, which is available as a free online download, or GarageBand, which comes with Mac computers)
• A pair of headphones that plug into your computer, smartphone or tablet

VOCABULARY:
• Mixing: The process of editing and arranging audio clips in a way that sounds seamless and natural to a listener.
• Waveform: An image that represents an audio recording.
• Track/Voice Track: The reporter’s narrative, read from their script. Also known as “TRAX.”
• Actuality: The voices in a story that are not the reporter’s/narrator’s. Usually recorded on-location or in a studio interview. Also known as “acts,” “AXX,” “cuts,” or “sound bites.”
• Room tone: Indoor ambience recorded at the place where an interview takes place. When mixing, you can layer room tone underneath an interview for smoother transitions.
• Decibel (dB): A unit of measurement used to measure the intensity of a sound.
• Fade: To adjust the volume of sound from low to high or high to low at a gradual pace.

INTRODUCTION
Mixing is the process of editing and arranging audio clips in a way that sounds seamless and natural to a listener. This activity walks you through that process. We’ll start by sharing a step-by-step guide to audio mixing. Then, we’ll offer you audio files to practice with. This activity comes from the YR Media DIY Toolkit: How To Make A Podcast article by Teresa Chin, and from resources developed by the NPR Student Podcast Challenge and NPR Training.

An Ambisonics-Based VST Plug-In for 3D Music Production

POLITECNICO DI MILANO, Scuola di Ingegneria dell'Informazione, POLO TERRITORIALE DI COMO. Master of Science in Computer Engineering.

An Ambisonics-based VST plug-in for 3D music production.

Supervisor: Prof. Augusto Sarti
Assistant Supervisor: Dott. Salvo Daniele Valente
Master Graduation Thesis by: Daniele Magliozzi, Student Id. number 739404
Academic Year 2010-2011

To me

Abstract
The importance of sound in virtual reality and multimedia systems has led to the definition of today's 3DA (Tridimensional Audio) techniques, which allow the creation of an immersive virtual sound scene. This is made possible by virtually placing audio sources anywhere in the space around a listening point and reproducing the sound field they generate by means of suitable DSP methodologies and a system of two or more loudspeakers. The latter configuration defines multichannel reproduction techniques, among which Ambisonics Surround Sound exploits the concept of spherical harmonics sound `sampling'. The intent of this thesis has been to develop a software tool for music production that can manage several source signals and virtually place them anywhere in a (3D) space surrounding a listening point, employing the Ambisonics technique. The developed tool, called AmbiSoundSpazializer, belongs to the plug-in software category, i.e. an expansion of already existing software that plays the role of host.

Summary
Sound plays a fundamental role in the simulation of virtual realities and in multimedia systems.

Authoring Interactive Media : a Logical & Temporal Approach Jean-Michael Celerier

Authoring interactive media : a logical & temporal approach
Jean-Michael Celerier

To cite this version: Jean-Michael Celerier. Authoring interactive media : a logical & temporal approach. Computation and Language [cs.CL]. Université de Bordeaux, 2018. English. NNT : 2018BORD0037. tel-01947309

HAL Id: tel-01947309
https://tel.archives-ouvertes.fr/tel-01947309
Submitted on 6 Dec 2018

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

DOCTORAL THESIS of the UNIVERSITÉ DE BORDEAUX, École doctorale Mathématiques et Informatique
Presented by Jean-Michaël CELERIER to obtain the degree of DOCTOR of the UNIVERSITÉ DE BORDEAUX, specialty: Computer Science
Thesis subject: Une approche logico-temporelle pour la création de médias interactifs (A logical-temporal approach to the creation of interactive media)
Defended on 29 March 2018 before a jury composed of:
Ms. Nadine Couture, President
Mr. Jean Bresson, Reviewer
Mr. Stéphane Natkin, Reviewer
Ms. Myriam Desainte-Catherine, Thesis supervisor
Mr. Jean-Michel Couturier, Examiner
Mr. Miller Puckette, Examiner

Abstract
The question of how to design interactive media has been asked ever since computers with audio-visual capabilities appeared. A recurring theme is the temporal specification of interactive multimedia objects: how can one create multimedia presentations whose unfolding takes into account events external to the system?