On Sharing an FIB Table in Named Data Networking



Recommended publications


What Is Routing?

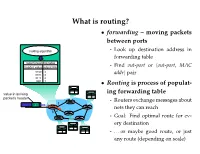

What is routing?
• Forwarding: moving packets between ports
  - Look up destination address in forwarding table
  - Find out-port or ⟨out-port, MAC addr⟩ pair
• Routing is the process of populating the forwarding table
  - Routers exchange messages about nets they can reach
  - Goal: Find optimal route for every destination
  - ... or maybe a good route, or just any route (depending on scale)

Routing algorithm properties
• Static vs. dynamic
  - Static: routes change slowly over time
  - Dynamic: automatically adjust to quickly changing network conditions
• Global vs. decentralized
  - Global: All routers have complete topology
  - Decentralized: Only know neighbors & what they tell you
• Intra-domain vs. Inter-domain routing
  - Intra-: All routers under same administrative control
  - Intra-: Scale to ∼100 networks (e.g., campus like Stanford)
  - Inter-: Decentralized, scale to Internet

Optimality
[Figure: example graph with nodes A to F and weighted edges]
• View network as a graph
• Assign cost to each edge
  - Can be based on latency, b/w, utilization, queue length, ...
• Problem: Find lowest cost path between two nodes
  - Must be computed in distributed way

Distance Vector
• Local routing algorithm
• Each node maintains a set of triples
  - (Destination, Cost, NextHop)
• Exchange updates with directly connected neighbors
  - periodically (on the order of several seconds to minutes)
  - whenever table changes (called triggered update)
• Each update is a list of pairs:
  - (Destination, Cost)
• Update local table if receive a “better” route
  - smaller cost
  - from newly connected/available neighbor
• Refresh existing -
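The distance-vector update rule in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function name `apply_update`, the table layout, and the node names are assumptions made for the example. It covers only the "smaller cost" and "new destination" cases; route refresh and timeouts are left out.

```python
from typing import Dict, Tuple

# Hypothetical table layout: destination -> (cost, next_hop)
Table = Dict[str, Tuple[int, str]]


def apply_update(table: Table, neighbor: str, link_cost: int,
                 update: Dict[str, int]) -> bool:
    """Merge a neighbor's (Destination, Cost) pairs into the local table.

    Returns True if the table changed, which is the condition that would
    trigger sending an update to our own neighbors.
    """
    changed = False
    for dest, advertised_cost in update.items():
        # Cost of reaching dest through this neighbor.
        new_cost = link_cost + advertised_cost
        current = table.get(dest)
        # Accept the route if dest is unknown or the new route is cheaper.
        if current is None or new_cost < current[0]:
            table[dest] = (new_cost, neighbor)
            changed = True
    return changed


# Example: a node that reaches C directly at cost 4 hears from neighbor E
# (link cost 1) that E can reach C at cost 1 and D at cost 2.
table: Table = {"C": (4, "C")}
changed = apply_update(table, neighbor="E", link_cost=1,
                       update={"C": 1, "D": 2})
```

After the call, the route to C goes through E at cost 2, and D is newly reachable through E at cost 3.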

The Routing Table v1.12 – Aaron Balchunas

- The Routing Table -

Routing Table Basics

Routing is the process of sending a packet of information from one network to another network. Thus, routes are usually based on the destination network, and not the destination host (host routes can exist, but are used only in rare circumstances).

To route, routers build Routing Tables that contain the following:
• The destination network and subnet mask
• The “next hop” router to get to the destination network
• Routing metrics and Administrative Distance

The routing table is concerned with two types of protocols:
• A routed protocol is a layer 3 protocol that applies logical addresses to devices and routes data between networks. Examples would be IP and IPX.
• A routing protocol dynamically builds the network, topology, and next hop information in routing tables. Examples would be RIP, IGRP, OSPF, etc.

To determine the best route to a destination, a router considers three elements (in this order):
• Prefix-Length
• Metric (within a routing protocol)
• Administrative Distance (between separate routing protocols)

Prefix-length is the number of bits used to identify the network, and is used to determine the most specific route. A longer prefix-length indicates a more specific route. For example, assume we are trying to reach a host address of 10.1.5.2/24. If we had routes to the following networks in the routing table:

10.1.5.0/24
10.0.0.0/8

The router will do a bit-by-bit comparison to find the most specific route (i.e., longest matching prefix). -
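The longest-prefix-match rule described in this excerpt can be illustrated with Python's standard `ipaddress` module. This is a sketch under simple assumptions: the function name `longest_prefix_match` is made up for the example, routes are given as a plain list of prefixes, and a linear scan stands in for the trie or hardware lookup a real router would use.

```python
import ipaddress
from typing import List, Optional


def longest_prefix_match(dest: str, routes: List[str]) -> Optional[str]:
    """Return the most specific route containing dest, or None."""
    addr = ipaddress.ip_address(dest)
    # Keep only the routes whose network contains the destination.
    matches = [ipaddress.ip_network(r) for r in routes
               if addr in ipaddress.ip_network(r)]
    if not matches:
        return None
    # The longest prefix length identifies the most specific route.
    return str(max(matches, key=lambda net: net.prefixlen))


routes = ["10.1.5.0/24", "10.0.0.0/8"]
best = longest_prefix_match("10.1.5.2", routes)       # "10.1.5.0/24"
fallback = longest_prefix_match("10.2.0.1", routes)   # "10.0.0.0/8"
```

Both routes contain 10.1.5.2, but the /24 wins because it matches more bits of the address.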

Rule-Based Forwarding (RBF): Improving the Internet's Flexibility

Rule-based Forwarding (RBF): improving the Internet’s flexibility and security
Lucian Popa∗ Ion Stoica∗ Sylvia Ratnasamy†

1 Introduction

From active networks [33] to the more recent efforts on GENI [5], a long-held goal of Internet research has been to arrive at a network architecture that is flexible. The allure of greater flexibility is that it would allow us to more easily incorporate new ideas into the Internet’s infrastructure, whether these ideas aim to improve the existing network (e.g., improving performance [36, 23, 30], reliability [12, 13], security [38, 14, 25], manageability [11], etc.) or to extend it with altogether new services and business models (e.g., multicast, differentiated services, IPv6, virtualized networks).

An unfortunate stumbling block in these efforts has been that flexibility is fundamentally at odds with another long-held goal: that of devising a secure network architecture.

4. rules allow flexible forwarding: rules can select arbitrary forwarding paths and/or invoke functionality made available by on-path routers; both path and function selection can be conditioned on (dynamic) network and packet state.

The first two properties enable security by ensuring the network will not forward a packet unless it has been explicitly cleared by its recipients while the third property ensures that rules cannot be (mis)used to attack the network itself. As we shall show, our final property enables flexibility by allowing a user to give the network fine-grained instructions on how to forward his packets.

In the remainder of this paper, we present RBF, a forwarding architecture that meets the above properties. -

Routing and Packet Forwarding

Routing and Packet Forwarding
Tina Schmidt
September 2008

Contents
1 Introduction
  1.1 The Different Layers of the Internet
2 IPv4
3 Routing and Packet Forwarding
  3.1 The Shortest Path Problem
  3.2 Dijkstra's Algorithm
  3.3 Practical Realizations of Routing Algorithms
4 Autonomous Systems
  4.1 Intra-AS-Routing
  4.2 Inter-AS-Routing
5 Other Services of IP
A Dijkstra's Algorithm

1 Introduction

The history of the internet and Peer-to-Peer networks starts in the 60s with the ARPANET (Advanced Research Project Agency Network). Back then, when computers were expensive and linked to several terminals, the aim of ARPANET was to establish a continuous network between mainframe computers in the U.S. Starting with only three computers in 1969, mainly universities were involved in establishing the ARPANET. The ARPANET became a network between networks of mainframe computers and therefore was called inter-net. Today it has become the internet, which links millions of computers. For a long time nobody knew what use such a Wide Area Network (WAN) could have for the population. The internet was used for e-mails, newsgroups, exchange of scientific data and some special software, all in all services that were barely known in public. The spread of

[Figure: the layers of the Internet]
• Application Layer: Peer-to-Peer-networks, e.g. Telnet = Telecommunication Network, FTP = File Transfer Protocol, HTTP = Hypertext Transfer Protocol, SMTP = Simple Mail Transfer Protocol
• Transport Layer: TCP = Transmission Control Protocol, UDP = User Datagram Protocol
• Internet Layer: IP = Internet Protocol, ICMP = Internet Control Message Protocol, IGMP = Internet Group Management Protocol
• Host-to-Network Layer or Link Layer: device drivers (e.g. -

P2P Resource Sharing in Wired/Wireless Mixed Networks

INT J COMPUT COMMUN, ISSN 1841-9836, Vol.7 (2012), No. 4 (November), pp. 696-708

P2P Resource Sharing in Wired/Wireless Mixed Networks
J. Liao

Jianwei Liao
College of Computer and Information Science, Southwest University of China
400715, Beibei, Chongqing, China

Abstract: This paper presents a new routing protocol called Manager-based Routing Protocol (MBRP) for sharing resources in wired/wireless mixed networks. MBRP specifies a manager node for a designated sub-network (called a group), in which all nodes have similar connection properties; all manager nodes are then employed to construct the backbone overlay network with ring topology. The manager nodes act as proxies between the internal nodes in the group and the external world, which not only provides centralized management of all nodes to a certain extent, but also avoids flooding messages through the whole network. The experimental results show that compared with Gnutella2, which uses super-peers to perform similar management work, the proposed MBRP has less lookup overhead, including lookup latency and lookup hop count, in most cases. In addition, the experiments indicate that MBRP has good configurability and good scaling properties. In short, MBRP has a lower transmission cost for the shared file data, and the latency for locating shared resources can be reduced to a great extent in wired/wireless mixed networks.

Keywords: wired/wireless mixed network, resource sharing, manager-based routing protocol, backbone overlay network, peer-to-peer.

1 Introduction

Peer-to-Peer technology (P2P) is a widely used network technology; the typical P2P network relies on the computing power and bandwidth of all participant nodes, rather than a few gathered and dedicated servers for central coordination [1, 2]. -

Lab 5.5.2: Examining a Route

Lab 5.5.2: Examining a Route

Topology Diagram

Addressing Table

| Device | Interface | IP Address | Subnet Mask | Default Gateway |
|---|---|---|---|---|
| R1-ISP | S0/0/0 | 10.10.10.6 | 255.255.255.252 | N/A |
| R1-ISP | Fa0/0 | 192.168.254.253 | 255.255.255.0 | N/A |
| R2-Central | S0/0/0 | 10.10.10.5 | 255.255.255.252 | 10.10.10.6 |
| R2-Central | Fa0/0 | 172.16.255.254 | 255.255.0.0 | N/A |
| Eagle Server | N/A | 192.168.254.254 | 255.255.255.0 | 192.168.254.253 |
| Eagle Server | N/A | 172.31.24.254 | 255.255.255.0 | N/A |
| host Pod# A | N/A | 172.16.Pod#.1 | 255.255.0.0 | 172.16.255.254 |
| host Pod# B | N/A | 172.16.Pod#.2 | 255.255.0.0 | 172.16.255.254 |
| S1-Central | N/A | 172.16.254.1 | 255.255.0.0 | 172.16.255.254 |

All contents are Copyright © 1992–2007 Cisco Systems, Inc. All rights reserved. This document is Cisco Public Information.
CCNA Exploration Network Fundamentals: OSI Network Layer

Learning Objectives

Upon completion of this lab, you will be able to:
• Use the route command to modify a Windows computer routing table.
• Use a Windows Telnet client command telnet to connect to a Cisco router.
• Examine router routes using basic Cisco IOS commands.

Background

For packets to travel across a network, a device must know the route to the destination network. This lab will compare how routes are used in Windows computers and the Cisco router. -

Routing Tables

Routing Tables

A routing table is a grouping of information stored on a networked computer or network router that includes a list of routes to various network destinations. The data is normally stored in a database table and, in more advanced configurations, includes performance metrics associated with the routes stored in the table. Additional information stored in the table will include the network topology closest to the router. Although a routing table is routinely updated by network routing protocols, static entries can be made through manual action on the part of a network administrator.

How Does a Routing Table Work?

Routing tables work much like the way the post office delivers mail. When a network node on the Internet or a local network needs to send information to another node, it first requires a general idea of where to send the information. If the destination node or address is not connected directly to the network node, then the information has to be sent via other network nodes. In order to save resources, most local area network nodes will not maintain a complex routing table. Instead, they will send IP packets of information to a local network gateway. The gateway maintains the primary routing table for the network and will send the data packet to the desired location. In order to maintain a record of how to route information, the gateway will use a routing table that keeps track of the appropriate destination for outgoing data packets. All routing tables maintain routing table lists for the reachable destinations from the router’s location. -
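The host behaviour described in this excerpt, delivering directly when the destination is on the local network and otherwise handing the packet to the gateway, can be sketched as follows. The function name `next_hop` and the addresses are assumptions chosen for illustration; a real host consults its full routing table rather than a single local network.

```python
import ipaddress


def next_hop(dest: str, local_network: str, gateway: str) -> str:
    """Pick where a host sends a packet addressed to dest."""
    if ipaddress.ip_address(dest) in ipaddress.ip_network(local_network):
        # Destination is directly connected: deliver to it directly.
        return dest
    # Otherwise rely on the gateway, which holds the fuller routing table.
    return gateway


local = next_hop("192.168.1.20", "192.168.1.0/24", "192.168.1.1")
remote = next_hop("8.8.8.8", "192.168.1.0/24", "192.168.1.1")
```

Here `local` is the destination itself (192.168.1.20), while `remote` is the gateway address (192.168.1.1).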

Introduction to IP Multicast Routing

Introduction to IP Multicast Routing
by Chuck Semeria and Tom Maufer

Abstract

The first part of this paper describes the benefits of multicasting, the Multicast Backbone (MBONE), Class D addressing, and the operation of the Internet Group Management Protocol (IGMP).

The second section explores a number of different algorithms that may potentially be employed by multicast routing protocols:
- Flooding
- Spanning Trees
- Reverse Path Broadcasting (RPB)
- Truncated Reverse Path Broadcasting (TRPB)
- Reverse Path Multicasting (RPM)
- Core-Based Trees

The third part contains the main body of the paper. It describes how the previous algorithms are implemented in multicast routing protocols available today.
- Distance Vector Multicast Routing Protocol (DVMRP)
- Multicast OSPF (MOSPF)
- Protocol-Independent Multicast (PIM)

Introduction

There are three fundamental types of IPv4 addresses: unicast, broadcast, and multicast. A unicast address is designed to transmit a packet to a single destination. A broadcast address is used to send a datagram to an entire subnetwork. A multicast address is designed to enable the delivery of datagrams to a set of hosts that have been configured as members of a multicast group in various scattered subnetworks.

Multicasting is not connection oriented. A multicast datagram is delivered to destination group members with the same “best-effort” reliability as a standard unicast IP datagram. This means that a multicast datagram is not guaranteed to reach all members of the group, or arrive in the same order relative to the transmission of other packets. The only difference between a multicast IP packet and a unicast IP packet is the presence of a “group address” in the Destination Address field of the IP header. -
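The three IPv4 address types named in this excerpt can be told apart with Python's standard `ipaddress` module. This is a small sketch, not anything from the paper: the function name `classify` and the example subnet are assumptions, and "broadcast" here means either the limited broadcast address or the directed broadcast address of the given subnet.

```python
import ipaddress


def classify(dest: str, subnet: str) -> str:
    """Classify an IPv4 destination as unicast, broadcast, or multicast."""
    addr = ipaddress.ip_address(dest)
    net = ipaddress.ip_network(subnet)
    # Class D group addresses fall in 224.0.0.0/4.
    if addr.is_multicast:
        return "multicast"
    # Directed broadcast for the subnet, or the limited broadcast address.
    if addr == net.broadcast_address or dest == "255.255.255.255":
        return "broadcast"
    return "unicast"


kinds = [classify(a, "192.168.1.0/24")
         for a in ("224.0.0.1", "192.168.1.255", "192.168.1.7")]
```

For the three sample addresses, `kinds` is `["multicast", "broadcast", "unicast"]`.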

Analysis and Improvement of Name-Based Packet Forwarding

Analysis and Improvement of Name-based Packet Forwarding over Flat ID Network Architectures

Authors: Antonio Rodrigues, Peter Steenkiste, Ana Aguiar
Affiliations: Carnegie Mellon University, Pittsburgh, PA, USA; Faculty of Engineering, Univ. of Porto, Porto, Portugal; Instituto de Telecomunicações, Porto, Portugal

ABSTRACT

One of ICN’s main challenges is attaining forwarding table scalability when confronted with a huge content space. This is especially true in flat ID architectures, which do not naturally support route aggregation for hierarchical names. Previous work has proposed improving scalability by aggregating content names in fixed-size Bloom Filters (BFs). However, the negative impact of false positive (FP) matches on forwarding correctness and performance has not been studied thoroughly. In this paper, we study the end-to-end network performance of BF-based forwarding. We devise an analytical model that accurately models BF-based forwarding and the flow of BF-based packets over inter-domain topologies.

[Introduction, excerpt] URLs to identify web objects. Name-based Information Centric Networks (ICNs) such as NDN [8] promise to be a good fit for such applications, for two reasons: (1) no need for external name lookup (e.g., DNS or GNS [18]) by using name-based forwarding at the network layer; and (2) reduced forwarding table sizes as a result of the aggregation enabled by the hierarchical structure of names. In contrast, ICNs based on flat IDs (defined as fixed-size bit strings in this paper), e.g., DONA [12], PURSUIT [6], do not natively have these benefits.

Recently, a forwarding scheme based on Bloom Filters (BFs) [13, 14] has been proposed to enable aggregation of content descriptors -
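To make the false-positive issue in this abstract concrete, here is a minimal Bloom filter sketch in Python. It is a generic textbook construction, not the scheme evaluated in the paper: the class name, the filter size, the number of hash functions, the SHA-256-based hashing, and the content names are all assumptions chosen for illustration.

```python
import hashlib


class BloomFilter:
    """Fixed-size Bloom filter over strings (illustrative parameters)."""

    def __init__(self, size_bits: int = 256, num_hashes: int = 3) -> None:
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = 0  # bit array stored as a Python integer

    def _positions(self, item: str):
        # Derive num_hashes bit positions by salting SHA-256 with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def __contains__(self, item: str) -> bool:
        # True if every position is set; this can be a false positive.
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


# Aggregate several content names behind one hypothetical next hop.
bf = BloomFilter()
for name in ("/cmu/video/a", "/cmu/video/b", "/porto/papers/x"):
    bf.add(name)

# A saturated filter matches every name, including ones never inserted.
saturated = BloomFilter(size_bits=8)
saturated.bits = 0xFF
```

An inserted name is always reported as present, so there are no false negatives. A name that was never inserted may still match once enough bits are set, and a packet forwarded on such a match is the false-positive case whose cost the abstract refers to.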

Unicast Reverse Path Forwarding Configuration Guide, Cisco IOS XE 17 (Cisco ASR 900 Series)

Unicast Reverse Path Forwarding Configuration Guide, Cisco IOS XE 17 (Cisco ASR 900 Series)

First Published: 2020-01-10

Americas Headquarters
Cisco Systems, Inc.
170 West Tasman Drive
San Jose, CA 95134-1706 USA
http://www.cisco.com
Tel: 408 526-4000, 800 553-NETS (6387)
Fax: 408 527-0883

THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS.

THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE.

IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Introduction to the Border Gateway Protocol – Case Study Using GNS3

Introduction to The Border Gateway Protocol – Case Study using GNS3
Sreenivasan Narasimhan, Haniph Latchman
Department of Electrical and Computer Engineering, University of Florida, Gainesville, USA

Abstract – As the internet evolves to become a vital resource for many organizations, configuring The Border Gateway protocol (BGP) as an exterior gateway protocol in order to connect to the Internet Service Providers (ISP) is crucial. The BGP system exchanges network reachability information with other BGP peers from which Autonomous System-level policy decisions can be made. Hence, BGP can also be described as Inter-Domain Routing (Inter-Autonomous System) Protocol. It guarantees loop-free exchange of information between BGP peers. Enterprises need to connect to two or more ISPs in order to provide redundancy as well as to improve efficiency. This is called Multihoming and is an important feature provided by BGP. In this way, organizations do not have to be constrained by the routing policy decisions of a particular ISP. BGP, unlike many of the other routing protocols, is not used to learn about routes but to provide greater flow control between competitive Autonomous Systems. In this paper, we present a study on BGP, use a network simulator to configure BGP and implement its route-manipulation techniques.

Index Terms – Border Gateway Protocol (BGP), Internet Service Provider (ISP), Autonomous System, Multihoming, GNS3.

1. INTRODUCTION

Routing protocols are broadly classified into two types – Link State routing (LSR) protocol and Distance Vector (DV) routing protocol.

Figure 1. Internet using BGP [2].

In the figure, AS 65500 learns about the route 172.18.0.0/16 through

The Routing Table Lookup Process

The Routing Table Part 2 – The Routing Table Lookup Process
By Rick Graziani, Cisco Networking Academy
Updated: Oct. 10, 2002

This document is the second of two parts dealing with the routing table. Part I discussed the structure of the routing table, and how routes are created. Part II will discuss how the routing table lookup process finds the “best” route within the routing table.

What happens when a router receives an IP packet, examines the IP destination address and looks that address up in the routing table? How does the router decide which route in the routing table is the best match? What effect does the subnet mask have on the routing table? How does the router decide whether or not to use a supernet or default route if there is not a better match? We will answer these questions and more in this document.

The network we will be using is a simple three router network. RouterA and RouterB share the common major network 172.16.0.0/24. RouterB and RouterC are connected by the 192.168.1.0/24 network. You will notice that RouterC also has a 172.16.4.0/24 subnet which is disconnected, or discontiguous, from the rest of the 172.16.0.0 network.

[Figure: topology of RouterA, RouterB and RouterC, with subnets 172.16.1.0/24, 172.16.2.0/24, 172.16.3.0/24, 172.16.4.0/24 and 192.168.1.0/24]

Most of this information is best explained by using examples.