Being Robust (In High Dimensions) Can Be Practical

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

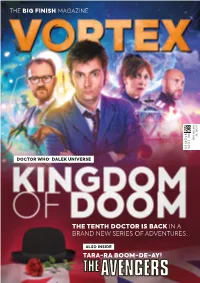

VORTEX Playing Mrs Constance Clarke

THE BIG FINISH MAGAZINE MARCH 2021 MARCH ISSUE 145 DOCTOR WHO: DALEK UNIVERSE THE TENTH DOCTOR IS BACK IN A BRAND NEW SERIES OF ADVENTURES… ALSO INSIDE TARA-RA BOOM-DE-AY! WWW.BIGFINISH.COM @BIGFINISH THEBIGFINISH @BIGFINISHPROD BIGFINISHPROD BIG-FINISH WE MAKE GREAT FULL-CAST AUDIO DRAMAS AND AUDIOBOOKS THAT ARE AVAILABLE TO BUY ON CD AND/OR DOWNLOAD WE LOVE STORIES Our audio productions are based on much-loved TV series like Doctor Who, Torchwood, Dark Shadows, Blake’s 7, The Avengers, The Prisoner, The Omega Factor, Terrahawks, Captain Scarlet, Space: 1999 and Survivors, as well as classics such as HG Wells, Shakespeare, Sherlock Holmes, The Phantom of the Opera and Dorian Gray. We also produce original creations such as Graceless, Charlotte Pollard and The Adventures of Bernice Summerfield, plus the THE BIG FINISH APP Big Finish Originals range featuring seven great new series: The majority of Big Finish releases ATA Girl, Cicero, Jeremiah Bourne in Time, Shilling & Sixpence can be accessed on-the-go via Investigate, Blind Terror, Transference and The Human Frontier. the Big Finish App, available for both Apple and Android devices. Secure online ordering and details of all our products can be found at: bgfn.sh/aboutBF EDITORIAL SINCE DOCTOR Who returned to our screens we’ve met many new companions, joining the rollercoaster ride that is life in the TARDIS. We all have our favourites but THE SIXTH DOCTOR ADVENTURES I’ve always been a huge fan of Rory Williams. He’s the most down-to-earth person we’ve met – a nurse in his day job – who gets dragged into the Doctor’s world THE ELEVEN through his relationship with Amelia Pond. -

Gender and the Quest in British Science Fiction Television CRITICAL EXPLORATIONS in SCIENCE FICTION and FANTASY (A Series Edited by Donald E

Gender and the Quest in British Science Fiction Television CRITICAL EXPLORATIONS IN SCIENCE FICTION AND FANTASY (a series edited by Donald E. Palumbo and C.W. Sullivan III) 1 Worlds Apart? Dualism and Transgression in Contemporary Female Dystopias (Dunja M. Mohr, 2005) 2 Tolkien and Shakespeare: Essays on Shared Themes and Language (ed. Janet Brennan Croft, 2007) 3 Culture, Identities and Technology in the Star Wars Films: Essays on the Two Trilogies (ed. Carl Silvio, Tony M. Vinci, 2007) 4 The Influence of Star Trek on Television, Film and Culture (ed. Lincoln Geraghty, 2008) 5 Hugo Gernsback and the Century of Science Fiction (Gary Westfahl, 2007) 6 One Earth, One People: The Mythopoeic Fantasy Series of Ursula K. Le Guin, Lloyd Alexander, Madeleine L’Engle and Orson Scott Card (Marek Oziewicz, 2008) 7 The Evolution of Tolkien’s Mythology: A Study of the History of Middle-earth (Elizabeth A. Whittingham, 2008) 8 H. Beam Piper: A Biography (John F. Carr, 2008) 9 Dreams and Nightmares: Science and Technology in Myth and Fiction (Mordecai Roshwald, 2008) 10 Lilith in a New Light: Essays on the George MacDonald Fantasy Novel (ed. Lucas H. Harriman, 2008) 11 Feminist Narrative and the Supernatural: The Function of Fantastic Devices in Seven Recent Novels (Katherine J. Weese, 2008) 12 The Science of Fiction and the Fiction of Science: Collected Essays on SF Storytelling and the Gnostic Imagination (Frank McConnell, ed. Gary Westfahl, 2009) 13 Kim Stanley Robinson Maps the Unimaginable: Critical Essays (ed. William J. Burling, 2009) 14 The Inter-Galactic Playground: A Critical Study of Children’s and Teens’ Science Fiction (Farah Mendlesohn, 2009) 15 Science Fiction from Québec: A Postcolonial Study (Amy J. -

Dr Who Pdf.Pdf

DOCTOR WHO - it's a question and a statement... Compiled by James Deacon [2013] http://aetw.org/omega.html DOCTOR WHO - it's a Question, and a Statement ... Every now and then, I read comments from Whovians about how the programme is called: "Doctor Who" - and how you shouldn't write the title as: "Dr. Who". Also, how the central character is called: "The Doctor", and should not be referred to as: "Doctor Who" (or "Dr. Who" for that matter) But of course, the Truth never quite that simple As the Evidence below will show... * * * * * * * http://aetw.org/omega.html THE PROGRAMME Yes, the programme is titled: "Doctor Who", but from the very beginning – in fact from before the beginning, the title has also been written as: “DR WHO”. From the BBC Archive Original 'treatment' (Proposal notes) for the 1963 series: Source: http://www.bbc.co.uk/archive/doctorwho/6403.shtml?page=1 http://aetw.org/omega.html And as to the central character ... Just as with the programme itself - from before the beginning, the central character has also been referred to as: "DR. WHO". [From the same original proposal document:] http://aetw.org/omega.html In the BBC's own 'Radio Times' TV guide (issue dated 14 November 1963), both the programme and the central character are called: "Dr. Who" On page 7 of the BBC 'Radio Times' TV guide (issue dated 21 November 1963) there is a short feature on the new programme: Again, the programme is titled: "DR. WHO" "In this series of adventures in space and time the title-role [i.e. -

The Historian-Filmmaker's Dilemma: Historical Documentaries in Sweden in the Era of Häger and Villius

ACTA UNIVERSITATIS UPSALIENSIS Studia Historica Upsaliensia 210 Utgivna av Historiska institutionen vid Uppsala universitet genom Torkel Jansson, Jan Lindegren och Maria Ågren 1 2 David Ludvigsson The Historian-Filmmaker’s Dilemma Historical Documentaries in Sweden in the Era of Häger and Villius 3 Dissertation in History for the Degree of Doctor of Philosophy presented at Uppsala University in 2003 ABSTRACT Ludvigsson, David, 2003: The Historian-Filmmaker’s Dilemma. Historical Documentaries in Sweden in the Era of Häger and Villius. Written in English. Acta Universitatis Upsalien- sis. Studia Historica Upsaliensia 210. (411 pages). Uppsala 2003. ISSN 0081-6531. ISBN 91-554-5782-7. This dissertation investigates how history is used in historical documentary films, and ar- gues that the maker of such films constantly negotiates between cognitive, moral, and aes- thetic demands. In support of this contention a number of historical documentaries by Swedish historian-filmmakers Olle Häger and Hans Villius are discussed. Other historical documentaries supply additional examples. The analyses take into account both the produc- tion process and the representations themselves. The history culture and the social field of history production together form the conceptual framework for the study, and one of the aims is to analyse the role of professional historians in public life. The analyses show that different considerations compete and work together in the case of all documentaries, and figure at all stages of pre-production, production, and post-produc- tion. But different considerations have particular inuence at different stages in the produc- tion process and thus they are more or less important depending on where in the process the producer puts his emphasis on them. -

Levinthal Workiewicz

Are Two Heads Better than One? A Formal Investigation into the Efficacy of Multi-authority Hierarchies in Coordinating Action in Complex Organizations. Dan Levinthal (Wharton) and Maciej Workiewicz (INSEAD) Background and motivation The task environment – hierarchical complex system Results: steady state fitness levels for the three organizational structures • As organizations grow larger, the task of coordinating distributed and complex simple interdependent action (or projects) becomes increasingly difficult. easy executive’s problem hard Executive’s problem (Penrose, 1959; Burton, Obel, DeSanctis, 2011) Executive Level • Understanding the effects of an organizational structure on performance is one of the central concerns of theoretical and empirical work in strategic management and organizational theory. autonomous (Chandler, 1962; Lawrence, Lorsch 1967; Miller et al. 2009). ( design , manufacturing , financing , advertising ) one boss Manager’s Manager’s problem matrix • There have been different approaches to the challenge of organizing: Unit Managers’ Level asy e • Complexity is best answered with a simple organizational structures with small, only high level only lower level one boss matrix auton. autonomous units (Hamel, Prahalad, 1996; Christensen, 1997) matters matters “Most important is clarity and to some extent simplicity. I am absolutely willing to trade in a 100% perfect – academically perfect – but complex organization for only [a] 70% perfect organization that is simple and easy to understand.” former CEO of ABB (Galbraith, 2009) Manager’s Manager’s problem • employ equally complex organizational structure, like the matrix form Connecting the two levels – an example (Ashby 1956; Nadler, Tushman, 1997; Galbraith, 2009) hard Connecting the two levels Less hierarchy More hierarchy Executive (vehicle type) (SPORT) or (SUV) Level Robustness checks (higher and lower levels are hard) wheels autonomous No bosses One Boss Matrix Structure 1. -

JORIS IVENS' "THE SPANISH EARTH" Author(S): Thomas Waugh Source: Cinéaste , 1983, Vol

"Men Cannot Act in Front of the Camera in the Presence of Death": JORIS IVENS' "THE SPANISH EARTH" Author(s): Thomas Waugh Source: Cinéaste , 1983, Vol. 12, No. 3 (1983), pp. 21-29 Published by: Cineaste Publishers, Inc. Stable URL: http://www.jstor.com/stable/41686180 JSTOR is a not-for-profit service that helps scholars, researchers, and students discover, use, and build upon a wide range of content in a trusted digital archive. We use information technology and tools to increase productivity and facilitate new forms of scholarship. For more information about JSTOR, please contact [email protected]. Your use of the JSTOR archive indicates your acceptance of the Terms & Conditions of Use, available at https://about.jstor.org/terms Cineaste Publishers, Inc. is collaborating with JSTOR to digitize, preserve and extend access to Cinéaste This content downloaded from 95.183.180.42 on Sun, 19 Jul 2020 08:17:33 UTC All use subject to https://about.jstor.org/terms "Men Cannot Act in Front of the Camera in the Presence of Death" JORIS IVENS' THE SPANISH EARTH by Thomas Waugh The first installment of this article burning , churches and the like - as which Jocussed on the political and well as expensive and difficult to piy artistic background to the production out of the notoriously reactionary oj Joris Ivens' Spanish Earth, newsreel companies. The group then appeared in our Vol. XII , No. 2 issue.decided to finish the project as quickly and cheaply as possible, which Van Dongen did using a Dos Passos com- mentary and relying on Soviet footage that the Franco rebellion posed of the front. -

Doctor Who: Auton Invasion

In this, the first adventure of his third 'incarnation', DOCTOR WHO, Liz Shaw and the Brigadier grapple with the nightmarish invasion of the AUTONS - living, giant-sized, plastic-modelled 'humans' with no hair and sightless eyes; waxwork replicas and tailors' dummies whose murderous behaviour is directed by the NESTENE CONSCIOUSNESS - a malignant, squid-like monster of cosmic proportions and indescribably hideous appearance. ISBN 0 426 11295 4 DOCTOR WHO AND THE AUTON INVASION Based on the BBC television serial Doctor Who and the Spearhead from Space by Robert Holmes by arrangement with the British Broadcasting Corporation TERRANCE DICKS published by The Paperback Division of W. H. Allen & Co. Ltd CONTENTS 1 Prologue: Exiled to Earth 2 The Mystery of the Meteorites 3 The Man from Space 4 The Faceless Kidnappers 5 The Hunting Auton 6 The Doctor Disappears 7 The Horror in the Factory 8 The Auton Attacks 9 The Creatures in the Waxworks 10 The Final Battle A Target Book Published in 1974 by the Paperback Division of W. H. Allen & Co. Ltd. A Howard & Wyndham Company 44 Hill Street, London W1X 8LB Novelisation copyright © Terrance Dicks Original script copyright © Robert Holmes 1970 W. H. Allen & Co. Ltd illustrations copyright © 1974 'Doctor Who' series copyright © British Broadcasting Corporation 1970, 1974 Printed and bound in Great Britain by Anchor Brendon Ltd, Tiptree, Essex ISBN 0426 10313 0 This book is sold subject to the condition that it shall not, by way of trade or otherwise, be lent, re-sold, hired out or otherwise circulated without the publisher's prior consent in any form of binding or cover other than that in which it is published and without a similar condition including this condition being imposed on the subsequent purchaser. -

Jumpchain CYOA Version 1.0 by Blackshadow111

Jumpchain CYOA Version 1.0 By blackshadow111 Introduction Welcome, Jumper, to a world without peer. Time travel, space travel, reality travel...basically every travel not only exists here, but is rather common and achievable! A world with Time Lords, hate-filled salt shakers, sapient stars and much, much more! This is a world full of tremendous wonders, of bright and glorious things, beautiful sights and unimaginable delights! Step in, go around, see the sights and have fun! It is also full of hideous horror, unfortunate implications, wars and conflicts that can rip apart entire galaxies and time-spans, memetic horrors and nightmares. Step carefully, lest someone else end up treating you as their fun. Either way, you will need these 1 000 CP. Go on, shoot your own series! Time and Place Being honest, neither time nor place mean all that much here. So we’ll be doing this a tad differently. Start out wherever and whenever you like that isn’t a fixed point in time or otherwise a part of the Time War. Now spend a total of ten years of your own personal duration in this universe, regardless of whatever era those years may be lived in. Age and Gender You may choose your age and gender freely, within the limitations of whatever your species is. This show has had adventure opportunities for everyone from children to octagenarians, and that’s just the humans. Origins Madman: Without a box! Or maybe with a box. Who knows? You arrive as you are, a stranger in a strange land. -

Download Book / Doctor Who and the Auton Invasion

4RW0AHNJAEJL « eBook ~ Doctor Who and the Auton Invasion Doctor Who and the Auton Invasion Filesize: 5.99 MB Reviews Complete guideline! Its this kind of good read. It can be writter in easy terms rather than difficult to understand. I am delighted to tell you that here is the very best book i have got go through during my very own lifestyle and might be he greatest ebook for at any time. (Bill Klein) DISCLAIMER | DMCA BGGLVTLG2OW2 » Doc « Doctor Who and the Auton Invasion DOCTOR WHO AND THE AUTON INVASION To read Doctor Who and the Auton Invasion PDF, remember to refer to the button under and download the file or have access to other information which might be have conjunction with DOCTOR WHO AND THE AUTON INVASION book. Ebury Publishing. Paperback. Book Condition: new. BRAND NEW, Doctor Who and the Auton Invasion, Terrance Dicks, Put on trial by the Time Lords, and found guilty of interfering in the aairs of other worlds, the Doctor is exiled to Earth in the 20th century, his appearance once again changed. His arrival coincides with a meteorite shower. But these are no ordinary meteorites. The Nestene Consciousness has begun its first attempt to invade Earth using killer Autons and deadly shop window dummies. Only the Doctor and UNIT can stop the attack. But the Doctor is recovering in hospital, and his old friend the Brigadier doesn't even recognise him. Can the Doctor recover and win UNIT's trust before the invasion begins? This novel is based on "Spearhead from Space", a Doctor Who story which was originally broadcast from 3-24 January 1970, featuring the Third Doctor as played by Jon Pertwee, his companion Liz Shaw and the UNIT organisation commanded by Brigadier Lethbridge- Stewart. -

The Colonial History of Fairfax County

Volunteer! It's Your OLLI OLLI is run by our members on a volunteer basis. Volunteering is vital to our existence. Volunteers are the creative source for all courses, clubs, special events, social activities, publications, and classroom services. Our bylaws sum it up: OLLI “is governed by its members and functions as an intellectual cooperative in which members volunteer in administration and as planners, instructors, organizational officers, and committee members.” Share your talents with us. Make new friends and connect with fellow OLLI members by volunteering. It’s satisfying and very rewarding. You will love it! If you have been thinking about volunteering to work with a committee or program planning group, now is an excellent time to do so! You are important to our continued success. To learn more about any of our volunteer opportunities at OLLI contact us today via the “volunteer at OLLI” email address [email protected] or call the office at (703) 503-3384. Spotlight on Volunteer Opportunities Program Planning Committee An Opportunity for You! OLLI’s program planners invite all members to join in the fun and challenge of creating the OLLI program. There’s no magic involved in the process of developing about 500 courses and events each year. Program planning starts with the activities listed below and culminates in production of this catalog and every quarterly OLLI catalog. We have many needs for program planning volunteers, some requiring a small amount of time and others a larger commitment. Consider these possibilities: Sharing with a program planner a dynamite idea you have for a course. -

Diary of the Doctor Who Role-Playing Games, Issue

VILLAINS ISSUE The fanzine devoted to Doctor Who Gaming ISSUE # 14 CONTINUITY„THIS IN GAMINGMACHINE - KILLSCH FASCISTS‰ ADVENTURE MODULE NEW FASA„MORE VILLAIN THAN STATS MONEY‰ - CREATING ADVENTURE GOOD VILLAINS MODULE and MORE...ICAGO TARDIS 2011 CON REPORT 1 EDITOR’S NOTES CONTENTS Hi there, Some of our issues feature a special theme, so we EDITOR’S NOTES 2 welcome you to our “Villains Issue”. This is a fanzine that REVIEW: The Brilliant Book 2012 3 concentrates on helping GMs create memorable and ef‐ Angus Abranson Leaving Cubicle 7 4 fective villains in their game. Villain motivations, villain Face to Face With Evil 5 tools and more can be found in these pages. Neil Riebe Not One, But Two, Episodes Recovered 11 even gives us retro FASA stats for Androgums, who can Why Are Your Doing This?! 12 be used as villains or standard characters. Hopefully Gamer Etiquette 105 14 there will be something fun for you to use in your next The Villain’s Toolkit 15 adventure. Continuity in Gaming 16 A review of the Chicago TARDIS 2012 convention New FASA Villain Stats: Androgums 18 is included in this issue, where our staff did a session on REVIEW: Everything I Need to Know I Learned From Doctor Who RPGs and also ran an adventure using the Dungeons & Dragons 23 Cubicle 7 DWAiTS rules as well. Staff member Eric Fettig MODULE: “This Machine Kills Fascists” 24 made it his first official Doctor Who convention (having EVENT REPORT: Chicago TARDIS 2011 Con 28 attended many gaming conventions before that) and Viewpoint of a First Time Who Con Attendee 43 gives us a look from his first timer’s perspective. -

Infant-Toddler Early Learning Guidelines

Maine Department of Health and Human Services Supporting Maine’s Infants & Toddlers: Guidelines for Learning & Development Table of Contents Introduction . .2 Guiding Principles . .3 Process . .4 Younger Infants - Birth to 8 months Introduction to the stage . .6 Developmental Guidelines Development into Social Beings . .8 Development of Strong and Healthy Bodies . .10 Development of the Ability to Communicate . .13 Development of Curious Minds . .15 Application . .17 Older Infants - 8 to 18 months Introduction to the stage . .20 Developmental Guidelines Development into Social Beings . .22 Development of Strong and Healthy Bodies . .24 Development of the Ability to Communicate . .27 Development of Curious Minds . .29 Application . .31 Toddlers – 18 to 36 months Introduction to the stage . .34 Developmental Guidelines Development into Social Beings . .36 Development of Strong and Healthy Bodies . .39 Development of the Ability to Communicate . .41 Development of Curious Minds . .43 Application . .46 Additional Resources . .49 1 Introduction This document offers parents of infants and toddlers, early childhood professionals, and policy- makers a set of guidelines about development and early learning. One of our goals is to help individuals understand what to look for as a baby grows and develops. Another goal is to aid in understanding that infants’ and toddlers’ natural learning patterns and abilities can be nurtured in everyday activities occurring in a home or childcare setting. Young children’s learning comes from discoveries they make on their own under the guidance of caring adults rather than from structured lessons. Suggestions are provided for caregivers, which includes parents and early childhood professionals, for interacting with infants and toddlers, organizing the environment so it supports their learning, and responding to their individual differences.