A Practical Exploration System for Search Advertising

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Bo Pulito Strategic Partner Manager Google 5 Questions You Always Wanted Google to Answer 5 QUESTIONS YOU ALWAYS WANTED GOOGLE to ANSWER

Bo Pulito Strategic Partner Manager Google 5 Questions You Always Wanted Google to Answer 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER Agenda 1 Lead Sources 2 SERP Page 3 Adwords Auction 4 Google My Business 5 Trends 1 Lead Sources “What are the best Google Channels to drive leads?” 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER 1 Lead Sources 2B 2 SERP Page “What should I focus on SEO or SEM?” 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER 2 SERP Page Google Search Ads (SEM) Info Cards: Local map No More Right Hand listings Rail Organic Listings (SEO) 3 Adwords Auction “How do I get to the top of the page?” 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER 3 Adwords Auction 4 Google My Business “How do I get my service area business listed in multiple cities?” 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER 4 Google My Business 5 Trends “What are the top trends Google is thinking about?” 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER Mobile 5 2014 50% of searches from mobile users 2011 2012 2013 2014 2015 2016 Thank You! Learn More About ReachLocal’s Digital Marketing Solutions: www.reachlocal.com ReachLocal Blog Ebooks Subscribe: http://blog.reachlocal.com Download: http://bit.ly/lcsebook Follow Us: reachlocal.com/social 5 QUESTIONS YOU ALWAYS WANTED GOOGLE TO ANSWER RESOURCES GENERAL TOPICS ● ReachLocal Blog: Articles about a variety of topics in digital marketing ● ReachLocal Resource Center: Ebooks, videos, webinar replays, infographics and other content on digital marketing ● ReachLocal Webinar: 25 Digital Marketing Tips ● Think With -

Understanding Pay-Per-Click (PPC) Advertising

Understanding Pay-Per-Click Advertising 5 Ways to Ensure a Successful Campaign WSI White Paper Prepared by: WSI Corporate Understanding Pay-Per-Click Advertising 5 Ways to Ensure a Successful Campaign Introduction Back in the 1870s, US department store pioneer, John Wanamaker, lamented, “Half the money I spend on advertising is wasted; the trouble is I don’t know which half!” In today’s increasingly global market, this is no longer a problem. Every successful marketing agent knows that leveraging pay-per-click (PPC) advertising is the key to controlling costs. This unique form of marketing makes it easy to budget advertising dollars and track return on investment (ROI), while attracting traffic to your Web site and qualified leads and sales to your business. Compared with other traditional forms of advertising, paid search marketing, or PPC, is far and away the most cost effective. This report examines the role of PPC as a central component of a successful marketing strategy. It begins with an overview of PPC’s place in the digital market place and the reasons for its continuing worldwide popularity among business owners and entrepreneurs. It also provides tips to ensure your business is getting the most from its PPC campaign. i FIGURE 1. PPC PROCESS 1. Attract Visitors 2. Convert 4. Measure Visitors to and Optimize Customers 3. Retain and Grow Customers Source: Optimum Web Marketing Whitepaper: Understanding Pay-Per-Click Advertising Copyright ©2010 RAM. Each WSI franchise office is an independently owned and operated business. Page 2 of 19 Understanding Pay-Per-Click Advertising 5 Ways to Ensure a Successful Campaign 1. -

Consumer Click Through Behavior Across Devices in Paid Search Advertising

Click-Through Behavior across Devices In Paid Search Advertising Why Users Favor Top Paid Search Ads And Are Sensitive to Ad Position Change CHONGYU LU This study investigated differences in consumer click-through behavior with paid search Pace University advertisement across devices—smartphone versus desktop versus tablet. The authors [email protected] examined how different device users behave in terms of their tendency to click on the REX YUXING DU top paid search advertisement and their sensitivity to advertisement position change, University of Houston [email protected] and whether tablet users are more similar to smartphone or desktop users when clicking through paid search advertisements. By leveraging Google AdWords data from 13 paid search advertisers, the authors developed empirical findings that provide insights into paid search advertising strategies across devices. INTRODUCTION years, as the majority of search activities have Paid search advertising accounts for 46 percent of shifted from desktop to mobile devices, such as digital-marketing expenditure and is expected to smartphones and tablets. The volume of mobile reach $142.5 billion in 2021 (Ironpaper, 2017). One searches has exceeded that of desktop searches way for advertisers to improve the performance of since 2015 (Sterling, 2015). paid search advertising is to consider segmenting A recent study (iProspect, 2017) reported that their paid search advertising campaigns by devices. mobile devices accounted for 69.4 percent of Discussion of the benefits of doing so is inconclu- Google’s paid search clicks in 2017. This share sive, however. Although some advertisers suggest is expected to grow as mobile devices become that device segmentation is an effective strategy, increasingly affordable and the speed of mobile others believe that it is not worth the trouble of set- networks increases. -

Six-Steps for Search Engine Marketing Learning

SIX-STEPS FOR SEARCH ENGINE MARKETING LEARNING Kai-Yu Wang, Brock University AXCESSCAPON TEACHING INNOVATION COMPETIION TEACHING NOTES Google AdWords is considered to be the most important digital marketing channel, causing firms to consistently increase their budget in this area (Hanapin Marketing, 2016). Paid search advertising expenditures in the US are predicted to reach $45.81 billion by 2018, making up 42.7% of the total expected digital advertising expenditures in the country (eMarketer, 2018). One of the key challenges in teaching digital marketing is determining how to help students learn digital marketing strategies with actionable tactics that they can directly apply to the real digital business world. Thus, a six-step process was proposed and used in my internet and social media class to address this pedagogical problem. Students learned search engine marketing (SEM) through in-class assignments and a SEM campaign for a local organization. 1. Local Businesses (i.e., community partners): Before the semester began, a call for service-learning project participation was sent to a pool of local community partners. Among the 24 interested community partners, nine from various industries (e.g., wine, construction, auto repairs) were selected to work with my internet and social media marketing class. In week two of the semester, nine teams (five students per team) were formed and randomly assigned one local business to work with on the project. Each team was required to schedule a meeting with its community partner in the following week. The purpose of this meeting was to understand the partner’s products/services, current marketing strategies, and digital marketing problems. -

Online and Mobile Advertising: Current Scenario, Emerging Trends, and Future Directions

Marketing Science Institute Special Report 07-206 Online and Mobile Advertising: Current Scenario, Emerging Trends, and Future Directions Venkatesh Shankar and Marie Hollinger © 2007 Venkatesh Shankar and Marie Hollinger MSI special reports are in draft form and are distributed online only for the benefit of MSI corporate and academic members. Reports are not to be reproduced or published, in any form or by any means, electronic or mechanical, without written permission. Online and Mobile Advertising: Current Scenario, Emerging Trends, and Future Directions Venkatesh Shankar Marie Hollinger* September 2007 * Venkatesh Shankar is Professor and Coleman Chair in Marketing and Director of the Marketing PhD. Program at the Mays Business School, Texas A&M University, College Station, TX 77843. Marie Hollinger is with USAA, San Antonio. The authors thank David Hobbs for assistance with data collection and article preparation. They also thank the MSI review team and Thomas Dotzel for helpful comments. Please address all correspondence to [email protected]. Online and Mobile Advertising: Current Scenario, Emerging Trends and Future Directions, Copyright © 2007 Venkatesh Shankar and Marie Hollinger. All rights reserved. Online and Mobile Advertising: Current Scenario, Emerging Trends, and Future Directions Abstract Online advertising expenditures are growing rapidly and are expected to reach $37 billion in the U.S. by 2011. Mobile advertising or advertising delivered through mobile devices or media is also growing substantially. Advertisers need to better understand the different forms, formats, and media associated with online and mobile advertising, how such advertising influences consumer behavior, the different pricing models for such advertising, and how to formulate a strategy for effectively allocating their marketing dollars to different online advertising forms, formats and media. -

What Does the Chicago School Teach About Internet Search and the Antitrust Treatment of Google?

WHAT DOES THE CHICAGO SCHOOL TEACH ABOUT INTERNET SEARCH AND THE ANTITRUST TREATMENT OF GOOGLE? Robert H. Bork* & J. Gregory Sidak† ABSTRACT Antitrust agencies in the United States and the European Union began investigating Google’s search practices in 2010. Google’s critics have consisted mainly of its competitors, particularly Microsoft, Yelp, TripAdvisor, and other search engines. They have alleged that Google is making it more difficult for them to compete by including specialized search results in general search pages and limiting access to search inputs, including “scale,” Google content, and the Android platform. Those claims contradict real-world experiences in search. They demonstrate competitors’ efforts to compete not by investing in efficiency, quality, or innovation, but by using antitrust law to punish the successful competitor. The Chicago School of law and economics teaches—and the Supreme Court has long affirmed—that antitrust law exists to protect consumers, not competitors. Penalizing Google’s practices as anticompetitive would violate that principle, reduce dynamic competition in search, and harm the consumers that the antitrust laws are intended to protect. INTRODUCTION ................................................................................................................................. 2 I. IS GOOGLE THE GATEWAY TO THE INTERNET? .......................................................................... 4 A. The Two-Sided Market for Internet Search ...................................................................... -

Recommendations for Businesses and Policymakers Ftc Report March 2012

RECOMMENDATIONS FOR BUSINESSES AND POLICYMAKERS FTC REPORT FEDERAL TRADE COMMISSION | MARCH 2012 RECOMMENDATIONS FOR BUSINESSES AND POLICYMAKERS FTC REPORT MARCH 2012 CONTENTS Executive Summary . i Final FTC Privacy Framework and Implementation Recommendations . vii I . Introduction . 1 II . Background . 2 A. FTC Roundtables and Preliminary Staff Report .......................................2 B. Department of Commerce Privacy Initiatives .........................................3 C. Legislative Proposals and Efforts by Stakeholders ......................................4 1. Do Not Track ..............................................................4 2. Other Privacy Initiatives ......................................................5 III . Main Themes From Commenters . 7 A. Articulation of Privacy Harms ....................................................7 B. Global Interoperability ..........................................................9 C. Legislation to Augment Self-Regulatory Efforts ......................................11 IV . Privacy Framework . 15 A. Scope ......................................................................15 1. Companies Should Comply with the Framework Unless They Handle Only Limited Amounts of Non-Sensitive Data that is Not Shared with Third Parties. .................15 2. The Framework Sets Forth Best Practices and Can Work in Tandem with Existing Privacy and Security Statutes. .................................................16 3. The Framework Applies to Offline As Well As Online Data. .........................17 -

The Role of Search Engine Optimization in Search Marketing∗

The Role of Search Engine Optimization in Search Marketing∗ Ron Berman and Zsolt Katonay November 6, 2012 ∗We wish to thank Pedro Gardete, Ganesh Iyer, Shachar Kariv, John Morgan, Miklos Sarvary, Dana Sisak, Felix Vardy, Kenneth Wilbur, Yi Zhu and seminar participants at HKUST, University of Florida, University of Houston, UT Austin, and Yale for their useful comments. yRon Berman is Ph.D. candidate and Zsolt Katona is Assistant Professor at the Haas School of Busi- ness, UC Berkeley, 94720-1900 CA. E-mail: ron [email protected], [email protected]. Electronic copy available at: http://ssrn.com/abstract=1745644 The Role of Search Engine Optimization in Search Marketing Abstract In this paper we study the impact of search engine optimization (SEO) on the competition between advertisers for organic and sponsored search results. We find that a positive level of search engine optimization may improve the search engine's ranking quality and thus the satisfaction of its visitors. In the absence of sponsored links, the organic ranking is improved by SEO if and only if the quality provided by a website is sufficiently positively correlated with its valuation for consumers. In the presence of sponsored links, the results are accentuated and hold regardless of the correlation. When sponsored links serve as a second chance to acquire clicks from the search engine, low quality websites have a reduced incentive to invest in SEO, giving an advantage to their high quality counterparts. As a result of the high expected quality on the organic side, consumers begin their search with an organic click. -

The Effects of Search Advertising on Competitors

The Effects of Search Advertising on Competitors: An Experiment Before a Merger Joseph M. Golden John J. Horton∗ October 10, 2019y Abstract We report the results of an experiment in which a company, \Firm Vary," temporarily suspended its sponsored search advertising campaign on Google in randomly selected advertising markets in the US. By shutting off its ads, Firm Vary lost customers, but only 63% as many as a non-experimental estimate would have suggested. Following the experiment, Firm Vary merged with its closest competitor, \Firm Fixed." Using combined data from both companies, the experiment revealed that spillover effects of Firm Vary's search advertising on Firm Fixed's business and its marketing campaigns were surprisingly small, even in the market for Firm Vary's brand name as a keyword search term, where the two firms were effectively duopsonists. 1 Introduction Firms that advertise would like to know if their ads are effective. Any firm that advertises in a sufficiently large number of distinct markets|and that can measure where customers or sales originate|can credibly assess the effectiveness of its ads by running an experiment, suspending campaigns in some markets while maintaining the status quo in others (Lewis et al., 2011). However, a firm by itself typically cannot know the effects of its advertising on competitors, as competitors are not ∗Collage.com and MIT, respectively. yWe thank Susan Athey, Tom Blake, John Bound, Charles Brown, Daisy Dai, Avi Goldfarb, Dan Hirschman, Jason Kerwin, Eric Schwartz, Isaac Sorkin, Frank Stafford, Hal Varian, Steve Tadelis, Brad Shapiro, Zach Winston, and Florian Zettelmeyer for feedback on this research. -

An Empirical Analysis of Search Engine Advertising: Sponsored Search in Electronic Markets

University of Pennsylvania ScholarlyCommons Operations, Information and Decisions Papers Wharton Faculty Research 10-2009 An Empirical Analysis of Search Engine Advertising: Sponsored Search in Electronic Markets Anindya Ghose University of Pennsylvania Sha Yang Follow this and additional works at: https://repository.upenn.edu/oid_papers Part of the E-Commerce Commons, and the Management Sciences and Quantitative Methods Commons Recommended Citation Ghose, A., & Yang, S. (2009). An Empirical Analysis of Search Engine Advertising: Sponsored Search in Electronic Markets. Management Science, 55 (10), 1605-1622. http://dx.doi.org/10.1287/ mnsc.1090.1054 This paper is posted at ScholarlyCommons. https://repository.upenn.edu/oid_papers/159 For more information, please contact [email protected]. An Empirical Analysis of Search Engine Advertising: Sponsored Search in Electronic Markets Abstract The phenomenon of sponsored search advertising—where advertisers pay a fee to Internet search engines to be displayed alongside organic (nonsponsored) Web search results—is gaining ground as the largest source of revenues for search engines. Using a unique six-month panel data set of several hundred keywords collected from a large nationwide retailer that advertises on Google, we empirically model the relationship between different sponsored search metrics such as click-through rates, conversion rates, cost per click, and ranking of advertisements. Our paper proposes a novel framework to better understand the factors that drive differences in these metrics. We use a hierarchical Bayesian modeling framework and estimate the model using Markov Chain Monte Carlo methods. Using a simultaneous equations model, we quantify the relationship between various keyword characteristics, position of the advertisement, and the landing page quality score on consumer search and purchase behavior as well as on advertiser's cost per click and the search engine's ranking decision. -

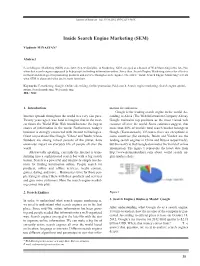

Inside Search Engine Marketing (SEM)

Journal of Business, 1(2):35-38,2012 ISSN:2233-369X Inside Search Engine Marketing (SEM) Vladimir MINASYAN* Abstract Search Engine Marketing (SEM) is a relatively new discipline in Marketing. SEM emerged as a branch of Web Marketing in the late-90s, when first search engines appeared to help people in finding information online. Since then, Search Engine Marketing strives for effective methods and strategies for promoting products and services through search engines. The article “Inside Search Engine Marketing” reveals what SEM is about and what are its main functions. Keywords: E-marketing, Google, Online advertising, Online promotion, Paid search, Search engine marketing, Search engine optimi- zation, Search marketing, Web marketing. JEL: M31 1. Introduction mation for end-users. Google is the leading search engine in the world. Ac- Internet spreads throughout the world in a very fast pace. cording to Alexa (The Web Information Company Alexa), Twenty years ago it was hard to imagine that in the near- Google maintains top positions as the most visited web est future the World Wide Web would become the largest resource all over the world. Some estimates suggest, that source of information in the world. Furthermore, today’s more than 80% of world’s total search market belongs to business is strongly connected with internet technologies. Google (Karmansnack). Of course there are exceptions in Giant corporations like Google, Yahoo! and Baidu, whose some countries (for example, Baidu and Yandex are the founders are among richest persons of the planet, have leading search engines in China and Russia respectively), enormous impact on everyday life of people all over the but the reality is that Google dominates the world of online world. -

Marketing and Advertising Using Google™ Targeting Your Advertising to the Right Audience

Marketing and Advertising Using Google™ Targeting Your Advertising to the Right Audience Marketing and Advertising Using Google™ Copyright © 2007 Google Inc. All rights reserved To order additional copies call (800) 355-9983 (Thomson Custom Customer Service) or go to: adwords.thomsoncustom.com ISBN: -426-62737-8 We want to hear your feedback about this book. Please email [email protected]. Marketing and Advertising Using Google™ Lesson 1 – Google Search Marketing: AdWords Topic – Online Advertising: A Brief History of a Young Medium 5 Topic 2 – Online Advertising Joins the Marketing Mix 8 Topic 3 – Behind the Scenes: How Google Search Works 11 Topic 4 – AdWords Ads Fundamentals 15 Topic 5 – AdWords Ads Appear Across Many Websites 18 Lesson 2 – Overview of Google AdWords Accounts Topic – Getting Started: AdWords Starter Edition 29 Topic 2 – How Ads Are Shown: The AdWords Auction 37 Topic 3 – Ad Rank, AdWords’ Discounter, and Basic Tenets of Optimization 39 Topic 4 – Introduction to Site-Targeted Campaigns 40 Topic 5 – Ad Formats 45 Lesson 3 – Successful Keyword-Targeted Advertising Topic – Choosing the Right Keywords 53 Topic 2 – Writing Successful Ad Text 56 Topic 3 – Choosing Relevant Landing Pages 58 Topic 4 – Monitoring Performance and Analyzing an Ad’s Quality Score 60 Topic 5 – How Do Advertisers Know Their Quality Score? 6 Topic 6 – Optimize Ads to Boost Performance and Quality Score 62 Lesson 4 – Image and Video Ads Topic – Overview of Image Ads 69 Topic 2 – Video Ads 72 Topic 3 – Tips on Creating Successful Video