A Human-Computer Integrated Approach Towards Content Based

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Captain Cool: the MS Dhoni Story

Captain Cool The MS Dhoni Story GULU Ezekiel is one of India’s best known sports writers and authors with nearly forty years of experience in print, TV, radio and internet. He has previously been Sports Editor at Asian Age, NDTV and indya.com and is the author of over a dozen sports books on cricket, the Olympics and table tennis. Gulu has also contributed extensively to sports books published from India, England and Australia and has written for over a hundred publications worldwide since his first article was published in 1980. Based in New Delhi from 1991, in August 2001 Gulu launched GE Features, a features and syndication service which has syndicated columns by Sir Richard Hadlee and Jacques Kallis (cricket) Mahesh Bhupathi (tennis) and Ajit Pal Singh (hockey) among others. He is also a familiar face on TV where he is a guest expert on numerous Indian news channels as well as on foreign channels and radio stations. This is his first book for Westland Limited and is the fourth revised and updated edition of the book first published in September 2008 and follows the third edition released in September 2013. Website: www.guluzekiel.com Twitter: @gulu1959 First Published by Westland Publications Private Limited in 2008 61, 2nd Floor, Silverline Building, Alapakkam Main Road, Maduravoyal, Chennai 600095 Westland and the Westland logo are the trademarks of Westland Publications Private Limited, or its affiliates. Text Copyright © Gulu Ezekiel, 2008 ISBN: 9788193655641 The views and opinions expressed in this work are the author’s own and the facts are as reported by him, and the publisher is in no way liable for the same. -

Is Cheering for a Cricket Team a Crime?

International Journal of Scientific and Research Publications, Volume 6, Issue 11, November 2016, p. 238, ISSN 2250-3153. Is Cheering for a Cricket Team a Crime? Shahnawaz Ahmad, Assistant Professor, Department of Law, Aligarh Muslim University Centre Malappuram, Kerala (India). Index Terms: Cricket, cheering, BCCI, State. I. INTRODUCTION. On 27 January 2016, Umar Draz, a Pakistani fan of Indian cricketer Virat Kohli, was arrested by the Pakistani Police on allegation of raising the tricolour on his roof in Punjab Province. Police raided the house of Umar Draz and seized the Indian Flag from his rooftop. When asked why he had hoisted the Indian Flag, Draz said: "I am a big fan of Virat Kohli. I support the Indian team because of Kohli. Hoisting the Indian Flag on the rooftop of house only shows my love for the Indian Cricketer." [...] Here in these particular cases there was simply actus reus and no guilty mind. Now the primary question is, whether cheering for a cricket team other than our own team is a crime? The answer of this question is totally dependent on the question whether BCCI represents sovereignty and integrity of India? Whether BCCI is a statutory body? Whether BCCI is a State within the meaning of Article 12 of the Constitution? If answer is affirmative then certainly it is a crime; if answer is negative then it is not a crime, rather authorities itself breaking the law. For answering this question let us examine the legal status of BCCI. The Board of Control for Cricket in India (BCCI) was formed in 1928 as an unregistered association of persons. -

Cobbling Together the Dream Indian Eleven

COBBLING TOGETHER THE DREAM INDIAN ELEVEN Whenever the five selectors, often dubbed as the five wise men with the onerous responsibility of cobbling together the best players comprising India’s test cricket team, sit together to pick the team they feel the heat of the country’s collective gaze resting on them. Choosing India’s cricket team is one of the most difficult tasks as the final squad is subjected to intense scrutiny by anybody and everybody. Generally the point veers round to questions such as why batsman A was not picked or bowler B was dropped from the team. That also makes it a very pleasurable hobby for followers of the game who have their own views as to who should make the final 15 or 16 when the team is preparing to leave our shores on an away visit or gearing up to face an opposition on a tour of our country. Arm chair critics apart, sports writers find it an enjoyable professional duty when they sit down to select their own team as newspapers speculate on the composition of the squad pointing out why somebody should be in the team at the expense of another. The reports generally appear on the sports pages on the morning of the team selection. This has been a hobby with this writer for over four decades now and once the team is announced, you are either vindicated or amused. And when the player, who was not in your frame goes on to play a stellar role for the country, you inwardly congratulate the selectors for their foresight and knowledge. -

Bangalore for the Visitor

Bangalore For the Visitor. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Mon, 12 Dec 2011 08:58:04 UTC.

Contents. Articles. The City: Bangalore; History of Bangalore; Karnataka; Karnataka Government. Geography: Lakes in Bangalore; Hebbal lake; Sankey tank; Madiwala Lake. Key Landmarks: Bangalore Cantonment; Bangalore Fort; Cubbon Park; Lal Bagh. Transportation: Bangalore Metropolitan Transport Corporation; Bengaluru International Airport. Culture. Economy. Notable people: List of people from Bangalore. Bangalore Brands: Kingfisher Airlines. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License.

The City. Bangalore. Bengaluru (ಬೆಂಗಳೂರು), Bangalore, metropolitan city. Clockwise from top: UB City, Infosys, Glass house at Lal Bagh, Vidhana Soudha, Shiva statue, Bagmane Tech Park. Location of Bengaluru (ಬೆಂಗಳೂರು) in Karnataka and India. Coordinates: 12°58′00″N 77°34′00″E. Country: India. Region: Bayaluseeme. State: Karnataka. District(s): Bangalore Urban [1]. Mayor: Sharadamma [2]. Commissioner: Shankarlinge Gowda [3]. Population: 8425970 (3rd) (2011). Density: 11371 /km² (29451 /sq mi) [4]. Metro: 8499399 (5th) (2011). Time zone: IST (UTC+05:30) [5]. Area: 741.0 square kilometres (286.1 sq mi). Elevation: 920 metres (3020 ft) [6]. Website: Bengaluru.

Bangalore (English pronunciation: /ˈbæŋɡəlɔər, bæŋɡəˈlɔər/), also called Bengaluru (Kannada: ಬೆಂಗಳೂರು, Bengaḷūru [ˈbeŋɡəɭuːru]), is the capital of the Indian state of Karnataka. -

India Not Stretched in Regaining Cup

India not stretched in regaining cup. From R. Mohan. DHAKA, Nov. 4. India added a second Asia Cup to its glittering collection of international limited-overs cricket trophies. Its triumph today in an uninspiring final was analogous to taking candy from a kid because Sri Lanka fell back to old and ingenuous ways. The Sri Lankans' running between the wickets was a pathetic representation of their inexperience. A side so reliant on batting strength could not afford to throw away its entire middle order to a mid-morning madness. While the sense of occasion tends to heighten India's performance, it seemed to inhibit the Sri Lankans so much that they could not live up to the ambitions they had stoked in themselves with [...] and Srikkanth. If Siddhu was castled by that incutter he could blame only his physical condition which did not permit free movement from the crease. He did walk with a springy step when he went to receive the 'Man of the Match' award from Lt. Gen. Ershad, President of Bangladesh. Promising beginning: The only inspirational part of the Sri Lankan innings was the opening stand. It was a good track to bat on even early in the day. Mahanama and Kuruppu were outstanding in running between the wickets. They never refused each other's calls for the singles, but the pattern of the innings changed completely when Mahanama fell leg before. In stretching out, Kapil bowled at least five extra balls in his first spell because of the no balls and the wides. -

List of Famous Cricketers of All Time

FAMOUS SPORTS PERSONALITIES

List of Famous Cricketers of All Time

| Name of the Cricketer | Famous for / Known as |
| --- | --- |
| Sachin Tendulkar | Holds most of the batting records |
| Sunil Gavaskar | The little master |
| Rahul Dravid | The Wall |
| Sourav Ganguly | The best Indian captain |
| Virender Sehwag | India's finest opener |
| Anil Kumble | India's highest wicket taker |
| Kapil Dev | India's best all-rounder |
| Javagal Srinath | India's leading pacer for a long time |
| Harbhajan Singh | Best Indian off-spinner |
| Zaheer Khan | India's best left-handed fast bowler |
| Mohammad Azharuddin | Aggressive captain and an equally good player |
| VVS Laxman | One of the most elegant batsmen |
| MS Dhoni | The best finisher |
| Mohinder Amarnath | Scored first test century for India |
| Bishan Singh Bedi | An effective spinner of his era |
| Navjot Singh Siddhu | Explosive batsman of his era |
| Virat Kohli | Next legend in making |
| Vinod Kambli | Short but marvellous career |
| Ajay Jadeja | A fine middle order batsman |
| Yuvraj | First player to take a 5-wicket haul and score a 50 in the World Cup matches; first player to hit 6 sixes in a T20 World Cup |
| Venkatesh Prasad | Known for his slower deliveries and was one of its first proponents in world cricket |
| Ashwin | The fastest Indian bowler to the milestone of 100 wickets in 18 test matches and the fastest in the world in over 80 years |
| Gambhir | Only Indian batsman to have scored more than 300 runs in four consecutive Test series |
| Erapalli Prasanna | He has a record of fastest 100 wickets in Tests by an Indian Bowler (in 20 Tests) at this time. |

The Big Three Era Starts

151 editions of the world's most famous sports book. WisdenEXTRA No. 12, July 2014. England v India Test series. The Big Three era starts now. Given that you can bet on almost anything these days, it would have been interesting to know the odds on the first Test series under N. Srinivasan's ICC chairmanship running to five matches. (Actually, on reflection, let's steer clear of the betting issue.) But certainly, until this summer, many assumed that, barring the Ashes, the five-Test series was extinct. Yet, here we are, embarking on the first since 2004-05, when England clung on to win 2-1 in South Africa. Not so long ago, five- or even six-match series between the leading Test nations were the core of the calendar. Sometimes, when it rained in England or someone took an early lead in the subcontinent, the cricket could be dreary in the extreme. [...] most recent book was a lovely biography of Bishan Bedi, a stylist who played all his international cricket before India's 1983 World Cup win and the country's wider liberalisation. Since then, the IPL has moved the goalposts once again. Menon is in an ideal position to examine what Test cricket means to Indians across the social spectrum. The Ranji Trophy has withstood all this to remain the breeding ground for Indian Test cricketers. Although it has never commanded quite the same affection as the County Championship, it can still produce its fair share of romance. We delve into the Wisden archives to reproduce Siddhartha Vaidyanathan's account of -

'JARA YAAD KARO KURBANI'? (A) Javed Akhtar

Test Paper 10: General Knowledge

1. Who composed the song 'JARA YAAD KARO KURBANI'? (a) Javed Akhtar (b) Pradeep (c) Nusrat Fateh Ali Khan (d) Raghupati Sahay 'Firaq'
2. Which of the following is a folk dance of Rajasthan? (a) Garba (b) Dandiya (c) Jhumar (d) Kathak
3. Kuchipudi is a dance drama of which of the following States? (a) Andhra Pradesh (b) Kerala (c) Karnataka (d) Tamil Nadu
4. 'The Colonel' is the name of which Indian Test cricketer? (a) Colonel C. K. Naidu (b) Rahul Dravid (c) Mohinder Amarnath (d) Dilip Vengsarkar
5. Deodhar Trophy is related to which sport? (a) Volleyball (b) Football (c) Cricket (d) Tennis
6. Jaspal Rana is a distinguished athlete in which of the following games? (a) Swimming (b) Weightlifting (c) Shooting (d) Archery
7. The 'Dronacharya Award' is associated with (a) Eminent Surgeons (b) Famous Artists (c) Sport Coaches (d) Expert Engineers
8. The author of 'Gitagovinda' was (a) Halayudha (b) Jayadeva (c) Kalhana (d) Jona-Raja
9. Who wrote the book 'India Wins Freedom'? (a) Maulana Abul Kalam Azad (b) Mahatma Gandhi (c) Sir Mohammad Iqbal (d) Abdul Ghaffar Khan
10. Baba Amte is famous as a (a) Painter (b) Singer (c) Politician (d) Social Worker
11. Who was the first woman Governor of an Indian State? (a) Sushila Nayar (b) Sucheta Kriplani (c) Sarojini Naidu (d) Sulochan Modi
12. Who is the first non-Indian to receive the Bharat Ratna? (a) Martin Luther King (b) Zubin Mehta (c) Mother Teresa (d) Khan Abdul Ghaffar Khan
13. Dr. M. S. Subbulakshmi has distinguished herself in the field of (a) Kathak (b) Bharatha Natyam (c) Playing Violin (d) Vocal Music

Kapil's Devils to Mark 25 Years of World Cup

Thursday 5th June, 2008

Kapil's Devils to mark 25 years of World Cup win. NEW DELHI: India's only World Cup winning cricket team will gather in London later this month to celebrate the 25th year of arguably the country's greatest sporting triumph. It was on June 25, 1983 that Kapil Dev's unheralded team pulled off a stunning upset to deny Clive Lloyd's all-conquering West Indians a hat-trick of World Cup titles with an amazing 43-run win at Lord's. The 14-man squad, popularly known as Kapil's Devils, will mark the anniversary with a private function at the Long Room in Lord's on June 25 followed by a gala dinner in London later that evening. Former India captain Sunil Gavaskar, who was part of the winning team, has organised the Lord's gathering while liquor baron Vijay Mallya will sponsor the dinner, an official said on Wednesday.

Sri Lanka draws with Afghanistan, by Reemus Fernando. Sri Lanka settled for a 2-all draw owing to an accurate penalty goal scored by E.B. Channa after Afghanistan dominated 2-1 throughout most of the second half in the SAFF Cup Group A match played at a floodlit Sugathadasa Stadium yesterday. Afghans, who named [...] conceding the goal. Chathura breaking through the well guarded Afghan defense lines, stroked just outside the penalty box to make it one-all in the eighth minute. After Chathura's heroics, Sri Lanka toiled in vain to take the lead. Sri Lanka lost a couple of chances with Channa and Kasun Jayasuriya missing the goal once each. Afghans turned the -

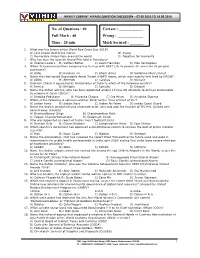

No. of Questions : 40 Full Mark : 40 Time : 20 Min Correct : ___

WEEKLY CURRENT AFFAIRS QUESTION DISCUSSION - 07.05.2019 TO 14.05.2019

No. of Questions : 40 Correct : ____ Full Mark : 40 Wrong : ____ Time : 20 min Mark Secured : ____

1. What was the theme of the World Red Cross Day 2019? A) Less known Red Cross stories B) #Love C) Memorable smiles from around the world D) Together for Humanity
2. Who has won the Spanish Grand Prix held in Barcelona? A) Charles Leclerc B) Valtteri Bottas C) Lewis Hamilton D) Max Verstappen
3. Which telecommunications company has tied up with HDFC Life to provide life cover for its prepaid customers? A) BSNL B) Reliance Jio C) Bharti Airtel D) Vodafone Idea Limited
4. Name the High-speed Expendable Aerial Target (HEAT) drone which was recently test fired by DRDO. A) AURA B) ABHYAS C) Lakshya D) Nishant
5. Subhash Chand is appointed as Ambassador of India to which of the following countries? A) Eritrea B) Ethiopia C) Somalia D) Djibouti
6. Name the Indian actress who has been appointed among 17 new UN advocates to achieve Sustainable Development Goals (SDGs). A) Deepika Padukone B) Priyanka Chopra C) Dia Mirza D) Anushka Sharma
7. Which of the following is commemorating '2019' as the 'Year of Next of Kin'? A) Indian Army B) Indian Navy C) Indian Air Force D) Indian Coast Guard
8. Name India's longest-serving corporate chief, who was also the founder of ITC Pvt. Limited and who passed away recently. A) Bhishma Narain Singh B) Chandrasekhar Rath C) Yogesh Chander Deveshwar D) Kedarnath Singh
9. Who was appointed as coach of the Indian men's football team? A) Graham Reid B) Gulam Rabbani C) Lalnghinglova Hmar D) Igor Stimac

Opening Batsman Sunil Gavaskar at Its Convocation

This time the Aussies need no help to locate Visakhapatnam. In early 1996, on the eve of the World Cup, they had to be helped out by a Pakistani when the Australian cricketers tried to spot Visakhapatnam, the venue of their first World Cup match, on the map. They not only enjoyed their visit to the City of Destiny but also enthralled the crowd with their display as the Waugh brothers put on 207 runs for the third wicket against Kenya. Shane Warne, and Vizag as a venue, made their debut in the World Cup on February 23, 1996. Two years later the Aussies played here again, against the Board President's XI in March 1998, and in that match Slater and Ponting emulated the Waugh brothers by adding 206 runs for the second wicket. A double century by the former, 155 by the latter and a century by Kanitkar for the home team marked the three-day batting feast. Incidentally, Harbhajan Singh played here for the first time against the Australians, claiming the prize wicket of Slater. For the third time in five years the Aussies came to Visakhapatnam in 2001 to play against a resurgent India led by Sourav Ganguly, in the fifth ODI on April 3, 2001. Australia won by 93 runs. Man of the Match was M. Hayden with 111 while Ponting scored 101. Sachin Tendulkar top-scored for India with 62. It all began with the great Viv Richards in 1987 when he led the West Indies team against the Board President's XI. Since then what a galaxy of players have been seen in action here: Kapil Dev, Mohinder Amarnath, Gundappa Viswanath, Sunil Gavaskar, Srikkanth, Manjrekar, Ravi Shastri, Azharuddin, Mike Atherton, Courtney Walsh, Mark Taylor, Shane Warne, Wasim Akram, Aravinda de Silva and Arjuna Ranatunga, and almost all the great teams of contemporary cricket. -

(3) New Zealand (4) Yuvraj Singh (4) Australia

Cricket Clinic Contest / CLASS 1-6 Set-A1

1. Who is this cricketer? (1) Sachin Tendulkar (2) Virat Kohli (3) Gautam Gambhir (4) Yuvraj Singh
2. Test cricket is played over _____ days. (1) Six (2) Five (3) Four (4) Three
3. Where was the 1st ODI match played in India? (1) New Delhi (2) Ahmedabad (3) Kolkata (4) Mumbai
4. Who holds the unique distinction of being the only bowler to dismiss Don Bradman courtesy of a hit-wicket? (1) C.K. Nayudu (2) Vinoo Mankad (3) Lala Amarnath (4) Vijay Merchant
5. The Indian batsman who batted in all positions from 1-11. (1) Kapil Dev (2) Vinoo Mankad (3) Sourav Ganguly (4) Sachin Tendulkar
6. Who are Kangaroos? (1) Bangladesh (2) Kenya (3) New Zealand (4) Australia
7. Which of the following is the first Indian to score a century in ODI? (1) Kapil Dev (2) Sachin Tendulkar (3) Ajit Wadekar (4) Mohinder Amarnath
8. One-day hat-trick was registered by (1) Chetan Sharma (2) Kapil Dev (3) Rahul Dravid (4) Both (1) & (2)
9. ________ team won the Titan Cup in 1996. (1) Indian (2) Australian (3) Pakistan (4) England
10. ________ won four consecutive "Man of the Match" awards. (1) Sachin Tendulkar (2) Gilchrist (3) Rahul Dravid (4) Sourav Ganguly
15. Who won the Asia Cup Cricket Tournament 2010? (1) Bangladesh (2) Sri Lanka (3) India (4) Pakistan
16. Who was the first person to make three test centuries in his first innings as a captain? (1) Virat Kohli (2) MS Dhoni (3) (4)