Installation Guide for Columbus Version 7.1 with OpenMolcas Support (Colmol-2.1.0)

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Electronic Supporting Information a New Class of Soluble and Stable Transition-Metal-Substituted Polyoxoniobates: [Cr2(OH)4Nb10o

Electronic Supplementary Material (ESI) for Dalton Transactions. This journal is © The Royal Society of Chemistry 2012. Electronic Supporting Information. A New Class of Soluble and Stable Transition-Metal-Substituted Polyoxoniobates: [Cr2(OH)4Nb10O30]8-. Jung-Ho Son, C. André Ohlin and William H. Casey*. A) Computational Results. The two most interesting aspects of this new polyoxoanion are the inclusion of a more redox-active metal and the optical activity in the visible region. For these reasons we particularly wanted to explore whether it was possible to readily and routinely predict the location of the absorbance bands of this type of compound. Also, owing to the difficulty in isolating the oxidised and reduced forms of the central polyanion dichromate species, we employed computations at the density functional theory (DFT) level to get a better idea of the potential characteristics of these forms. Structures were optimised for anti-parallel and parallel electronic spin alignments, and for both high and low spin cases where relevant. Intermediate spin configurations were also tested for [Cr2(OH)4Nb10O30]8-. As expected, the high spin form of the Cr(II)Cr(III) complex was favoured (Table S1, below). DFT overestimates the bond lengths in general, but gives a good picture of the general distortion of the octahedral chromium unit and, in agreement with the crystal structure, predicts that the shortest bond is the Cr-µ2-O bond trans to the central µ6-oxygen in the Lindqvist unit, and that the (Nb-)µ2-O-Cr-µ4-O(-Nb,Nb,Cr) angle is smaller than the ideal 180° by ca. 5°.

Computer-Assisted Catalyst Development Via Automated Modelling of Conformationally Complex Molecules

www.nature.com/scientificreports OPEN Computer-assisted catalyst development via automated modelling of conformationally complex molecules: application to diphosphinoamine ligands. Sibo Lin1*, Jenna C. Fromer2, Yagnaseni Ghosh1, Brian Hanna1, Mohamed Elanany3 & Wei Xu4. Simulation of conformationally complicated molecules requires multiple levels of theory to obtain accurate thermodynamics, requiring significant researcher time to implement. We automate this workflow using all open-source code (XTBDFT) and apply it toward a practical challenge: diphosphinoamine (PNP) ligands used for ethylene tetramerization catalysis may isomerize (with deleterious effects) to iminobisphosphines (PPNs), and a computational method to evaluate PNP ligand candidates would save significant experimental effort. We use XTBDFT to calculate the thermodynamic stability of a wide range of conformationally complex PNP ligands against isomerization to PPN (ΔGPPN), and establish a strong correlation between ΔGPPN and catalyst performance. Finally, we apply our method to screen novel PNP candidates, saving significant time by ruling out candidates with non-trivial synthetic routes and poor expected catalytic performance. Quantum mechanical methods with high energy accuracy, such as density functional theory (DFT), can optimize molecular input structures to a nearby local minimum, but calculating accurate reaction thermodynamics requires finding global minimum energy structures [1,2].
For simple molecules, expert intuition can identify a few minima to focus study on, but an alternative approach must be considered for more complex molecules or to eventually fulfil the dream of autonomous catalyst design [3,4]: the potential energy surface must first be surveyed with a computationally efficient method; then minima from this survey must be refined using slower, more accurate methods; finally, for molecules possessing low-frequency vibrational modes, those modes need to be treated appropriately to obtain accurate thermodynamic energies [5–7].
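The survey-then-refine idea in this excerpt can be illustrated with a small sketch. It is not XTBDFT and does not use its interface: `cheap_energy` and `accurate_energy` are hypothetical toy functions standing in for a fast approximate method and a slower accurate one, and each "conformer" is reduced to a single torsion angle. The third step mentioned in the excerpt (special treatment of low-frequency vibrational modes) is left out.

```python
import math

def cheap_energy(angle_deg):
    """Toy stand-in for a fast, approximate method (hypothetical)."""
    x = math.radians(angle_deg)
    return 1.0 + math.cos(3 * x) + 0.3 * math.cos(x)

def accurate_energy(angle_deg):
    """Toy stand-in for a slower, more accurate method (hypothetical)."""
    x = math.radians(angle_deg)
    return 1.0 + math.cos(3 * x) + 0.5 * math.cos(x - 0.2)

def survey_then_refine(candidates, keep=3):
    # Step 1: survey every candidate with the cheap method.
    ranked = sorted(candidates, key=cheap_energy)
    # Step 2: keep only the few lowest-energy candidates.
    shortlist = ranked[:keep]
    # Step 3: re-evaluate the shortlist with the accurate method.
    best = min(shortlist, key=accurate_energy)
    return best, accurate_energy(best), shortlist

# Torsion angles sampled every 10 degrees (an arbitrary choice).
candidates = list(range(0, 360, 10))
best_angle, best_e, shortlist = survey_then_refine(candidates)
print(best_angle, round(best_e, 3), shortlist)
```

The point of the design is that the expensive function is called only `keep` times, while the cheap one is called once per candidate; the price is that a minimum missed by the cheap survey is never refined.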

Dalton2018.0 – lsDalton Program Manual

Dalton2018.0 – lsDalton Program Manual. V. Bakken, A. Barnes, R. Bast, P. Baudin, S. Coriani, R. Di Remigio, K. Dundas, P. Ettenhuber, J. J. Eriksen, L. Frediani, D. Friese, B. Gao, T. Helgaker, S. Høst, I.-M. Høyvik, R. Izsák, B. Jansík, F. Jensen, P. Jørgensen, J. Kauczor, T. Kjærgaard, A. Krapp, K. Kristensen, C. Kumar, P. Merlot, C. Nygaard, J. Olsen, E. Rebolini, S. Reine, M. Ringholm, K. Ruud, V. Rybkin, P. Sałek, A. Teale, E. Tellgren, A. Thorvaldsen, L. Thøgersen, M. Watson, L. Wirz, and M. Ziolkowski.

Contents: Preface. Part I, lsDalton Installation Guide: 1 Installation of lsDalton; 1.1 Installation instructions; 1.2 Hardware/software supported; 1.3 Source files; 1.4 Installing the program using the Makefile; 1.5 The Makefile.config; 1.5.1 Intel compiler; 1.5.2 Gfortran/gcc compiler; 1.5.3 Using 64-bit integers; 1.5.4 Using Compressed-Sparse Row matrices. Part II, lsDalton User's Guide: 2 Getting started with lsDalton; 2.1 The Molecule input file; 2.1.1 BASIS; 2.1.2 ATOMBASIS; 2.1.3 User defined basis sets; 2.2 The LSDALTON.INP file; 3 Interfacing to lsDalton; 3.1 The Grand Canonical Basis; 3.2 Basisset and Ordering; 3.3 Normalization; 3.4 Matrix File Format; 3.5 The lsDalton library bundle. Part III, lsDalton Reference Manual: 4 List of lsDalton keywords; 4.1 **GENERAL; 4.1.1 *TENSOR

Quantum Chemistry on a Grid

Quantum Chemistry on a Grid. Lucas Visscher, Vrije Universiteit Amsterdam.

Outline. Why quantum chemistry needs to be gridified: computational demands; other requirements; what may be expected? Program descriptions: Dirac (organization; distributed development and testing); Dalton; Amsterdam Density Functional (ADF). An ADF-Dalton-Dirac grid driver: design criteria; current status. Quantum Chemistry on the Grid, 08-11-2005, L. Visscher, Vrije Universiteit Amsterdam.

Computational (quantum) chemistry. QC is traditionally run on supercomputers. Large fraction of the total supercomputer time annually allocated. Characteristics: Compute time scales cubically or higher with the number of atoms treated; typical: a few hours to several days on a supercomputer. Memory usage scales quadratically or higher with the number of atoms; typical: a few hundred MB to tens of GB. Data storage is variable; typical: tens of MB to hundreds of GB. Communication: often serial (one-processor) runs in production work; parallelization needs sufficient bandwidth, with a typical lower limit of 100 Mb/s.

Changing paradigms in quantum chemistry. Computable molecules are often already "large" enough. Many degrees of freedom: the search for the global minimum energy is less important; statistical sampling of different conformations is desired. Let the nuclei move and follow molecules that evolve in time. 2 steps: (quantum) dynamics on a PES. 1 step: classical dynamics with forces computed via QM. Changing characteristics. General: more similar calculations. Compute time: increases (more calculations). Memory usage: stays constant (typical size remains the same). Data storage: decreases (intermediate results are less important). Communication: decreases (only start-up data and final result).

Computational Studies of the Unusual Water Adduct [Cp2TiMe(OH2)]+: the Roles of the Solvent and the Counterion

Dalton Transactions. PAPER. Computational studies of the unusual water adduct [Cp2TiMe(OH2)]+: the roles of the solvent and the counterion†. Jörg Saßmannshausen. Cite this: Dalton Trans., 2014, 43, 11195. Received 29th January 2014, accepted 19th March 2014. DOI: 10.1039/c4dt00310a. www.rsc.org/dalton. Open Access Article, published on 21 March 2014 and licensed under a Creative Commons Attribution-NonCommercial 3.0 Unported Licence.

The recently reported cationic titanocene complex [Cp2TiMe(OH2)]+ was subjected to detailed computational studies using density functional theory (DFT). The calculated NMR spectra revealed the importance of including the anion and the solvent (CD2Cl2) in order to calculate spectra which were in good agreement with the experimental data. Specifically, two organic solvent molecules were required to coordinate to the two hydrogens of the bound OH2 in order to achieve such agreement. Further elaboration of the role of the solvent led to Bader's QTAIM and natural bond order calculations. The zirconocene complex [Cp2ZrMe(OH2)]+ was simulated for comparison.

Introduction. Group 4 metallocenes have been the workhorse for a number of reactions for some decades now. In particular the cationic compounds [Cp2MR]+ (M = Ti, Zr, Hf; R = Me, CH2Ph) are believed to be the active catalysts for a number of polymerization reactions such as Kaminsky type α-olefin [1–10], carbocationic [11–15] or ring-opening lactide polymerization [16–18]. For these highly electrophilic cationic compounds, water is usually considered a poison as it leads to catalyst decomposition. In this respect it was quite surprising that Baird …

A Summary of ERCAP Survey of the Users of Top Chemistry Codes

A survey of codes and algorithms used in NERSC chemical science allocations. Lin-Wang Wang, NERSC System Architecture Team, Lawrence Berkeley National Laboratory. We have analyzed the codes and their usage in the NERSC allocations in the chemical science category. This is done mainly on the basis of the ERCAP NERSC allocation data. While the MPP hours are based on the actual ERCAP award for each account, the time spent on each code within an account is estimated from the user's estimated partition (if the user provided one), or from an equal partition among the codes within an account (if the user did not). Everything is based on the 2007 allocation, before the computer time of the Franklin machine was allocated. Besides the ERCAP data analysis, we have also conducted a direct user survey via email for a few of the most heavily used codes. We have received responses from 10 users. The user survey not only provides us with the code usage in MPP hours; more importantly, it provides us with information on how the users use their codes, e.g., on which machine, on how many processors, and how long their simulations run. We have the following observations based on our analysis. (1) There are 48 accounts under the chemistry category. This is second only to the materials science category. The total MPP allocation for these 48 accounts is 7.7 million hours. This is about 12% of the 66.7 million MPP hours annually available for the whole NERSC facility (not counting Franklin). The allocation is very tight. The majority of the accounts are awarded less than half of what they requested.
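The per-code estimate described in this excerpt (use the user's own partition when one was given, otherwise split the award equally among the account's codes) can be sketched as below. The function name, the code names and the award figures are hypothetical illustrations, not ERCAP data; only the 7.7 and 66.7 figures come from the excerpt, and reading both as millions of hours is an assumption.

```python
def estimate_code_hours(award_hours, codes, user_partition=None):
    """Split an account's awarded hours among its codes (illustrative only)."""
    if user_partition:
        # Use the user's own estimate, normalised so the weights sum to 1.
        total = sum(user_partition.values())
        return {c: award_hours * user_partition.get(c, 0.0) / total for c in codes}
    # No estimate provided: assume an equal partition among the codes.
    share = award_hours / len(codes)
    return {c: share for c in codes}

# Hypothetical account whose user supplied a 3:1 partition.
with_estimate = estimate_code_hours(100_000, ["CodeA", "CodeB"],
                                    {"CodeA": 3, "CodeB": 1})
# Hypothetical account with no partition estimate.
without_estimate = estimate_code_hours(90_000, ["CodeA", "CodeB", "CodeC"])

# Share of the facility total quoted in the excerpt (both in millions of hours).
chemistry_fraction = 7.7 / 66.7
print(with_estimate, without_estimate, f"{chemistry_fraction:.1%}")
```

The last line reproduces the "about 12%" figure: 7.7 / 66.7 is roughly 0.115.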

Trends in Atomistic Simulation Software Usage [1.3]

A LiveCoMS Perpetual Review. Trends in atomistic simulation software usage [1.3]. Leopold Talirz1,2,3*, Luca M. Ghiringhelli4, Berend Smit1,3. 1Laboratory of Molecular Simulation (LSMO), Institut des Sciences et Ingenierie Chimiques, Valais, École Polytechnique Fédérale de Lausanne, CH-1951 Sion, Switzerland; 2Theory and Simulation of Materials (THEOS), Faculté des Sciences et Techniques de l'Ingénieur, École Polytechnique Fédérale de Lausanne, CH-1015 Lausanne, Switzerland; 3National Centre for Computational Design and Discovery of Novel Materials (MARVEL), École Polytechnique Fédérale de Lausanne, CH-1015 Lausanne, Switzerland; 4The NOMAD Laboratory at the Fritz Haber Institute of the Max Planck Society and Humboldt University, Berlin, Germany.

Abstract. Driven by the unprecedented computational power available to scientific research, the use of computers in solid-state physics, chemistry and materials science has been on a continuous rise. This review focuses on the software used for the simulation of matter at the atomic scale. We provide a comprehensive overview of major codes in the field, and analyze how citations to these codes in the academic literature have evolved since 2010. An interactive version of the underlying data set is available at https://atomistic.software.

This LiveCoMS document is maintained online on GitHub at https://github.com/ltalirz/livecoms-atomistic-software; to provide feedback, suggestions, or help improve it, please visit the GitHub repository and participate via the issue tracker. This version dated August 30, 2021. *For correspondence: [email protected] (LT).

1 Introduction. Scientists today have unprecedented access to computational power. … Gaussian [2], were already released in the 1970s, followed by force-field codes, such as GROMOS [3], and periodic …

Lawrence Berkeley National Laboratory Recent Work

Lawrence Berkeley National Laboratory Recent Work. Title: From NWChem to NWChemEx: Evolving with the Computational Chemistry Landscape. Permalink: https://escholarship.org/uc/item/4sm897jh. Journal: Chemical Reviews, 121(8). ISSN: 0009-2665. Authors: Kowalski, Karol; Bair, Raymond; Bauman, Nicholas P; et al. Publication date: 2021-04-01. DOI: 10.1021/acs.chemrev.0c00998. Peer reviewed.

Karol Kowalski,† Raymond Bair,‡ Nicholas P. Bauman,† Jeffery S. Boschen,¶ Eric J. Bylaska,† Jeff Daily,† Wibe A. de Jong,§ Thom Dunning, Jr,† Niranjan Govind,† Robert J. Harrison,‖ Murat Keçeli,‡ Kristopher Keipert,⊥ Sriram Krishnamoorthy,† Suraj Kumar,† Erdal Mutlu,† Bruce Palmer,† Ajay Panyala,† Bo Peng,† Ryan M. Richard,¶ T. P. Straatsma,# Peter Sushko,† Edward F. Valeev,@ Marat Valiev,† Hubertus J. J. van Dam,△ Jonathan M. Waldrop,¶ David B. Williams-Young,§ Chao Yang,§ Marcin Zalewski,† and Theresa L. Windus*,∇

†Pacific Northwest National Laboratory, Richland, WA 99352; ‡Argonne National Laboratory, Lemont, IL 60439; ¶Ames Laboratory, Ames, IA 50011; §Lawrence Berkeley National Laboratory, Berkeley, 94720; ‖Institute for Advanced Computational Science, Stony Brook University, Stony Brook, NY 11794; ⊥NVIDIA Inc, previously Argonne National Laboratory, Lemont, IL 60439; #National Center for Computational Sciences, Oak Ridge National Laboratory, Oak Ridge, TN 37831-6373; @Department of Chemistry, Virginia Tech, Blacksburg, VA 24061; △Brookhaven National Laboratory, Upton, NY 11973; ∇Department of Chemistry, Iowa State University and Ames Laboratory, Ames, IA 50011. E-mail: [email protected]

Abstract. Since the advent of the first computers, chemists have been at the forefront of using computers to understand and solve complex chemical problems.

Advances in Electronic Structure Theory: GAMESS a Decade Later

ADVANCES IN ELECTRONIC STRUCTURE THEORY: GAMESS A DECADE LATER. MARK S. GORDON AND MICHAEL W. SCHMIDT, DEPARTMENT OF CHEMISTRY AND AMES LABORATORY, IOWA STATE UNIVERSITY, AMES, IA 50011. Abstract. Recent developments in advanced quantum chemistry and quantum chemistry interfaced with model potentials are discussed, with the primary focus on new implementations in the GAMESS electronic structure suite of programs. Applications to solvent effects and surface science are discussed. 1. Introduction. The past decade has seen an extraordinary growth in novel electronic structure methods and creative implementations of these methods. Concurrently, there have been important advances in "middleware", software that enables the implementation of efficient electronic structure algorithms. Combined with continuing improvements in computer and interconnect hardware, these advances have extended both the accuracy of computations and the sizes of molecular systems to which such methods may be applied. The great majority of the new computational chemistry algorithms have found their way into one or more broadly distributed electronic structure packages. Since this work focuses on new features of the GAMESS (General Atomic and Molecular Electronic Structure System [1]) suite of codes, it is important at the outset to recognize the many other packages that offer both similar and complementary features. These include ACES [2], CADPAC [3], DALTON [4], GAMESS-UK [5], HYPERCHEM [6], JAGUAR [7], MOLCAS [8], MOLPRO [9], NWChem [10], PQS [11], PSI3 [12], Q-Chem [13], SPARTAN [14], TURBOMOLE [15], and UT-Chem [16]. Some [1,3,4,10] of these packages are distributed at no cost to all, or to academic users, while others are commercial packages, but all are generally available to users without restriction or constraint. Indeed, the developers of these codes frequently collaborate to share features, a practice that clearly benefits all of their users.

Adsorption of Imidazole on Au(111) Surface: Dispersion Corrected Density Functional Study

Adsorption of imidazole on Au(111) surface: Dispersion corrected density functional study. Safia Izzaouihda1, Khaled Mahjoubi2, Hassna Abou El Makarim1, Najia Komiha1, David M. Benoit3. 1LS3ME-Faculté des Sciences, Université Mohammed V Rabat, Morocco; 2LSAMA-Faculté des Sciences, Tunis Al Manar, Tunisia; 3Department of Chemistry, University of Hull, UK. Abstract. We use density functional theory in the generalized gradient approximation to study the adsorption of imidazole on the Au(111) surface and account for dispersion effects using Grimme's empirical dispersion correction technique. Our results show that the adsorption energy of imidazole depends on the slab size and on the adsorption site. In agreement with other studies, we find the largest adsorption energy for imidazole on a top site of Au(111). However, we also note that the adsorption energy at other sites is substantial. Keywords: imidazole, interaction, Au(111) surface, adsorbed sites, DFT, DFT-D3, zeolitic imidazole framework (ZIF). 1. Introduction. In the present study, we investigate the interaction between imidazole and the Au(111) surface. This surface is often used in experimental investigations as it is easy to prepare and clean using standard annealing methods and sputtering techniques (see ref. 1 for example), making it an ideal prototype metal substrate.
The interaction of imidazole building blocks with metal surfaces is also of particular interest to the zeolite community, as there has been a growing trend in coupling porous materials, such as zeolitic imidazolate frameworks (ZIFs), with metallic supports to create a new type of functional material [2,3]. These meta-materials are developed to improve on the current properties of ZIFs for gas separation [4,5], gas storage [6] and catalysis [7]. In this paper, we explore the applicability of two slab models for the Au(111) surface and investigate the adsorption of an imidazole molecule using periodic density functional theory (DFT) in the Gaussian plane-wave pseudopotential formalism.

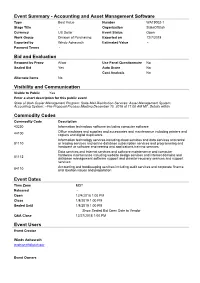

Event Summary

Event Summary - Accounting and Asset Management Software. Type: Best Value. Number: WA19002-1. Stage Title: -. Organization: StateOfUtah. Currency: US Dollar. Event Status: Open. Work Group: Division of Purchasing. Exported on: 12/7/2018. Exported by: Windy Aphayrath. Estimated Value: -. Payment Terms: -.

Bid and Evaluation. Respond by Proxy: Allow. Use Panel Questionnaire: No. Sealed Bid: Yes. Auto Score: No. Cost Analysis: No. Alternate Items: No.

Visibility and Communication. Visible to Public: Yes. Enter a short description for this public event: State of Utah Copier Management Program; State Mail Distribution Services; Asset Management System; Accounting System; --Pre-Proposal Process Meeting December 10, 2018 at 11:00 AM MT. Details within.

Commodity Codes

| Commodity Code | Description |
| --- | --- |
| 43230 | Information technology software including computer software |
| 44100 | Office machines and supplies and accessories and maintenance including printers and copiers and digital duplicators |
| 81110 | Information technology services including cloud services and data services and rental or leasing services and online database subscription services and programming and hardware or software engineering and applications training services |
| 81112 | Data services and internet services and software maintenance and computer hardware maintenance including website design services and internet domains and database management software support and disaster recovery services and support services |
| 84110 | Accounting and bookkeeping services including audit services and corporate finance and taxation issues and preparation |

DALTON2011 Program Manual

DALTON2011 Program Manual. C. Angeli, K. L. Bak, V. Bakken, O. Christiansen, R. Cimiraglia, S. Coriani, P. Dahle, E. K. Dalskov, T. Enevoldsen, B. Fernandez, L. Ferrighi, L. Frediani, C. Hättig, K. Hald, A. Halkier, H. Heiberg, T. Helgaker, H. Hettema, B. Jansik, H. J. Aa. Jensen, D. Jonsson, P. Jørgensen, S. Kirpekar, W. Klopper, S. Knecht, R. Kobayashi, J. Kongsted, H. Koch, A. Ligabue, O. B. Lutnæs, K. V. Mikkelsen, C. B. Nielsen, P. Norman, J. Olsen, A. Osted, M. J. Packer, T. B. Pedersen, Z. Rinkevicius, E. Rudberg, T. A. Ruden, K. Ruud, P. Sałek, C. C. M. Samson, A. Sanchez de Meras, T. Saue, S. P. A. Sauer, B. Schimmelpfennig, A. H. Steindal, K. O. Sylvester-Hvid, P. R. Taylor, O. Vahtras, D. J. Wilson, and H. Ågren.

Contents: Preface. 1 Introduction; 1.1 General description of the manual; 1.2 Acknowledgments. 2 New features in the Dalton releases; 2.1 New features in Dalton2011; 2.2 New features in Dalton 2.0 (2005); 2.3 New features in Dalton 1.2. Part I, DALTON Installation Guide: 3 Installation; 3.1 Hardware/software supported; 3.2 Source files; 3.3 Installing the program using the Makefile; 3.4 Running the dalton2011 test suite; 4 Maintenance; 4.1 Memory requirements; 4.1.1 Redimensioning dalton2011; 4.2 New versions, patches; 4.3 Reporting bugs and user support. Part II, DALTON User's Guide: 5 Getting started with dalton2011; 5.1 The DALTON.INP file