Operating Systems

Recommended publications

Linux Kernal II 9.1 Architecture

The Linux kernel is a Unix-like operating system kernel used by a variety of operating systems based on it, usually in the form of Linux distributions. The Linux kernel is a prominent example of free and open source software.

Programming language: The Linux kernel is written in the version of the C programming language supported by GCC (which has introduced a number of extensions and changes to standard C), together with a number of short sections of code written in the assembly language (in GCC's "AT&T-style" syntax) of the target architecture. Because of the extensions to C it supports, GCC was for a long time the only compiler capable of correctly building the Linux kernel.

Compiler compatibility: GCC is the default compiler for the Linux kernel source. In 2004, Intel claimed to have modified the kernel so that its C compiler was also capable of compiling it. There was another such reported success in 2009 with a modified 2.6.22 version of the kernel. Since 2010, an effort has been underway to build the Linux kernel with Clang, an alternative compiler for the C language; as of 12 April 2014, the official kernel could almost be compiled by Clang. The project dedicated to this effort is named LLVMLinux, after the LLVM compiler infrastructure upon which Clang is built. LLVMLinux does not aim to fork either the Linux kernel or LLVM; it is a meta-project composed of patches that are eventually submitted to the upstream projects.

A Comparison of Two Distributed Systems

Finny Varghese

Topics
• Design Philosophies: application environment, processor allocation
• Design Consequences: kernel architecture, communication mechanism, file system, process management

Amoeba vs. Sprite
Two philosophical grounds:
• Distributed computing model vs. Unix-style applications
• Workstation-centered model vs. combination of terminal with a shared processor pool

Amoeba:
• User-level IPC mechanism
• Caches files only on servers
• Centralized server to allocate processors

Sprite:
• RPC model, for kernel use
• Client-level caching
• Process migration model

[Figures: Amoeba System; Sprite System]

Design Philosophies
1. How to design a distributed file system with secondary storage shared?
2. How to allow a collection of processors to be exploited by individual users?

Application Environment
Amoeba:
• Process or file = object
• Capability
• Port: hides the server from objects
• Uniform communication model
• Easier writing of distributed applications
• Orca programming language

Sprite:
• Eases the transition from time-sharing to networked workstations
• Caching file data on workstations
• Little or no IPC

Processor Allocation
• Pure "workstation": execute tasks on one machine
• Pure "processor pool": equal access to all processors
• Amoeba: closer to the processor pool model
• Sprite: closer to the workstation model

Processor Allocation: Amoeba
• "Pool processor": network interface and RAM
• Unlike a pure processor pool: processors are allocated outside the pool for system services
• Terminals: only a display server
3 reasons for this choice:
1. Assumption that processor and memory prices decrease
2. Assumption that the cost of adding a new processor would be less than that of adding a workstation
3. Entire distributed system as a time-sharing system

Processor Allocation: Sprite
• Priority, processing power of a workstation
• Unlike pure workstations: uses processing power of idle hosts
• Dedicated file servers: not for applications
3 reasons for this choice:
1.

Simulation and Comparison of Various Scheduling Algorithm for Improving the Interrupt Latency of Real –Time Kernal

Journal of Computer Science and Applications. ISSN 2231-1270, Volume 6, Number 2 (2014), pp. 115-123. © International Research Publication House, http://www.irphouse.com

Lavanya Dhanesh (Research Scholar, Sathyabama University, Chennai, India) and Dr. P. Murugesan (Professor, S.A. Engineering College, Chennai, India)

Abstract: The main objective of the research is to improve real-time interrupt latency using a pre-emptive task scheduling algorithm. Interrupt latency is an important metric of the performance of a real-time kernel. Research so far has investigated real-time latency reduction as a way to improve task switching as well as the performance of the CPU. Based on the literature survey, pre-emptive task scheduling plays a vital role in improving interrupt latency. A general disadvantage of the non-preemptive discipline is that it introduces additional blocking time in higher-priority tasks, thereby reducing schedulability. If the interrupt latency increases, the task switching delay increases for each task. Hence most research work has focused on reducing interrupt latency by various methods. The key observation is that we cannot control the hardware interrupt delay, but we can make interrupt servicing as quick as possible by reducing the number of preemptions. Based on this idea, much research has gone into optimizing the pre-emptive scheduling scheme to reduce real-time interrupt latency.
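The blocking effect described in the abstract can be illustrated with a toy single-CPU simulation. This is a minimal sketch and not the paper's algorithm: the `Job` fields, the `simulate` function, the "lower number means higher priority" convention, and the two-job workload are all assumptions made for illustration, and time advances in unit ticks.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int  # lower number = higher priority (assumed convention)
    release: int   # tick at which the job becomes ready
    compute: int   # ticks of CPU time the job needs


def simulate(jobs, preemptive):
    """Return {job name: finish tick} for fixed-priority scheduling on one CPU."""
    remaining = {j.name: j.compute for j in jobs}
    finish = {}
    running = None
    t = 0
    while len(finish) < len(jobs):
        ready = [j for j in jobs if j.release <= t and j.name not in finish]
        if ready:
            best = min(ready, key=lambda j: j.priority)
            # Non-preemptive: a job that has started keeps the CPU until done.
            if preemptive or running is None:
                running = best
            remaining[running.name] -= 1
            if remaining[running.name] == 0:
                finish[running.name] = t + 1
                running = None
        t += 1
    return finish


# Hypothetical workload: a long low-priority job starts first,
# then a short high-priority job arrives one tick later.
jobs = [Job("low", priority=2, release=0, compute=5),
        Job("high", priority=1, release=1, compute=2)]
print(simulate(jobs, preemptive=True))
print(simulate(jobs, preemptive=False))
```

With this workload the high-priority job finishes at tick 3 when preemption is allowed and at tick 7 when it is not; the 4-tick difference is the blocking time imposed by the low-priority job.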

Get the Inside Track on 27 Years of Microkernel Innovation

Sebastien and Colin talked about the history of the QNX Microkernel, the new Hybrid Development Model and then got into some details of how the kernel and the process manager actually work.

Archived Web Broadcast
The On-Demand version of the broadcast is available here: http://seminar2.techonline.com/s/qnx_oct1707

Slides
Here are the slides from the webinar (sorry, they are in PowerPoint format): Oct27_Microkernel_Innovation/ Webinar_kernel_oct07_final.ppt

Questions From The Webinar
There were loads of questions during the webinar!

Q: Are there any QNX kernel development books being written or available now?
A: Not that I'm aware of - CB

Q: Does the Momentics version contain the development system and also the OS for the BSP?

Q: What tools does one need to try this out?

Q: Is the uK student version full or minimal?
A: There is only one version - the student and non-commercial aspect is simply the license agreement you select when you download - CB

Q: Has or will QNX publish a set of development standards or procedures (e.g., coding conventions)?
A: Yes - see the developers info page (OSDeveloperInformation) for our coding guidelines - CB

Q: How would the "QNX Community" work with the hybrid SW model? How do people outside of your company contribute?
A: The OSDeveloperInformation page covers how to contribute - CB

Q: Could the hybrid source model adversely affect the stability of customer products, i.e. enforced product releases for bug fixes?
A: Our regular releases are still going to be as thoroughly tested as before; there should be no stability problems introduced by sharing our code.

History of General-Purpose Operating Systems Unix Opera

Software systems and issues
• operating systems
  – controlling the computer
• file systems and databases
  – storing information
• applications
  – programs that do things
• middleware, platforms
  – where programs meet systems
• interfaces, standards
  – agreements on how to communicate and inter-operate
• open source software
  – freely available software
• intellectual property
  – copyrights, patents, licenses

Operating system
• a program that controls the resources of a computer
  – interface between hardware and all other software
  – examples: Windows 95/98/NT/ME/2000/XP/Vista/7, Unix/Linux, Mac OS X, Symbian, PalmOS, ...
• runs other programs ("applications", your programs)
• manages information on disk (file system)
• controls peripheral devices, communicates with outside
• provides a level of abstraction above the raw hardware
  – makes the hardware appear to provide higher-level services than it really does
  – makes programming much easier

History of general-purpose operating systems
• 1950's: signup sheets
• 1960's: batch operating systems
  – operators running batches of jobs
  – OS/360 (IBM)
• 1970's: time-sharing
  – simultaneous access for multiple users
  – Unix (Bell Labs; Ken Thompson & Dennis Ritchie)
• 1980's: personal computers, single user systems
  – DOS, Windows, MacOS
  – Unix
• 1990's: personal computers, PDA's, …

What's an operating system?
"Operating system" means the software code that, inter alia, (i) controls the allocation and usage of hardware resources (such as the microprocessor and various peripheral devices) of a Personal Computer, (ii) provides a platform for developing applications by exposing functionality to ISVs through APIs, and (iii) supplies a user interface that enables users to access functionality of the operating system and in which they can run applications.
US District Court for the District of Columbia, Final Judgment, State of New York, et al v.

Course Title

Linux Internals

Course Summary

Description: This is an intensive course designed to provide an in-depth examination of the Linux kernel architecture, including error handling, system calls, memory and process management, filesystem, and peripheral devices. This course includes concept lectures and discussions, demonstrations, and hands-on programming exercises.

Objectives: At the end of this course, students will be able to:
• Identify and understand the components of the Linux system and its file system
• Boot a Linux system and identify the boot phases
• Understand and utilize selected services
• Identify and understand the various components of the Linux kernel
• Utilize and change kernel parameters
• Understand and explain error handling
• Understand and explain memory management
• Understand and explain process management
• Understand and explain process scheduling

Topics
• Introduction
• Booting Linux
• Selected Services
• The Linux Kernel
• Kernel Parameters
• Kernel Modules
• Kernel Error Handling and Monitoring
• Kernel Synchronization
• System Calls
• Memory Management
• Process Management
• Process Scheduling
• Signals
• IPC (Interprocess Communication)
• The Virtual Filesystem
• Interrupts
• Time and Timers
• Device Drivers

Audience: This course is designed for Technology Professionals who need to understand, modify, support, and troubleshoot the Linux Operating System.

Prerequisites: Students should be proficient with basic tools such as vi, emacs, and file utilities. Experience with systems programming in a UNIX or Linux environment is recommended.

Duration: Five days

Improving IPC by Kernel Design

Jochen Liedtke, German National Research Center for Computer Science
Presented by Emalayan

Agenda
• Introduction to Micro Kernel
• Design Objectives
• L3 & Mach Architecture
• Design
  – Architectural Level
  – Algorithmic Level
  – Interface Level
  – Coding Level
• Results
• Conclusion

Micro Kernels
• Micro kernels
  – Microkernel architectures introduce a heavy reliance on IPC, particularly in modular systems
  – Mach pioneered an approach to highly modular and configurable systems, but had poor IPC performance
  – Poor performance leads people to avoid microkernels entirely, or architect their design to reduce IPC
  – Paper examines a performance-oriented design process and specific optimizations that achieve good performance
• The rationale behind its design is to separate mechanism from policy, allowing many policy decisions to be made at user level
• Popular Micro kernels: L3 & Mach

Design Objectives
• IPC performance is the primary objective
• Design discussions before implementation
• Poor performance replacements
• Top to bottom detailed design (from architecture to coding level)
• Consider synergetic effects
• Design with concrete basis
• Design with concrete performance

L3 & Mach Architecture
• Similarities
  – Tasks, threads
  – Virtual memory subsystem with external pager interface
  – IPC via messages
• L3: synchronous IPC via threads
• Mach: asynchronous IPC via ports
• Difference: ports
• L3 is used as the workbench

Performance Objective
• Finding out the best possible case: NULL message transfer using IPC
• Goal
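The "NULL message transfer" benchmark mentioned in the slides can be approximated with a small round-trip timing harness. This is a rough sketch under several assumptions, not the measurement method of the paper: the function name `null_round_trip_seconds` is hypothetical, a one-byte write stands in for a null message (a pipe cannot carry an empty one), and the two endpoints are threads in one process rather than separate address spaces, so the numbers are not comparable to L3 or Mach figures.

```python
import os
import threading
import time


def null_round_trip_seconds(iterations=1000):
    """Average time for one request/reply exchange over a pair of pipes."""
    req_r, req_w = os.pipe()  # client -> server
    rep_r, rep_w = os.pipe()  # server -> client

    def server():
        # Echo one reply byte for every request byte received.
        for _ in range(iterations):
            os.read(req_r, 1)
            os.write(rep_w, b"\0")

    worker = threading.Thread(target=server)
    worker.start()

    start = time.perf_counter()
    for _ in range(iterations):
        os.write(req_w, b"\0")  # send the stand-in "null" message
        os.read(rep_r, 1)       # block until the reply arrives (synchronous style)
    elapsed = time.perf_counter() - start

    worker.join()
    for fd in (req_r, req_w, rep_r, rep_w):
        os.close(fd)
    return elapsed / iterations


print(f"{null_round_trip_seconds() * 1e6:.1f} microseconds per round trip")
```

Because the client blocks on every reply, the loop mirrors the synchronous style the slides attribute to L3; an asynchronous variant would queue several requests before reading any replies.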

Operating System Interview Questions Q1.Explain the Meaning of Kernal

Q1. Explain the meaning of kernel.

Answer: The kernel is the essential center of a computer operating system, the core that provides basic services for all other parts of the operating system. As a basic component of an operating system, a kernel provides the lowest-level abstraction layer for the resources. The kernel's primary purpose is to manage the computer's resources and allow other programs to run and use resources such as the CPU, memory and the I/O devices in the computer.

The facilities provided by the kernel are:
• Memory management: The kernel has full access to the system's memory and must allow processes to safely access this memory as they require it.
• Device management: To perform useful functions, processes need access to the peripherals connected to the computer, which are controlled by the kernel through device drivers.
• System calls: To actually perform useful work, a process must be able to access the services provided by the kernel.

Types of kernel:
• Monolithic kernels: Every part that is to be accessed by most programs and cannot be put in a library is in kernel space: device drivers, scheduler, memory handling, file systems, network stacks.
• Microkernels: Only the parts that really require privileged mode are in kernel space: inter-process communication, basic scheduling, basic memory handling, basic I/O primitives. As a result, some critical parts run in user space: the complete scheduler, memory handling, file systems, network stacks.

Q2. Explain the meaning of Kernel.

Kernel is the core module of an operating system.
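The system-call facility described in the answer above can be seen from user space with a few lines of Python. This is a minimal sketch, assuming a temporary file named `demo.txt` (a hypothetical name) and a helper `write_then_read` invented for this example; the `os.open`, `os.write`, `os.read`, and `os.close` functions are thin wrappers over the corresponding kernel services.

```python
import os
import tempfile


def write_then_read(path, payload):
    """Write payload to path and read it back using file-descriptor calls."""
    # Each os.* call below asks the kernel to do the work; the process
    # itself never touches the storage device.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, payload)
    finally:
        os.close(fd)

    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(payload))
    finally:
        os.close(fd)


with tempfile.TemporaryDirectory() as tmp:
    data = write_then_read(os.path.join(tmp, "demo.txt"), b"hello kernel")
    print(data)
```

The file descriptor returned by `os.open` is the handle through which the kernel mediates every later access, which is the memory- and device-management role described in the list of facilities.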

Embedded Systems: a Contemporary Design Tool James Peckol

OS?
Based on Embedded Systems: A Contemporary Design Tool (James Peckol) and EE472 Lecture Notes Pack (Blake Hannaford, James Peckol, Shwetak Patel)
CSE 466, Tasks and Scheduling

Why would anyone want an OS?
• Goal: run multiple programs on the same HW "simultaneously", i.e. multi-tasking (it means more than surfing Facebook during lecture)
• Problem: how to share resources and avoid interference
  – To be shared: processor, memory, GPIOs, PWM, timers, counters, ADCs, etc.
  – In the embedded case, we may need to do the sharing while respecting "real time" constraints
• OS is responsible for scheduling the various jobs
• Also: OS provides abstractions of HW (e.g. device drivers) that make code more portable and re-usable, as well as enabling sharing
  – Code re-use is a key goal of ROS ("meta-operating system")
• Power: maintain state across power loss, power-aware scheduling

Tasks / Processes, Threads
• Task or process: unit of code and data; a program running in its own memory space
• Thread: smaller than a process
  – A single process can contain several threads
  – Memory is shared across threads but not across processes
[Diagram: Task 1, Task 2, Task 3; states Ready, Running, Waiting]
With just 1 task, it is either Running or Ready Waiting

Types of tasks
• Periodic (hard real time)
  – Control: sense, compute, and generate new motor cmd every 10 ms
  – Multimedia: sample audio, compute filter, generate DAC output every 22.73 µs
  – Characterized by P (period), C (compute time; may differ from instance to instance, but C <= P), and D (deadline; useful if start time of task is variable), with C < D < P
• Intermittent: characterized by C and D, but no P
• Background: soft real-time or non-real-time; characterized by C only
• Complex
  – Examples: MS Word, web server
  – Continuous need for CPU
  – Requests for IO or user input free the CPU

Scheduling strategies
• Multiprogramming: running task continues until a stopping point (e.g.
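The P, C, D characterization of periodic tasks in the excerpt above lends itself to a quick sanity check. The following is a minimal sketch, not taken from the lecture notes: the `PeriodicTask` class and `utilization` function are hypothetical, the compute times (2 ms and 5 µs) are invented for illustration, and the slide's ordering is relaxed to C <= D <= P so that a deadline equal to the period is accepted. Only the 10 ms and 22.73 µs periods come from the excerpt.

```python
from dataclasses import dataclass


@dataclass
class PeriodicTask:
    name: str
    period: float    # P, in seconds
    compute: float   # C, worst-case seconds of CPU per instance
    deadline: float  # D, seconds after release

    def is_well_formed(self):
        # Relaxed form of the slide's constraint: C <= D <= P.
        return 0 < self.compute <= self.deadline <= self.period


def utilization(tasks):
    """Fraction of one CPU consumed by the task set: sum of C / P."""
    return sum(t.compute / t.period for t in tasks)


tasks = [
    # Periods from the excerpt; compute times are assumed values.
    PeriodicTask("motor_control", period=10e-3, compute=2e-3, deadline=10e-3),
    PeriodicTask("audio_filter", period=22.73e-6, compute=5e-6, deadline=22.73e-6),
]

for task in tasks:
    print(task.name, "well formed:", task.is_well_formed())
print("total utilization:", round(utilization(tasks), 3))
```

A total utilization above 1 means no scheduler on a single CPU can meet every deadline; a value at or below 1 is necessary but, depending on the scheduling strategy, not always sufficient.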

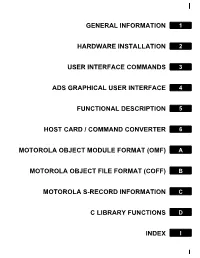

(DSP) Application Development System (ADS) User's Manual

DIGITAL SIGNAL PROCESSOR (DSP) Application Development System (ADS) User's Manual
PRELIMINARY

1 GENERAL INFORMATION
2 HARDWARE INSTALLATION
3 USER INTERFACE COMMANDS
4 ADS GRAPHICAL USER INTERFACE
5 FUNCTIONAL DESCRIPTION
6 HOST CARD / COMMAND CONVERTER
A MOTOROLA OBJECT MODULE FORMAT (OMF)
B MOTOROLA OBJECT FILE FORMAT (COFF)
C MOTOROLA S-RECORD INFORMATION
D C LIBRARY FUNCTIONS
I INDEX

Motorola, Incorporated, Semiconductor Products Sector, Wireless Signal Processing Division, 6501 William Cannon Drive West, Austin, TX 78735-8598

This document (and other documents) can be viewed on the World Wide Web at http://www.motorola-dsp.com. OnCE is a trademark of Motorola, Inc. MOTOROLA INC., 1989, 1997. Order this document by DSPADSUM/AD.

Motorola reserves the right to make changes without further notice to any products herein to improve reliability, function, or design. Motorola does not assume any liability arising out of the application or use of any product or circuit described herein; neither does it convey any license under its patent rights nor the rights of others. Motorola products are not authorized for use as components in life support devices or systems intended for surgical implant into the body or intended to support or sustain life. Buyer agrees to notify Motorola of any such intended end use whereupon Motorola shall determine availability and suitability of its product or products for the use intended.

Kernal Configuration Parameter Help—Non Networking Part

CONFIG_EXPERIMENTAL: Some of the various things that Linux supports (such as network drivers, file systems, network protocols, etc.) can be in a state of development where the functionality, stability, or the level of testing is not yet high enough for general use. This is usually known as the "alpha-test" phase amongst developers. If a feature is currently in alpha-test, then the developers usually discourage uninformed widespread use of this feature by the general public to avoid "Why doesn't this work?" type mail messages. However, active testing and use of these systems is welcomed. Just be aware that it may not meet the normal level of reliability or it may fail to work in some special cases. Detailed bug reports from people familiar with the kernel internals are usually welcomed by the developers (before submitting bug reports, please read the documents README, MAINTAINERS, REPORTING-BUGS, Documentation/BUG-HUNTING, and Documentation/oops-tracing.txt in the kernel source).

This option will also make obsoleted drivers available. These are drivers that have been replaced by something else, and/or are scheduled to be removed in a future kernel release.

Unless you intend to help test and develop a feature or driver that falls into this category, or you have a situation that requires using these features, you should probably say N here, which will cause this configure script to present you with fewer choices. If you say Y here, you will be offered the choice of using features or drivers that are currently considered to be in the alpha-test phase.
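Whether an option such as CONFIG_EXPERIMENTAL was answered Y or N can be checked by reading the generated configuration file. The sketch below assumes the usual `.config` text layout, where an enabled option appears as `CONFIG_NAME=y` and a disabled one as the comment `# CONFIG_NAME is not set`. The `parse_kconfig` helper, the sample text, and the option name `CONFIG_OBSOLETE_DRIVER` are hypothetical and exist only for illustration.

```python
def parse_kconfig(text):
    """Map option names to values from kernel .config-style text."""
    suffix = " is not set"
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("# CONFIG_") and line.endswith(suffix):
            # Disabled options are recorded as comments; report them as "n".
            options[line[2:-len(suffix)]] = "n"
        elif line.startswith("CONFIG_") and "=" in line:
            name, value = line.split("=", 1)
            options[name] = value
    return options


# Hypothetical fragment; CONFIG_OBSOLETE_DRIVER is an invented option name.
sample = """\
# Hypothetical fragment for illustration
CONFIG_EXPERIMENTAL=y
# CONFIG_OBSOLETE_DRIVER is not set
"""

config = parse_kconfig(sample)
print(config.get("CONFIG_EXPERIMENTAL", "n"))
```

Options that are absent from the file are treated as disabled by the `get` call's default, which matches the effect of answering N at the prompt.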

CYBERSECURITY When Will You Be Hacked?

SUFFOLK ACADEMY OF LAW
The Educational Arm of the Suffolk County Bar Association
560 Wheeler Road, Hauppauge, NY 11788, (631) 234-5588

CYBERSECURITY: When Will You Be Hacked?
FACULTY: Victor John Yannacone, Jr., Esq.
April 26, 2017, Suffolk County Bar Center, NY
Cybersecurity Part I, 12 May 2017

COURSE MATERIALS
1. A cybersecurity primer
1.1. Cybersecurity practices for law firms
1.2. Cybersecurity and the future of law firms
2. Information Security
2.1. An information security policy
2.2. Data Privacy & Cloud Computing
2.3. Encryption
3. Computer security
3.1. NIST Cybersecurity Framework
4. Cybersecurity chain of trust; third party vendors
5. Ransomware
5.1. Exploit kits
6. Botnets
7. BIOS
7.1. Unified Extensible Firmware Interface (UEFI)
8. Operating Systems
8.1. Microsoft Windows
8.2. macOS
8.3. Open source operating system comparison
9. Firmware
10. Endpoint Security Buyers Guide
11. Glossaries & Acronym Dictionaries
11.1. Common Computer Abbreviations
11.2. BABEL
11.3. Information Technology Acronyms
11.4. Glossary of Operating System Terms

Cyber Security Primer
Network outages, hacking, computer viruses, and similar incidents affect our lives in ways that range from inconvenient to life-threatening. As the number of mobile users, digital applications, and data networks increases, so do the opportunities for exploitation. Cyber security, also referred to as information technology security, focuses on protecting computers, networks, programs, and data from unintended or unauthorized access, change, or destruction.