Coupling Natural Language Interfaces to Database and Named Entity Recognition

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Original Article Being John Malkovich and the Deal with the Devil in If I Were

Excessive projective identification – Rosa. Original article. Being John Malkovich and the deal with the devil in Se Eu Fosse Você (If I Were You): fates of excessive projective identification. Antonio Marques da Rosa*

A puppet looks at itself in a mirror and does not like what it sees. It then begins a frenzied dance in which it smashes every object on the set. After throwing uncontrolled punches and kicks, it performs a few acrobatic pirouettes, turns several backward somersaults, and lets itself come to rest on the floor, abandoned, limp, dismembered. So begins the story of Craig Schwartz (John Cusack), a puppeteer who expresses himself through his puppets. He is what American society has come to call a loser: someone who cannot advance in his career and keeps "going wrong". Despite his undeniable talent, he goes unrecognized and finds no success in the world of marionettes. Not without reason, he named the puppet's opening scene the "Dance of Despair and Disillusionment".

Artistic production has always offered good opportunities to illustrate psychoanalytic concepts. Freud, an admirer of applied psychoanalysis, practiced it masterfully on several occasions, as in 1910 with Leonardo's paintings. Later, in 1957, Racker, working in the field of film, offered a brilliant study of the primal scene based on Hitchcock's Rear Window. In 1994, at a scientific event in Gramado, Kernberg argued that cinema is currently the branch of the arts that most richly expresses human conflicts. I think that, like any creation, a film is steeped in unconscious fantasies, though not those of a single creator, as with a painting, but of several: the author of the original work, the screenwriter, the director, and the actors who

Subjectivity, Difference, and Proximity in Transnational Film and Literature

UNIVERSITY OF CALIFORNIA RIVERSIDE. Errancies of Desire: Subjectivity, Difference, and Proximity in Transnational Film and Literature. A Dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Comparative Literature by Vartan Patrick Messier, March 2011. Dissertation Committee: Dr. Marguerite Waller, Co-Chairperson; Dr. Marcel Hénaff, Co-Chairperson; Dr. Sabine Doran; Dr. Jennifer Doyle. Copyright by Vartan Patrick Messier 2011. The Dissertation of Vartan P. Messier is approved (signature lines for the committee, including the two Committee Co-Chairpersons). University of California, Riverside.

ACKNOWLEDGMENTS. This project has benefited considerably from the guidance and suggestions of professors and colleagues. First and foremost, Margie Waller deserves my utmost gratitude for her unwavering support and constructive criticism throughout the duration of the project. Likewise, I would like to thank Sabine Doran for her commitment and dedication, as well as her excellent mentoring, especially in the earlier stages of the process. My thanks go to Marcel Hénaff who graciously accepted to co-chair the dissertation, and whose unique insights on intellectual history proved invaluable. As well, revisions were facilitated by Jennifer Doyle’s refined critique on the ideas permeating

Popular Culture, Film and Media Summer 2015 Instructor: Tolga

Popular Culture, Film and Media, Summer 2015. Boğaziçi University, Department of Western Languages and Literatures. Instructor: Tolga Yalur. E-mail contact: [email protected]

Assessment Method: Class participation, attendance 45%; Term paper 55%.

Course Description: This course is designed to analyze the basic patterns and pillars of popular culture as they are used and revealed in certain examples from American and world cinemas to present to masses the basic institutions of popular culture. In method, it is essentially a film-reading course.

Course Content: The meaning of “popular”, a brief history of popular culture, its main pillars and basic elements, characteristic features, paradigms, replication myth and the influence of myths like creation myths, themes and patterns. Readings of films from USA, Turkey, England, TV shows and programs, music, and art, namely sculpture and architecture; their language, style and presentations; their artistic, political and ideological interpretations.

Course Materials: Fishwick, Marshall W. Seven Pillars of Popular Culture (Westport: Greenwood Press, 1985). Storey, John. Cultural Theory and Popular Culture: An Introduction (Longman, 2008).

Supplementary Books: Baudrillard, Jean. Amerika. Trans. Yaşar Avunç (İstanbul: Ayrıntı, 1996). Somay, Bülent. Tarihin Bilinçdışı: Popüler Kültür Üzerine Denemeler (İstanbul: Metis, 2004). Eradam, Yusuf. Vanilyalı İdeoloji (İstanbul: Aykırı Yayıncılık, 2004). Güngör, Nazife (Ed.). Popüler Kültür ve İktidar: Popüler Kültür Üzerine Kuramsal İncelemeler (Ankara: Vadi Yayınları, 1999).

Week 1: Popular culture. Screening and Discussion: Film clips and music videos: Grease (1978), Saturday Night Fever (1977), Tootsie (1982), Michael Jackson’s Thriller, Luciano Pavarotti’s Concert in Hyde Park (London, 1991). Readings: Storey, John. Ch.1 “What is popular culture?” Fishwick, Marshall W.

Hits the Big Screen

Liberty & Law, April 2018, Volume 27 Issue 2. Published bimonthly by the Institute for Justice.

Contents: The Kelo Case Hits the Big Screen (John E. Kramer); IJ Fights to End For-Profit Prosecution in California (Joshua House); Victory for Free Speech at the Colorado Supreme Court (Paul Sherman); IJ’s Network of Volunteer Lawyers Expands the Fight for Liberty (Dana Berliner); Victory! Mario Levels Up, Unlocks Free Speech Achievement (Robert Frommer); Does the Eighth Amendment Protect Against State and Local Forfeitures? (Sam Gedge); Overcoming Hurdles, The IJ Way (Robert McNamara); Notable Media Mentions.

About the publication: Liberty & Law is published bimonthly by the Institute for Justice, which, through strategic litigation, training, communication, activism and research, advances a rule of law under which individuals can control their destinies as free and responsible members of society. IJ litigates to secure economic liberty, educational choice, private property rights, freedom of speech and other vital individual liberties, and to restore constitutional limits on the power of government.

Editor: Shira Rawlinson and John E. Kramer. Layout & Design: Laura Maurice-Apel. General Information: (703) 682-9320. Donations: Ext. 233. Media: Ext. 205. Website: www.ij.org. Email: [email protected]. Donate: www.ij.org/donate. facebook.com/instituteforjustice. youtube.com/instituteforjustice.

Movie Titles List, Genre

ALLEGHENY COLLEGE GAME ROOM MOVIE LIST SORTED BY GENRE. As of December 2020. Columns: TITLE, TYPE, GENRE.

ACTION (type DVD, genre Action in each complete row): 300; 12 Years a Slave; 2 Guns; 21 Jump Street; 28 Days Later; 3 Days to Kill; Abraham Lincoln Vampire Hunter; Accountant, The; Act of Valor; Aliens; Allegiant (The Divergent Series); Allied; American Made; Apocalypse Now Redux; Army Of Darkness; Avatar; Avengers, The; Avengers, End Game; Aviator; Back To The Future I; Bad Boys; Bad Boys II; Batman Begins; Batman v Superman: Dawn of Justice; Black Hawk Down; Black Panther; Blood Father; Body of Lies; Boondock Saints; Bourne Identity, The; Bourne Legacy, The; Bourne Supremacy, The; Bourne Ultimatum, The; Braveheart; Brooklyn's Finest; Captain America: Civil War; Captain America: The First Avenger; Casino Royale; Catwoman; Central Intelligence; Commuter, The; Dark Knight Rises, The; Dark Knight, The; Day After Tomorrow, The; Deadpool; Die Hard; District 9; Divergent; Doctor Strange (Marvel); Dragon Blade; Elysium; Ender's Game; Equalizer; Equalizer 2, The; Eragon

35 Years of Nominees and Winners

35 Years of Nominees and Winners. 2021 Nominees (Winners in bold)

BEST FEATURE (Award given to the producer): *NOMADLAND, PRODUCERS: Mollye Asher, Dan Janvey, Frances McDormand, Peter Spears, Chloé Zhao. FIRST COW, PRODUCERS: Neil Kopp, Vincent Savino, Anish Savjani. MA RAINEY’S BLACK BOTTOM, PRODUCERS: Todd Black, Denzel Washington, Dany Wolf. MINARI, PRODUCERS: Dede Gardner, Jeremy Kleiner, Christina Oh. NEVER RARELY SOMETIMES ALWAYS, PRODUCERS: Sara Murphy, Adele Romanski.

JOHN CASSAVETES AWARD (Award given to the best feature made for under $500,000; award given to the writer, director, and producer): *RESIDUE, WRITER/DIRECTOR: Merawi Gerima. THE KILLING OF TWO LOVERS, WRITER/DIRECTOR/PRODUCER: Robert Machoian; PRODUCERS: Scott Christopherson, Clayne Crawford. LA LEYENDA NEGRA, WRITER/DIRECTOR: Patricia Vidal Delgado; PRODUCERS: Alicia Herder, Marcel Perez. LINGUA FRANCA, WRITER/DIRECTOR/PRODUCER: Isabel Sandoval; PRODUCERS: Darlene Catly Malimas, Jhett Tolentino, Carlo Velayo. SAINT FRANCES, DIRECTOR/PRODUCER: Alex Thompson; WRITER: Kelly O’Sullivan

BEST MALE LEAD: *RIZ AHMED - Sound of Metal. CHADWICK BOSEMAN - Ma Rainey’s Black Bottom. ADARSH GOURAV - The White Tiger. ROB MORGAN - Bull. STEVEN YEUN - Minari.

BEST SUPPORTING FEMALE: *YUH-JUNG YOUN - Minari. ALEXIS CHIKAEZE - Miss Juneteenth. YERI HAN - Minari. VALERIE MAHAFFEY - French Exit. TALIA RYDER - Never Rarely Sometimes Always.

BEST FIRST FEATURE (Award given to the director and producer): *SOUND OF METAL

BEST SUPPORTING MALE: *PAUL RACI - Sound of Metal. COLMAN DOMINGO - Ma Rainey’s Black Bottom. ORION LEE - First

Postclassical Hollywood/Postmodern Subjectivity Representation In

Postclassical Hollywood/Postmodern Subjectivity Representation in Some ‘Indie/Alternative’ Indiewood Films. Jessica Murrell. Thesis submitted for the Degree of Doctor of Philosophy, Discipline of English, Faculty of Humanities and Social Sciences, University of Adelaide, August 2010.

Contents

Abstract
Declaration
Acknowledgements
Introduction
Chapter 1. Critical Concepts/Critical Contexts: Postmodernism, Hollywood, Indiewood, Subjectivity
Defining the Postmodern
Postmodernism, Cinema, Hollywood
Defining Indiewood
Subjectivity and the Classical Hollywood Cinema
Chapter 2. Depthlessness in American Psycho and Being John Malkovich
Depthlessness, Hermeneutics, Subjectivity

What Is It Like to Be John Malkovich?: the Exploration of Subjectivity in Being John Malkovich Tom Mcclelland University of Suss

Postgraduate Journal of Aesthetics, Vol. 7, No. 2, August 2010. Special issue on Aesthetics and Popular Culture. WHAT IS IT LIKE TO BE JOHN MALKOVICH?: THE EXPLORATION OF SUBJECTIVITY IN BEING JOHN MALKOVICH. TOM MCCLELLAND, UNIVERSITY OF SUSSEX

I. INTRODUCTION

“After decades in the shadows”, Murray Smith and Thomas Wartenberg proclaim, “the philosophy of film has arrived, borne on the currents of the wider revival of philosophical aesthetics.” [1] Of specific interest in this growing discipline is the notion of film as philosophy. In a question: “To what extent can film - or individual films - act as a vehicle of or forum for philosophy itself?” [2] Many have responded that films can indeed do philosophy, in some significant sense. [3] Furthermore, it has been claimed that this virtue does not belong solely to ‘art’ films, but that popular cinema too can do philosophy. [4] A case in point is Spike Jonze’s 1999 film Being John Malkovich, the Oscar-nominated screenplay of which was written by Charlie Kaufman. The outrageous premise of this comic fantasy is summarised by the film’s protagonist, Craig Schwartz:

There’s a tiny door in my office Maxine. It’s a portal, and it takes you inside John Malkovich. You see the world through John Malkovich's eyes. And then, after about fifteen minutes, you're spit out into a ditch on the side of the New Jersey Turnpike.

The philosophical issues that this scenario raises are manifold.

Notes: [1] Smith and Wartenberg (2006), p. 1. [2] Ibid. [3] Notably Mulhall (2002). [4] Cavell (1979) and (1981).

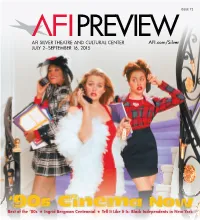

AFI PREVIEW Is Published by the Age 46

ISSUE 72. AFI SILVER THEATRE AND CULTURAL CENTER. AFI.com/Silver. JULY 2–SEPTEMBER 16, 2015. ‘90s Cinema Now; Best of the ‘80s; Ingrid Bergman Centennial; Tell It Like It Is: Black Independents in New York.

Contents: Tell It Like It Is: Black Independents in New York, 1968–1986; Keepin’ It Real: ‘90s Cinema Now; Ingrid Bergman Centennial; Best of Totally Awesome: Great Films of the 1980s; Bugs Bunny 75th Anniversary; Calendar; Special Engagements.

Tell It Like It Is: Black Independents in New York, 1968–1986. July 4–September 5.

In early 1968, William Greaves began shooting in Central Park, and the resulting film, SYMBIOPSYCHOTAXIPLASM: TAKE ONE, came to be considered one of the major works of American independent cinema. Later that year, following a staff strike, WNET’s newly created program BLACK JOURNAL (with Greaves as executive producer) was established “under black editorial control,” becoming the first nationally syndicated newsmagazine of its kind, and home base for a new generation of filmmakers redefining documentary. 1968 also marked the production of the first Hollywood studio film directed by an African American, Gordon Parks’ THE LEARNING TREE. Shortly thereafter, actor/playwright/screenwriter/novelist Bill Gunn directed the studio-backed STOP, which remains unreleased by Warner Bros. to this day. Gunn, rejected by the industry that had courted him, then directed the independent classic GANJA AND HESS, ushering in a new type of horror film, which Ishmael Reed called “what might be the country’s most intellectual and sophisticated horror films.” This survey is comprised of key films produced between 1968 and 1986, when Spike Lee’s first feature, the independently produced SHE’S GOTTA HAVE IT, was released theatrically and followed by a new era of studio filmmaking by black directors.

December 8, 2009 (XIX:15) Mike Leigh TOPSY-TURVY (1999, 160 Min)

December 8, 2009 (XIX:15). Mike Leigh, TOPSY-TURVY (1999, 160 min).

Directed and written by Mike Leigh. Produced by Simon Channing Williams. Cinematography by Dick Pope. Film Editing by Robin Sales. Art Direction by Helen Scott. Set Decoration by John Bush and Eve Stewart. Costume Design by Lindy Hemming.

Cast: Allan Corduner...Sir Arthur Sullivan; Dexter Fletcher...Louis; Sukie Smith...Clothilde; Roger Heathcott...Banton; Wendy Nottingham...Helen Lenoir; Stefan Bednarczyk...Frank Cellier; Geoffrey Hutchings...Armourer; Timothy Spall...Richard Temple (The Mikado); Francis Lee...Butt; Kimi Shaw...Spinner; William Neenan...Cook; Toksan Takahashi...Calligrapher; Adam Searle...Shrimp; Akemi Otani...Dancer; Martin Savage...George Grossmith (Ko-Ko); Kanako Morishita...Samisen Player; Jim Broadbent...W. S. Gilbert; Theresa Watson...Maude Gilbert; Lesley Manville...Lucy Gilbert; Lavinia Bertram...Florence Gilbert; Kate Doherty...Mrs. Judd; Togo Igawa...First Kabuki Actor; Kenneth Hadley...Pidgeon; Eiji Kusuhara...Second Kabuki Actor; Keeley Gainey...Maidservant; Ron Cook...Richard D'Oyly Carte; Naoko Mori...Miss 'Sixpence Please'; Eleanor David...Fanny Ronalds; Eve Pearce...Gilbert's Mother; Gary Yershon...Pianist in Brothel; Neil Humphries...Boy Actor; Katrin Cartlidge...Madame; Vincent Franklin...Rutland Barrington (Pooh-Bah); Julia Rayner...Mademoiselle Fromage; Michael Simkins...Frederick Bovill; Jenny Pickering...Second Prostitute; Alison Steadman...Madame Leon; Kevin McKidd...Durward Lely (Nanki-Poo); Cathy Sara...Sybil Grey (Peep-Bo); Sam Kelly...Richard Barker; Angela Curran...Miss Morton; Charles Simon...Gilbert's Father; Millie Gregory...Alice; Philippe Constantin...Paris Waiter; Jonathan Aris...Wilhelm; David Neville...Dentist; Andy Serkis...John D'Auban; Matthew Mills...Walter Simmonds; Mia Soteriou...Mrs. Russell; Shirley Henderson...Leonora Braham (Yum-Yum); Louise Gold...Rosina Brandram (Katisha); Nicholas Woodeson...Mr.

101 Films for Filmmakers

101 (OR SO) FILMS FOR FILMMAKERS The purpose of this list is not to create an exhaustive list of every important film ever made or filmmaker who ever lived. That task would be impossible. The purpose is to create a succinct list of films and filmmakers that have had a major impact on filmmaking. A second purpose is to help contextualize films and filmmakers within the various film movements with which they are associated. The list is organized chronologically, with important film movements (e.g. Italian Neorealism, The French New Wave) inserted at the appropriate time. AFI (American Film Institute) Top 100 films are in blue (green if they were on the original 1998 list but were removed for the 10th anniversary list). Guidelines: 1. The majority of filmmakers will be represented by a single film (or two), often their first or first significant one. This does not mean that they made no other worthy films; rather the films listed tend to be monumental films that helped define a genre or period. For example, Arthur Penn made numerous notable films, but his 1967 Bonnie and Clyde ushered in the New Hollywood and changed filmmaking for the next two decades (or more). 2. Some filmmakers do have multiple films listed, but this tends to be reserved for filmmakers who are truly masters of the craft (e.g. Alfred Hitchcock, Stanley Kubrick) or filmmakers whose careers have had a long span (e.g. Luis Buñuel, 1928-1977). A few filmmakers who re-invented themselves later in their careers (e.g. David Cronenberg–his early body horror and later psychological dramas) will have multiple films listed, representing each period of their careers. -

ReFocus: The American Directors Series

ReFocus: The Films of Spike Jonze. ReFocus: The American Directors Series. Series Editors: Robert Singer and Gary D. Rhodes. Editorial board: Kelly Basilio, Donna Campbell, Claire Perkins, Christopher Sharrett and Yannis Tzioumakis.

ReFocus is a series of contemporary methodological and theoretical approaches to the interdisciplinary analyses and interpretations of neglected American directors, from the once-famous to the ignored, in direct relationship to American culture: its myths, values, and historical precepts. The series ignores no director who created a historical space, either in or out of the studio system, beginning from the origins of American cinema and up to the present. These directors produced film titles that appear in university film history and genre courses across international boundaries, and their work is often seen on television or available to download or purchase, but each suffers from a form of “canon envy”; directors such as these, among other important figures in the general history of American cinema, are underrepresented in the critical dialogue, yet each has created American narratives, works of film art, that warrant attention. ReFocus brings these American film directors to a new audience of scholars and general readers of both American and Film Studies.

Titles in the series include: ReFocus: The Films of Preston Sturges, edited by Jeff Jaeckle and Sarah Kozloff; ReFocus: The Films of Delmer Daves, edited by Matthew Carter and Andrew Nelson; ReFocus: The Films of Amy Heckerling, edited by Frances Smith and Timothy Shary; ReFocus: The Films of Budd Boetticher, edited by Gary D.