Coding and Testing

Recommended publications

Core Elements of Continuous Testing

WHITE PAPER

Today’s modern development disciplines, whether Agile, Continuous Integration (CI) or Continuous Delivery (CD), have completely transformed how teams develop and deliver applications. Companies that need to compete in today’s fast-paced digital economy must also transform how they test. Successful teams know that the secret sauce to delivering high-quality digital experiences fast is continuous testing. This paper defines continuous testing, explains why it’s important, and describes the core elements and tactical changes that development and QA teams need to make in order to succeed at this emerging practice.

TABLE OF CONTENTS
What is Continuous Testing?
Why Continuous Testing?
Core Elements of Continuous Testing
Tactical Engineering Considerations
Benefits of Continuous Testing

WHAT IS CONTINUOUS TESTING?

Continuous testing is the practice of executing automated tests throughout the software development cycle. It’s more than just automated testing; it’s applying the right level of automation at each stage in the development process. Unlike legacy testing methods that occur at the end of the development cycle, continuous testing occurs at multiple stages, including development, integration, pre-release, and in production. Continuous testing ensures that bugs are caught and fixed far earlier in the development process, improving overall quality while saving significant time and money.

WHY CONTINUOUS TESTING?

Continuous testing is a critical requirement for organizations that are shifting left towards CI or CD, both modern development practices that ensure faster time to market. When automated testing is coupled with a CI server, tests can instantly be kicked off with every build, and alerts with passing or failing test results can be delivered directly to the development team in real time.
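The idea of running the right level of automation at each stage can be sketched in a few lines of Python. This is only an illustration: the stage names come from the excerpt above, while the check functions, the `STAGES` mapping and the `on_build` hook are hypothetical and do not correspond to any particular CI server.

```python
from typing import Callable, Dict, List

# Hypothetical checks standing in for real automated test suites.
def unit_checks() -> bool:
    return sum([1, 2, 3]) == 6

def integration_checks() -> bool:
    return "-".join(["build", "42"]) == "build-42"

def smoke_checks() -> bool:
    return len("ok") == 2

# Stage names follow the excerpt; which suite runs where is an assumption.
STAGES: Dict[str, List[Callable[[], bool]]] = {
    "development": [unit_checks],
    "integration": [unit_checks, integration_checks],
    "pre-release": [unit_checks, integration_checks, smoke_checks],
    "production": [smoke_checks],
}

def run_stage(stage: str) -> Dict[str, bool]:
    """Run every check registered for a stage and report pass/fail per check."""
    return {check.__name__: check() for check in STAGES[stage]}

def on_build(stage: str) -> str:
    """What a CI hook might do after each build: run the stage and summarise."""
    results = run_stage(stage)
    failed = [name for name, ok in results.items() if not ok]
    return "PASS" if not failed else "FAIL: " + ", ".join(failed)
```

A CI server would call something like `on_build("integration")` after every build and forward the summary to the team; a real setup would delegate to a test runner rather than to plain functions.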

Types of Software Testing

Software testing is a way of assessing a software product to find differences between the actual result for a given input and the expected result, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You know what testing does, so there is no need to explain further. But are you aware of the types of testing? There is indeed a sea of them. Before we get to the types, let’s have a look at the standards that need to be maintained.

Standards of Testing

Every test should meet the user requirements. Exhaustive testing isn’t possible, so the right amount of testing is chosen based on a risk assessment of the application. Every test to be conducted should be planned before it is executed. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program components. Start testing with small parts and extend it to larger components.

Software testers know about the different sorts of software testing. In this article, we have included most of the types of software testing that testers, developers, and QA teams commonly use in their everyday testing life. Let’s understand them!

Black box Testing

Black box testing is a category of strategy that disregards the internal mechanism of the system and focuses on the output generated against any input and on the performance of the system.
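As a minimal sketch of black box testing, the Python below checks a function purely through input/output pairs. The `shipping_fee` function and its pricing rule are hypothetical and exist only so that the example runs; a black box tester would work from the stated requirements and would not read the function body.

```python
def shipping_fee(weight_kg: float) -> float:
    """Hypothetical system under test: 5.0 up to 1 kg, plus 2.0 per extra kg."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return 5.0 if weight_kg <= 1 else 5.0 + 2.0 * (weight_kg - 1)

# Test cases derived from the (assumed) requirements only: (input, expected output).
CASES = [(0.5, 5.0), (1.0, 5.0), (3.0, 9.0)]

def run_black_box_cases():
    """Return a list of (input, expected, actual) for every case that fails."""
    failures = []
    for given, expected in CASES:
        actual = shipping_fee(given)
        if abs(actual - expected) > 1e-9:
            failures.append((given, expected, actual))
    return failures
```

An empty list from `run_black_box_cases()` means every observed output matched its expected output; the cases include the boundary at 1 kg because boundaries are where input/output behaviour tends to change.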

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE

Abstract

This document provides best practices on how to strategize testing CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT).

Concepts

Test Approach: Defines testing strategy, roles and responsibilities of various team members, and test types.
Testing Environments: Outlines which testing is carried out in which environment.
Testing Automation and Tools: Addresses test management and automation tools required for test execution.
Risk Analysis: Defines the approach for risk identification and plans to mitigate risks as well as a contingency plan.
Test Planning and Execution: Defines the approach to plan the test cases, test scripts, and execution.
Review and Approval: Lists individuals who should review, approve and sign off on test results.

Test Approach

The test approach defines testing strategy, roles and responsibilities of various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, features being tested, environment(s) where the testing takes place, what testing tools are used, and how defects are tracked and managed. The testing strategy should be prepared by the agile core team.

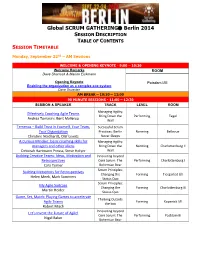

Global SCRUM GATHERING® Berlin 2014

SESSION DESCRIPTION TABLE OF CONTENTS

SESSION TIMETABLE

Monday, September 22nd – AM Sessions

WELCOME & OPENING KEYNOTE - 9:00 – 10:30
Welcome Remarks: Dave Sharrock & Marion Eickmann
Opening Keynote: Enabling the organization as a complex eco-system. Dave Snowden
Room: Potsdam I/III

AM BREAK – 10:30 – 11:00

90 MINUTE SESSIONS - 11:00 – 12:30
Each entry lists session and speaker, then track, level and room.

Effectively Coaching Agile Teams. Andrea Tomasini, Bent Myllerup
Track: Managing Agility: Bring Down the Wall. Level: Performing. Room: Tegel

Temenos – Build Trust in Yourself, Your Team, Your Organization. Christine Neidhardt, Olaf Lewitz
Track: Successful Scrum Practices: Berlin Never Sleeps. Level: Norming. Room: Bellevue

A Curious Mindset: basic coaching skills for managers and other aliens. Deborah Hartmann Preuss, Steve Holyer
Track: Managing Agility: Bring Down the Wall. Level: Norming. Room: Charlottenburg II

Building Creative Teams: Ideas, Motivation and Retrospectives. Cara Turner
Track: Innovating beyond Core Scrum: The Bohemian Bear. Level: Performing. Room: Charlottenburg I

Building Metaphors for Retrospectives. Helen Meek, Mark Summers
Track: Scrum Principles: Changing the Status Quo. Level: Forming. Room: Tiergarten I/II

My Agile Suitcase. Martin Heider
Track: Scrum Principles: Changing the Status Quo. Level: Forming. Room: Charlottenburg III

Game, Set, Match: Playing Games to accelerate Agile Teams. Robert Misch
Track: Thinking Outside the box. Level: Forming. Room: Kopenick I/II

Let's Invent the Future of Agile! Nigel Baker
Track: Innovating beyond Core Scrum: The Bohemian Bear. Level: Performing. Room: Potsdam III

Monday, September 22nd – PM Sessions

LUNCH – 12:30 – 13:30

90 MINUTE SESSIONS - 13:30

Website Testing • Black-Box Testing

18 0672327988 CH14 6/30/05 1:23 PM Page 211

14 Website Testing

IN THIS CHAPTER
• Web Page Fundamentals
• Black-Box Testing
• Gray-Box Testing
• White-Box Testing
• Configuration and Compatibility Testing
• Usability Testing
• Introducing Automation

The testing techniques that you’ve learned in previous chapters have been fairly generic. They’ve been presented by using small programs such as Windows WordPad, Calculator, and Paint to demonstrate testing fundamentals and how to apply them. This final chapter of Part III, “Applying Your Testing Skills,” is geared toward testing a specific type of software: Internet web pages. It’s a fairly timely topic, something that you’re likely familiar with, and a good real-world example to apply the techniques that you’ve learned so far.

What you’ll find in this chapter is that website testing encompasses many areas, including configuration testing, compatibility testing, usability testing, documentation testing, security, and, if the site is intended for a worldwide audience, localization testing. Of course, black-box, white-box, static, and dynamic testing are always a given.

This chapter isn’t meant to be a complete how-to guide for testing Internet websites, but it will give you a straightforward practical example of testing something real and give you a good head start if your first job happens to be looking for bugs in someone’s website.

Highlights of this chapter include
• What fundamental parts of a web page need testing
• What basic white-box and black-box techniques apply to web page testing
• How configuration and compatibility testing apply
• Why usability testing is the primary concern of web pages
• How to use tools to help test your website

Web Page Fundamentals

In the simplest terms, Internet web pages are just documents of text, pictures, sounds, video, and hyperlinks, much like the CD-ROM multimedia titles that were popular in the mid 1990s.

Configuration Fuzzing Testing Framework for Software Vulnerability Detection

CONFU: Configuration Fuzzing Testing Framework for Software Vulnerability Detection

Huning Dai, Christian Murphy, Gail Kaiser
Department of Computer Science, Columbia University, New York, NY 10027 USA

ABSTRACT

Many software security vulnerabilities only reveal themselves under certain conditions, i.e., particular configurations and inputs together with a certain runtime environment. One approach to detecting these vulnerabilities is fuzz testing. However, typical fuzz testing makes no guarantees regarding the syntactic and semantic validity of the input, or of how much of the input space will be explored. To address these problems, we present a new testing methodology called Configuration Fuzzing. Configuration Fuzzing is a technique whereby the configuration of the running application is mutated at certain execution points, in order to check for vulnerabilities that only arise in certain conditions. As the application runs in the deployment environment, this testing technique continuously fuzzes the configuration and checks "security invariants" that, if violated, indicate a vulnerability. We discuss the approach and introduce a prototype framework called ConFu (CONfiguration FUzzing testing framework) for implementation. We also present the results of case studies that demonstrate the approach's feasibility and evaluate its performance.

Keywords: Vulnerability; Configuration Fuzzing; Fuzz testing; In Vivo testing; Security invariants

INTRODUCTION

As the Internet has grown in popularity, security testing is undoubtedly becoming a crucial part of the development process for commercial software, especially for server applications. However, it is impossible in terms of time and cost to test all configurations or to simulate all system environments before releasing the software into the field, not to mention the fact that software distributors may later add more configuration options.
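The core loop described in the abstract, mutating the configuration and then checking a security invariant, can be illustrated with a toy Python sketch. This is not the ConFu framework and does not reproduce its API: the option names, the `handle_request` function with its planted flaw, and the invariant are all hypothetical, and the sketch fuzzes an offline function rather than a running application in its deployment environment.

```python
import random

# Hypothetical configuration space; every value listed is a valid setting.
CONFIG_SPACE = {
    "max_upload_mb": [1, 10, 100],
    "allow_anonymous": [True, False],
    "log_level": ["debug", "info", "error"],
}

def fuzz_config(rng):
    """Pick a random but valid value for every configuration option."""
    return {name: rng.choice(values) for name, values in CONFIG_SPACE.items()}

def handle_request(config, user, size_mb):
    """Toy application logic with a planted flaw: anonymous uploads skip the size check."""
    if user is None:
        return config["allow_anonymous"]  # flaw: size_mb is never checked
    return size_mb <= config["max_upload_mb"]

def security_invariant(config, size_mb, accepted):
    """An accepted upload must never exceed the configured size limit."""
    return not accepted or size_mb <= config["max_upload_mb"]

def run_fuzzing(trials=200, seed=0):
    """Fuzz the configuration repeatedly and collect configs that violate the invariant."""
    rng = random.Random(seed)
    violations = []
    for _ in range(trials):
        config = fuzz_config(rng)
        accepted = handle_request(config, user=None, size_mb=50)
        if not security_invariant(config, 50, accepted):
            violations.append(config)
    return violations
```

Because only valid option values are drawn, every fuzzed configuration is one the application could legitimately run with, which is the property the abstract contrasts with typical input fuzzing. Each configuration returned by `run_fuzzing()` points at a combination of settings under which the planted flaw becomes exploitable.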

Chap 3. Test Models and Strategies 3.5 Test Integration and Automation 1

Chap 3. Test Models and Strategies
3.5 Test Integration and Automation

1. Introduction
2. Integration Testing
3. System Testing
4. Test Automation
Appendix: JUnit Overview

1. Introduction

- A system is composed of components. A system of software components can be defined at any physical scope.

Component (focus of integration), System (scope of integration), Typical intercomponent interfaces (locus of integration faults):
Method, Class: instance variables; intraclass messages
Class, Cluster: intraclass messages
Cluster, Subsystem: interclass messages; interpackage messages
Subsystem, System: interprocess communication; remote procedure call; ORB services, OS services

- Integration test design is concerned with several primary questions:
1. Which components and interfaces should be exercised?
2. In what sequence will component interfaces be exercised?
3. Which test design technique should be used to exercise each interface?

- Integration testing is a search for component faults that cause intercomponent failures.
- System scope testing is a search for faults that lead to a failure to meet a system scope responsibility.
  - System scope testing cannot be done unless components interoperate sufficiently well to exercise system scope responsibilities.
- Effective testing at system scope requires a concrete and testable system-level specification.
  - System test cases must be derived from some kind of functional specification. Traditionally, user documentation, product literature, line-item narrative requirements, and system scope models have been used.

2. Integration Testing

- Unit testing focuses on individual components. Once faults in each component have been removed and the test cases do not reveal any new fault, components are ready to be integrated into larger subsystems.
- Integration testing detects faults that have not been detected during unit testing, by focusing on small groups of components.
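The difference between a unit test and an integration test can be sketched with Python's `unittest` module, which follows the same xUnit style as the JUnit framework named in the appendix. The `PriceCatalog`, `Invoice` and `StubCatalog` classes are hypothetical components invented for this illustration: the unit test isolates `Invoice` behind a stub, while the integration test exercises the real interface between the two classes.

```python
import unittest

class PriceCatalog:
    """Component A (hypothetical): returns prices in integer cents."""
    def __init__(self, prices_cents):
        self._prices = dict(prices_cents)

    def price_cents(self, item):
        return self._prices[item]

class Invoice:
    """Component B (hypothetical): depends on a catalog through price_cents()."""
    def __init__(self, catalog):
        self._catalog = catalog
        self._lines = []

    def add(self, item, quantity):
        self._lines.append((item, quantity))

    def total_cents(self):
        return sum(self._catalog.price_cents(i) * q for i, q in self._lines)

class StubCatalog:
    """Stand-in used to test Invoice in isolation: every item costs 100 cents."""
    def price_cents(self, item):
        return 100

class InvoiceUnitTest(unittest.TestCase):
    def test_total_with_stub(self):
        invoice = Invoice(StubCatalog())
        invoice.add("anything", 3)
        self.assertEqual(invoice.total_cents(), 300)

class InvoiceCatalogIntegrationTest(unittest.TestCase):
    def test_total_with_real_catalog(self):
        # Exercises the Invoice -> PriceCatalog interface with both real components.
        invoice = Invoice(PriceCatalog({"pen": 150, "pad": 400}))
        invoice.add("pen", 2)
        invoice.add("pad", 1)
        self.assertEqual(invoice.total_cents(), 700)

def run_suite():
    """Load both test cases and run them, returning the unittest result object."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite([
        loader.loadTestsFromTestCase(InvoiceUnitTest),
        loader.loadTestsFromTestCase(InvoiceCatalogIntegrationTest),
    ])
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

If `PriceCatalog` were later changed to return prices in another unit, the unit test with the stub would still pass and only the integration test would fail, which is the kind of intercomponent fault the slides describe.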

Software Testing Training Module

MAST: MARKET ALIGNED SKILLS TRAINING
SOFTWARE TESTING TRAINING MODULE

In partnership with
Supported by:

INDIA: 1003-1005, DLF City Court, MG Road, Gurgaon 122002. Tel (91) 124 4551850, Fax (91) 124 4551888
NEW YORK: 216 E. 45th Street, 7th Floor, New York, NY 10017
www.aif.org

About the American India Foundation

The American India Foundation is committed to catalyzing social and economic change in India, and building a lasting bridge between the United States and India through high impact interventions in education, livelihoods, public health, and leadership development. Working closely with local communities, AIF partners with NGOs to develop and test innovative solutions and with governments to create and scale sustainable impact. Founded in 2001 at the initiative of President Bill Clinton following a suggestion from Indian Prime Minister Vajpayee, AIF has impacted the lives of 4.6 million of India’s poor. Learn more at www.AIF.org

About the Market Aligned Skills Training (MAST) program

Market Aligned Skills Training (MAST) provides unemployed young people with a comprehensive skills training that equips them with the knowledge and skills needed to secure employment and succeed on the job. MAST not only meets the growing demands of the diversifying local industries across the country, it harnesses India's youth population to become powerful engines of the economy.

AIF Team: Hanumant Rawat, Aamir Aijaz & Rowena Kay Mascarenhas

American India Foundation
10th Floor, DLF City Court, MG Road, Near Sikanderpur Metro Station, Gurgaon 122002
216 E. 45th Street, 7th Floor, New York, NY 10017
530 Lytton Avenue, Palo Alto, CA 9430

This document is created for the use of underprivileged youth under American India Foundation’s Market Aligned Skills Training (MAST) Program.

Enabling DevOps on Premise or Cloud with Jenkins

Sam Rostam
Cloud & Enterprise Integration Consultant/Trainer
Certified SOA & Cloud Architect
Certified Big Data Professional
MSc @SFU & PhD Studies – Partial @UBC

Topics
• The Context - Digital Transformation
• An Agile IT Framework
• What DevOps bring to Teams? - Disrupting Software Development - Improved Quality, shorten cycles - highly responsive for the business needs
• What is CI/CD?
• Simple Scenario with Jenkins
• Advanced Jenkins: Plug-ins, APIs & Pipelines
• Toolchain concept
• Q/A

Digital Transformation – Modernization

As established enterprises in all industries begin to evolve themselves into the successful Digital Organizations of the future they need to begin with the realization that the road to becoming a Digital Business goes through their IT functions. However, many of these incumbents are saddled with IT that has organizational structures, management models, operational processes, workforces and systems that were built to solve “turn of the century” problems of the past. Many analysts and industry experts have recognized the need for a new model to manage IT in their Businesses and have proposed approaches to understand and manage a hybrid IT environment that includes slower legacy applications and infrastructure in combination with today’s rapidly evolving Digital-first, mobile-first and analytics-enabled applications.
http://www.ntti3.com/wp-content/uploads/Agile-IT-v1.3.pdf

Digital Transformation requires building an ecosystem
• Digital transformation is a strategic approach to IT that treats IT infrastructure and data as a potential product for customers.
• Digital transformation requires shifting perspectives and by looking at new ways to use data and data sources and looking at new ways to engage with customers.

Integrating the GNU Debugger with Cycle Accurate Models: A Case Study Using a Verilator SystemC Model of the OpenRISC 1000

Jeremy Bennett
Embecosm
Application Note 7. Issue 1
Published March 2009

Legal Notice

This work is licensed under the Creative Commons Attribution 2.0 UK: England & Wales License. To view a copy of this license, visit http://creativecommons.org/licenses/by/2.0/uk/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

This license means you are free:
• to copy, distribute, display, and perform the work
• to make derivative works

under the following conditions:
• Attribution. You must give the original author, Jeremy Bennett of Embecosm (www.embecosm.com), credit;
• For any reuse or distribution, you must make clear to others the license terms of this work;
• Any of these conditions can be waived if you get permission from the copyright holder, Embecosm; and
• Nothing in this license impairs or restricts the author's moral rights.

The software for the SystemC cycle accurate model written by Embecosm and used in this document is licensed under the GNU General Public License. For detailed licensing information see the file COPYING in the source code.

Embecosm is the business name of Embecosm Limited, a private limited company registered in England and Wales. Registration number 6577021.

Copyright © 2009 Embecosm Limited

Table of Contents
1. Introduction
1.1. Why Use Cycle Accurate Modeling
1.2. Target Audience
1.3. Open Source
1.4. Further Sources of Information
1.4.1.

Integration Testing of Object-Oriented Software

POLITECNICO DI MILANO
DOTTORATO DI RICERCA IN INGEGNERIA INFORMATICA E AUTOMATICA

Integration Testing of Object-Oriented Software

Ph.D. Thesis of: Alessandro Orso
Advisor: Prof. Mauro Pezzè
Tutor: Prof. Carlo Ghezzi
Supervisor of the Ph.D. Program: Prof. Carlo Ghezzi
XI ciclo

To my family

Acknowledgments

Finding the right words and the right way for expressing acknowledgments is a difficult task. I hope the following will not sound as a set of ritual formulas, since I mean every single word.

First of all I wish to thank professor Mauro Pezzè, for his guidance, his support, and his patience during my work. I know that “taking care” of me has been a hard work, but he only has himself to blame for my starting a Ph.D. program.

A very special thank to Professor Carlo Ghezzi for his teachings, for his willingness to help me, and for allowing me to restlessly “steal” books and journals from his office. Now, I can bring them back (at least the one I remember...)

Then, I wish to thank my family. I owe them a lot (and even if I don't show this very often; I know this very well). All my love goes to them.

Special thanks are due to all my long time and not-so-long time friends. They are (strictly in alphabetical order): Alessandro “Pari” Parimbelli, Ambrogio “Bobo” Usuelli, Andrea “Maken” Machini, Antonio “the Awesome” Carzaniga, Dario “Pitone” Galbiati, Federico “Fede” Clonfero, Flavio “Spadone” Spada, Gianpaolo “the Red One” Cugola, Giovanni “Negroni” Denaro, Giovanni “Muscle Man” Vigna, Lorenzo “the Diver” Riva, Matteo “Prada” Pradella, Mattia “il Monga” Monga, Niels “l’é semper chi” Kierkegaard, Pierluigi “San Peter” Sanpietro, Sergio “Que viva Mexico” Silva.

A Confused Tester in Agile World … QA a Liability or an Asset

THIS IS A WORK OF FACTS & FINDINGS BASED ON TRUE STORIES OF ONE & MANY TESTERS !!

Presented By Ashish Kumar

WHAT’S AHEAD
• A STORY OF TESTING.
• FROM THE MIND OF A CONFUSED TESTER.
• FEW CASE STUDIES.
• CHALLENGES IDENTIFIED.
• SURVEY STUDIES.
• GLOBAL RESPONSES.
• SOLUTION APPROACH.
• PRINCIPLES AND PRACTICES.
• CONCLUSION & RECAP.
• Q & A.

A STORY OF TESTING IN AGILE… HAVE YOU HEARD ANY OF THESE ??
• YOU DON’T NEED A DEDICATED SOFTWARE TESTING TEAM ON YOUR AGILE TEAMS
• IF WE HAVE BDD, ATDD, TDD, UI AUTOMATION, UNIT TEST >> WHAT IS THE NEED OF MANUAL TESTING ??
• WE WANT 100% AUTOMATION IN THIS PROJECT
• TESTING IS BECOMING BOTTLENECK AND REASON OF SPRINT FAILURE
• REPEATING REGRESSION IS A BIG TASK AND AN OVERHEAD
• MICROSOFT HAS NO TESTERS NOT EVEN GOOGLE, FACEBOOK AND CISCO

• IN A “MOBILE-FIRST AND CLOUD-FIRST WORLD.”
• THE EFFORT, KNOWN AS AGILE SOFTWARE DEVELOPMENT, IS DESIGNED TO LOWER COSTS AND HONE OPERATIONS AS THE COMPANY FOCUSES ON BUILDING CLOUD AND MOBILE SOFTWARE, SAY ANALYSTS
• MR. NADELLA TOLD BLOOMBERG THAT IT MAKES MORE SENSE TO HAVE DEVELOPERS TEST & FIX BUGS INSTEAD OF SEPARATE TEAM OF TESTERS TO BUILD CLOUD SOFTWARE.
• SUCH AN APPROACH, A DEPARTURE FROM THE COMPANY’S TRADITIONAL PRACTICE OF DIVIDING

• 15K+ DEVELOPERS / 4K+ PROJECTS UNDER ACTIVE DEVELOPMENT / 50% CODE CHANGES PER MONTH.
• 5500+ SUBMISSION PER DAY ON AVERAGE
• 20+ SUSTAINED CODE CHANGES/MIN WITH 60+ PEAKS
• 75+ MILLION TEST CASES RUN PER DAY.
• DEVELOPERS OWN TESTING AND DEVELOPERS OWN QUALITY.
• GOOGLE HAVE PEOPLE WHO COULD CODE AND WANTED TO APPLY THAT SKILL TO THE DEVELOPMENT OF TOOLS, INFRASTRUCTURE, AND TEST AUTOMATION.