Just-In-Time AR-Based Learning in Advanced Manufacturing Context

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Changeful Tales: Design-Driven Approaches Toward More Expressive Storygames

University of California, Santa Cruz. Changeful Tales: Design-Driven Approaches Toward More Expressive Storygames. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science by Aaron A. Reed, June 2017. The dissertation of Aaron A. Reed is approved: Noah Wardrip-Fruin, Chair; Michael Mateas; Michael Chemers; Dean Tyrus Miller, Vice Provost and Dean of Graduate Studies. Copyright © by Aaron A. Reed 2017.

Table of Contents

List of Figures
List of Tables
Abstract
Acknowledgments
Introduction
1 Framework
  1.1 Vocabulary
    1.1.1 Foundational terms
    1.1.2 Storygames
      1.1.2.1 Adventure as prototypical storygame
      1.1.2.2 What Isn't a Storygame?
    1.1.3 Expressive Input
    1.1.4 Why Fiction?
  1.2 A Framework for Storygame Discussion
    1.2.1 The Slipperiness of Genre
    1.2.2 Inputs, Events, and Actions
    1.2.3 Mechanics and Dynamics
    1.2.4 Operational Logics
    1.2.5 Narrative Mechanics
    1.2.6 Narrative Logics
    1.2.7 The Choice Graph: A Standard Narrative Logic
2 The Adventure Game: An Existing Storygame Mode
  2.1 Definition
  2.2 Eureka Stories
  2.3 The Adventure Triangle and its Flaws
    2.3.1 Instability
  2.4 Blue Lacuna
  2.5 Three Design Solutions
    2.5.1 The Witness
    2.5.2 Firewatch
    2.5.3 Her Story
  2.6 A Technological Fix?

2015 Engineering Gift Guide

Gift ideas that engage girls and boys in engineering thinking and design

1. Dynamo Dominoes. Ages 3+; hapetoys.com $39.99
2. Mighty Makers Inventor’s Clubhouse Building Set. Ages 7+; knex.com $59.99
3. Cool Circuits. Ages 8+; sciencewiz.com $24.95
4. YOXO Orig Robot. Ages 6-12; yoxo.com $19.99
5. Weird & Wacky Contraption Lab. Ages 8+; smartlabtoys.com $39.99
6. Crazy Forts! Ages 5+; crazyforts.com $49.95
7. Geomag Mechanics 222 Pieces. Age 5+; geomagworld.com $129

Nine ways engineers help their children learn about engineering

In one study, over 80 engineers were interviewed or surveyed about what they do to help their children learn about engineering. Below are 9 of their most common responses.

Play hands-on with everyday items: While playing with everyday items, encourage your children to imagine new uses for them.

Play with puzzles: Challenge your children to find several ways to solve puzzles and to explain the different processes they used.

Questions about what they are building: What are you building? Who is it for? How will they use it? Why did you…?

Encourage them to ask questions: Foster your children’s natural curiosity by encouraging them to ask you questions and helping them figure out how and where to find the answers.

Visit science centers or children’s museums: They will allow your children to explore STEM concepts at their own pace, often through hands-on

Develop mathematics and science skills: Visit your local library or store to find software, videos, books and kits that allow your children to practice mathematics

PROGRAMMING LEARNING GAMES Identification of Game Design Patterns in Programming Learning Games

PROGRAMMING LEARNING GAMES: Identification of game design patterns in programming learning games. Master Degree Project in Informatics, One year Level, 22.5 ECTS, Spring term 2019. Ander Areizaga. Supervisor: Henrik Engström. Examiner: Mikael Johannesson.

Abstract

There is a high demand for program developers, but dropout rates from computer science courses are also high and course enrolments keep decreasing. To overcome that situation, several studies have found serious games to be good tools for programming education. As an outcome of such research, several game solutions for programming learning have appeared, each of them using a different approach. Some of these games are only used in the research field, whereas others are published in commercial stores. The problem with commercial games is that they do not offer a clear map of the different programming concepts. This dissertation addresses this problem and analyses which fundamental programming concepts are represented in commercial games for programming learning. The study also identifies game design patterns used to represent these concepts. The result of this study shows which topics are represented more commonly in commercial games and what game design patterns are used for that. This thesis identifies a set of game design patterns in the 20 commercial games that were analysed. For each of these patterns, a description is included, as well as some examples of the games where it is found. As a conclusion, this research shows that, from the list of the determined fundamental programming topics, only a few are well represented in commercial games, whereas the others have nearly no representation.

A Scripting Game That Leverages Fuzzy Logic As an Engaging Game Mechanic

Fuzzy Tactics: A scripting game that leverages fuzzy logic as an engaging game mechanic. Michele Pirovano (a, b), Pier Luca Lanzi (a). (a) Dipartimento di Elettronica, Informazione e Bioingegneria, Politecnico di Milano, Milano, Italy. (b) Department of Computer Science, University of Milano, Milano, Italy.

1. Introduction

Artificial intelligence in video games aims at enhancing players’ experience in various ways (Millington, 2006; Buckland, 2004); for instance, by providing intelligent behaviors for non-player characters, by implementing adaptive gameplay, by generating high-quality content (e.g. missions, meshes, textures), by controlling complex animations, by implementing tactical and strategic planning, and by supporting on-line learning. Noticeably, artificial intelligence is typically invisible to the players who become aware of its presence only when it behaves badly (as demonstrated by the huge amount of YouTube videos showing examples of bad artificial intelligence).

… intelligent. The award-winning game Black & White (Lionhead Studios, 2001) leverages reinforcement learning to support the interaction with the player’s giant pet-avatar as the main core of the gameplay. Most of the gameplay in Black & White (Lionhead Studios, 2001) concerns teaching what is good and what is bad to the pet, a novel mechanic enabled by the AI. In Galactic Arms Race (Hastings, Guha, & Stanley, 2009), the players’ weapon preferences form the selection mechanism of a distributed genetic algorithm that evolves the dynamics of the particle weapons of spaceships. The players can experience the weapons’ evolution based on their choices. In all these games, the underlying artificial intelligence is the main element that permeates the whole game

Aesthetic Illusion in Digital Games

Aesthetic Illusion in Digital Games. Diploma thesis submitted for the academic degree of Magister der Philosophie at the Karl-Franzens-Universität Graz by Andreas Schuch, Institut für Anglistik. Reviewer: O.Univ.-Prof. Mag.art. Dr.phil. Werner Wolf. Graz, 2016.

Contents

1 Introduction
2 The Transmedial Nature of Aesthetic Illusion
3 Types of Absorption in Digital Games
  3.1 An Overview of Existing Research on Immersion and Related Terms in the Field of Game Studies
  3.2 Type 1: Ludic Absorption
  3.3 Type 2: Social Absorption
  3.4 Type 3: Perceptual Delusion
  3.5 Type 4: Aesthetic Illusion
  3.6 Comparing and Contrasting Existing Models of Absorption
4 Aesthetic Illusion in Digital Games
  4.1 Prerequisites and Characteristics of Aesthetic Illusion

Postmortem: Zachtronics Industries' SpaceChem

gamasutra.com http://www.gamasutra.com/view/feature/172250/postmortem_zachtronics_.php?print=1

Postmortem: Zachtronics Industries' SpaceChem

By Zach Barth

[Zach Barth, developer of the cult indie puzzle hit SpaceChem, explains what went into creating such a complex, nuanced game -- while still working a day job -- and also what held back the game from finding the audience it might otherwise have found.]

Shortly after releasing The Codex of Alchemical Engineering, a Flash game about building machines that create and transform alchemical compounds, I started thinking about a chemistry-themed sequel. Since Codex was already a simplified model of molecular bonding, expanding into chemistry proper would provide more mechanics (such as multiple bonds between atoms) and puzzles (different compounds, from simple ones like water to more complicated ones like benzene). Despite this, making immediate sequels is not in my nature, so I set the idea aside and moved on.

About a year later I visited Gas Works Park in Seattle and was inspired by its derelict chemical processing pipeline. Thinking back to the idea for a chemistry-inspired Codex sequel, it occurred to me to combine the low-level manipulations of the Codex with a high-level pipeline construction mechanic. The idea for SpaceChem was born!

The idea evolved over the next six months, picking up a cosmic horror story with boss battles in the process. I started developing the game in my spare time with a coworker from my day job, eventually growing the team to seven people before shipping SpaceChem.

[Image caption: The Codex of Alchemical Engineering, the predecessor to SpaceChem. Believe it or not, it's actually harder!]

What Went Right

Design and Implementation of Robo3: An Applied Game for Teaching Introductory Programming

Politecnico di Milano, Scuola di Ingegneria Industriale e dell’Informazione, Corso di Laurea Magistrale in Ingegneria Informatica, Dipartimento di Elettronica, Informazione e Bioingegneria. Design and implementation of Robo3: an applied game for teaching introductory programming. Advisor: Ing. Daniele Loiacono. Author: Filippo Agalbato, 850481. Academic Year 2016–2017.

Abstract

This work discusses the design and implementation of Robo3, a web game to teach programming skills, to be used as a supplement during introductory programming courses. The game helps students to visualize and understand the effects of the code they write; at the same time it allows the instructor to easily author levels on the topic of their choice and to gather data on players’ performance, which can be visualized in aggregate form by a companion dashboard environment.

Sommario (translated from Italian)

This thesis discusses the design and implementation of Robo3, a web game aimed at teaching programming, intended to be used as a support tool in introductory programming courses. The game lets students see and understand the effects of running the code they have written; at the same time, it lets the course instructor easily create new levels on the topics they consider useful, and it collects data on players' progress, which can then be examined in aggregate form through a specialized, purpose-built dashboard environment.

Recent years have seen growth in the importance given to computing and programming skills, which are increasingly used by many people in everyday life. In addition, there has been an increase in the use and effectiveness of alternative teaching methods, such as games applied to the field in question, as a support to traditional teaching.

Thesis Noora Suorsa

Independent game development – Developers’ reflections in postmortems. Noora Suorsa. University of Tampere, Faculty of Communication, Information Studies and Interactive Media. Master's thesis, November 2017.

UNIVERSITY OF TAMPERE, Faculty of Communication Sciences, Information Studies and Interactive Media, MDP in Internet and Game Studies. SUORSA, NOORA: Independent game development – Developers’ reflections in postmortems. M. Sc. Thesis, 56 pages, 9 appendix pages. November 2017.

Independent computer games have gained a lot of popularity in the past few years. Digital distribution methods and networks have made it easier for indie games to find more players, access markets, and get noticed by consumers than it was during the era dominated by traditional brick-and-mortar retail and console games. The increased popularity and number of indie games and developers has made indie games an interesting research area. Research on independent games has focused on very specific topics; this study aims to gain a broader view of independent game development from the developers’ perspectives.

The objective of this thesis is twofold. First, we take a closer look at indie games through academic literature: how independent games are defined and described in academic game studies. We also describe and map out some of the other phenomena, such as digital distribution and crowdfunding, that have significantly aided their entry to the game markets. Then we analyse indie game development postmortems using content analysis. What are the most common problems and success factors of indie game development in these postmortems? Are these success and failure factors similar to the defining features and aspects of indie game development outlined by academic literature? Design & development process was the most common theme in the perceived reasons for success in the postmortems.

Reflection in Game-Based Learning: a Survey of Programming Games

Reflection in Game-Based Learning: A Survey of Programming Games

JENNIFER VILLAREALE, Drexel University
COLAN F. BIEMER, Northeastern University
MAGY SEIF EL-NASR, Northeastern University
JICHEN ZHU, Drexel University

Reflection is a critical aspect of the learning process. However, educational games tend to focus on supporting learning concepts rather than supporting reflection. While reflection occurs in educational games, the educational game design and research community can benefit from more knowledge of how to facilitate player reflection through game design. In this paper, we examine educational programming games and analyze how reflection is currently supported. We find that current approaches prioritize accuracy over the individual learning process and often only support reflection post-gameplay. Our analysis identifies common reflective features, and we develop a set of open areas for future work. We discuss these promising directions towards engaging the community in developing more mechanics for reflection in educational games.

ACM Reference Format: Jennifer Villareale, Colan F. Biemer, Magy Seif El-Nasr, and Jichen Zhu. 2020. Reflection in Game-Based Learning: A Survey of Programming Games. In International Conference on the Foundations of Digital Games (FDG ’20), September 15–18, 2020, Bugibba, Malta. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3402942.3403011

1 INTRODUCTION

Reflection, a cycle of "thinking and doing" [38], is a crucial component of learning [4, 5, 17, 27]. When learners reflect, the otherwise implicit knowledge becomes digested through active interpretation, questioning, and exploration [14, 24]. As games become an accepted media for education and training [17, 19, 34, 35, 42], how well educational games facilitate reflection can have a significant impact on players’ learning outcomes.

Hacking Game Pc Best

Hacknet is a hacking game with "real hacking"

Interested in hacking but don't actually want to hack? There are hacking games for that obscure impulse, don't you know! Hacknet is the latest, and it has a lot of scintillating promises, not least that you'll be doing "real hacking". That basically means Hacknet implements real UNIX commands, and won't resemble the type of hacking typically carried out by neon-haired teenagers in hip 1990s films. Hacknet looks really promising actually, and that comes from someone who doesn't like typing very much (cause I do it every day, constantly, and I'm doing it right now).

Developed by Australian one-man studio Team Fractal Alligator, Hacknet is "an immersive, terminal-based hacking simulator for PC." Here's the spiel:

Dive down a rabbit hole as you follow the instructions of a recently deceased hacker, whose death may not have been the accident the media reports. Using old school command prompts and real hacking processes, you’ll solve the mystery with minimal hand-holding and a rich world full of secrets to explore.

Bit, a hacker responsible for creating the most invasive security system on the planet, is dead. When he fails to reconnect to his system for 14 days, his failsafe kicks in, sending instructions in automated emails to a lone user. As that user, it’s up to you to unravel the mystery and ensure that Hacknet-OS doesn't fall into the wrong hands.

Exploring the volatile nature of personal privacy, the prevalence of corporate greed, and the hidden powers of hackers on the internet, Hacknet delivers a true hacking simulation, while offering a support system that allows total beginners to get a grasp of the real-world applications and commands found throughout the game.

TIS100 Crack Google Drive

About This Game

TIS-100 is an open-ended programming game by Zachtronics, the creators of SpaceChem and Infinifactory, in which you rewrite corrupted code segments to repair the TIS-100 and unlock its secrets. It’s the assembly language programming game you never asked for!

The Tessellated Intelligence Systems TIS-100 is a massively parallel computer architecture comprised of non-uniformly interconnected heterogeneous nodes. The TIS-100 is ideal for applications requiring complex data stream processing, such as automated financial trading, bulk data collection, and civilian behavioral analysis.

Despite its appearances, TIS-100 is a game! Print and explore the TIS-100 reference manual, which details the inner workings of the TIS-100 while evoking the aesthetics of a 1980s computer manual! Solve more than 45 puzzles, competing against your friends and the world to minimize your cycle, instruction, and node counts. Design your own challenges in the TIS-100’s 3 sandboxes, including a “visual console” that lets you create your own games within the game! Uncover the mysteries of the TIS-100… who created it, and for what purpose?

Title: TIS-100
Genre: Indie, Simulation
Developer: Zachtronics
Publisher: Zachtronics
Release Date: 20 Jul, 2015

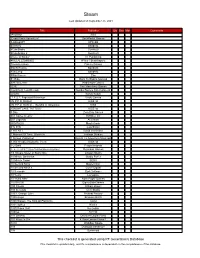

This checklist is generated using RF Generation's database. This checklist is updated daily, and its completeness is dependent on the completeness of the database.

Steam

Last Updated on September 25, 2021

| Title | Publisher | Qty | Box | Man | Comments |
|---|---|---|---|---|---|
| !AnyWay! | SGS | | | | |
| !Dead Pixels Adventure! | DackPostal Games | | | | |
| !LABrpgUP! | UPandQ | | | | |
| #Archery | Bandello | | | | |
| #CuteSnake | Sunrise9 | | | | |
| #CuteSnake 2 | Sunrise9 | | | | |
| #Have A Sticker | VT Publishing | | | | |
| #KILLALLZOMBIES | 8Floor / Beatshapers | | | | |
| #monstercakes | Paleno Games | | | | |
| #SelfieTennis | Bandello | | | | |
| #SkiJump | Bandello | | | | |
| #WarGames | Eko | | | | |
| $1 Ride | Back To Basics Gaming | | | | |
| √Letter | Kadokawa Games | | | | |
| .EXE | Two Man Army Games | | | | |
| .hack//G.U. Last Recode | Bandai Namco Entertainment | | | | |
| .projekt | Kyrylo Kuzyk | | | | |
| .T.E.S.T: Expected Behaviour | Veslo Games | | | | |
| //N.P.P.D. RUSH// | KISS ltd | | | | |
| //N.P.P.D. RUSH// - The Milk of Ultraviolet | KISS | | | | |
| //SNOWFLAKE TATTOO// | KISS ltd | | | | |
| 0 Day | Zero Day Games | | | | |
| 001 Game Creator | SoftWeir Inc | | | | |
| 007 Legends | Activision | | | | |
| 0RBITALIS | Mastertronic | | | | |
| 0°N 0°W | Colorfiction | | | | |
| 1 HIT KILL | David Vecchione | | | | |
| 1 Moment Of Time: Silentville | Jetdogs Studios | | | | |
| 1 Screen Platformer | Return To Adventure Mountain | | | | |
| 1,000 Heads Among the Trees | KISS ltd | | | | |
| 1-2-Swift | Pitaya Network | | | | |
| 1... 2... 3... KICK IT! (Drop That Beat Like an Ugly Baby) | Dejobaan Games | | | | |
| 1/4 Square Meter of Starry Sky | Lingtan Studio | | | | |
| 10 Minute Barbarian | Studio Puffer | | | | |
| 10 Minute Tower | SEGA | | | | |
| 10 Second Ninja | Mastertronic | | | | |
| 10 Second Ninja X | Curve Digital | | | | |
| 10 Seconds | Zynk Software | | | | |
| 10 Years | Lionsgate | | | | |
| 10 Years After | Rock Paper Games | | | | |
| 10,000,000 | EightyEightGames | | | | |
| 100 Chests | William Brown | | | | |
| 100 Seconds | Cien Studio | | | | |
| 100% Orange Juice | Fruitbat Factory | | | | |
| 1000 Amps | Brandon Brizzi | | | | |
| 1000 Stages: The King Of Platforms | ltaoist | | | | |
| 1001 Spikes | Nicalis | | | | |
| 100ft Robot Golf | No Goblin | | | | |
| 100nya | .M.Y.W. | | | | |
| 101 Secrets | Devolver Digital Films | | | | |
| 101 Ways to Die | 4 Door Lemon Vision 1 | | | | |
| 1010 | WalkBoy Studio | | | | |
| 103 | Dystopia Interactive | | | | |
| 10k | Dynamoid | | | | |