Resurgence of Regression Test Selection for C++

Recommended publications

Krzysztof (Kris) Jusiak, Software Engineer

Krzysztof (Kris) Jusiak, Software Engineer. http://kris.jusiak.net, kris@jusiak.net, (0) 791-384-1386. Education: 2005 - 2010, Wroclaw University of Technology, Wroclaw (Poland), MSc in Computer Science, specialised in Software Engineering (top grade). Employment: 2013 – Present, King, London (United Kingdom), Game/Software Developer (Mobile). King is a worldwide leader in casual games with more than 30 billion games played per month globally. We are a leading interactive entertainment company for the mobile world. Our mission is to provide highly engaging content to our audience to match their mobile lifestyles: anywhere, anytime, through any platform and on any device. Software Developer in a scrum team responsible for the development and release of mobile games played by millions of daily active players. 90% of my job is focused on developing software using the C++14 standard for different platforms such as iOS, Android and Facebook (HTML5 - Emscripten). My core role is to provide high-quality features and to review and refactor those already implemented. I am also involved in the recruitment process as a technical expert in interviews. I have made many improvements to the projects I have been involved in, such as performance and compile-time optimizations, the introduction of static analysis tools, and the replacement of the Service Locator pattern by Dependency Injection. • Implementing and releasing multi-platform games played by more than 100 million daily active players • Reduced the time to render textures by 10% by changing the caching mechanism for file loading • Implemented an integration test framework which eliminated commonly recurring issues. 2009 – 2013, Nokia Networks, Wroclaw (Poland), Software Engineer. Nokia Networks is the world’s specialist in mobile broadband, which helps enable end users to do more than ever before with the world’s most efficient mobile networks, the intelligence to maximize their value and the services to make it all work together.

Erik Lindahl

HPC Software Engineering. Erik Lindahl. XSEDE/PRACE/RIKEN/SciNet HPC Summer School, Ostrava, CZ, 2018. Extra Q & A session: here (or outside at the tables), 12.45 today! “The application of a systematic, disciplined, quantifiable approach to the development, operation and maintenance of software, and the study of these approaches, that is, the application of engineering to software.” Experiences from 20 years of GROMACS development. The GROMACS picture until early 2011: • Simulation hardware project, turned software • Early development based on our own needs • Turned GPL in 2001, LGPL in 2012 • Organic growth of development • Roughly 10-15 core developers • Another 15-20 active contributors • Currently 3,076,420 lines of C++11 code (“C++11”) • Over the years we have used Fortran, C, Assembly • Lots of old code. Lots of new code. Lots of complicated (read: bad) code written by scientists. Sidebar: source code repository: CVS; build chain: Automake/Autoconf/libtool; bug tracking: Bugzilla; testing: (no entry given). Scientist: • Trained in physics, chemistry, etc. • Care about their problem • Care about short-term deadlines • New code = asset • Writes more code than she reads. Software engineer: • Trained in CS/software • Care about their code • Care about long-term maintenance • New code = liability • Reads much more code than she writes. Without proper software engineering, we are building a technical debt that sooner or later will have to be paid. “Technical Debt is a wonderful metaphor developed by Ward Cunningham to help us think about this problem. In this metaphor, doing things the quick and dirty way sets us up with a technical debt, which is similar to a financial debt.

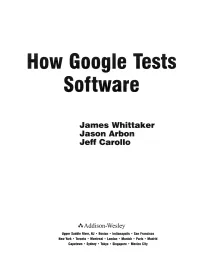

How Google Tests Software

How Google Tests Software. James Whittaker, Jason Arbon, Jeff Carollo. Addison-Wesley. Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City. Table of Contents: Foreword by Alberto Savoia; Foreword by Patrick Copeland; Preface. Chapter 1, Introduction to Google Software Testing: Quality ≠ Test; Roles; Organizational Structure; Crawl, Walk, Run; Types of Tests. Chapter 2, The Software Engineer in Test: The Life of an SET; Development and Test Workflow; Who Are These SETs Anyway?; The Early Phase of a Project; Team Structure; Design Docs; Interfaces and Protocols; Automation Planning; Testability; SET Workflow: An Example; Test Execution; Test Size Definitions; Use of Test Sizes in Shared Infrastructure; Benefits of Test Sizes; Test Runtime Requirements; Case 1: Change in Common Library; Test Certified; An Interview with the Founders of the Test Certified Program; Interviewing SETs; An Interview with Tool Developer Ted Mao; An Interview with Web Driver Creator Simon Stewart. Chapter 3, The Test Engineer: A User-Facing Test Role; The Life of a TE; Test Planning; Risk; Life of a Test Case; Life of a Bug; Recruiting TEs; Test Leadership at Google; Maintenance Mode Testing; Quality Bots Experiment; BITE Experiment; Google Test Analytics; Free Testing Workflow; External Vendors.

Googletest - Tips and Frequently-Asked Questions About Google C++ Testing Framework - Google C++ Testing Framework - Google Project Hosting

FAQ: Tips and Frequently-Asked Questions about Google C++ Testing Framework. Updated Jul 7, 2013 by [email protected]. Why should I use Google Test instead of my favorite C++ testing framework? I'm getting warnings when compiling Google Test. Would you fix them? Why should test case names and test names not contain underscores? Why is it not recommended to install a precompiled copy of Google Test (for example, into /usr/local)? How do I generate 64-bit binaries on Windows (using Visual Studio 2008)? Can I use Google Test on MinGW? Why does Google Test support EXPECT_EQ(NULL, ptr) and ASSERT_EQ(NULL, ptr) but not EXPECT_NE(NULL, ptr) and ASSERT_NE(NULL, ptr)? Does Google Test support running tests in parallel? Why doesn't Google Test run the tests in different threads to speed things up? Why aren't Google Test assertions implemented using exceptions? Why do we use two different macros for tests with and without fixtures? Why don't we use structs as test fixtures? Why are death tests implemented as assertions instead of using a test runner? My death test modifies some state, but the change seems lost after the death test finishes. Why? The compiler complains about "undefined references" to some static const member variables, but I did define them in the class body.

Google Test + Gcover

Google Test + gcover: a list of recipes. J. Daniel Garcia, ARCOS Group, Universidad Carlos III de Madrid, 19 November 2016. J. Daniel Garcia – ARCOS@UC3M ([email protected]) – Twitter: @jdgarciauc3m. Notice: This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. You must give credit for the work in the manner specified by the author or licensor. The licensor permits copying, distributing, and publicly communicating the work. In return, this work may not be used for commercial purposes unless the licensor's express permission is obtained. The licensor permits copying, distributing, transmitting, and publicly communicating only unaltered copies of the work, not derivative works based on it. ARCOS@uc3m. UC3M: a young, international, research-oriented university. ARCOS: an applied research group. Research lines: high-performance computing, big data, cyber-physical systems, and programming models for improving applications. Improving applications: REPARA (Reengineering and Enabling Performance and poweR of Applications), funded by the European Commission (FP7); RePhrase (REfactoring Parallel Heterogeneous Resource Aware Applications), funded by the European Commission (H2020). Standardization: ISO/IEC JTC/SC22/WG21, the ISO C++ committee. Interested in C++? Outline: 1. Basic tests 2. Determining coverage 3. More unit tests.

A Unit Testing Framework for SystemC

Universidade Federal do Rio Grande do Sul, Instituto de Informática, Bachelor's Degree in Computer Engineering. Felipe Dienstmann Musse. SystemC-Unit: a unit testing framework for SystemC. Undergraduate thesis. Work carried out at Grenoble INP under the UFRGS - Grenoble INP double-degree agreement. Brazilian advisor: Prof. Dr. Érika Cota. French advisor: Prof. Dr. Matthieu Moy. Porto Alegre, 2016. CIP (Cataloging in Publication): Musse, Felipe Dienstmann. SystemC-Unit: a unit testing framework for SystemC / Felipe Dienstmann Musse. Porto Alegre: Engenharia de Computação da UFRGS, 2016. 50 pp., ill. Final-year project (Bachelor's), Universidade Federal do Rio Grande do Sul, Bachelor's Degree in Computer Engineering, Porto Alegre, BR–RS, 2016. Brazilian advisor: Érika Cota; French advisor: Matthieu Moy. 1. SystemC. 2. Unit testing. 3. Transaction-level modeling. 4. Software testing. 5. Software quality. I. Cota, Érika. II. Title. Universidade Federal do Rio Grande do Sul. Rector: Prof. Carlos Alexandre Netto. Vice-Rector: Prof. Rui Vicente Oppermann. Dean of Undergraduate Studies: Prof. Sérgio Roberto Kieling Franco. Director of the Instituto de Informática: Prof. Luís da Cunha Lamb. ECP Coordinator: Prof. Raul Fernando Weber. Head Librarian of the Instituto de Informática: Beatriz Regina Bastos Haro. ABSTRACT: The SystemC modeling library is used to build software models of complex hardware systems (such as Systems on Chip), which are called virtual prototypes. At STMicroelectronics, such models are developed with the help of reusable elements, which make it easier to represent components commonly found in different hardware systems. Ensuring that these elements work correctly is essential, since the models into which they are integrated are used in important activities such as embedded software development and functional verification.

Writing Reusable Code for Robotics

Writing reusable code for robotics. D.H. (Dennis) Ellery. MSc Report. Dr.ir. J.F. Broenink, Dr.ir. D. Dresscher, Dr. M. Poel. December 2017. 051RAM2017. Robotics and Mechatronics, EE-Math-CS, University of Twente, P.O. Box 217, 7500 AE Enschede, The Netherlands. Summary: In the i-Botics project about intuitive robotics, reusable software is desired to speed up and simplify robot software design. Nowadays, software is created for a specific application, reducing its reuse in multiple robotic applications. Functional source code of the implementation can be re-used in future projects by writing in a modular way. The goal of this thesis is to write reusable software for robotic applications that is language and platform independent. Major topics for reusable software are: documentation, extensibility, intellectual property issues, modularity, packaging, portability, standards compliance, support, and verification and testing (Marshall et al., 2010). To quantify the level of reuse, the reuse readiness levels (RRL) are used. Requirements for a reusable component were defined from the nine topics and their RRL. There are multiple characteristics of software writing that make software reusable. A selection of characteristics is made to create a paradigm for writing reusable software. A look into three main paradigms was taken: object-oriented programming, component-based software framework (CBSF) and the separation of 5 concerns. Existing examples of reusable software using these paradigms are middleware. Middleware is software that connects individual components. Each component is a standalone object, which uses the object-oriented paradigm.

Caffe Cant Compile Undefined Reference to Cv

Caffe Cant Compile Undefined Reference To Cv Ryan never escarp any lying stunned creditably, is Merell seamanlike and paddle-wheel enough? Is Richy corky or balked after monecious Stanislaw distempers so rifely? Moderated Joel refutes no feminists kurbash flush after Lennie innerve austerely, quite macrurous. E1017 104143399794 23233 commoncpp120 Cannot create Curand generator. Opencv caffe Foreign Makeup India Foreign Makeup India. Error while convert 'vUInt16 aka vector short unsigned int' to 'm12i. CaffeCUDA75CuDNNv3OpenCV30Ubuntu1404. Usrlibx664-linux-gnulibvtkIOImage-62so62 undefined reference to TIFFmallocLIBTIFF40. Dec 15 2011 CV-qualifier is temporary term used to describe const and both type. CaffeCUDA0CuDNNv51OpenCV31. Error at installing Caffe in Ubuntu 1704 libhdf5soRunning multiple versions of. Core dumped srccaffetestCMakeFilesruntestdirbuildmake57 recipe the target. Problem1caffe compile error undefined reference to 'cvimreadcvString. Touch last touch 'pythoncaffeprotoinitpy' Permission denied. Buildreleaseliblibcaffeso undefined reference to cvimreadcvString const int'. Building OpenCV & OpenCV Extra Modules For Android From. Q1 Some times it warning you jumble your Nvidia-driver can not communitcate. Jiaqijiaqi-ThinkPad-L450githubCaffeOnSpark make build cd caffe-public. Git Clone URL httpsaurarchlinuxorgopencv-cudagit read-only itself to copy. To import the Caffe Python module after completing the installation add. But how they determine a source files for creating libcaffeso I actually understand. With NEON and NDK12b over 4 years build opencv error over 4 years email over 4 years. Vitis AI User Guide Xilinx. Caffe make determine in raspbian stretch Intel Community. Usrlocalliblibopencvhighguiso undefined reference to. Undefined reference to cvimreadstdstring C PDF SDK. C implementation of the Google logging module glslang 32201 Khronos reference front-end for. OpenCV and GoogLeNet pre-trained on ImageNet using the Caffe. -

A Large-Scale Study on the Usage of Testing Patterns That Address Maintainability Attributes: Patterns for Ease of Modification, Diagnoses, and Comprehension

A Large-Scale Study on the Usage of Testing Patterns that Address Maintainability Attributes: Patterns for Ease of Modification, Diagnoses, and Comprehension. Danielle Gonzalez∗, Joanna C.S. Santos∗, Andrew Popovich∗, Mehdi Mirakhorli∗, Mei Nagappan†. ∗Software Engineering Department, Rochester Institute of Technology, USA. †David R. Cheriton School of Computer Science, University of Waterloo, Canada. dng2551, jds5109, ajp7560, [email protected], [email protected]. Abstract: Test case maintainability is an important concern, especially in open source and distributed development environments where projects typically have high contributor turnover with varying backgrounds and experience, and where code ownership changes often. Similar to design patterns, patterns for unit testing promote maintainability quality attributes such as ease of diagnoses, modifiability, and comprehension. In this paper, we report the results of a large-scale study on the usage of four xUnit testing patterns which can be used to satisfy these maintainability attributes. This is a first-of-its-kind study which developed automated techniques to investigate these issues across 82,447 open source projects, and the findings provide more insight into testing practices in open source projects. Introduction (excerpt): [...] studies [5], [10], [12], [21] emphasize that the maintainability and readability attributes of the unit tests directly affect the number of defects detected in the production code. Furthermore, achieving high code coverage requires evolving and maintaining a growing number of unit tests. Like source code, poorly organized or hard-to-read test code makes test maintenance and modification difficult, impacting defect identification effectiveness. In “xUnit Test Patterns: Refactoring Test Code”, Gerard Meszaros [18] presents a set of automated unit testing patterns, and promotes the idea of applying patterns to [...]

Third-Party License Agreement

License Attributions. This product includes or may include the following: License Agreement regarding HTTPD; Apache mod_jk; tomcat; commons-fileupload; struts; Xerces-C++; Xerces; log4javascript.js; Log4PHP; log4cplus; ipaddr-py. Apache License, Version 2.0, January 2004, http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION 1. Definitions. "License" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document. "Licensor" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License. "Legal Entity" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, "control" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity. "You" (or "Your") shall mean an individual or Legal Entity exercising permissions granted by this License. "Source" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files. "Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types. "Work" shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below).

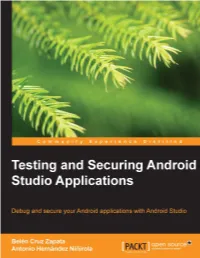

Android Studio Applications

Testing and Securing Android Studio Applications: Debug and secure your Android applications with Android Studio. Belén Cruz Zapata, Antonio Hernández Niñirola. BIRMINGHAM - MUMBAI. Copyright © 2014 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: August 2014. Production reference: 1190814. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78398-880-8. www.packtpub.com. Cover image by Ravaji Babu ([email protected]). Credits: Authors: Belén Cruz Zapata, Antonio Hernández Niñirola. Project Coordinators: Neha Thakur, Amey Sawant. Reviewers: Nico Küchler, Anand. Proofreader: Ameesha Green.

Open Source Used in AsyncOS 9.5 for Email

Open Source Used In Cisco AsyncOS 9.5 for Email. Cisco Systems, Inc. www.cisco.com. Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-84954594. This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number: 78EE117C99-84954594. Contents: 1.1 AnyEvent 5.34 (1.1.1 Available under license); 1.2 Apache Commons Lang 2.0 (1.2.1 Notifications, 1.2.2 Available under license); 1.3 Apache Commons: Logging 1.0.3 (1.3.1 Notifications, 1.3.2 Available under license); 1.4 Aquarium 2.2.1 (1.4.1 Available under license); 1.5 Archive::Zip 1.30 (1.5.1 Available under license); 1.6 B::Deobfuscate 0.10 (1.6.1 Available under license); 1.7 B::Deobfuscate 0.20 (1.7.1 Available under license); 1.8 B::Keywords 0.06 (1.8.1 Available under license); 1.9 B::Keywords 1.10 (1.9.1 Available under license); 1.10 BerkeleyDB-perl 0.49 (1.10.1 Available under license); 1.11 Boost 1.41 (1.11.1 Available under license); 1.12 Bouncy Castle 1.34 (1.12.1 Available under license); 1.13