Polytopes and the Simplex Method


Recommended publications


Geometry of Generalized Permutohedra

Geometry of Generalized Permutohedra by Jeffrey Samuel Doker. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Mathematics in the Graduate Division of the University of California, Berkeley. Committee in charge: Federico Ardila, Co-chair; Lior Pachter, Co-chair; Matthias Beck; Bernd Sturmfels; Lauren Williams; Satish Rao. Fall 2011. Copyright 2011 by Jeffrey Samuel Doker. Abstract: We study generalized permutohedra and some of the geometric properties they exhibit. We decompose matroid polytopes (and several related polytopes) into signed Minkowski sums of simplices and compute their volumes. We define the associahedron and multiplihedron in terms of trees and show them to be generalized permutohedra. We also generalize the multiplihedron to a broader class of generalized permutohedra, and describe their face lattices, vertices, and volumes. A family of interesting polynomials that we call composition polynomials arises from the study of multiplihedra, and we analyze several of their surprising properties. Finally, we look at generalized permutohedra of different root systems and study the Minkowski sums of faces of the crosspolytope. To Joe and Sue. Contents: List of Figures; 1 Introduction; 2 Matroid polytopes and their volumes (2.1 Introduction; 2.2 Matroid polytopes are generalized permutohedra; 2.3 The volume of a matroid polytope; 2.4 Independent set polytopes; 2.5 Truncation flag matroids); 3 Geometry and generalizations of multiplihedra (3.1 Introduction -

Two Poset Polytopes

Discrete Comput Geom 1:9-23 (1986). Discrete & Computational Geometry, © 1986 Springer-Verlag New York Inc. Two Poset Polytopes. Richard P. Stanley*, Department of Mathematics, Massachusetts Institute of Technology, Cambridge, MA 02139. Abstract. Two convex polytopes, called the order polytope $\mathcal{O}(P)$ and chain polytope $\mathcal{C}(P)$, are associated with a finite poset $P$. There is a close interplay between the combinatorial structure of $P$ and the geometric structure of $\mathcal{O}(P)$. For instance, the order polynomial $\Omega(P, m)$ of $P$ and Ehrhart polynomial $i(\mathcal{O}(P), m)$ of $\mathcal{O}(P)$ are related by $\Omega(P, m+1) = i(\mathcal{O}(P), m)$. A "transfer map" then allows us to transfer properties of $\mathcal{O}(P)$ to $\mathcal{C}(P)$. In particular, we transfer known inequalities involving linear extensions of $P$ to some new inequalities. 1. The Order Polytope. Our aim is to investigate two convex polytopes associated with a finite partially ordered set (poset) $P$. The first of these, which we call the "order polytope" and denote by $\mathcal{O}(P)$, has been the subject of considerable scrutiny, both explicit and implicit. Much of what we say about the order polytope will be essentially a review of well-known results, albeit ones scattered throughout the literature, sometimes in a rather obscure form. The second polytope, called the "chain polytope" and denoted $\mathcal{C}(P)$, seems never to have been previously considered per se. It is a special case of the vertex-packing polytope of a graph (see Section 2) but has many special properties not in general valid or meaningful for graphs. -
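The relation between the order polynomial and the Ehrhart polynomial quoted in this abstract can be checked by brute force on a small example. The sketch below is illustrative only and is not taken from Stanley's paper: the function names and the three-element "V" poset are assumptions, and the order polytope is taken to be the set of order-preserving maps from the poset into [0, 1], so that the lattice points of its m-th dilate are order-preserving maps into {0, ..., m}.

```python
from itertools import product

def order_polynomial(n, relations, k):
    """Count order-preserving maps from the poset {0..n-1} into the chain {1..k}.

    `relations` lists pairs (a, b) meaning a <= b in the poset; listing the
    cover relations is enough, since the rest follow by transitivity.
    """
    return sum(
        all(f[a] <= f[b] for a, b in relations)
        for f in product(range(1, k + 1), repeat=n)
    )

def ehrhart_count(n, relations, m):
    """Count integer points of the m-th dilate of the order polytope, i.e.
    points x in {0..m}^n with x[a] <= x[b] whenever a <= b in the poset."""
    return sum(
        all(x[a] <= x[b] for a, b in relations)
        for x in product(range(m + 1), repeat=n)
    )

# Hypothetical example poset: element 0 lies below both 1 and 2 (a "V" shape).
V_POSET = [(0, 1), (0, 2)]

if __name__ == "__main__":
    for m in range(5):
        print(m, order_polynomial(3, V_POSET, m + 1), ehrhart_count(3, V_POSET, m))
```

For this poset both columns agree for every m tried, which is the identity stated in the abstract specialised to one small case; the check is a sanity test, not a proof.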

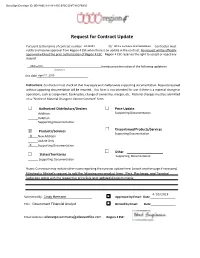

Request for Contract Update

DocuSign Envelope ID: 0E48A6C9-4184-4355-B75C-D9F791DFB905 Request for Contract Update Pursuant to the terms of contract number________________R142201 for _________________________ Office Furniture and Installation Contractor must notify and receive approval from Region 4 ESC when there is an update in the contract. No request will be officially approved without the prior authorization of Region 4 ESC. Region 4 ESC reserves the right to accept or reject any request. Allsteel Inc. hereby provides notice of the following update on (Contractor) this date April 17, 2019 . Instructions: Contractor must check all that may apply and shall provide supporting documentation. Requests received without supporting documentation will be returned. This form is not intended for use if there is a material change in operations, such as assignment, bankruptcy, change of ownership, merger, etc. Material changes must be submitted on a “Notice of Material Change to Vendor Contract” form. Authorized Distributors/Dealers Price Update Addition Supporting Documentation Deletion Supporting Documentation Discontinued Products/Services X Products/Services Supporting Documentation X New Addition Update Only X Supporting Documentation Other States/Territories Supporting Documentation Supporting Documentation Notes: Contractor may include other notes regarding the contract update here: (attach another page if necessary). Attached is Allsteel's request to add the following new product lines: Park, Recharge, and Townhall Collection along with the respective price -

A General Geometric Construction of Coordinates in a Convex Simplicial Polytope

A general geometric construction of coordinates in a convex simplicial polytope. Tao Ju (Washington University, St. Louis, USA), Peter Liepa (Autodesk, Toronto, Canada), Joe Warren (Rice University, Houston, USA). Abstract: Barycentric coordinates are a fundamental concept in computer graphics and geometric modeling. We extend the geometric construction of Floater’s mean value coordinates [8,11] to a general form that is capable of constructing a family of coordinates in a convex 2D polygon, 3D triangular polyhedron, or a higher-dimensional simplicial polytope. This family unifies previously known coordinates, including Wachspress coordinates, mean value coordinates and discrete harmonic coordinates, in a simple geometric framework. Using the construction, we are able to create a new set of coordinates in 3D and higher dimensions and study its relation with known coordinates. We show that our general construction is complete, that is, the resulting family includes all possible coordinates in any convex simplicial polytope. Key words: Barycentric coordinates, convex simplicial polytopes. 1 Introduction. In computer graphics and geometric modelling, we often wish to express a point $x$ as an affine combination of a given point set $v_\Sigma = \{v_1, \ldots, v_i, \ldots\}$: $x = \sum_{i \in \Sigma} b_i v_i$, where $\sum_{i \in \Sigma} b_i = 1$. (1) Here $b_\Sigma = \{b_1, \ldots, b_i, \ldots\}$ are called the coordinates of $x$ with respect to $v_\Sigma$ (we shall use subscript $\Sigma$ hereafter to denote a set). In particular, $b_\Sigma$ are called barycentric coordinates if they are non-negative. Preprint submitted to Elsevier Science, 3 December 2006. [Figure, panels (a) and (b): a point x with vertices v1, v2, v3, v4.] Fig. -
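Equation (1) is easiest to see in the simplest case, a triangle in the plane, where the coordinates are unique and can be computed as ratios of signed areas. The following is a minimal sketch under that assumption; it is not the mean value construction of the paper, and the function name `barycentric` and the sample points are chosen here for illustration.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates of 2D point p with respect to triangle (a, b, c).

    Each coordinate is the signed area of the sub-triangle obtained by
    replacing one vertex with p, divided by the signed area of (a, b, c).
    """
    def cross(u, v, w):
        # Twice the signed area of triangle (u, v, w).
        return (v[0] - u[0]) * (w[1] - u[1]) - (v[1] - u[1]) * (w[0] - u[0])

    total = cross(a, b, c)
    if total == 0:
        raise ValueError("degenerate triangle")
    return (cross(p, b, c) / total, cross(a, p, c) / total, cross(a, b, p) / total)

if __name__ == "__main__":
    a, b, c = (0.0, 0.0), (4.0, 0.0), (0.0, 4.0)
    weights = barycentric((1.0, 1.0), a, b, c)
    print(weights)  # the weights sum to 1 and are non-negative inside the triangle
```

The three weights sum to 1 by construction, and all are non-negative exactly when the point lies in the triangle, matching the definition of barycentric coordinates in the excerpt.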


The Geometry of Nim arXiv:1109.6712v1 [math.CO] 30

The Geometry of Nim. Kevin Gibbons. Abstract: We relate the Sierpinski triangle and the game of Nim. We begin by defining both a new high-dimensional analog of the Sierpinski triangle and a natural geometric interpretation of the losing positions in Nim, and then, in a new result, show that these are equivalent in each finite dimension. 0 Introduction. The Sierpinski triangle (fig. 1) is one of the most recognizable figures in mathematics, and with good reason. It appears in everything from Pascal's Triangle to Conway's Game of Life. In fact, it has already been seen to be connected with the game of Nim, albeit in a very different manner than the one presented here [3]. A number of analogs have been discovered, such as the Menger sponge (fig. 2) and a three-dimensional version called a tetrix. We present, in the first section, a generalization in higher dimensions differing from the more typical simplex generalization. Rather, we define a discrete Sierpinski demihypercube, which in three dimensions coincides with the simplex generalization. In the second section, we briefly review Nim and the theory behind optimal play. As in all impartial games (games in which the possible moves depend only on the state of the game, and not on which of the two players is moving), all possible positions can be divided into two classes: those in which the next player to move can force a win, called N-positions, and those in which regardless of what the next player does the other player can force a win, called P-positions. -
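The split into N-positions and P-positions can be computed directly from the definition and compared with the classical nim-sum criterion (Bouton's theorem: under normal play a position is a P-position exactly when the XOR of the heap sizes is zero). The sketch below assumes normal play, where the player who cannot move loses; the function names are chosen for illustration and do not come from the paper.

```python
from functools import lru_cache, reduce
from operator import xor

def nim_sum_is_zero(heaps):
    """Classical criterion: XOR of all heap sizes equals zero."""
    return reduce(xor, heaps, 0) == 0

@lru_cache(maxsize=None)
def is_p_position(heaps):
    """True if the player about to move loses with best play (normal play).

    `heaps` is a sorted tuple of heap sizes. A position is a P-position
    when no move leads to another P-position.
    """
    for i, h in enumerate(heaps):
        for new_size in range(h):  # remove at least one object from heap i
            nxt = tuple(sorted(heaps[:i] + (new_size,) + heaps[i + 1:]))
            if is_p_position(nxt):
                return False  # a winning move exists, so this is an N-position
    return True

if __name__ == "__main__":
    print(is_p_position((1, 2, 3)), nim_sum_is_zero((1, 2, 3)))
```

Sorting the heaps before caching keeps the search small, since the order of heaps does not affect who wins.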

Notes Has Not Been Formally Reviewed by the Lecturer

6.896 Topics in Algorithmic Game Theory, February 22, 2010. Lecture 6. Lecturer: Constantinos Daskalakis. Scribe: Jason Biddle, Debmalya Panigrahi. NOTE: The content of these notes has not been formally reviewed by the lecturer. It is recommended that they are read critically. In our last lecture, we proved Nash's Theorem using Brouwer's Fixed Point Theorem. We also showed Brouwer's Theorem via Sperner's Lemma in 2-d using a limiting argument to go from the discrete combinatorial problem to the topological one. In this lecture, we present a multidimensional generalization of the proof from last time. Our proof differs from those typically found in the literature. In particular, we will insist that each step of the proof be constructive. Using constructive arguments, we shall be able to pin down the complexity-theoretic nature of the proof and make the steps algorithmic in subsequent lectures. In the first part of our lecture, we present a framework for the multidimensional generalization of Sperner's Lemma: (i) A Canonical Triangulation of the Hypercube; (ii) A Legal Coloring Rule. In the second part, we formally state Sperner's Lemma in n dimensions and prove it using the following constructive steps: (i) Colored Envelope Construction; (ii) Definition of the Walk; (iii) Identification of the Starting Simplex; (iv) Direction of the Walk. 1 Framework. Recall that in the 2-dimensional case we had a square which was divided into triangles. We had also defined a legal coloring scheme for the triangle vertices lying on the boundary of the square. Now let us extend those concepts to higher dimensions. 1.1 Canonical Triangulation of the Hypercube. We begin by introducing the n-simplex as the n-dimensional analog of the triangle in 2 dimensions as shown in Figure 1. -

1 Lifts of Polytopes

Lecture 5: Lifts of polytopes and non-negative rank. CSE 599S: Entropy optimality, Winter 2016. Instructor: James R. Lee. Last updated: January 24, 2016. 1 Lifts of polytopes. 1.1 Polytopes and inequalities. Recall that the convex hull of a subset $X \subseteq \mathbb{R}^n$ is defined by $\mathrm{conv}(X) = \{\lambda x + (1-\lambda) x' : x, x' \in X,\ \lambda \in [0,1]\}$. A $d$-dimensional convex polytope $P \subseteq \mathbb{R}^d$ is the convex hull of a finite set of points in $\mathbb{R}^d$: $P = \mathrm{conv}(\{x_1, \ldots, x_k\})$ for some $x_1, \ldots, x_k \in \mathbb{R}^d$. Every polytope has a dual representation: It is a closed and bounded set defined by a family of linear inequalities $P = \{x \in \mathbb{R}^d : Ax \le b\}$ for some matrix $A \in \mathbb{R}^{m \times d}$. Let us define a measure of complexity for $P$: Define $\gamma(P)$ to be the smallest number $m$ such that for some $C \in \mathbb{R}^{s \times d}$, $y \in \mathbb{R}^s$, $A \in \mathbb{R}^{m \times d}$, $b \in \mathbb{R}^m$, we have $P = \{x \in \mathbb{R}^d : Cx = y \text{ and } Ax \le b\}$. In other words, this is the minimum number of inequalities needed to describe $P$. If $P$ is full-dimensional, then this is precisely the number of facets of $P$ (a facet is a maximal proper face of $P$). Thinking of $\gamma(P)$ as a measure of complexity makes sense from the point of view of optimization: Interior point methods can efficiently optimize linear functions over $P$ (to arbitrary accuracy) in time that is polynomial in $\gamma(P)$. 1.2 Lifts of polytopes. Many simple polytopes require a large number of inequalities to describe. -
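The two descriptions of a polytope mentioned in this excerpt, as a convex hull of points and as a system of linear inequalities, can be compared on a toy case. The sketch below uses the unit square, whose four facets give four inequalities; the helper names `convex_combination` and `satisfies`, and the choice of example, are assumptions made here and are not part of the lecture notes.

```python
def convex_combination(points, weights, tol=1e-12):
    """Return sum_i weights[i] * points[i], requiring non-negative weights that sum to 1."""
    if any(w < -tol for w in weights) or abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must be non-negative and sum to 1")
    dim = len(points[0])
    return tuple(sum(w * p[k] for w, p in zip(weights, points)) for k in range(dim))

def satisfies(A, b, x, tol=1e-12):
    """Check the inequality description A x <= b row by row."""
    return all(
        sum(a_k * x_k for a_k, x_k in zip(row, x)) <= b_j + tol
        for row, b_j in zip(A, b)
    )

# Vertex description of the unit square.
SQUARE_VERTICES = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

# Inequality description: -x <= 0, x <= 1, -y <= 0, y <= 1 (one row per facet).
SQUARE_A = [(-1.0, 0.0), (1.0, 0.0), (0.0, -1.0), (0.0, 1.0)]
SQUARE_B = [0.0, 1.0, 0.0, 1.0]

if __name__ == "__main__":
    centre = convex_combination(SQUARE_VERTICES, [0.25, 0.25, 0.25, 0.25])
    print(centre, satisfies(SQUARE_A, SQUARE_B, centre))
```

Every convex combination of the vertices passes the inequality test, while a point such as (1.5, 0.5) fails it. In this full-dimensional example the number of rows, four, equals the number of facets.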

Interval Vector Polytopes

Interval Vector Polytopes by Gabriel Dorfsman-Hopkins. Senior Honors Thesis. Rosa C. Orellana, Advisor. Department of Mathematics, Dartmouth College, June 2013. Contents: Abstract; 1 Introduction; 2 Preliminaries (2.1 Convex Polytopes; 2.2 Lattice Polytopes; 2.2.1 Ehrhart Theory; 2.3 Faces, Face Lattices and the Dual; 2.3.1 Faces of a Polytope; 2.3.2 The Face Lattice of a Polytope; 2.3.3 Duality; 2.4 Basic Graph Theory); 3 Interval Vector Polytopes (3.1 The Complete Interval Vector Polytope; 3.2 The Fixed Interval Vector Polytope; 3.2.1 Flow Dimension Graphs); 4 The Interval Pyramid (4.1 f-vector of the Interval Pyramid; 4.2 Volume of the Interval Pyramid; 4.3 Duality of the Interval Pyramid); 5 Open Questions (5.1 Generalized Interval Pyramid); Bibliography. Abstract: An interval vector is a $\{0,1\}$-vector where all the ones appear consecutively. An interval vector polytope is the convex hull of a set of interval vectors in $\mathbb{R}^n$. We study several classes of interval vector polytopes which exhibit interesting combinatorial-geometric properties. In particular, we study a class whose volumes are equal to the Catalan numbers, another class whose legs always form a lattice basis for their affine space, and a third whose face numbers are given by the Pascal 3-triangle. Chapter 1 Introduction. An interval vector is a $\{0,1\}$-vector in $\mathbb{R}^n$ such that the ones (if any) occur consecutively. These vectors can nicely and discretely model scheduling problems where they represent the length of an uninterrupted activity, and so understanding the combinatorics of these vectors is useful in optimizing scheduling problems of continuous activities of certain lengths. -
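The definition of an interval vector is simple enough to test and enumerate directly. The sketch below is an illustration under the definition quoted above (ones, if any, occur consecutively, so the zero vector qualifies); the function names are hypothetical and not taken from the thesis.

```python
from itertools import product

def is_interval_vector(v):
    """True if v is a 0/1 vector whose ones (if any) occupy consecutive positions."""
    if any(x not in (0, 1) for x in v):
        return False
    ones = [i for i, x in enumerate(v) if x == 1]
    # Consecutive ones: the span from first to last one contains only ones.
    return not ones or ones[-1] - ones[0] + 1 == len(ones)

def nonzero_interval_vectors(n):
    """List every nonzero interval vector of length n, one per choice of start and end."""
    return [
        tuple(1 if start <= i <= end else 0 for i in range(n))
        for start in range(n)
        for end in range(start, n)
    ]

if __name__ == "__main__":
    for v in nonzero_interval_vectors(3):
        print(v)
```

Each nonzero interval vector is determined by where its run of ones starts and ends, so there are n(n+1)/2 of them in dimension n; the enumeration and the brute-force predicate can be cross-checked against each other.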

The Orientability of Small Covers and Coloring Simple Polytopes

Nakayama, H. and Nishimura, Y. Osaka J. Math. 42 (2005), 243–256. THE ORIENTABILITY OF SMALL COVERS AND COLORING SIMPLE POLYTOPES. HISASHI NAKAYAMA and YASUZO NISHIMURA (Received September 1, 2003). Abstract: Small Cover is an $n$-dimensional manifold endowed with a $\mathbb{Z}_2^n$ action whose orbit space is a simple convex polytope $P$. It is known that a small cover over $P$ is characterized by a coloring of $P$ which satisfies a certain condition. In this paper we shall investigate the topology of small covers by the coloring theory in combinatorics. We shall first give an orientability condition for a small cover. In case $n = 3$, an orientable small cover corresponds to a four colored polytope. The four color theorem implies the existence of orientable small cover over every simple convex 3-polytope. Moreover we shall show the existence of non-orientable small cover over every simple convex 3-polytope, except the 3-simplex. 0. Introduction. “Small Cover” was introduced and studied by Davis and Januszkiewicz in [5]. It is a real version of “Quasitoric manifold,” i.e., an $n$-dimensional manifold endowed with an action of the group $\mathbb{Z}_2^n$ whose orbit space is an $n$-dimensional simple convex polytope. A typical example is provided by the natural action of $\mathbb{Z}_2^n$ on the real projective space $\mathbb{R}P^n$ whose orbit space is an $n$-simplex. Let $P$ be an $n$-dimensional simple convex polytope. Here $P$ is simple if the number of codimension-one faces (which are called “facets”) meeting at each vertex is $n$, equivalently, the dual of its boundary complex $\partial P$ is an $(n-1)$-dimensional simplicial sphere. -

Uniform Polychora

BRIDGES: Mathematical Connections in Art, Music, and Science. Uniform Polychora. Jonathan Bowers, 11448 Lori Ln, Tyler, TX 75709. Abstract: Like polyhedra, polychora are beautiful aesthetic structures, with one difference: polychora are four dimensional. Although they are beyond human comprehension to visualize, one can look at various projections or cross sections which are three dimensional and usually very intricate; these make outstanding pieces of art both in model form and in computer graphics. Polygons and polyhedra have been known since ancient times, but little study has gone into the next dimension until recently. Definitions: A polychoron is basically a four dimensional "polyhedron" in the same sense that a polyhedron is a three dimensional "polygon". To be more precise, a polychoron is a 4-dimensional "solid" bounded by cells with the following criteria: 1) each cell is adjacent to only one other cell for each face, 2) no subset of cells fits criterion 1, 3) no two adjacent cells are corealmic. If criterion 1 fails, then the figure is degenerate. The word "polychoron" was invented by George Olshevsky with the following construction: poly = many and choron = rooms or cells. A polytope (polyhedron, polychoron, etc.) is uniform if it is vertex transitive and its facets are uniform (a uniform polygon is a regular polygon). Degenerate figures can also be uniform under the same conditions. A vertex figure is the figure representing the shape and "solid" angle of the vertices, e.g. the vertex figure of a cube is a triangle with edge length of the square root of 2. -

Articles and Scheduling for Student Seminar in Combinatorics: Linear Complementarity

Articles and Scheduling for Student Seminar in Combinatorics: Linear Complementarity. Komei Fukuda, Department of Mathematics and Institute of Theoretical Computer Science, ETH Zurich, Switzerland. http://www.inf.ethz.ch/personal/fukudak/ October 7, 2015 (Last changes marked by blue). 1 Seminar Schedule. The schedule is meant to be tentative and may be modified depending on the progress of our seminar. Students may use blackboards, overhead projector, or beamer for presentation. The lecture room is HG E 33.3 (Tuesday 10-12).

| Date | Article | Presenter(s) |
| --- | --- | --- |
| September 15 | overview, initial planning | Komei Fukuda |
| September 22 | fixing teams and planning | Komei Fukuda |
| September 29 | Preparation (no seminar) | |
| October 6 | QP duality [5] | team 1 (Bausch and Heins) |
| October 13 | LCP classics [6, pages 103-114] | team 2 (Buchmann and Mishra) |
| October 20 | LCP classics [6, pages 114-124] | team 3 (Akeret) |
| October 27 | NP-completeness [4] [20, Section 3.4] | team 4 (Dummermuth) |
| November 3 | PSD- and P-matrices [7, Sec 3.1–3.3] | team 5 (Göggel and Schneebeli) |
| November 10 | SU-matrices [7, Sec 3.4–3.6] | team 6 (Allemann) |
| November 17 | P(& SU)-LCP may not be hard [22, 16] | team 7 (Schiesser) |
| November 24 | Randomized algorithms [15, 23] | team 8 (Sidler and Towa) |
| December 1 | Two polynomial cases [11] | team 9 |
| December 8 | Oriented matroids and LCP [17] | team 10 (Leroy and Stenz) |
| December 15 | Combinatorial char. of K-matrices [12] | team 11 (Gleinig) |

2 Presentations and Teams. In this seminar, we study some of the most critical literature on linear complementarity with a strong emphasis on combinatorial and algorithmic aspects. -

Combinatorial Characterizations of K-Matrices

Combinatorial Characterizations of K-matrices. Jan Foniok, Komei Fukuda, Lorenz Klaus. October 29, 2018. arXiv:0911.2171v3 [math.OC] 3 Aug 2010. We present a number of combinatorial characterizations of K-matrices. This extends a theorem of Fiedler and Pták on linear-algebraic characterizations of K-matrices to the setting of oriented matroids. Our proof is elementary and simplifies the original proof substantially by exploiting the duality of oriented matroids. As an application, we show that a simple principal pivot method applied to the linear complementarity problems with K-matrices converges very quickly, by a purely combinatorial argument. Key words: P-matrix, K-matrix, oriented matroid, linear complementarity. 2010 MSC: 15B48, 52C40, 90C33. 1 Introduction. A matrix $M \in \mathbb{R}^{n \times n}$ is a P-matrix if all its principal minors (determinants of principal submatrices) are positive; it is a Z-matrix if all its off-diagonal elements are non-positive; and it is a K-matrix if it is both a P-matrix and a Z-matrix. Z- and K-matrices often occur in a wide variety of areas such as input–output production and growth models in economics, finite difference methods for partial differential equations, Markov processes in probability and statistics, and linear complementarity problems in operations research [2]. In 1962, Fiedler and Pták [9] listed thirteen equivalent conditions for a Z-matrix to be a K-matrix. Some of them concern the sign structure of vectors: Footnote a (to the title): This research was partially supported by the project ‘A Fresh Look at the Complexity of Pivoting in Linear Complementarity’ no.
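The three definitions in the introduction (P-matrix, Z-matrix, K-matrix) translate directly into a brute-force check for small matrices. The sketch below is illustrative only: it enumerates all principal minors, which is exponential in n, and the function names and sample matrices are assumptions made here rather than anything from the paper.

```python
from itertools import combinations

def det(m):
    """Determinant by Laplace expansion along the first row (fine for small matrices)."""
    if not m:
        return 1
    if len(m) == 1:
        return m[0][0]
    return sum(
        (-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
        for j in range(len(m))
    )

def is_p_matrix(m):
    """All principal minors (same row and column index set) are positive."""
    n = len(m)
    return all(
        det([[m[i][j] for j in idx] for i in idx]) > 0
        for size in range(1, n + 1)
        for idx in combinations(range(n), size)
    )

def is_z_matrix(m):
    """All off-diagonal entries are non-positive."""
    n = len(m)
    return all(m[i][j] <= 0 for i in range(n) for j in range(n) if i != j)

def is_k_matrix(m):
    """A K-matrix is both a P-matrix and a Z-matrix."""
    return is_p_matrix(m) and is_z_matrix(m)

if __name__ == "__main__":
    print(is_k_matrix([[2, -1], [-1, 2]]))
```

For instance, the matrix with rows (2, -1) and (-1, 2) has principal minors 2, 2 and 3 and non-positive off-diagonal entries, so it passes both tests; rows (1, 2) and (0, 1) give a P-matrix that is not a Z-matrix.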