The Shannon Sampling Theorem and Its Implications

Recommended publications

Mathematical Basics of Bandlimited Sampling and Aliasing

Signal Processing. By Vladimir Vitchev.

Modern applications often require that we sample analog signals, convert them to digital form, perform operations on them, and reconstruct them as analog signals. The important question is how to sample and reconstruct an analog signal while preserving the full information of the original.

To begin, we are concerned exclusively about bandlimited signals. The reasons are both mathematical and physical, as we discuss later. A signal is said to be bandlimited if the amplitude of its spectrum goes to zero for all frequencies beyond some threshold called the cutoff frequency. For one such signal (g(t) in Figure 1), the spectrum is zero for frequencies above a. In that case, the value a is also the bandwidth (BW) for this ...

Furthermore, note that the asterisk in Equation 3 denotes convolution, not multiplication. Since we know the spectrum of the original signal G(f), we need find only the Fourier transform of the train of impulses. To do so we recognize that the train of impulses is a periodic function and can, therefore, be ...

The limits of integration for Equation 5 are specified only for one period. That isn't a problem when dealing with the delta function, but to give rigor to the above expressions, note that substitutions can be made: The integral can be replaced with a Fourier integral from minus infinity to infinity, and the periodic train of delta functions can be replaced with a single delta function, which is the basis for the periodic signal.

Efficient Supersampling Antialiasing for High-Performance Architectures

TR91-023, April 1991. Steven Molnar, Department of Computer Science, The University of North Carolina at Chapel Hill, CB#3175, Sitterson Hall, Chapel Hill, NC 27599-3175. This work was supported by DARPA/ISTO Order No. 6090, NSF Grant No. DCI-8601152 and IBM. UNC is an Equal Opportunity/Affirmative Action Institution.

Abstract

Techniques are presented for increasing the efficiency of supersampling antialiasing in high-performance graphics architectures. The traditional approach is to sample each pixel with multiple, regularly spaced or jittered samples, and to blend the sample values into a final value using a weighted average [FUCH85][DEER88][MAMM89][HAEB90]. This paper describes a new type of antialiasing kernel that is optimized for the constraints of hardware systems and produces higher quality images with fewer sample points than traditional methods. The central idea is to compute a Poisson-disk distribution of sample points for a small region of the screen (typically pixel-sized, or the size of a few pixels). Sample points are then assigned to pixels so that the density of sample points (rather than weights) for each pixel approximates a Gaussian (or other) reconstruction filter as closely as possible. The result is a supersampling kernel that implements importance sampling with Poisson-disk-distributed samples. The method incurs no additional run-time expense over standard weighted-average supersampling methods, supports successive refinement, and can be implemented on any high-performance system that point samples accurately and has sufficient frame-buffer storage for two color buffers.

ESE 531: Digital Signal Processing

Lec 12: February 21st, 2017. Data Converters, Noise Shaping (con’t). Penn ESE 531 Spring 2017 - Khanna

Lecture Outline
• Data Converters
– Anti-aliasing
– ADC
– Quantization
– Practical DAC
• Noise Shaping

[Figure-only slides: ADC; Anti-Aliasing Filter with ADC; Oversampled ADC; Sampling and Quantization]

Effect of Quantization Error on Signal
• Quantization error is a deterministic function of the signal
– Consequently, the effect of quantization strongly depends on the signal itself
• Unless we consider fairly trivial signals, a deterministic analysis is usually impractical
– More common to look at errors from a statistical perspective
– "Quantization noise"
• Two aspects
– How much noise power (variance) does quantization add to our samples?
– How is this noise distributed in frequency?

Quantization Error
• Model quantization error as noise
• In that case:

Ideal Quantizer
• Quantization step Δ
• Quantization error has sawtooth shape
• Bounded by –Δ/2, +Δ/2
• Ideally infinite input range and infinite number of quantization levels
(Penn ESE 568 Fall 2016 - Khanna, adapted from Murmann EE315B, Stanford)

Ideal B-bit Quantizer
• Practical quantizers have a limited input range and a finite set of output codes
• E.g.
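The ideal-quantizer slide above states that the quantization error is bounded by ±Δ/2. A minimal sketch of that property follows, assuming a mid-tread rounding quantizer with unlimited input range; the function name `quantize`, the step size 0.25, and the sine test signal are illustrative choices, not taken from the lecture.

```python
import math


def quantize(x: float, delta: float) -> float:
    """Round x to the nearest multiple of the step size delta.

    Assumption: mid-tread quantizer with unlimited input range
    (the 'ideal quantizer' case, not a B-bit converter).
    """
    return delta * math.floor(x / delta + 0.5)


delta = 0.25  # illustrative quantization step
# One period of a unit-amplitude sine as a test signal (illustrative).
samples = [math.sin(2 * math.pi * n / 50) for n in range(50)]
errors = [quantize(s, delta) - s for s in samples]
max_abs_error = max(abs(e) for e in errors)

print(f"largest |error| = {max_abs_error:.4f}, bound = {delta / 2:.4f}")
```

A practical B-bit quantizer would additionally clip inputs that fall outside its input range, which this sketch deliberately leaves out.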

Comparing Oversampling Techniques to Handle the Class Imbalance Problem: a Customer Churn Prediction Case Study

Received September 14, 2016, accepted October 1, 2016, date of publication October 26, 2016, date of current version November 28, 2016. Digital Object Identifier 10.1109/ACCESS.2016.2619719

ADNAN AMIN (1), SAJID ANWAR (1), AWAIS ADNAN (1), MUHAMMAD NAWAZ (1), NEWTON HOWARD (2), JUNAID QADIR (3), (Senior Member, IEEE), AHMAD HAWALAH (4), AND AMIR HUSSAIN (5), (Senior Member, IEEE)

(1) Center for Excellence in Information Technology, Institute of Management Sciences, Peshawar 25000, Pakistan
(2) Nuffield Department of Surgical Sciences, University of Oxford, Oxford, OX3 9DU, U.K.
(3) Information Technology University, Arfa Software Technology Park, Lahore 54000, Pakistan
(4) College of Computer Science and Engineering, Taibah University, Medina 344, Saudi Arabia
(5) Division of Computing Science and Maths, University of Stirling, Stirling, FK9 4LA, U.K.

Corresponding author: A. Amin. The work of A. Hussain was supported by the U.K. Engineering and Physical Sciences Research Council under Grant EP/M026981/1.

ABSTRACT Customer retention is a major issue for various service-based organizations, particularly the telecom industry, wherein predictive models for observing the behavior of customers are one of the great instruments in the customer retention process and in inferring the future behavior of the customers. However, the performance of predictive models is greatly affected when the real-world data set is highly imbalanced. A data set is called imbalanced if the sample size of one class is very much smaller or larger than that of the other classes. The most commonly used technique for handling the class-imbalance problem (CIP) in various domains is over/under sampling.

Super-Sampling Anti-Aliasing Analyzed

Kristof Beets, Dave Barron. Beyond3D.

Abstract - This paper examines two varieties of super-sample anti-aliasing: Rotated Grid Super-Sampling (RGSS) and Ordered Grid Super-Sampling (OGSS). RGSS employs a sub-sampling grid that is rotated around the standard horizontal and vertical offset axes used in OGSS by (typically) 20 to 30°. RGSS is seen to have one basic advantage over OGSS: More effective anti-aliasing near the horizontal and vertical axes, where the human eye can most easily detect screen aliasing (jaggies). This advantage also permits the use of fewer sub-samples to achieve approximately the same visual effect as OGSS. In addition, this paper examines the fill-rate, memory, and bandwidth usage of both anti-aliasing techniques. Super-sampling anti-aliasing is found to be a costly process that inevitably reduces graphics processing performance, typically by a substantial margin. However, anti-aliasing’s positive impact on image quality is significant and is seen to be very important to an improved gaming experience and worth the performance cost.

What is Aliasing?

Computers have always strived to achieve a higher level of quality in graphics, with the goal in mind of eventually being able to create an accurate representation of reality. Of course, to achieve reality itself is impossible, as reality is infinitely detailed.

... digital medium like a CD. This translates to graphics in that a sample represents a specific moment as well as a specific area. A pixel represents each area and a frame represents each moment. At our current level of consumer technology, it simply ...

Discrete - Time Signals and Systems

Sampling – II: Sampling theorem & Reconstruction. Yogananda Isukapalli.

Sampling at different rates

From these figures, it can be concluded that it is very important to sample the signal adequately to avoid problems in reconstruction, which leads us to Shannon’s sampling theorem.

Fig. 7.1 Claude Shannon (1916-2001): The man who started the digital revolution

Shannon arrived at the revolutionary idea of digital representation by sampling the information source at an appropriate rate, and converting the samples to a bit stream. Before Shannon, it was commonly believed that the only way of achieving arbitrarily small probability of error in a communication channel was to reduce the transmission rate to zero. All this changed in 1948 with the publication of “A Mathematical Theory of Communication”, Shannon’s landmark work.

Shannon’s Sampling theorem

A continuous signal $x(t)$ with frequencies no higher than $f_{max}$ can be reconstructed exactly from its samples $x[n] = x(nT_s)$, if the samples are taken at a rate $f_s \ge 2 f_{max}$, where $f_s = 1/T_s$.

This simple theorem is one of the theoretical pillars of digital communications, control and signal processing.

Shannon’s Sampling theorem
• States that reconstruction from the samples is possible, but it doesn’t specify any algorithm for reconstruction
• It gives a minimum sampling rate that is dependent only on the frequency content of the continuous signal $x(t)$
• The minimum sampling rate of $2 f_{max}$ is called the “Nyquist rate”

Example 1: Sampling theorem, Nyquist rate

$x(t) = 2\cos(20\pi t)$, find the Nyquist frequency.

$x(t) = 2\cos(2\pi(10)t)$. The only frequency in the continuous-time signal is 10 Hz, $\therefore f_{max} = 10$ Hz.

Nyquist sampling rate: $f_s = f_{nyq} = 2 f_{max} = 20$ Hz

Fig. 7.2 Continuous-time sinusoid of frequency 10 Hz, sampled at the Nyquist rate, so the theorem states that 2 samples are enough per period.
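The worked example above (a 10 Hz cosine with a Nyquist rate of 20 Hz) can be checked numerically. The following is a minimal sketch; the helper names `nyquist_rate` and `sample` are hypothetical and not part of the lecture notes.

```python
import math


def nyquist_rate(f_max_hz: float) -> float:
    """Minimum sampling rate 2 * f_max from the sampling theorem."""
    return 2.0 * f_max_hz


def sample(x, fs_hz: float, n_samples: int) -> list:
    """Return x[n] = x(n * Ts) for n = 0 .. n_samples - 1, with Ts = 1 / fs."""
    ts = 1.0 / fs_hz
    return [x(n * ts) for n in range(n_samples)]


def x(t: float) -> float:
    """The example signal x(t) = 2 cos(20 pi t), a 10 Hz cosine."""
    return 2.0 * math.cos(20.0 * math.pi * t)


fs = nyquist_rate(10.0)      # 20 Hz for f_max = 10 Hz
samples = sample(x, fs, 4)   # two samples per period

print(fs, [round(s, 6) for s in samples])
```

At exactly the Nyquist rate the cosine is sampled at its peaks and troughs, so the samples alternate in sign, two per 0.1 s period.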

CSMOUTE: Combined Synthetic Oversampling and Undersampling Technique for Imbalanced Data Classification

Michał Koziarski, Department of Electronics, AGH University of Science and Technology, Al. Mickiewicza 30, 30-059 Kraków, Poland.

Abstract: In this paper we propose a novel data-level algorithm for handling data imbalance in the classification task, Synthetic Majority Undersampling Technique (SMUTE). SMUTE leverages the concept of interpolation of nearby instances, previously introduced in the oversampling setting in SMOTE. Furthermore, we combine both in the Combined Synthetic Oversampling and Undersampling Technique (CSMOUTE), which integrates SMOTE oversampling with SMUTE undersampling. The results of the conducted experimental study demonstrate the usefulness of both the SMUTE and the CSMOUTE algorithms, especially when combined with more complex classifiers, namely MLP and SVM, and when applied on datasets consisting of a large number of outliers. This leads us to a conclusion that the proposed approach shows promise for further extensions accommodating local data characteristics, a direction discussed in more detail in the paper.

... ity observations (oversampling). Secondly, the algorithm-level methods, which adjust the training procedure of the learning algorithms to better accommodate for the data imbalance. In this paper we focus on the former. Specifically, we propose a novel undersampling algorithm, Synthetic Majority Undersampling Technique (SMUTE), which leverages the concept of interpolation of nearby instances, previously introduced in the oversampling setting in SMOTE [5]. Secondly, we propose a Combined Synthetic Oversampling and Undersampling Technique (CSMOUTE), which integrates SMOTE oversampling with SMUTE undersampling. The aim of this paper is to serve as a preliminary study of the potential usefulness of the proposed approach, with the final goal of extending it to ...

The Nyquist Sampling Rate for Spiraling Curves

PHILIPPE JAMING, FELIPE NEGREIRA & JOSÉ LUIS ROMERO

Abstract. We consider the problem of reconstructing a compactly supported function from samples of its Fourier transform taken along a spiral. We determine the Nyquist sampling rate in terms of the density of the spiral and show that, below this rate, spirals suffer from an approximate form of aliasing. This sets a limit to the amount of undersampling that compressible signals admit when sampled along spirals. More precisely, we derive a lower bound on the condition number for the reconstruction of functions of bounded variation, and for functions that are sparse in the Haar wavelet basis.

1. Introduction

1.1. The mobile sampling problem. In this article, we consider the reconstruction of a compactly supported function from samples of its Fourier transform taken along certain curves, that we call spiraling. This problem is relevant, for example, in magnetic resonance imaging (MRI), where the anatomy and physiology of a person are captured by moving sensors. The Fourier sampling problem is equivalent to the sampling problem for bandlimited functions, that is, functions whose Fourier transforms are supported on a given compact set. The most classical setting concerns functions of one real variable with Fourier transform supported on the unit interval $[-1/2, 1/2]$, and sampled on a grid $\eta\mathbb{Z}$, with $\eta > 0$. The sampling rate $\eta$ determines whether every bandlimited function can be reconstructed from its samples: reconstruction fails if $\eta > 1$ and succeeds if $\eta \le 1$ [42]. The transition value $\eta = 1$ is known as the Nyquist sampling rate, and it is the benchmark for all sampling schemes: modern sampling strategies that exploit the particular structure of a certain class of signals are praised because they achieve sub-Nyquist sampling rates.

2.161 Signal Processing: Continuous and Discrete Fall 2008

MIT OpenCourseWare http://ocw.mit.edu 2.161 Signal Processing: Continuous and Discrete, Fall 2008. For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.

MASSACHUSETTS INSTITUTE OF TECHNOLOGY, DEPARTMENT OF MECHANICAL ENGINEERING

Sampling and the Discrete Fourier Transform

1 Sampling

Consider a continuous function $f(t)$ that is limited in extent, $T_1 \le t < T_2$. In order to process this function in the computer it must be sampled and represented by a finite set of numbers. The most common sampling scheme is to use a fixed sampling interval $\Delta T$ and to form a sequence of length $N$: $\{f_n\}$ $(n = 0 \ldots N-1)$, where $f_n = f(T_1 + n\Delta T)$.

In subsequent processing the function $f(t)$ is represented by the finite sequence $\{f_n\}$ and the sampling interval $\Delta T$. In practice, sampling occurs in the time domain by the use of an analog-digital (A/D) converter. The mathematical operation of sampling (not to be confused with the physics of sampling) is most commonly described as a multiplicative operation, in which $f(t)$ is multiplied by a Dirac comb sampling function $s(t; \Delta T)$, consisting of a set of delayed Dirac delta functions:

$$s(t; \Delta T) = \sum_{n=-\infty}^{\infty} \delta(t - n\Delta T) \qquad (1)$$

We denote the sampled waveform $f^{\star}(t)$ as

$$f^{\star}(t) = s(t; \Delta T)\, f(t) = \sum_{n=-\infty}^{\infty} f(t)\, \delta(t - n\Delta T) \qquad (2)$$

as shown in Fig. 1. Note that $f^{\star}(t)$ is a set of delayed weighted delta functions, and that the waveform must be interpreted in the distribution sense by the strength (or area) of each component impulse.
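The fixed-interval sampling scheme described above, a sequence of length N with f_n = f(T1 + nΔT), can be sketched in a few lines. This is an illustration only: the function name `sample_uniform` is hypothetical, and choosing ΔT = (T2 − T1)/N so that the N samples span the half-open interval is an assumption not stated in the excerpt.

```python
def sample_uniform(f, t1: float, t2: float, n: int):
    """Return ([f_0, ..., f_{n-1}], dt) with f_k = f(t1 + k * dt).

    Assumption: dt = (t2 - t1) / n, so that the n samples cover the
    half-open interval t1 <= t < t2 with a fixed sampling interval.
    """
    dt = (t2 - t1) / n
    return [f(t1 + k * dt) for k in range(n)], dt


# Illustrative use: sample f(t) = t^2 on 0 <= t < 1 with N = 4.
values, dt = sample_uniform(lambda t: t * t, 0.0, 1.0, 4)
print(dt, values)
```

The returned pair mirrors the excerpt's point that, after sampling, the function is represented only by the finite sequence and the sampling interval.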

Efficient Multidimensional Sampling

EUROGRAPHICS 2002 / G. Drettakis and H.-P. Seidel (Guest Editors), Volume 21 (2002), Number 3. Thomas Kollig and Alexander Keller, Department of Computer Science, Kaiserslautern University, Germany.

Abstract

Image synthesis often requires the Monte Carlo estimation of integrals. Based on a generalized concept of stratification we present an efficient sampling scheme that consistently outperforms previous techniques. This is achieved by assembling sampling patterns that are stratified in the sense of jittered sampling and N-rooks sampling at the same time. The faster convergence and improved anti-aliasing are demonstrated by numerical experiments.

Categories and Subject Descriptors (according to ACM CCS): G.3 [Probability and Statistics]: Probabilistic Algorithms (including Monte Carlo); I.3.2 [Computer Graphics]: Picture/Image Generation; I.3.7 [Computer Graphics]: Three-Dimensional Graphics and Realism.

1. Introduction

Many rendering tasks are given in integral form and usually the integrands are discontinuous and of high dimension, too. Since the Monte Carlo method is independent of dimension and applicable to all square-integrable functions, it has proven to be a practical tool for numerical integration. It relies on the point sampling paradigm and as such on sample placement. Increasing the uniformity of the samples is crucial for the efficiency of the stochastic method and the level of noise contained in the rendered images.

... general concept of stratification than just joining jittered and Latin hypercube sampling. Since our samples are highly correlated and satisfy a minimum distance property, noise artifacts are attenuated much more efficiently and anti-aliasing is improved.

2. Monte Carlo Integration

The Monte Carlo method of integration estimates the integral of a square-integrable function f over the s-dimensional unit cube by ...

Signal Sampling

FYS3240 PC-based instrumentation and microcontrollers. Signal sampling. Spring 2017 – Lecture #5. Bekkeng, 30.01.2017

Content
– Aliasing
– Sampling
– Analog to Digital Conversion (ADC)
– Filtering
– Oversampling
– Triggering

Analog Signal Information
Three types of information:
• Level
• Shape
• Frequency

Sampling Considerations
– An analog signal is continuous
– A sampled signal is a series of discrete samples acquired at a specified sampling rate
– The faster we sample the more our sampled signal will look like our actual signal
– If not sampled fast enough a problem known as aliasing will occur
[Figure: actual signal vs. sampled signal]

Aliasing
[Figure: adequately sampled signal vs. aliased signal]

Bandwidth of a filter
• The bandwidth B of a filter is defined to be between the -3 dB points

Sampling & Nyquist’s Theorem
• Nyquist’s sampling theorem:
– The sample frequency should be at least twice the highest frequency contained in the signal
• Or, more correctly: The sample frequency fs should be at least twice the bandwidth Δf of your signal
• In mathematical terms: fs ≥ 2 * Δf, where Δf = fhigh – flow
• However, to accurately represent the shape of the signal, or to determine peak maximum and peak locations, a higher sampling rate is required
– Typically a sample rate of 10 times the bandwidth of the signal is required.
[Figure: ECG signal. Illustration from Wikipedia]

Sampling Example
• 100 Hz sine wave sampled at 100 Hz: aliased signal
• 100 Hz sine wave sampled at 200 Hz: adequately sampled for frequency only (same # of cycles)
• 100 Hz sine wave sampled at 1 kHz: adequately sampled for frequency and shape

Hardware Filtering
• Filtering
– To remove unwanted signals from the signal that you are trying to measure
• Analog anti-aliasing low-pass filtering before the A/D converter
– To remove all signal frequencies that are higher than the input bandwidth of the device.
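The sampling example above (a 100 Hz sine sampled at 100 Hz, 200 Hz and 1 kHz) can be reproduced with the standard frequency-folding rule: a real sinusoid of frequency f sampled at fs appears at the distance from f to the nearest integer multiple of fs. The sketch below is illustrative; `apparent_frequency` is a hypothetical helper name, not something from the lecture.

```python
def apparent_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency (in Hz, between 0 and fs/2) at which a real sinusoid of
    frequency f_hz shows up after sampling at fs_hz.

    Uses the folding rule: reduce f modulo fs, then reflect about fs/2.
    """
    r = f_hz % fs_hz
    return min(r, fs_hz - r)


for fs in (100.0, 200.0, 1000.0):
    print(f"100 Hz sine sampled at {fs:g} Hz appears at "
          f"{apparent_frequency(100.0, fs):g} Hz")
```

Sampling at 100 Hz folds the 100 Hz tone down to 0 Hz, so every sample lands at the same phase, which is the aliased case in the example; at 200 Hz and 1 kHz the apparent frequency stays at 100 Hz.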

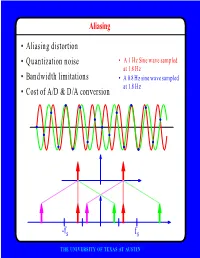

Aliasing Distortion • Quantization Noise • Bandwidth Limitations • Cost of A/D & D/A Conversion

• Aliasing distortion
• Quantization noise
• Bandwidth limitations
• Cost of A/D & D/A conversion

Aliasing
• A 1 Hz sine wave sampled at 1.8 Hz
• A 0.8 Hz sine wave sampled at 1.8 Hz
[Figure: frequency axis marked at -fs and fs]
THE UNIVERSITY OF TEXAS AT AUSTIN

Advantages of Digital Systems
• Perfect reconstruction of a signal is possible even after severe distortion
• Better trade-off between bandwidth and noise immunity performance
[Figure: digital vs. analog curves plotted against bandwidth]
• Increase signal-to-noise ratio simply by adding more bits: SNR = -7.2 + 6 dB/bit

Advantages of Digital Systems
Programmability
• Modifiable in the field
• Implement multiple standards
• Better user interfaces
• Tolerance for changes in specifications
• Get better use of hardware for low-speed operations
• Debugging
• User programmability

Disadvantages of Digital Systems
Programmability
• Speed is too slow for some applications
• High average power and peak power consumption: RISC (2 Watts) vs. DSP (50 mW)
[Figure: Harvard architecture with separate data memory and program memory]
• Aliasing from undersampling
• Clipping from quantization
[Figure: quantizer characteristic Q[v] versus v]

Analog-to-Digital Conversion
[Figure: block diagram. x(t) passes through the anti-aliasing filter h(t) to give y(t), the sampler (rate 1/T) gives y(nT), and the quantizer Q[.] gives ŷ(nT)]

Resampling
Changing the Sampling Rate
• Conversion between audio formats: Compact Disc (44.1 kHz) to Digital Audio Tape (48 kHz), ratio 48.0/44.1
• Speech compression: speech on DAT (48 kHz) to speech for telephone (8 kHz), ratio 1/6
• Video format conversion
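The resampling ratios quoted above (48.0/44.1 for CD to DAT, 1/6 for DAT to telephone speech) reduce to small integer ratios, which is what a rational resampler would work with. A minimal sketch using Python's standard `fractions` module follows; the helper name `resampling_ratio` is hypothetical, and reading the numerator and denominator as upsampling and downsampling factors is the usual interpretation rather than something stated in the slides.

```python
from fractions import Fraction


def resampling_ratio(fs_in_hz: int, fs_out_hz: int) -> Fraction:
    """Output rate divided by input rate, reduced to lowest terms."""
    return Fraction(fs_out_hz, fs_in_hz)


cd_to_dat = resampling_ratio(44_100, 48_000)   # Compact Disc -> Digital Audio Tape
dat_to_phone = resampling_ratio(48_000, 8_000)  # DAT speech -> telephone speech

print(f"CD -> DAT: {cd_to_dat.numerator}/{cd_to_dat.denominator}")
print(f"DAT -> telephone: {dat_to_phone.numerator}/{dat_to_phone.denominator}")
```

Under that interpretation, the CD-to-DAT conversion corresponds to upsampling by 160 and downsampling by 147, while the speech case is a plain decimation by 6.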