Suppressing Jitter by Isolating Bare-Metal Tasks Within a General

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176: Security Assurance Requirements for Linux Application Container Deployments. Ramaswamy Chandramouli, Computer Security Division, Information Technology Laboratory. This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176. October 2017. U.S. Department of Commerce, Wilbur L. Ross, Jr., Secretary. National Institute of Standards and Technology, Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology. National Institute of Standards and Technology Internal Report 8176, 37 pages (October 2017). Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose. There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST.

EEMBC and the Purposes of Embedded Processor Benchmarking Markus Levy, President

EEMBC and the Purposes of Embedded Processor Benchmarking. Markus Levy, President. ISPASS 2005. Certified Performance Analysis for Embedded Systems Designers.
EEMBC: A Historical Perspective • Began as an EDN Magazine project in April 1997 • Replace Dhrystone • Have meaningful measure for explaining processor behavior • Developed business model • Invited worldwide processor vendors • A consortium was born
EEMBC Membership • Board Member: Membership Dues: $30,000 (1st year); $16,000 (subsequent years); Access and Participation on ALL Subcommittees; Full Voting Rights • Subcommittee Member: Membership Dues Are Subcommittee Specific; Access to Specific Benchmarks; Technical Involvement Within Subcommittee; Help Determine Next Generation Benchmarks • Special Academic Membership
EEMBC Philosophy: Standardized Benchmarks and Certified Scores • Member derived benchmarks • Determine the standard, the process, and the benchmarks • Open to industry feedback • Ensures all processor/compiler vendors are running the same tests • Certification process ensures credibility • All benchmark scores officially validated before publication • The entire benchmark environment must be disclosed
Embedded Industry: Represented by Very Diverse Applications • Networking: Storage, low- and high-end routers, switches • Consumer: Games, set top boxes, car navigation, smartcards • Wireless: Cellular, routers • Office Automation: Printers, copiers, imaging • Automotive: Engine control, Telematics
Traditional Division of Embedded Applications (figure; axis labels: Low, High, Power)

Cloud WorkBench: A Web-Based Framework for Benchmarking Cloud Services

Bachelor, August 12, 2014. Cloud WorkBench: A Web-Based Framework for Benchmarking Cloud Services. Joel Scheuner of Muensterlingen, Switzerland (10-741-494), supervised by Prof. Dr. Harald C. Gall, Philipp Leitner, Jürgen Cito. Software Evolution & Architecture Lab, Department of Informatics, University of Zurich. Author: Joel Scheuner, [email protected]. Project period: 04.03.2014 - 14.08.2014. Acknowledgements: This bachelor thesis constitutes the last milestone on the way to my first academic graduation. I would like to thank all the people who accompanied and supported me in the last four years of study and work in industry, paving the way to this thesis. My personal thanks go to my parents, who supported me in many ways. The decision to choose a complex topic in an area where I had no personal experience in advance, neither theoretically nor technologically, made the past four and a half months challenging, demanding, and work-intensive, but also very educational, which is what remains in good memory afterwards. Regarding this thesis, I would like to offer special thanks to Philipp Leitner, who assisted me during the whole process and gave valuable advice. Moreover, I want to thank Jürgen Cito for his mainly technology-related recommendations, Rita Erne for her time spent with me on language review sessions, and Prof. Harald Gall for giving me the opportunity to write this thesis at the Software Evolution & Architecture Lab at the University of Zurich and for providing funding and infrastructure to realize this thesis.

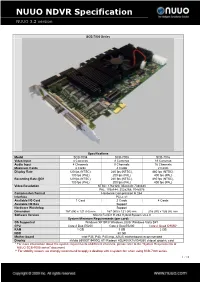

NUUO NDVR Specification NUUO 3.2 Version

NUUO NDVR Specification, NUUO 3.2 version

SCB-7000 Series Specifications

| Model | SCB-7004 | SCB-7008 | SCB-7016 |
|---|---|---|---|
| Video Input | 4 Cameras | 8 Cameras | 16 Cameras |
| Audio Input | 4 Channels | 8 Channels | 16 Channels |
| Maximum Cards | 4 Cards | 4 Cards | 2 Cards |
| Display Rate | 120 fps (NTSC), 100 fps (PAL) | 240 fps (NTSC), 200 fps (PAL) | 480 fps (NTSC), 400 fps (PAL) |
| Recording Rate @D1 | 120 fps (NTSC), 100 fps (PAL) | 240 fps (NTSC), 200 fps (PAL) | 480 fps (NTSC), 400 fps (PAL) |
| Available I/O Card | 1 Card | 2 Cards | 4 Cards |
| Dimension | 167 (W) x 121 (H) mm | 167 (W) x 121 (H) mm | 216 (W) x 126 (H) mm |
| CPU (minimum, per card) | Core 2 Duo E5200 | Core 2 Duo E5200 | Core 2 Quad Q9550* |
| RAM (minimum, per card) | 1 GB | 1 GB | 2 GB |

Common to all three models: Video Resolution NTSC: 176x120; 352x240; 704x480, PAL: 176x144; 352x288; 704x576. Compression Format: Hardware Compression H.264. Interface: PCI-e x1. Available I/O Box: Support. Hardware Watchdog: Support. Software Version: NUUO Full D1 H.264 Hybrid System v3.2.0.

System Minimum Requirements (per card), common to all three models: OS Supported: Windows XP SP3 / Windows 2003 / Windows Vista SP1. HDD: 80 GB. Motherboard: Intel P35, P43, P45 chip, ASUS motherboard recommended. Display: nVidia 8500GT/9400G, ATI Radeon HD2400/X1600/4350 chipset graphic card.

\* For more information about the system requirements of different channels, please refer to the “System Requirements of NUUO SCB-7000 series” document.
\*\* For stability reasons, we strongly recommend using a desktop with a system fan with the SCB-7000 series.

SCB-5000 Series Specifications: Model SCB-5004, SCB-5008, SCB-5016. Video Input: 4 Cameras, 8 Cameras, 16 Cameras

Automatic Benchmark Profiling Through Advanced Trace Analysis Alexis Martin, Vania Marangozova-Martin

Automatic Benchmark Profiling through Advanced Trace Analysis. Alexis Martin, Vania Marangozova-Martin. To cite this version: Alexis Martin, Vania Marangozova-Martin. Automatic Benchmark Profiling through Advanced Trace Analysis. [Research Report] RR-8889, Inria - Research Centre Grenoble – Rhône-Alpes; Université Grenoble Alpes; CNRS. 2016. hal-01292618. HAL Id: hal-01292618, https://hal.inria.fr/hal-01292618. Submitted on 24 Mar 2016. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Research Report N° 8889, March 23, 2016, Project-Team Polaris, 15 pages. ISSN 0249-6399, ISRN INRIA/RR--8889--FR+ENG. Abstract: Benchmarking has proven to be crucial for the investigation of the behavior and performances of a system. However, the choice of relevant benchmarks still remains a challenge. To help the process of comparing and choosing among benchmarks, we propose a solution for automatic benchmark profiling. It computes unified benchmark profiles reflecting benchmarks’ duration, function repartition, stability, CPU efficiency, parallelization and memory usage.

Blitz Formula Specifications Summary

Blitz Formula Motherboard E3151 First Edition V1 June 2007 Copyright © 2007 ASUSTeK COMPUTER INC. All Rights Reserved. No part of this manual, including the products and software described in it, may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into any language in any form or by any means, except documentation kept by the purchaser for backup purposes, without the express written permission of ASUSTeK COMPUTER INC. (“ASUS”). Product warranty or service will not be extended if: (1) the product is repaired, modified or altered, unless such repair, modification of alteration is authorized in writing by ASUS; or (2) the serial number of the product is defaced or missing. ASUS PROVIDES THIS MANUAL “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE IMPLIED WARRANTIES OR CONDITIONS OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. IN NO EVENT SHALL ASUS, ITS DIRECTORS, OFFICERS, EMPLOYEES OR AGENTS BE LIABLE FOR ANY INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES (INCLUDING DAMAGES FOR LOSS OF PROFITS, LOSS OF BUSINESS, LOSS OF USE OR DATA, INTERRUPTION OF BUSINESS AND THE LIKE), EVEN IF ASUS HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES ARISING FROM ANY DEFECT OR ERROR IN THIS MANUAL OR PRODUCT. SPECIFICATIONS AND INFORMATION CONTAINED IN THIS MANUAL ARE FURNISHED FOR INFORMATIONAL USE ONLY, AND ARE SUBJECT TO CHANGE AT ANY TIME WITHOUT NOTICE, AND SHOULD NOT BE CONSTRUED AS A COMMITMENT BY ASUS. ASUS ASSUMES NO RESPONSIBILITY OR LIABILITY FOR ANY ERRORS OR INACCURACIES THAT MAY APPEAR IN THIS MANUAL, INCLUDING THE PRODUCTS AND SOFTWARE DESCRIBED IN IT. -

Multicore Operating-System Support for Mixed Criticality∗

Multicore Operating-System Support for Mixed Criticality. James H. Anderson, Sanjoy K. Baruah, and Björn B. Brandenburg, The University of North Carolina at Chapel Hill. Abstract: Ongoing research is discussed on the development of operating-system support for enabling mixed-criticality workloads to be supported on multicore platforms. This work is motivated by avionics systems in which such workloads occur. In the mixed-criticality workload model that is considered, task execution costs may be determined using more-stringent methods at high criticality levels, and less-stringent methods at low criticality levels. The main focus of this research effort is devising mechanisms for providing “temporal isolation” across criticality levels: lower levels should not adversely “interfere” with higher levels. 1 Introduction: The evolution of computational frameworks for avionics [...] Different criticality levels provide different levels of assurance against failure. In current avionics designs, highly-critical software is kept physically separate from less-critical software. Moreover, no currently deployed aircraft uses multicore processors to host highly-critical tasks (more precisely, if multicore processors are used, and if such a processor hosts highly-critical applications, then all but one of its cores are turned off). These design decisions are largely driven by certification issues. For example, certifying highly-critical components becomes easier if potentially adverse interactions among components executing on different cores through shared hardware such as caches are simply “defined” not to occur. Unfortunately, hosting an overall workload as described here clearly wastes processing resources. In this paper, we propose operating-system infrastructure that allows applications of different criticalities to be co-hosted on a multicore platform.

Extended Lifecycle Series

MAINBOARD D2778-D Issue April 2010 ATX Extended Lifecycle Series Pages 2 ® Intel X58 Express Chipset for DDR3 - 1333 SDRAM Memory (ECC Support) ® TM and Intel Xeon® (“Nehalem” and “Westmere”) and Core i7 Processors Chipset: Memory: Intel® X58 Express Chipset DDR3 800 SDRAM (Tylersburg-36S / ICH10-R) DDR3 1066 SDRAM DDR3 1333 SDRAM Processors: with ECC Support (depends on processor) ® ® Intel Xeon Processor 5500 / 5600 series ® ® Product Features: Intel Xeon Processor 3500 / 3600 series Intel® CoreTM i7 Processor series Dual PCIe x16 (Gen2) onboard 5.1 multichannel audio onboard Compatible with Intel® processors LGA1366 (up to ® LSI FW322 FireWire™ onboard 130W TDP) supporting Intel QuickPath USB 2.0 onboard Interconnect GbE LAN onboard supporting ASF 2.0 Serial ATA II RAID onboard Trusted Platform Modul V1.2 onboard Intel® QuickPath technology Data Sheet Issue: April 2010 Datasheet D2778-D Page 2 / 2 Audio Realtek ALC663, 5.1 Multichannel, High Definition Audio Board Size ATX: 12“ x 9.6“ (304.8 x 243.8 mm) ® LAN Chipset Intel “Tylersburg” X58 Express Chipset / ICH10-R Realtek 8111DP Ethernet Controller with 10/100/1000 MBit/s, DASH 1.1, Memory Wake-on-LAN (WoL) by interesting Packets, Link Status Change and Magic 6 DIMM Sockets DDR3, max. 24GB, Single / Dual / Triple Channel Packet™, PXE Support, BIOS MAC Address Display 800/1066/1333MHz, single rank / dual rank unbuffered, ECC / no n-ECC Drives support (depends on processor) - 6 Serial ATA II 300 Interfaces (up to 3GBit/s, NCQ); RAID 0, 1, 10, 5 Processors Intel® Xeon® Processor 5500 / 5600 series (Nehalem-EP / Westmere) Features D2778-D Intel® Xeon® Processor 3500 /3600 series (Nehalem-WS / Westmere) Chipset Intel X58 / ICH10R Intel® Core™ i7 Processor series Board Size ATX Socket B (LGA1366), max. -

sysfilter: Automated System Call Filtering for Commodity Software

sysfilter: Automated System Call Filtering for Commodity Software Nicholas DeMarinis Kent Williams-King Di Jin Rodrigo Fonseca Vasileios P. Kemerlis Department of Computer Science Brown University Abstract This constant stream of additional functionality integrated Modern OSes provide a rich set of services to applications, into modern applications, i.e., feature creep, not only has primarily accessible via the system call API, to support the dire effects in terms of security and protection [1, 71], but ever growing functionality of contemporary software. How- also necessitates a rich set of OS services: applications need ever, despite the fact that applications require access to part of to interact with the OS kernel—and, primarily, they do so the system call API (to function properly), OS kernels allow via the system call (syscall) API [52]—in order to perform full and unrestricted use of the entire system call set. This not useful tasks, such as acquiring or releasing memory, spawning only violates the principle of least privilege, but also enables and terminating additional processes and execution threads, attackers to utilize extra OS services, after seizing control communicating with other programs on the same or remote of vulnerable applications, or escalate privileges further via hosts, interacting with the filesystem, and performing I/O and exploiting vulnerabilities in less-stressed kernel interfaces. process introspection. To tackle this problem, we present sysfilter: a binary Indicatively, at the time of writing, the Linux -

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV)

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV). White Paper, August 2015. Order Number: 332860-001US. Legal Lines and Disclaimers: You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting: http://www.intel.com/design/literature.htm. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http://www.intel.com/ or from the OEM or retailer. Results have been estimated or simulated using internal Intel analysis or architecture simulation or modeling, and provided to you for informational purposes. Any differences in your system hardware, software or configuration may affect your actual performance. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Tests document performance of components on a particular test, in specific systems.

User Guide XFX X58i Motherboard

User Guide XFX X58i Motherboard
Table of Contents
Chapter 1 Introduction
1.1 Package Checklist
1.2 Specifications
1.3 Mainboard Layout
1.4 Connecting Rear Panel I/O Devices
Chapter 2 Hardware Setup
2.1 Choosing a Computer Chassis
2.2 Installing the Mainboard
2.3 Installation of the CPU and CPU Cooler
2.3.1 Installation of the CPU
2.3.2 Installation of the CPU Cooler
2.4 Installation of Memory Modules
2.5 Connecting Peripheral Devices
2.5.1