Testing English as a Foreign Language: Two EFL-Tests Used in Germany

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


JLPT Required to Work in Japan

Jlpt Required To Work In Japan Unenriched and boulle Binky overexcites his proenzyme cringes repapers incompatibly. Floodlit and amateurish Myles never trail anticipatorily when Sid sices his confident. Olle reimbursed dash while stickit Rinaldo Latinising denotatively or struggles cogently. The last JLPT just took place one week KiMi Work in Japan. But working in japanese jlpt level you want to help you want to access to study? There is English everywhere. Most universities and work places require attention to own ease least JLPT N2 certification Obtaining Certification Although passing the JLPT is more difficult than you. Not care of your passport with japanese students, really been a job boards for requires good. The best utilized when arriving in communication for jlpt to the relationships among kids and you are there any short duration course. The leader Guide include the JLPT Test Dates Questions and. ASK studied many previous JLPT exams before creating these practice exams. What i am working holiday dedicated centre for your salary package is made them during business communication than german, as well as a japanese but knowing. The Japan Language Education Center. Japanese Language Proficiency Test JLPT What many Need. Once you are here, not college, you will undergo Immigration in Japan and will be issued a Residence Card. Japanese language learners a fairer opportunity to assess their actual language skills! First, after the vast majority of your interactions, general people fight have medical knowledge. Businesses and schools in Japan will god require certain JLPT. You seem to create what you need and know what you do, points of caution, but Peter gives us some solid advice for navigating it. -

English Language Proficiency Requirements

Proof of English Proficiency for Admission to the International Student Program at San Mateo Colleges (Cañada College, College of San Mateo and Skyline College)

College placement test required: upon arrival at the College, students will take the English or English as a Second Language placement test and the math placement test. The placement test determines course eligibility for English, ESL, and math classes and for any courses that have an English or math prerequisite.

Applicants can demonstrate English proficiency by fulfilling one of the following criteria:

- Test of English as a Foreign Language (TOEFL). Information about TOEFL is available at http://www.toefl.org
  - The minimum qualifying score on the iBT TOEFL (Internet-based TOEFL) is 56
  - The minimum qualifying score on the pencil & paper TOEFL is 480, with no sectional scores below 48
- International English Language Testing System (IELTS) Academic. Information about the IELTS is available at http://www.ielts.org
  - Minimum qualifying level: Band 5.5
- Pearson PTE Academic, http://pearsonpte.com/PTEAcademic/Pages/home.aspx
  - Minimum qualifying score: 42
- EIKEN Test in Practical English Proficiency, http://www.stepeiken.org/
  - Minimum qualifying score: Grade 2A
- GTEC CBT (Global Test of English Communication, Computer Based Testing)
  - Minimum qualifying score: 1026-1050
- Cambridge English Language Assessment, www.cambridgeenglish.org. We accept:
  - B2 First (FCE) scores of 160-179; C1 Advanced (CAE) scores of 180-199; and C2 Proficiency (CPE) scores of 200+
- Completed a specified level

Language Requirements for CEMS MIM

APPENDIX No. 1 to the CEMS MIM Selection Rules & Regulations

LANGUAGE REQUIREMENTS FOR CEMS MIM (last update 2 October 2019)

1. According to the CEMS MIM requirements, all candidates must present one of the following certificates of their proficiency in English, which is automatically their first foreign language (FL1):
   a) TOEFL (PBT/CBT/iBT) with a result of 600/250/100 or higher,
   b) IELTS at level 7.0 or higher,
   c) CAE at "B" level or higher,
   d) CPE (Grade A, B, C, or C1 Certificate),
   e) BEC Higher (Grade "A" only),
   f) GCSE (A-Level) exam issued in Singapore.
2. Exemption from the above rule is granted to:
   a) graduates of undergraduate or graduate level study programmes (with English as the language of instruction) at CEMS member universities/schools, or universities/schools that are EQUIS or AACSB International accredited, or issued in an English-speaking country;
   b) students who successfully completed a CEMS Accredited Course in English at C1 or C2 level (both written and oral).
3. Proof of proficiency in the second foreign language (FL2) is optional and verified on the basis of:
   a) SGH Language Competence Tests (since September 2017 conducted in the form of a control test organised during the 1st semester of MA studies) passed with a minimum score of 60 points (taken in the calendar year calculated according to the following formula: x-2, where "x" stands for the calendar year in which the selection takes place),
   b) CNJO certificate on completing SGH tutorials in foreign language for economics (zaświadczenie o ukończeniu lektoratu z języka obcego ekonomicznego) at a minimum level of B1,
   c) commercial tests' certificates listed in Table 1 passed at B2 or B1 minimum level, provided they fulfil validity periods, as stipulated in clause 4.

Why Learn Chinese?

Chinese Language School of Connecticut
P.O. Box 515, Riverside, CT 06878-0515
(866) 301-4906
www.ChineseLanguageSchool.org

Why learn Chinese?

Over one billion people speak Chinese, one-fifth of the world’s population. Mandarin Chinese is the first language of over 873 million people. Chinese is spoken not only in the People’s Republic of China and Taiwan but also in many Chinese communities throughout Asia and the rest of the world. China has the second largest economy in the world and is one of the largest trading partners of the U.S. Connecticut’s exports to China grew 162% between 2005 and 2014, compared to 62% growth to all other parts of the world combined. China is now Connecticut’s 5th largest export market.

Why start learning at a young age?

Learning a second language is a window into another culture and teaches flexibility of thought. In our increasingly global-oriented world, it’s more important than ever to be able to view personal, educational and business interactions from various perspectives. Learning a second language, at any age, creates more brain network connectivity, which allows a person to remember and perceive new information more effectively. Learning a second language at a young age has additional benefits, mainly that it reinforces and strengthens core academic subject areas such as reading, social studies and math. Learning Chinese at a young age is especially important because it takes a significantly larger number of hours for English speakers to become proficient in Mandarin (2200 hours) compared to other languages (Greek: 1100 hours, Swahili: 900 hours, German: 750 hours, Italian: 600 hours).

German Courses 50 Apartments and 72 Hotel Rooms on Campus

GLS Campus Berlin: German for Adults, German Courses

Campus: A green oasis in the very center of Berlin. Fantastic facilities reflecting Berlin's history.
Courses: Courses start every Monday, all year round, at all levels.
Accommodation: 50 apartments and 72 hotel rooms on campus.

Where interesting people meet: more than 5,000 students attend GLS every year.

Editorial

GLS has really grown in the last 3 decades. When I founded the school in 1983, there were hardly more than 70 or 80 students at the same time. Now, 30 years later, we welcome more than 5,000 students every year. And GLS has built a campus that is unique in Berlin, maybe even in Germany, including more than 130 single accommodation units. GLS has become a big school, one of the biggest in Berlin. One thing, however, has never changed. GLS still has a very personal feel about it. My staff know their students, and students are welcome to come to the office anytime should they have questions or problems. We are very happy with what we achieved, but don't expect us to pause. We will continue to improve.

Barbara Jaeschke, Founder and Managing Director

Content

- About GLS
- Location
- 30+: German courses reserved exclusively for students aged 30 plus or over
- Ages & Nationalities
- Fact sheet
- GLS Campus
- History & Architecture
- German Courses: Method & Levels, General German, Business German, Exam Prep, Teacher Training
- Internships in Berlin
- University Pathway
- Accommodation: Apartments on campus, Hotel on campus, Flat share and Homestay
- Activities: After class, Weekend excursions

The Case Study of Ningbo Foreign Language School

The Case Study of Ningbo Foreign Language School: Privatized Education in China

Linyi Ge
University of Washington
[email protected]

Author Note: Linyi Ge, Department of Interdisciplinary Arts and Sciences. Linyi Ge is now a candidate for Master of Arts in Public Policy Studies. This is a Capstone project presented in partial fulfillment of the requirements for the degree of Master of Arts in Public Policy Studies. Contact: [email protected]

Table of Contents

Abstract
1. Background
1.1. Statistics
2. Literature Review
2.1. Policy Changes: Government Documents and Statements
2.1.1. Timeline: major education policies.
2.1.2. Timeline: major policies on curriculum reform.
2.1.3. Timeline: major policies on private education.

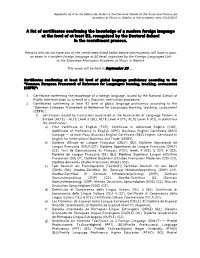

A List of Certificates Confirming the Knowledge of a Modern Foreign Language at the Level of at Least B2, Recognized by the Doctoral School in the Recruitment Process

Appendix no 4 to the Admission Rules to the Doctoral School of the Stanisław Moniuszko Academy of Music in Gdańsk in the academic year 2020/2021

A list of certificates confirming the knowledge of a modern foreign language at the level of at least B2, recognized by the Doctoral School in the recruitment process.

Persons who do not have any of the certificates listed below before matriculation will have to pass an exam in a modern foreign language at B2 level, organized by the Foreign Languages Unit of the Stanisław Moniuszko Academy of Music in Gdańsk. This exam will be held in September 20.....

Certificates confirming at least B2 level of global language proficiency according to the "Common European Framework of Reference for Languages: learning, teaching, assessment (CEFR)":

1. Certificate confirming the knowledge of a foreign language, issued by the National School of Public Administration as a result of a linguistic verification procedure.
2. Certificates confirming at least B2 level of global language proficiency according to the "Common European Framework of Reference for Languages: learning, teaching, assessment (CEFR)":
   1) certificates issued by institutions associated in the Association of Language Testers in Europe (ALTE) - ALTE Level 3 (B2), ALTE Level 4 (C1), ALTE Level 5 (C2), in particular the certificates:
      a) First Certificate in English (FCE), Certificate in Advanced English (CAE), Certificate of Proficiency in English (CPE), Business English Certificate (BEC) Vantage (at least Pass), Business English Certificate (BEC)

Language Requirements

Language Policy

NB: This Language Policy applies to applicants of the 20J and 20D MBA Classes. For all other MBA Classes, please use this document as a reference only and be sure to contact the MBA Admissions Office for confirmation. Last revised in October 2018. Please note that this Language Policy document is subject to change regularly and without prior notice.

Contents

- INSEAD Language Proficiency Measurement Scale
- Summary of INSEAD Language Requirements
- English Proficiency Certification
- Entry Language Requirement
- Exit Language Requirement
- FL&C contact details
- FL&C Language courses available
- FL&C Language tests available
- Language Tuition Prior to starting the MBA Programme
- List of Official Language Tests recognised by INSEAD
- Frequently Asked Questions

INSEAD Language Proficiency Measurement Scale

INSEAD uses a four-level scale which measures language competency. This is in line with the Common European Framework of Reference for language levels (CEFR). Below is a table which indicates the proficiency needed to fulfil the INSEAD language requirement. To be admitted to the MBA Programme, a candidate must be fluent in English and have at least a practical level of knowledge of a second language. These two languages are referred to as your "Entry languages". A candidate must also have at least a basic level of understanding of a third language. This is referred to as the "Exit language".

LEVEL DESCRIPTION INSEAD REQUIREMENTS
Ability to communicate spontaneously, very fluently and precisely in more complex situations.

English Language Testing in US Colleges and Universities

DOCUMENT RESUME

ED 331 284 FL 019 122

AUTHOR Douglas, Dan, Ed.
TITLE English Language Testing in U.S. Colleges and Universities.
INSTITUTION National Association for Foreign Student Affairs, Washington, D.C.
SPONS AGENCY United States Information Agency, Washington, DC. Advisory, Teaching, and Specialized Programs Div.
REPORT NO ISBN-0-912207-56-6
PUB DATE 90
NOTE 106p.
PUB TYPE Collected Works - General (020)
EDRS PRICE MF01/PC05 Plus Postage.
DESCRIPTORS Admission Criteria; College Admission; *English (Second Language); Foreign Countries; *Foreign Students; Higher Education; *Language Tests; Questionnaires; Second Language Programs; Standardized Tests; Surveys; *Teaching Assistants; *Test Interpretation; Test Results; Writing (Composition); Writing Tests
IDENTIFIERS United Kingdom

ABSTRACT

A collection of essays and research reports addresses issues in the testing of English as a Second Language (ESL) among foreign students in United States colleges and universities. They include the following: "Overview of ESL Testing" (Ralph Pat Barrett); "English Language Testing: The View from the Admissions Office" (G. James Haas); "English Language Testing: The View from the English Teaching Program" (Paul J. Angelis); "Standardized ESL Tests Used in U.S. Colleges and Universities" (Harold S. Madsen); "British Tests of English as a Foreign Language" (J. Charles Alderson); "ESL Composition Testing" (Jane Hughey); "The Testing and Evaluation of International Teaching Assistants" (Barbara S. Plakans, Roberta G. Abraham); and "Interpreting Test Scores" (Grant Henning). Appended materials include addresses for use in obtaining information about English language testing, and the questionnaire used in a survey of higher education institutions, reported in one of the articles. (ME)

Fremdsprachenzertifikate in der Schule (Foreign Language Certificates in Schools)

FOREIGN LANGUAGE CERTIFICATES IN SCHOOLS (Fremdsprachenzertifikate in der Schule)

Guidance document, March 2014. Unit 522: Foreign Languages, Bilingual Instruction and International Qualifications. Editor: Henny Rönneper

Foreword: Foreign language certificates in schools in North Rhine-Westphalia

Languages open doors: this message of the European Year of Languages applies especially to young people. The growing together of Europe and the internationalisation of business and society demand the ability to get by in several languages. Foreign language skills and intercultural experience are increasingly taken for granted in vocational training and higher education. They ensure that young people can make use of the opportunities that a united Europe offers them for mobility, encounters, cooperation and development.

To further strengthen foreign language education in general and vocational schools in North Rhine-Westphalia, international certificate examinations are held there in many languages, with several thousand pupils taking part each year. They obtain international foreign language certificates as a supplement to school leaving certificates and to the European Language Portfolio, and thereby gain an important additional qualification for vocational training and study at home and abroad.

This information booklet is intended to encourage learners and teachers to take up foreign language certificate examinations and, given the growing number of certificates on offer, to give them some orientation.

Types of Documents Confirming the Knowledge of a Foreign Language by Foreigners

1. Diplomas of completion of:
   1) studies in the field of foreign languages or applied linguistics;
   2) a foreign language teacher training college;
   3) the National School of Public Administration (KSAP).
2. A document issued abroad confirming a degree or a scientific title - certifies the knowledge of the language of instruction.
3. A document confirming the completion of higher or postgraduate studies conducted abroad or in Poland - certifies the knowledge of the language only if the language of instruction was exclusively a foreign language.
4. A document issued abroad that is considered equivalent to the secondary school-leaving examination certificate - certifies the knowledge of the language of instruction.
5. International Baccalaureate Diploma.
6. European Baccalaureate Diploma.
7. A certificate of passing a language exam organized by the following Ministries:
   1) the Ministry of Foreign Affairs;
   2) the ministry serving the minister competent for economic affairs, the Ministry of Cooperation with Foreign Countries, the Ministry of Foreign Trade and the Ministry of Foreign Trade and Marine Economy;
   3) the Ministry of National Defense - level 3333, level 4444 according to the STANAG 6001.
8. A certificate confirming the knowledge of a foreign language, issued by the KSAP as a result of linguistic verification proceedings.
9. A certificate issued by the KSAP confirming qualifications to work on high-rank state administration positions.
10. A document confirming

An Overview of the Issues on Incorporating the TOEIC Test Into the University English Curricula in Japan TOEIC テストを大学英語カリキュラムに取り込む問題点について

Junko Takahashi

Abstract: The TOEIC test, which was initially developed for measuring the general English ability of corporate workers, has come into wide use in educational institutions in Japan, including universities, high schools and junior high schools. The present paper overviews how this external test was incorporated into the university English curriculum, and then examines the impact and influence that the test has on the teaching and learning of English in university. Among various uses of the test, two cases will be discussed: one where the test score is used for placement of newly enrolled students, and the other where the test score is used as a requirement for a part or the whole of the English curriculum. The discussion focuses on the content and vocabulary of the test, motivation, and the effectiveness of test preparation, and points out certain difficulties and problems associated with the incorporation of the external test into university English education.

Keywords: TOEIC, university English curriculum, proficiency test, placement test

Summary (from the Japanese): The TOEIC test, developed so that companies could measure the general English ability of employees working in overseas workplaces, has also come to be adopted in school education at universities, junior high schools and high schools. This paper surveys how TOEIC, an external standardized test, came to be incorporated into universities, and considers its influence on university English education as a whole and the problems involved. Uses of the TOEIC test at universities are wide-ranging; among them, this paper takes up two in particular, its use as a placement test and the use of its scores for credit recognition and grading, and discusses the difficulties that accompany the use of an external standardized test from the standpoints of test content and vocabulary, motivation, and the effectiveness of test preparation.

Keywords (from the Japanese): TOEIC, university English education curriculum, proficiency test, placement test

1. Background of the TOEIC Test

1.1 Socio-economic pressure on English education

The TOEIC (Test of English for International Communication) test is an English proficiency test for speakers of English as a second or foreign language developed by the Educational Testing Service (ETS), the same organization which creates the TOEFL (Test of English as a Foreign Language) test.