Streamutils Documentation Release 0.1.1-Dev

Recommended publications

Game Engine Architecture

Game Engine Architecture, Chapter 1: Introduction. Prepared by Roger Mailler, Ph.D., Associate Professor of Computer Science, University of Tulsa.

Structure of a game team
• Lots of members, many jobs: engineers, artists, game designers, producers, publisher, other staff

Engineers
• Build the software that makes the game and its tools work
• Led by a senior engineer
• Runtime programmers
• Tools programmers

Artists
• Content is king
• Led by the art director
• Come in many flavors: concept artists, 3D modelers, texture artists, lighting artists, animators, motion capture, sound design, voice actors

Game designers
• Responsible for gameplay: story line, puzzles, levels, weapons
• Employ writers and sometimes ex-engineers

Producers
• Manage the schedule
• Sometimes act as the senior game designer
• Do HR-related tasks

Publisher
• Often not part of the same company
• Handles manufacturing, distribution and marketing
• You could be the publisher in an indie company

PG National Vigorsol Beats, When Conspiracy Meets the Video Game, Top 5

PG National Vigorsol Beats. On 7 April, the Teatro Ciak in Milan hosted one of the most exciting series of the latest split of the National Predator, the national League of Legends championship. This was the final showdown between two recently formed teams, Campus Party Sparks and Samsung Morning Stars. The match thrilled both the in-person and "virtual" audiences with plot twists and breathtaking moments. It drew more than a thousand spectators on site and over 5,000 on Twitch. The series was played as a best-of-five, and the Morning Stars made a strong start, coming close to victory with a 2-0 lead over their opponents. However, the Sparks' great ability to adapt and "mind-reset", along with a few mistakes on the Samsung side, secured the comeback and the victory for the first-placed team, which closed it out 3-2 after a thrilling game. During the Spring Split the winning team was largely dominant, with only 2 losses, in the first and third weeks of the competition, and a full 12 wins in total. As both teams were newcomers to the Italian scene, they surprised everyone by overtaking veteran and newer teams alike. For the first time, the competition's title sponsor is Vigorsol, the well-known chewing-gum company that decided to invest in the Italian scene. This is another sign of the ever-growing importance of esports and League of Legends over the years. Just consider how much the stakes have grown: the prize for the world championship went from $50,000 in the first season to a peak of $2,680,000 in the sixth season.

Game Developers: A One-of-a-Kind Game Concept, an Instantly Recognizable Character, a Clever Phrase: These Are All a Game Developer's Most Valuable Assets

Game Developer, August 2004, Volume 11, Number 7: the leading game industry magazine. Cover lines: SIGGRAPH 2004; Sneak peek: Discreet 3ds Max 7; Developer defense: legal tools to protect your I.P.; Fast radiosity: speeding up lightmaps with pixel shaders; Postmortem: the cinematic effect of Zombie Studios' Shadow Ops: Red Mercury; Reviews: Alias Maya 6, RTzen RT/shader.

Copyright: The Big Gun for Game Developers. A one-of-a-kind game concept, an instantly recognizable character, a clever phrase: these are all a game developer's most valuable assets. To protect such intangible properties from pirates, you'll need to bring out the big gun: copyright. Here's some free advice from a lawyer. By S. Gregory Boyd.

Fast Radiosity: Using Pixel Shaders. With the latest advances in hardware, GPU, and graphics technology, it's time to take another look at lightmapping, the divine art of illuminating a digital environment. By Brian Ramage.

From Bungie to Wideload, Seropian's Beat Goes On. A decade ago, Alexander Seropian founded a one-man company called Bungie, the studio that would eventually give us Myth, Oni, and Halo. Now, after his departure from Bungie, he's trying to repeat history by starting a new studio: Wideload Games.

Postmortem: The Cinematic Effect of Zombie Studios' Shadow Ops: Red Mercury. How do you give a player that vicarious presence in an imaginary environment, that "you-are-there" feeling that a good movie often gives? Zombie's answer was to adopt many of the standard movie production ...

Re-engining Competitors Vie to Keep These U.S. Nuclear, Conventional Workhorses Flying Past 2050

Aerospace America, February 2019, a publication of the American Institute of Aeronautics and Astronautics (aerospaceamerica.aiaa.org). Cover lines: Human spaceflight: the year of Commercial Crew; Q&A: OneWeb's satellite maker; Weather forecasting: radio occultation explained; Re-engining competitors vie to keep these U.S. nuclear, conventional workhorses flying past 2050.

AIAA Congressional Visits Day, Wednesday, 20 March: Advocate for Aerospace on Capitol Hill. Every year, AIAA members (engineers, scientists, researchers, students, educators, and technology executives) travel to Washington, DC, for a day of advocacy and awareness with national decision makers. Spend a day meeting with new and established congressional members and their staff. Your participation, enthusiasm, and passion remind our lawmakers that aerospace is a key component of an economically strong and secure nation. If you are interested in the future of your profession, the advancement of technology, the furthering of scientific research, and the strengthening of our nation's security, this event is for you! Travel subsidies are available. Register now: aiaa.org/CVD2019.

"Participating in CVD was like getting a bird's eye view of a grand and magnificent national aerospace project. I knew that my contribution might be small, but being a voice of the aerospace community filled my heart with immense pride and humility at the same time." (Ruchir Goswami, PhD Candidate, Iowa State University)

Features, February 2019 (more at aerospaceamerica.aiaa.org): Commercial Crew's payoff: NASA looks to 2019 as the year it might be liberated from ...; New engines for the B-52: The U.S. ...; Radio occultation is put to the test: entrepreneurs aim to prove that the technique is accurate; The cost of aerospace advances: an aerospace expert gives his take on ...

INTEGRATED REPORT 2018 Code Number: 9697

Capcom Co., Ltd. Integrated Report 2018 (Code Number: 9697). Cover: © CAPCOM U.S.A., INC. 2016, 2018 ALL RIGHTS RESERVED. Photo by: Carlton Beener.

Contents. Capcom's Value Creation: Past, Present, Future: Value Creation Model 2018; History of Value Creation (Past); Major Intellectual Properties (IP) (Present); Financial and ESG Highlights (Present); Business Segments Highlights (Present); Medium- to Long-Term Vision (Future). Medium- to Long-Term Growth Strategy: CEO Commitment; The COO's Discussion of Growth Strategies; Case Study: Analysis of a Global Hit; Analysis of Successful PDCA Examples; Financial Strategy According to the CFO. The Heart of Value Creation: The Passion and the Prowess to Compete Globally; Resident Evil 2; Devil May Cry 5; Mega Man 11. ESG-Based Value Creation: The Head of Development Discusses Human Resources Strategy; Social; Environmental; Corporate Governance; Directors; Features of Capcom Corporate Governance; Corporate Governance Structure and Initiatives; Major Discussions at Board of Directors Meetings and Audit and Supervisory Committee Meetings Held in Fiscal 2017; Risk Management. Financial Analysis and Corporate Data: An Analysis of the Market and Capcom; Market Analysis; 11-Year Summary of Consolidated Financial Indicators; Financial Review; Segment Information; Consolidated Financial Statement; Stock Data; Corporate Data.

Editorial Policy: Capcom's Integrated Report conveys initiatives aimed at improving corporate value over the medium- to long-term to shareholders, investors and all other stakeholders.

Kynapse User Guide

Kynapse SDK Release 3.8, published Friday, June 24, 2005. Copyright © 2000-2005 Kynogon S.A. All rights reserved. Kynapse powered by Kynogon SDK.

Contents: Kynapse User Guide; Introduction; Getting started; What is Kynapse?; Purpose of Kynapse; Design philosophy; Kynapse specs; Using this documentation; Typographic conventions; Finding information; Programming Guide; Kynapse 3.7 to Kynapse 3.8 migration guide; Getting started with Kynapse; Fundamental principles; Behaviors; How behaviors are generated; Kynapse architecture; Architectural overview; Kynapse layout; Simulation engine integration; Main objects; AI engine; AI world; Entities and entity attributes; Actions and action attributes; Brains; Agents; Services; Teams; Pathfinding constraints; Pathfinding heuristics; Pathobjects; Main data structures; Resources data structures; PathData; Pathways; Configuration data structures; AI engine configuration parameters (CParamBlock); AI world definition; Entity definition; Core management; Time management; Exact time measurement; Deterministic time measurement; Time management sample code; Memory management; Object management; Data management; Initializing, updating and terminating Kynapse; Overview; Initializing Kynapse; Updating Kynapse; Terminating Kynapse; Kynapse coordinate system; Using Kynapse standard components; Creating and customizing Kynapse components; Configuring Kynapse; Generating pathdata; Common topics; Integration helpers; Tools; Reference; Glossary.

Kynapse User Guide: Welcome to the Kynapse 3.8 user guide (online LIMITED version)! New to Kynapse? If you're new to Kynapse, you should start by reading: • The introduction.

A Queer Game Informed by Research

University of Central Florida, STARS: Honors Undergraduate Theses, UCF Theses and Dissertations, 2021. Cares, Labors, and Dangers: A Queer Game Informed by Research, by Amy Schwinge, University of Central Florida. Part of the Game Design Commons, and the Lesbian, Gay, Bisexual, and Transgender Studies Commons. Find similar works at: https://stars.library.ucf.edu/honorstheses. University of Central Florida Libraries: http://library.ucf.edu. This Open Access thesis is brought to you for free and open access by the UCF Theses and Dissertations at STARS. It has been accepted for inclusion in Honors Undergraduate Theses by an authorized administrator of STARS.

Recommended Citation: Schwinge, Amy, "Cares, Labors, and Dangers: A Queer Game Informed by Research" (2021). Honors Undergraduate Theses. 1003. https://stars.library.ucf.edu/honorstheses/1003

A thesis submitted in partial fulfillment of the requirements for the Honors in the Major Program in Integrative General Studies in the College of Undergraduate Studies and in the Burnett Honors College at the University of Central Florida, Orlando, Florida, Spring 2021.

Abstract: Queerness as a quality has a permanent fluidity. Videogames as a medium are continually evolving and advancing. Thus, queer games have vast potential as an art form and research subject. While there is already a wealth of knowledge surrounding queer games, my contribution takes the form of both a research paper and a creative endeavor. I created a game by interpreting the queer elements present in games research. My game reflects the trends and qualities present in contemporary queer games, such as critiques of empathy and alternative game-making programs.

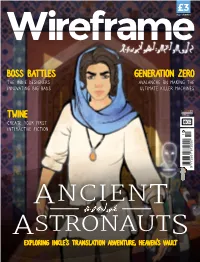

Ancient Astronauts: Exploring Inkle's Translation Adventure, Heaven's Vault

Issue 10, £3, all formats (wfmag.cc). Cover lines: Boss battles: the indie designers innovating big bads; Generation Zero: Avalanche on making the ultimate killer machines; Twine: create your first interactive fiction; Ancient Astronauts: exploring Inkle's translation adventure, Heaven's Vault. Subscribe today: 12 weeks for £12* (visit wfmag.cc/12weeks to order; *UK price, 6-issue introductory offer).

Let's talk about games and xenophobia, by Jessica Price. Jessica Price is a producer, writer, and manager with over a decade of experience ...

Playing video games doesn't cause otherwise non-violent people to go on shooting sprees. Let's get that out of the way right off the bat. Video games no more cause violence than action movies, violent TV shows, crime novels, The Ballad of Billy the Kid, The Iliad, or any other storytelling that contains violence. Your kid isn't going to go shoot up their school if you let them play Call of Duty. Unfortunately, efforts to demonise video games in the wake of tragedies, and the spectre of hostile crusaders ...

... their potency, I'd ask: how many people over the course of history have structured their lives, lived, named children, killed, and died for the story of a man who died on a cross? Our stories are powerful, and they can be extraordinarily powerful: the rational response to the economy, political environment, and looming climate catastrophe for millennials and those who come after us would probably be despair and helplessness. But we get involved, we vote, we act, and we keep going.

Gay Engines: Imagining a Queer Development Platform for Video Games

Gay Engines: Imagining a Queer Development Platform for Video Games. Cass Zegura. MCM 1990: Honors Thesis/Project in Modern Culture and Media, April 5, 2020.

Preface: The story of this project begins in the fall of 2017, at a crowded tapas bar in downtown Providence. My aunt and uncle, who live in Boston, had come down to visit and take me out to dinner. It was a perfectly ordinary night except in one regard: when I told my aunt and uncle about the classes I was taking that semester, a strange look crossed their faces. I thought the source of their confusion might be the computer science course I had mentioned, an upper-level design class called Independent Study in 2D Game Engines. I assumed that they, like I, had never heard of the term "game engine" before and thus did not know what it was. And as I launched into my explanation (which I won't repeat here; I've already written an entire chapter dedicated to this question), the confusion diminished, but only somewhat, and we soon changed subjects. On a phone call with my mom a few days later, I learned the true source of my aunt and uncle's befuddlement. In that noisy restaurant, the word "game" had transformed into the word "gay." They thought that I was taking a class on 2D gay engines. No wonder they were confused! I would've been too. What the heck is a gay engine anyway? Of course, I didn't know then that I was going to spend my senior year trying to answer that question.

CAPCOM INTEGRATED REPORT 2019 Code Number: 9697

Capcom Co., Ltd. Integrated Report 2019 (Code Number: 9697): Captivating a Connected World.

Advancing Our Global Brand Further: Monster Hunter World: Iceborne. Released in January 2018, Monster Hunter: World (MH:W, below) succeeded on two key elements of our growth strategy, namely globalization and shifting to digital. This propelled it to over 12.4 million units shipped worldwide, making it Capcom's biggest hit ever. We aim to grow the fanbase even further by continuing to advance these two elements with Monster Hunter World: Iceborne (MHW:I, below), which is scheduled for release during the fiscal year ending March 2020. For details, see p. 35 of the Integrated Report 2018.

The two key elements to our success:
1. Globalization: increasing global users by supporting 12 languages and launching titles simultaneously worldwide. MH:W raised the Monster Hunter series to global brand status by increasing the overseas sales ratio to roughly 60%, compared to its historical 25%. We plan to solidify our global user base with MHW:I by releasing it simultaneously around the globe and offering the game in 12 languages. [Chart: overseas share, approximately 25% and approximately 75%]
2. Digital shift: taking our main sales and marketing channels online. We expect the bulk of MHW:I sales to be digital. While we maximize revenue using the digital marketing data we have accumulated up to this point, we will analyze user purchasing trends to utilize in digital sales ... [Diagram: trial version, feedback, Capcom]

Avid Maestro | Virtual Set Setup Guide V2019.8

Avid® Maestro | Virtual Set Setup Guide, Version 2019.8. Legal Notices: Product specifications are subject to change without notice and do not represent a commitment on the part of Avid Technology, Inc. This product is subject to the terms and conditions of a software license agreement provided with the software. The product may only be used in accordance with the license agreement. This product may be protected by one or more U.S. and non-U.S. patents. Details are available at www.avid.com/patents. This document is protected under copyright law. An authorized licensee of may reproduce this publication for the licensee's own use in learning how to use the software. This document may not be reproduced or distributed, in whole or in part, for commercial purposes, such as selling copies of this document or providing support or educational services to others. This document is supplied as a guide for . Reasonable care has been taken in preparing the information it contains. However, this document may contain omissions, technical inaccuracies, or typographical errors. Avid Technology, Inc. does not accept responsibility of any kind for customers' losses due to the use of this document. Product specifications are subject to change without notice. Copyright © 2019 Avid Technology, Inc. and its licensors. All rights reserved. The following disclaimer is required by Epic Games, Inc.: Unreal® is a trademark or registered trademark of Epic Games, Inc. in the United States of America and elsewhere. Unreal® Engine, Copyright 1998 - 2018 Epic Games, Inc. All rights reserved. The following disclaimer is required by Apple Computer, Inc.: APPLE COMPUTER, INC.

Comparison Between Famous Game Engines and Eminent Games, by Prerna Mishra and Urmila Shrawankar

Special Issue on Artificial Intelligence Underpinning. Comparison between Famous Game Engines and Eminent Games. Prerna Mishra and Urmila Shrawankar, Department of Computer Science & Engineering, RTMNU, Nagpur (MS), India.

Abstract: Nowadays game engines are imperative for building 3D applications and games. This is because the engines appreciably reduce the resources needed for implementing obligatory but intricate utilities. This paper explains what a game engine is, the popular games developed with these engines, and their foremost elements. It portrays a number of special kinds of contemporary games developed with engines in terms of their aspects and procedures, and deliberates their stipulations with a comparison.

Keywords: Game Engine, Non-Cloud Gaming Platforms, Cloud Gaming Platforms, Popular Games, GPGPU

I. Introduction. In general, the notion of a game engine is very easy to comprehend. ...

... network quality of experience (QoE). While in conventional online gaming the user experience is generated on the client side, so the network does not have any impact on the performance, affecting the worth of Cloud Gaming [2, 4]. With the constituent of video gaming disappearing, people merely favour spending time in real-time mobile games that are compatible with an extensive array of platforms and operating systems. These engines take the gaming experience to an entirely new level, avoiding the poor graphics and quality experiences of similar joysticks from the past. This paper portrays a comparative study of a few diverse cloud and non-cloud platforms that are currently associated with gaming. As games are evolving, progressively delivering profounder and more abiding experiences for players, their prospective for psychosomatic ...