Integrated Computational Intelligence and Japanese Candlestick Method for Short-Term Financial Forecasting

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Japanese Candlestick Patterns

Presents Japanese Candlestick Patterns www.ForexMasterMethod.com

RISK DISCLOSURE STATEMENT / DISCLAIMER AGREEMENT

Trading any financial market involves risk. This course and all and any of its contents are neither a solicitation nor an offer to Buy/Sell any financial market. The contents of this course are for general information and educational purposes only (contents shall also mean the website http://www.forexmastermethod.com or any website the content is hosted on, and any email correspondence or newsletters or postings related to such website).

Every effort has been made to accurately represent this product and its potential. There is no guarantee that you will earn any money using the techniques, ideas and software in these materials. Examples in these materials are not to be interpreted as a promise or guarantee of earnings. Earning potential is entirely dependent on the person using our product, ideas and techniques. We do not purport this to be a “get rich scheme.”

Although every attempt has been made to assure accuracy, we do not give any express or implied warranty as to its accuracy. We do not accept any liability for error or omission. Examples are provided for illustrative purposes only and should not be construed as investment advice or strategy. No representation is being made that any account or trader will or is likely to achieve profits or losses similar to those discussed in this report. Past performance is not indicative of future results.

By purchasing the content, subscribing to our mailing list or using the website or contents of the website or materials provided herewith, you will be deemed to have accepted these terms and conditions in full as appear also on our site, as do our full earnings disclaimer and privacy policy and CFTC disclaimer and rule 4.41 to be read herewith.

Trading Guide

Tim Trush & Julie Lavrin
Introducing MAGIC FOREX CANDLESTICKS Trading Guide
Your guide to financial freedom.
© Tim Trush, Julie Lavrin, T&J Profit Club, 2017, All rights reserved
https://tinyurl.com/forexmp

Table Of Contents

Chapter I: Introduction to candlesticks
I.1. Understanding the candlestick chart. Most traders focus purely on technical indicators and they don't realize how valuable the original candlesticks are.
I.2. Candlestick patterns really work! When a candlestick reversal pattern appears, you should exit position before it's too late!

Chapter II: High profit candlestick patterns
II.1. Bullish reversal patterns. This category of candlestick patterns signals a potential trend reversal from bearish to bullish.
II.2. Bullish continuation patterns. Bullish continuation patterns signal that the established trend will continue.
II.3. Bearish reversal patterns. This category of candlestick patterns signals a potential trend reversal from bullish to bearish.
II.4. Bearish continuation patterns. This category of candlestick patterns signals that the established bearish trend will continue.

Chapter III: How to find out the market trend?
The Heiken Ashi indicator is a popular tool that helps to identify the trend. The disadvantage of this approach is that it does not include consolidation.

Chapter IV: Simple scalping strategy
IV.1. Wow, Lucky Spike! Everyone can learn it, use it, make money with it. There are traders who make a living trading just this pattern.
IV.2. Take a profit now! When to enter, where to place Stop Loss and when to exit.
IV.3. Examples. The next examples show you not only trend reversal signals, but the Lucky Spike concept helps you to identify when the correction is over and the main trend is going to recover.

Candlesticks, Fibonacci, and Chart Pattern Trading Tools

CANDLESTICKS, FIBONACCI, AND CHART PATTERN TRADING TOOLS
A SYNERGISTIC STRATEGY TO ENHANCE PROFITS AND REDUCE RISK
ROBERT FISCHER, JENS FISCHER
JOHN WILEY & SONS, INC.

Founded in 1807, John Wiley & Sons is the oldest independent publishing company in the United States. With offices in North America, Europe, Australia, and Asia, Wiley is globally committed to developing and marketing print and electronic products and services for our customers’ professional and personal knowledge and understanding.

The Wiley Trading series features books by traders who have survived the market’s ever-changing temperament and have prospered, some by reinventing systems, others by getting back to basics. Whether a novice trader, professional, or somewhere in-between, these books will provide the advice and strategies needed to prosper today and well into the future. For a list of available titles, visit our web site at www.WileyFinance.com.

Copyright © 2003 by Robert Fischer, Dr. Jens Fischer. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada. PHI-spirals, PHI-ellipse, PHI-channel, and www.fibotrader.com are registered trademarks and protected by U.S.

Candlestick Patterns

INTRODUCTION TO CANDLESTICK PATTERNS
Learning to Read Basic Candlestick Patterns
www.thinkmarkets.com

CANDLESTICKS TECHNICAL ANALYSIS

Contents
Risk Warning
What are Candlesticks?
Why do Candlesticks Work?
What are Candlesticks?
Doji
Hammer
Hanging Man
Shooting Star
Checkmate
Evening Star

© 2012, Bigtrends

© 2012, BigTrends

Congratulations! You are now enhancing your quest to become a successful trader. The tools and tips you will find in this technical analysis primer will be useful to the novice and the pro alike. While there is a wealth of information about trading available, BigTrends.com has put together this concise, yet powerful, compilation of the most meaningful analytical tools. You’ll learn to create and interpret the same data that we use every day to make trading recommendations!

This course is designed to be read in sequence, as each section builds upon knowledge you gained in the previous section. It’s also compact, with plenty of real life examples rather than a lot of theory. While some of these tools will be more useful than others, your goal is to find the ones that work best for you.

Foreword

Technical analysis. Those words have come to have much more meaning during the bear market of the early 2000s. As investors have come to realize that strong fundamental data does not always equate to a strong stock performance, the role of alternative methods of investment selection has grown. Technical analysis is one of those methods. Once only a curiosity to most, technical analysis is now becoming the preferred method for many. But technical analysis tools are like fireworks: dangerous if used improperly. That’s why this book is such a valuable tool to those who read it and properly grasp the concepts. The following pages are an introduction to many of our favorite analytical tools, and we hope that you will learn the ‘why’ as well as the ‘what’ behind each of the indicators.

How to Day Trade Using the ARMS Index

ARMS Index: Brief Overview

The Arms Index is a market breadth indicator used mostly by active traders to forecast intraday price movements. It was developed by Richard Arms in the 1960s and is commonly referred to as the TRIN, which stands for Trading Index. The TRIN, or Arms Index, gauges the strength of the market by taking into account the relationship between advancers, decliners, and their respective volume. In theory, if there is broad market strength, all boats rise. Conversely, if the broad market is tanking, stocks are likely going lower in the short term. In this article, you will learn key factors and strategies for how to use the Arms Index to help with your trading.

4 Quick Things to Know About the Arms Index

#1: Understanding the Arms Index (TRIN)

For all its complexity in terms of the number of calculations performed in real time, the Arms Index is pretty simple to understand visually. The indicator fluctuates around a neutral line at 1.0. Depending on where the TRIN is relative to that line, the market can be viewed as either overbought or oversold. In this regard, the Arms Index is similar to other oscillators in that it fluctuates around fixed values and provides overbought and oversold conditions.

One of the most important aspects of the TRIN is that it not only shows how many stocks are advancing and declining but also includes volume, which brings additional confidence to signals. Think about it: would you want to take a buy or sell signal if a stock is up 100% on 100 shares? I know this is an extreme example, but imagine if a few thinly traded stocks had the ability to wildly swing the values on the Arms Index.
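
The excerpt describes the inputs to the TRIN but not the calculation itself. A minimal sketch, assuming the commonly published definition (the advancers-to-decliners ratio divided by the advancing-to-declining volume ratio, with 1.0 as the neutral reading); the function name `arms_index` and the sample figures are hypothetical:

```python
def arms_index(advancers, decliners, adv_volume, dec_volume):
    """TRIN = (advancers / decliners) / (advancing volume / declining volume).

    Assumes the commonly published Arms Index formula; the excerpt above
    does not spell it out.
    """
    if min(advancers, decliners, adv_volume, dec_volume) <= 0:
        raise ValueError("all four inputs must be positive")
    return (advancers / decliners) / (adv_volume / dec_volume)


# Hypothetical session: twice as many advancers as decliners, and
# advancing volume three times declining volume.
trin = arms_index(2000, 1000, 600_000_000, 200_000_000)
print(round(trin, 3))
```

Under this definition, a reading below 1.0 means volume is concentrated in advancing stocks and a reading above 1.0 means it is concentrated in decliners, which is why a few thinly traded movers barely shift the value.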

Everything You Wanted to Know About Candlestick Charts Is an Unregulated Product Published by Thames Publishing Ltd

Everything you wanted to know about candlestick charts
by Mark Rose

• Read candlestick charts accurately
• Spot patterns quickly and easily
• Use that information to make profitable trading decisions

Contents
Chapter 1. What is a candlestick chart?
Chapter 2. Candlestick shapes: Anatomy of a candle, Doji, Marubozu
Chapter 3. Candlestick Patterns: Harami (bullish / bearish), Hammer / Hanging Man, Inverted Hammer / Shooting Star, Engulfing (bullish / bearish), Morning Star / Evening Star, Three White Soldiers / Three Black Crows, Piercing Line / Dark Cloud Cover
Chapter 4. The history of candlestick charts
Conclusion
Candlestick Cheat Sheet

Chapter 1. What is a candlestick chart?

Before I start to talk about candlestick patterns, I’d like to get right back to basics on candles: what they are, what they look like, and why we use them …

Drawing lines

When you look at a chart of market prices, you can usually choose from line charts or candlestick charts. A line chart will take its price levels from the opening or closing prices according to the timeframe you have selected. So, if you’re looking at a one-minute line chart of closing prices, it will plot the closing price for each one-minute period, something like this …

Line charts can be useful for looking at the “bigger picture” and finding long-term trends, but they simply cannot offer up the kind of information contained in a candlestick chart. Here is a one-minute candlestick chart for the same period …

At first glance, it might look a little confusing, but I can assure you that once you’re used to candlestick charts, you won’t look back.
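
To make the line-chart versus candlestick distinction concrete, here is a small sketch that buckets a price stream into one-minute bars. The tick format `(epoch_seconds, price)`, the function name `one_minute_bars`, and the sample data are assumptions made for illustration, not something the excerpt specifies:

```python
from itertools import groupby


def one_minute_bars(ticks):
    """Group (epoch_seconds, price) ticks, sorted by time, into
    (bar_start, open, high, low, close) tuples, one per minute."""
    bars = []
    for minute, group in groupby(ticks, key=lambda t: t[0] // 60):
        prices = [price for _, price in group]
        bars.append((minute * 60, prices[0], max(prices), min(prices), prices[-1]))
    return bars


# Hypothetical ticks covering two one-minute periods.
ticks = [(0, 10.0), (20, 10.4), (50, 10.1), (60, 10.1), (90, 9.7), (119, 9.9)]
bars = one_minute_bars(ticks)

# A closing-price line chart keeps only the last field of each bar;
# a candlestick chart draws all four prices.
line_chart = [close for *_, close in bars]
print(bars)
print(line_chart)
```

The line chart discards the open, high, and low of each period, which is the information a candlestick keeps.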

Bearish Belt Hold Line

How to Day Trade Using the Belt Hold Line Pattern

Belt Hold Line Definition

The belt hold line candlestick is basically the white marubozu and black marubozu within the context of a trend. The bullish belt hold candle opens on the low of the day and closes near the high. This candle presents itself in a downtrend and is an early sign that there is a potential bullish reversal. Conversely, the bearish belt hold candle opens at the high of the day and closes near the low. This candle presents itself in an uptrend and is an early sign that there is a potential bearish reversal. These candles are reliable reversal bars, but lose their importance if there are a number of belt hold lines in close proximity. Not to complicate the matter further, but the pattern can also act as a continuation pattern, which we will cover later in this post.

Bullish Belt Hold Line

The bullish belt hold line gaps down on the open of the bar, which represents the low of the bar, and then rallies higher. Shorts who entered positions on the open of the bar are now underwater, which adds to the buying frenzy.

You are now looking at a chart which shows the bullish belt hold line candlestick pattern. As you see, the trading day starts with a big bearish gap, which is the beginning of the pattern. The price action then continues with a big bullish candle. The candle has no lower wick and closes at its high. This price action confirms both a bullish marubozu and a bullish belt hold line pattern.
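
The single-candle shape described above can be sketched as a pair of checks. This is only an illustration: the function names and the tolerance parameters `open_tol` and `close_tol` (fractions of the bar's range) are hypothetical, and the sketch does not test for the preceding trend or the opening gap that the pattern also requires:

```python
def is_bullish_belt_hold(o, h, l, c, open_tol=0.01, close_tol=0.10):
    """Bar opens at (or within open_tol of) its low and closes near its high."""
    rng = h - l
    if rng <= 0:
        return False
    return c > o and (o - l) <= open_tol * rng and (h - c) <= close_tol * rng


def is_bearish_belt_hold(o, h, l, c, open_tol=0.01, close_tol=0.10):
    """Bar opens at (or within open_tol of) its high and closes near its low."""
    rng = h - l
    if rng <= 0:
        return False
    return c < o and (h - o) <= open_tol * rng and (c - l) <= close_tol * rng


# Hypothetical bars given as open, high, low, close.
print(is_bullish_belt_hold(100.0, 110.0, 100.0, 109.5))
print(is_bearish_belt_hold(110.0, 110.0, 100.0, 100.5))
```

Expressing the tolerances as a fraction of the bar's range keeps the check independent of the instrument's price scale; the specific values are a judgment call.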

Candlestick and Pivot Point Trading Triggers

Candlestick and Pivot Point Trading Triggers
Setups for Stock, Forex, and Futures Markets
JOHN L. PERSON
John Wiley & Sons, Inc.

Copyright © 2007 by John L. Person. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at http://www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials.

The Top 10 Best Candlestick Patterns

There are many candlestick patterns but only a few are actually worth knowing. Here are 10 candlestick patterns worth looking for. Remember that these patterns are only useful when you understand what is happening in each pattern. They must be combined with other forms of technical analysis to really be useful. For example, when you see one of these patterns on the daily chart, move down to the hourly chart. Does the hourly chart agree with your expectations on the daily chart? If so, then the odds of a reversal increase.

The following patterns are divided into two parts: bullish patterns and bearish patterns. These are reversal patterns that show up after a pullback (bullish patterns) or a rally (bearish patterns).

Bullish Candlestick Patterns

Engulfing: This is my all-time favorite candlestick pattern. This pattern consists of two candles. The first day is a narrow range candle that closes down for the day. The sellers are still in control of the stock but because it is a narrow range candle and volatility is low, the sellers are not very aggressive. The second day is a wide range candle that "engulfs" the body of the first candle and closes near the top of the range. The buyers have overwhelmed the sellers (demand is greater than supply). Buyers are ready to take control of this stock!

Hammer: As discussed on the previous page, the stock opened, then at some point the sellers took control of the stock and pushed it lower. By the end of the day, the buyers won and had enough strength to close the stock at the top of the range.
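
The two-candle engulfing description can be written as a short check. This is a sketch under stated assumptions: candles are `(open, high, low, close)` tuples, the function name and the `close_near_high` threshold are hypothetical, and the "narrow range" and "wide range" qualifiers are left out because the excerpt gives no numbers for them:

```python
def is_bullish_engulfing(prev, curr, close_near_high=0.25):
    """prev and curr are (open, high, low, close) tuples for consecutive days."""
    prev_o, _prev_h, _prev_l, prev_c = prev
    o, h, l, c = curr
    # First day closes down, second day closes up.
    if prev_c >= prev_o or c <= o:
        return False
    # Second body spans the whole of the first body.
    engulfs = o <= prev_c and c >= prev_o
    # Second day closes in the top part of its own range.
    rng = h - l
    closes_near_top = rng > 0 and (h - c) <= close_near_high * rng
    return engulfs and closes_near_top


# Hypothetical pair of daily candles.
day1 = (50.5, 50.8, 49.8, 50.0)
day2 = (49.9, 52.0, 49.7, 51.8)
print(is_bullish_engulfing(day1, day2))
```

A check like this only flags the shape; as the text says, it still needs confirmation from other analysis, such as the lower timeframe.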

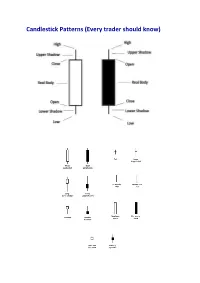

Candlestick Patterns (Every Trader Should Know)

A doji represents an equilibrium between supply and demand, a tug of war that neither the bulls nor bears are winning. In the case of an uptrend, the bulls have by definition won previous battles because prices have moved higher. Now, the outcome of the latest skirmish is in doubt. After a long downtrend, the opposite is true. The bears have been victorious in previous battles, forcing prices down. Now the bulls have found courage to buy, and the tide may be ready to turn.

Example: INET, doji star

A “long-legged” doji is a far more dramatic candle. It says that prices moved far higher on the day, but then profit taking kicked in. Typically, a very large upper shadow is left. A close below the midpoint of the candle shows a lot of weakness. Here’s an example of a long-legged doji.

Example: K, long-legged doji

A “gravestone” doji, as the name implies, is probably the most ominous candle of all. On that day, prices rallied but could not stand the altitude they achieved. By the end of the day, they came back and closed at the same level. Here’s an example of a gravestone doji:

A “dragonfly” doji depicts a day on which prices opened high, sold off, and then returned to the opening price. Dragonflies are fairly infrequent. When they do occur, however, they often resolve bullishly (provided the stock is not already overbought, as shown by Bollinger bands and indicators such as stochastic).

Example: DSGT

The hangman candle, so named because it looks like a person who has been executed with legs swinging beneath, always occurs after an extended uptrend. The hangman occurs because traders, seeing a sell-off in the shares, rush in to grab the stock at a bargain price.
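
The doji variants above differ mainly in where the small body sits within the day's range. A rough classifier, assuming conventional shape definitions rather than anything the excerpt quantifies; the function name and the thresholds `body_tol`, `shadow_tol`, and `long_leg` are hypothetical:

```python
def classify_doji(o, h, l, c, body_tol=0.1, shadow_tol=0.1, long_leg=0.3):
    """Return 'gravestone', 'dragonfly', 'long-legged', 'doji', or None.

    All thresholds are fractions of the high-low range and are
    illustrative assumptions.
    """
    rng = h - l
    if rng <= 0 or abs(c - o) > body_tol * rng:
        return None  # body too large (or no range): not a doji
    upper = h - max(o, c)  # upper shadow length
    lower = min(o, c) - l  # lower shadow length
    if lower <= shadow_tol * rng:
        return "gravestone"  # open and close sit at the low
    if upper <= shadow_tol * rng:
        return "dragonfly"  # open and close sit at the high
    if upper >= long_leg * rng and lower >= long_leg * rng:
        return "long-legged"  # long shadows on both sides
    return "doji"


# Hypothetical bars given as open, high, low, close.
print(classify_doji(100.0, 105.0, 100.0, 100.1))
print(classify_doji(105.0, 105.0, 100.0, 104.9))
print(classify_doji(102.5, 105.0, 100.0, 102.6))
```

The classifier looks at one bar in isolation; whether a doji matters still depends on the preceding trend, as the text explains.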

Candlestick Patterns

CANDLESTICK CHART PATTERNS
A GUIDE TO THE MOST COMMON CANDLESTICK CHART PATTERNS.
THE STOCK MARKET GEEKS

Candlestick Patterns

A candlestick shows a stock’s price movement over a defined time range. The candlestick displays four different price levels: the highest price reached in that time period, the lowest price, the opening price, and the closing price. Knowing how to read a candlestick is the first step in learning to understand and predict market movements. The next step, as a part of technical analysis, is piecing a series of candlesticks together and understanding what “story” is being told.

Candlestick patterns are formed by a series of contiguous candlesticks and are used to quickly interpret price information and form a thesis for future movement. They can be used to help identify key areas of supply/demand, otherwise known as support/resistance. We use candlestick patterns to identify the “story” between buyers and sellers and which side is stronger. Certain patterns help identify bullish sentiment, bearish sentiment, trend continuation, reversals, or indecision. Understanding the basic patterns is crucial in constructing a trade theory.

Below are 13 of the most common recurring patterns. These patterns can be found on all time frames and should be used accordingly. It is important to note that these patterns won’t always look picture perfect while actually trading. Nor is it important to remember the names of each of these patterns. What is important is understanding the story (of buyers and sellers) that is being told by each pattern and how to piece that into your trading plan.
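
The four price levels map naturally onto a small record type. The sketch below is one possible representation, not the guide's own; the class name `Candle` and its derived properties (`body`, `upper_shadow`, `lower_shadow`, `is_bullish`) are illustrative:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candle:
    """One candlestick: the four prices for a single time period."""
    open: float
    high: float
    low: float
    close: float

    @property
    def is_bullish(self) -> bool:
        # Buyers finished ahead if the close is above the open.
        return self.close > self.open

    @property
    def body(self) -> float:
        # Distance between open and close.
        return abs(self.close - self.open)

    @property
    def upper_shadow(self) -> float:
        return self.high - max(self.open, self.close)

    @property
    def lower_shadow(self) -> float:
        return min(self.open, self.close) - self.low


# Hypothetical candle: opened at 20, dipped to 19, peaked at 23, closed at 22.
candle = Candle(open=20.0, high=23.0, low=19.0, close=22.0)
print(candle.is_bullish, candle.body, candle.upper_shadow, candle.lower_shadow)
```

Pattern checks then reduce to comparisons between these properties across consecutive candles, which is one way of reading the "story" of buyers and sellers.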