HEVC OPTIMIZATION in MOBILE ENVIRONMENTS by Ray Garcia a Dissertation Submitted to the Faculty of the College of Engineering

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Versatile Video Coding – the Next-Generation Video Standard of the Joint Video Experts Team

31.07.2018 Versatile Video Coding – The Next-Generation Video Standard of the Joint Video Experts Team Mile High Video Workshop, Denver July 31, 2018 Gary J. Sullivan, JVET co-chair Acknowledgement: Presentation prepared with Jens-Rainer Ohm and Mathias Wien, Institute of Communication Engineering, RWTH Aachen University 1. Introduction Versatile Video Coding – The Next-Generation Video Standard of the Joint Video Experts Team 1 31.07.2018 Video coding standardization organisations • ISO/IEC MPEG = “Moving Picture Experts Group” (ISO/IEC JTC 1/SC 29/WG 11 = International Standardization Organization and International Electrotechnical Commission, Joint Technical Committee 1, Subcommittee 29, Working Group 11) • ITU-T VCEG = “Video Coding Experts Group” (ITU-T SG16/Q6 = International Telecommunications Union – Telecommunications Standardization Sector (ITU-T, a United Nations Organization, formerly CCITT), Study Group 16, Working Party 3, Question 6) • JVT = “Joint Video Team” collaborative team of MPEG & VCEG, responsible for developing AVC (discontinued in 2009) • JCT-VC = “Joint Collaborative Team on Video Coding” team of MPEG & VCEG , responsible for developing HEVC (established January 2010) • JVET = “Joint Video Experts Team” responsible for developing VVC (established Oct. 2015) – previously called “Joint Video Exploration Team” 3 Versatile Video Coding – The Next-Generation Video Standard of the Joint Video Experts Team Gary Sullivan | Jens-Rainer Ohm | Mathias Wien | July 31, 2018 History of international video coding standardization -

특집 : VVC(Versatile Video Coding) 표준기술

26 특집 : VVC(Versatile Video Coding) 표준기술 특집 VVC(Versatile Video Coding) 표준기술 Versatile Video Codec 의 Picture 분할 구조 및 부호화 단위 □ 남다윤, 한종기 / 세종대학교 요 약 터를 압축할 수 있는 고성능 영상 압축 기술의 필요 본 고에서는 최근 표준화가 진행되어 2020년에 표준화 완 성이 제기되었다. 고용량 비디오 신호를 압축하는 성을 앞두고 있는 VVC 의 표준 기술들 중에서 화면 정보를 코덱 기술은 그 시대 산업체의 요청에 따라 계속 발 구성하는 픽쳐의 부호화 단위 및 분할 구조에 대해 설명한 전해왔으며, 이에 따라 다양한 표준 코덱 기술들이 다. 본 고에서 설명되는 내용들은 VTM 6.0 을 기준으로 삼는 다. 세부적으로는 한 개의 픽처를 분할하는 구조들인 슬라 발표되었다. 현재 가장 활발히 쓰이고 있는 동영상 이스, 타일, 브릭, 서브픽처에 대해서 설명한 후, 이 구조들 압축 코덱들 중에는 H.264/AVC[1] 와 HEVC[2] 가 의 내부를 구성하는 CTU 및 CU 의 분할 구조에 대해서 설명 있다. H.264/AVC 기술은 2003년에 ITU-T 와 한다. MPEG 이 공동으로 표준화한 코덱 기술이고, 높은 압축률로 선명한 영상으로 복호화 할 수 있다. I. 서 론 H.264/AVC[1] 개발 후 10년 만인 2013년에 H.264 /AVC 보다 2배 이상의 부호화 효율을 지원하는 최근 동영상 촬영 장비, 디스플레이 장비와 같은 H.265/HEVC(High Efficiency Video Coding) 가 하드웨어가 발전되고, 동시에 5 G 통신망을 통해 실 개발되었다[2]. 그 후 2018년부터는 ITU-T VCEG 시간으로 고화질 영상의 전송이 가능해졌다. 따라 (Video Coding Experts Group) 와 ISO/IEC 서 4 K(UHD) 에서 더 나아가 8 K 고초화질 영상, 멀 MPEG(Moving Picture Experts Group) 은 티뷰 영상, VR 컨텐츠와 같은 동영상 서비스에 대 JVET(Joint Video Experts Team) 그룹을 만들어, 한 관심이 높아지고 있다. -

The Whole H.264 Standard

DRAFT ISO/IEC 14496-10 : 2002 (E) Joint Video Team (JVT) of ISO/IEC MPEG & ITU-T VCEG Document: JVT-G050 (ISO/IEC JTC1/SC29/WG11 and ITU-T SG16 Q.6) Filename: JVT-G050d35.doc 7th Meeting: Pattaya, Thailand, 7-14 March, 2003 Title: Draft ITU-T Recommendation and Final Draft International Standard of Joint Video Specification (ITU-T Rec. H.264 | ISO/IEC 14496-10 AVC) Status: Approved Output Document of JVT Purpose: Text Author(s) or Thomas Wiegand Tel: +49 - 30 - 31002 617 Contact(s): Heinrich Hertz Institute (FhG), Fax: +49 - 30 - 392 72 00 Einsteinufer 37, D-10587 Berlin, Email: [email protected] Germany Gary Sullivan Microsoft Corporation Tel: +1 (425) 703-5308 One Microsoft Way Fax: +1 (425) 706-7329 Redmond, WA 98052 USA Email: [email protected] Source: Editor _____________________________ This document is an output document to the March 2003 Pattaya meeting of the JVT. Title page to be provided by ITU-T | ISO/IEC DRAFT INTERNATIONAL STANDARD DRAFT ISO/IEC 14496-10 : 2002 (E) DRAFT ITU-T Rec. H.264 (2002 E) DRAFT ITU-T RECOMMENDATION TABLE OF CONTENTS Foreword ................................................................................................................................................................................xi 0 Introduction ..................................................................................................................................................................xii 0.1 Prologue................................................................................................................................................................ -

High Efficiency Video Coding (HEVC) and Its Extensions

2/13/2015 High Efficiency Video Coding (HEVC) and its Extensions Gary Sullivan Microsoft, Rapporteur Q6/16, co-chair JCT-VC, JCT-3V, MPEG Video 13 Febuary 2015 Major Video Coding Standards Mid 1990s: Mid 2000s: Mid 2010s: MPEG-2 H.264/MPEG-4 AVC HEVC • These are the joint work of the same two bodies ▫ ISO/IEC Moving Picture Experts Group (MPEG) ▫ ITU-T Video Coding Experts Group (VCEG) ▫ Most recently working on High Efficiency Video Coding (HEVC) as Joint Collaborative Team on Video Coding (JCT-VC) • HEVC version 1 was completed in January 2013 ▫ Standardized by ISO/IEC as ISO/IEC 23008-2 (MPEG-H Part 2) ▫ Standardized by ITU-T as H.265 Gary J. Sullivan 2015-02-13 1 2/13/2015 H.264/MPEG-4 Advanced Video Coding (AVC): The basic idea (2003) • Compress digital video content • Twice as much as you did before • With the same video quality, e.g. as MPEG-2 or H.263 • Or get higher quality with the same number of bits (or a combo) • Example: higher quality may mean higher resolution, e.g. HD • And better adaptation to applications and network environments • Unfortunately, with substantially higher computing requirements and memory requirements for both encoders and decoders Gary J. Sullivan 2015-02-13 High Efficiency Video Coding (HEVC): The basic idea (2013) • Compress digital video content • Twice as much as you did before • With the same video quality, e.g. as H.264 / MPEG-4 AVC • Or get higher quality with the same number of bits (or a combo) • Example: higher quality may mean higher resolution, e.g. -

The Arrival of the High Efficiency Video Coding Standard (HEVC)

3/21/2013 H.264/MPEG-4 Advanced Video The Arrival of the High Efficiency Coding (AVC): The basic idea (2003) Video Coding Standard (HEVC) • Compress digital video content • Twice as much as you did before (Rec. ITU-T H.265 | ISO/IEC 23008-2 | MPEG-H Part 2) • With the same video quality, e.g. as MPEG-2 or H.263 • Or get higher quality with the same number of bits (or a combo) • Example: higher quality may mean higher resolution, e.g. HD Gary J. Sullivan • And better adaptation to applications and network environments Co-Chair JCT-VC, Co-Chair JCT-3V, Chair VCEG, Co-Chair MPEG Video, • Unfortunately, with substantially higher computing requirements Video & Image Technology Architect, Microsoft <[email protected]> and memory requirements for both encoders and decoders 20 March 2013 Presentation for Data Compression Conference (DCC 2013) HEVC presentation for DCC 2013 Gary J. Sullivan 2013-03-20 High Efficiency Video Coding Chronology of International Video Coding Standards (HEVC): The basic idea (2013) MPEG-1 • Compress digital video content MPEG-4 Visual (1993) (1998-2001+) • Twice as much as you did before ISO/IEC • With the same video quality, e.g. as AVC H.262 / MPEG-2 H.264 / MPEG-4 • Or get higher quality with the same number of bits (or a combo) (1994/95- AVC T - 1998+) (2003-2009+) • Example: higher quality may mean higher resolution, e.g. Ultra-HD H.261 (1990+) • And better adaptation to applications and network environments ITU H.263 H.120 (1995-2000+) • Unfortunately, with substantially higher computing requirements (1984- and memory requirements for both encoders and decoders 1988) ▫ But this time the decoder is not so tough (~1.5x) ▫ And the memory increase is not so much 1990 1992 1994 1996 1998 2000 2002 2004 ▫ And the parallelism opportunities are better (throughout) HEVC presentation for DCC 2013 Gary J. -

CSR-T Volume 9 #8, November-December 1998

COMMUNICATIONS STANDARDS REVIEW TELECOMMUNICATIONS Volume 9, Number 8 November-December 1998 In This Issue The following reports of recent standards meetings represent the view of the reporter and are not official, authorized minutes of the meetings. ITU-T SG16, Multimedia, September 14 – 25, 1998, Geneva, Switzerland....................................................2 WP 1 Report..........................................................................................................................5 WP 2 Report..........................................................................................................................5 WP 3 Report..........................................................................................................................6 Q1/16 WP2, Audiovisual/Multimedia Services................................................................................6 Q2/16 WP2, Interactive Multimedia Information Retrieval Services (MIRS)...........................................7 Q3/16 WP2, Data Protocols for Multimedia Conferencing.................................................................7 Q4/16 WP1, Modems for Switched Telephone Network and Telephone Type Leased Lines..........................9 Q5/16 WP1, ISDN terminal adapters, and interworking of DTEs on ISDNs with DTEs on other networks........10 Q6/16 WP1, DTE-DCE Interchange Circuits...................................................................................11 Q7/16 WP1, DTE-DCE Interface Protocols.....................................................................................12 -

Video Coding: Recent Developments for HEVC and Future Trends

4/8/2016 Video Coding: Recent Developments for HEVC and Future Trends Initial overview section by Gary Sullivan Video Architect, Microsoft Corporate Standards Group 30 March 2016 Presentation for Data Compression Conference, Snowbird, Utah Major Video Coding Standards Mid 1990s: Mid 2000s: Mid 2010s: MPEG-2 H.264/MPEG-4 AVC HEVC • These are the joint work of the same two bodies ▫ ISO/IEC Moving Picture Experts Group (MPEG) ▫ ITU-T Video Coding Experts Group (VCEG) ▫ Most recently working on High Efficiency Video Coding (HEVC) as Joint Collaborative Team on Video Coding (JCT-VC) • HEVC version 1 was completed in January 2013 ▫ Standardized by ISO/IEC as ISO/IEC 23008-2 (MPEG-H Part 2) ▫ Standardized by ITU-T as H.265 ▫ 3 profiles: Main, 10 bit, and still picture Gary J. Sullivan 2016-03-30 1 4/8/2016 Project Timeline and Milestones First Test Model under First Working Draft and ISO/IEC CD ISO/IEC DIS ISO/IEC FDIS & ITU-T Consent Consideration (TMuC) Test Model (Draft 1 / HM1) (Draft 6 / HM6) (Draft 8 / HM8) (Draft 10 / HM10) 1200 1000 800 600 400 Participants 200 Documents 0 Gary J. Sullivan 2016-03-30 4 HEVC Block Diagram Input General Coder General Video Control Control Signal Data Transform, - Scaling & Quantized Quantization Scaling & Transform Split into CTUs Inverse Coefficients Transform Coded Header Bitstream Intra Prediction Formatting & CABAC Intra-Picture Data Estimation Filter Control Analysis Filter Control Intra-Picture Data Prediction Deblocking & Motion SAO Filters Motion Data Intra/Inter Compensation Decoder Selection Output Motion Decoded Video Estimation Picture Signal Buffer Gary J. -

Visual Coding"

INTERNATIONAL TELECOMMUNICATION UNION STUDY GROUP 16 TELECOMMUNICATION TD 215 R1 (WP3/16) STANDARDIZATION SECTOR STUDY PERIOD 2013-2016 English only Original: English Question(s): 6/16 Geneva, 12-23 October 2015 TD Source: Rapporteur Q6/16 Title: Report of Question 6/16 "Visual coding" CONTENTS 1 RESULTS ................................................................................................................................................................ 2 1.1 SUMMARY ............................................................................................................................................................. 2 1.1.1 Recommendations for Approval .................................................................................................................. 2 1.1.2 Recommendations proposed for Consent in accordance with Rec. A.8. ..................................................... 2 1.1.3 Other documents for Approval .................................................................................................................... 2 1.1.4 Question 6/16 summary .............................................................................................................................. 2 1.2 QUESTION 6/16-VISUAL CODING ........................................................................................................................... 3 1.2.1 Documentation ............................................................................................................................................ 3 1.2.2 Report -

Versatile Video Coding (VVC) – the New Standard and Its Capabilities

19/11/2020 Versatile Video Coding (VVC) – The New Standard and its Capabilities Gary Sullivan Co-chair, ITU-T/ISO/IEC Joint Video Experts Team (JVET) Microsoft Research based primarily on slides prepared by Benjamin Bross, Fraunhofer Heinrich Hertz Institute, Berlin 1 Versatile Video Coding (VVC) Joint ITU-T (VCEG) and ISO/IEC (MPEG) project, completed July 2020 Rec. ITU-T H.266 & ISO/IEC 23090-3 Coding Efficiency Versatility Rendered ”screen“ content coding ~50% saving over H.265/HEVC Adaptive resolution changes Independent sub-pictures emph. HD / UHD / 8K resolutions Tiles, slices and wavefronts emph. HDR / WCG Layered multistream & scalability emph. 10 bit Bitstream extraction and merging 360° video projection handling Random access & splicing features Gradual decoder refresh 2 2 1 19/11/2020 History of Video Coding Standards Quality VVC HEVC AVC MPEG-2 / PSNR H.266 / MPEG-I H.265 / MPEG-H H.264 / MPEG-4 H.262 (dB) 41 (2020) (2013) (2003) (1995) 39 37 35 BasketballDrive (1920 x 1080, 50Hz) 33 31 bit rate 0 5 10 15 20 25 30 35 40 (Mbit/s) 3 3 VVC – Coding Efficiency Jevons Paradox "The efficiency with which a resource is used tends to increase (rather than decrease) the rate of consumption of that resource." Video is >80% of internet traffic (and rising) 4 4 2 19/11/2020 Video Coding Consumption 2018–2020 82% 12X 7X of all Internet increase in AR/VR increase in video traffic is now video traffic monitoring traffic * Cisco Visual Networking Index: Forecast and Trends, 2018–2022 5 5 VVC – Timeline 2015 Oct. -

The H.264/MPEG-4 Advanced Video Coding (AVC) Standard

International Telecommunication Union TheThe H.264/MPEGH.264/MPEG--44 AdvancedAdvanced VideoVideo CodingCoding (AVC)(AVC) StandardStandard Gary J. Sullivan, Ph.D. ITU-T VCEG Rapporteur | Chair ISO/IEC MPEG Video Rapporteur | Co-Chair ITU/ISO/IEC JVT Rapporteur | Co-Chair July 2005 ITU- T VICA Workshop 22-23 July 2005, ITU Headquarter, Geneva TheThe AdvancedAdvanced VideoVideo CodingCoding ProjectProject AVCAVC == ITUITU--TT H.264H.264 // MPEGMPEG--44 partpart 1010 § History: ITU-T Q.6/SG16 (VCEG - Video Coding Experts Group) “H.26L” standardization activity (where the “L” stood for “long-term”) § August 1999: 1st test model (TML-1) § July 2001: MPEG open call for technology: H.26L demo’ed § December 2001: Formation of the Joint Video Team (JVT) between VCEG and MPEG to finalize H.26L as a new joint project (similar to MPEG-2/H.262) § July 2002: Final Committee Draft status in MPEG § Dec ‘02 technical freeze, FCD ballot approved § May ’03 completed in both orgs § July ’04 Fidelity Range Extensions (FRExt) completed § January ’05 Scalable Video Coding launched H.264/AVC July ‘05 Gary Sullivan 1 AVCAVC ObjectivesObjectives § Primary technical objectives: • Significant improvement in coding efficiency • High loss/error robustness • “Network Friendliness” (carry it well on MPEG-2 or RTP or H.32x or in MPEG-4 file format or MPEG-4 systems or …) • Low latency capability (better quality for higher latency) • Exact match decoding § Additional version 2 objectives (in FRExt): • Professional applications (more than 8 bits per sample, 4:4:4 color -

Overview of International Video Coding Standards (Preceding H

International Telecommunication Union OverviewOverview ofof InternationalInternational VideoVideo CodingCoding StandardsStandards (preceding(preceding H.264/AVC)H.264/AVC) Gary J. Sullivan, Ph.D. ITU-T VCEG Rapporteur | Chair ISO/IEC MPEG Video Rapporteur | Co-Chair ITU/ISO/IEC JVT Rapporteur | Co-Chair July 2005 ITU- T VICA Workshop 22-23 July 2005, ITU Headquarter, Geneva VideoVideo CodingCoding StandardizationStandardization OrganizationsOrganizations § Two organizations have dominated video compression standardization: • ITU-T Video Coding Experts Group (VCEG) International Telecommunications Union – Telecommunications Standardization Sector (ITU-T, a United Nations Organization, formerly CCITT), Study Group 16, Question 6 • ISO/IEC Moving Picture Experts Group (MPEG) International Standardization Organization and International Electrotechnical Commission, Joint Technical Committee Number 1, Subcommittee 29, Working Group 11 Video Standards Overview July ‘05 Gary Sullivan 1 Chronology of International Video Coding Standards H.120H.120 (1984(1984--1988)1988) H.263H.263 (1995-2000+) T (1995-2000+) - H.261H.261 (1990+)(1990+) H.262 / H.264 / ITU H.262 / H.264 / MPEGMPEG--22 MPEGMPEG--44 (1994/95(1994/95--1998+)1998+) AVCAVC (2003(2003--2006)2006) MPEGMPEG--11 MPEGMPEG--44 (1993) (1993) VisualVisual ISO/IEC (1998(1998--2001+)2001+) 1990 1992 1994 1996 1998 2000 2002 2004 Video Standards Overview July ‘05 Gary Sullivan 2 TheThe ScopeScope ofof PicturePicture andand VideoVideo CodingCoding StandardizationStandardization § Only the Syntax -

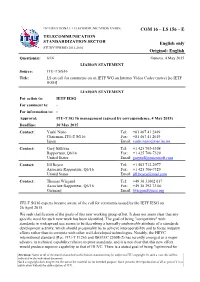

Draft LS on Call for Comments on an IETF WG on Internet Video Codec

INTERNATIONAL TELECOMMUNICATION UNION COM 16 – LS 156 – E TELECOMMUNICATION STANDARDIZATION SECTOR English only STUDY PERIOD 2013-2016 Original: English Question(s): 6/16 Geneva, 4 May 2015 LIAISON STATEMENT Source: ITU-T SG16 Title: LS on call for comments on an IETF WG on Internet Video Codec (netvc) [to IETF IESG] LIAISON STATEMENT For action to: IETF IESG For comment to: - For information to: - Approval: ITU-T SG 16 management (agreed by correspondence, 4 May 2015) Deadline: 30 May 2015 Contact: Yushi Naito Tel: +81 467 41 2449 Chairman, ITU-T SG16 Fax: +81 467 41 2019 Japan Email: [email protected] Contact: Gary Sullivan Tel: +1 425 703-5308 Rapporteur, Q6/16 Fax: +1 425 706-7329 United States Email: [email protected] Contact: Jill Boyce Tel: +1 503 712-2977 Associate Rapporteur, Q6/16 Fax: +1 425 706-7329 United States Email: [email protected] Contact: Thomas Wiegand Tel: +49 30 31002 617 Associate Rapporteur, Q6/16 Fax: +49 30 392 72 00 Germany Email: [email protected] ITU-T SG16 experts became aware of the call for comments issued by the IETF IESG on 26 April 2015. We seek clarification of the goals of this new working group effort. It does not seem clear that any specific need for such new work has been identified. The goal of being "competitive" with standards in widespread use seems to be describing a basically undesirable attribute of a standards development activity, which should presumably be to achieve interoperability and to focus industry efforts rather than to compete with other well-developed technologies.