News Sentiment Impact Analysis (NSIA) Framework

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

GVH Közleménye a Kötelezettségvállalásokról

Eötvös Loránd Tudományegyetem Állam- és Jogtudományi Kar Kötelezettségvállaláson alapuló döntések az európai uniós és magyar versenyjogban Konzulens: Dr. Király Miklós Készítette: Kováts Surd Budapest 2019 Tartalom 1. Bevezetés ........................................................................................................................................ 7 1.1 A kötelezettségvállalások ........................................................................................................ 7 1.2 Hipotézisek .............................................................................................................................. 7 1.3 Módszertan ............................................................................................................................. 8 1.4 A dolgozat szerkezete ............................................................................................................. 9 2. A kötelezettségvállalások intézménye .......................................................................................... 11 2.1 A kötelezettségvállalások definíciója .................................................................................... 11 2.2 A kötelezettségvállalások sajátosságai ................................................................................. 12 2.2.1 A kötelezettségvállalásos eljárások tartalmi sajátosságai ............................................ 13 2.2.2 A kötelezettségvállalások jellemző eljárási elemei ....................................................... 15 2.3 A kötelezettségvállalások -

Thomson Reuters Spreadsheet Link User Guide

THOMSON REUTERS SPREADSHEET LINK USER GUIDE MN-212 Date of issue: 13 July 2011 Legal Information © Thomson Reuters 2011. All Rights Reserved. Thomson Reuters disclaims any and all liability arising from the use of this document and does not guarantee that any information contained herein is accurate or complete. This document contains information proprietary to Thomson Reuters and may not be reproduced, transmitted, or distributed in whole or part without the express written permission of Thomson Reuters. Contents Contents About this Document ...................................................................................................................................... 1 Intended Readership ................................................................................................................................. 1 In this Document........................................................................................................................................ 1 Feedback ................................................................................................................................................... 1 Chapter 1 Thomson Reuters Spreadsheet Link .......................................................................................... 2 Chapter 2 Template Library ........................................................................................................................ 3 View Templates (Template Library) .............................................................................................................................................. -

Securities Identifiers Capital Markets (From 4 Day Workshop)

Securities Identifiers Capital Markets (From 4 day workshop) Khader Shaik 1 Financial Markets • A marketplace where financial products are bought and sold • Financial Products – Securities • Stocks, bonds – Currencies – Derivatives • Options, Futures, Forwards • Equity derivatives, credit derivatives, commodity derivatives etc 2 Trading – Security IDs • Some identifier is used to locate all trading instrument • There are multiple types of identifiers are in use • Most commonly used are – TICKER / SYMBOL – CUSIP – ISIN – SEDOL – RIC Code 3 TICKER • Ticker is very commonly used identifier • Ticker has other alternate terms in use – Just Ticker – Ticker Symbol – Just Symbol – Trading Symbol • Ticker Term is originated from the sound of ticker tape machine that was used in early days of stock exchanges • Ticker is heavily used for largely traded securities like Stocks, Options and Futures 4 Stock Symbol • Stock Symbol is made up of two parts – Root Symbol – Special Code (not used always) • Root Symbol – All publicly trading stocks are assigned a unique symbol – GE, MSFT, IBM, GM, A, C – NYSE stocks ususally use 3 characters – NASDAQ stocks are usually use 4 characters • Special Code – Code attached to root symbol to identiy additional information of the company of the stock 5 Common Special Codes A – Class A Shares B – Class B Shares E – Delinquent SEC filing F – Foreign Security G – First Convertible bond H – Second Convertible I – Third Convertible bond bond W – Warrant Q – In bankruptcy Y – ADR X – Mutual Fund NM – Nasdaq National PK – -

Analysis of News Sentiment and Its Application to Finance

ANALYSIS OF NEWS SENTIMENT AND ITS APPLICATION TO FINANCE By Xiang Yu A thesis submitted for the degree of Doctor of Philosophy School of Information Systems, Computing and Mathematics, Brunel University 6 May 2014 Dedication: To the loving memory of my mother. Abstract We report our investigation of how news stories influence the behaviour of tradable financial assets, in particular, equities. We consider the established methods of turning news events into a quantifiable measure and explore the models which connect these measures to financial decision making and risk control. The study of our thesis is built around two practical, as well as, research problems which are determining trading strategies and quantifying trading risk. We have constructed a new measure which takes into consideration (i) the volume of news and (ii) the decaying effect of news sentiment. In this way we derive the impact of aggregated news events for a given asset; we have defined this as the impact score. We also characterise the behaviour of assets using three parameters, which are return, volatility and liquidity, and construct predictive models which incorporate impact scores. The derivation of the impact measure and the characterisation of asset behaviour by introducing liquidity are two innovations reported in this thesis and are claimed to be contributions to knowledge. The impact of news on asset behaviour is explored using two sets of predictive models: the univariate models and the multivariate models. In our univariate predictive models, a universe of 53 assets were considered in order to justify the relationship of news and assets across 9 different sectors. -

Thomson Reuters Elektron Edge V2.3.0

Thomson Reuters Elektron Edge v2.3.0 Programmer’s Guide Version: 1.0 07 May 2013 © Thomson Reuters 2013. All Rights Reserved. Thomson Reuters, by publishing this document, does not guarantee that any information contained herein is and will remain accurate or that use of the information will ensure correct and faultless operation of the relevant service or equipment. Thomson Reuters, its agents and employees, shall not be held liable to or through any user for any loss or damage whatsoever resulting from reliance on the information contained herein. This document contains information proprietary to Thomson Reuters and may not be reproduced, disclosed, or used in whole or part without the express written permission of Thomson Reuters. Any Software, including but not limited to, the code, screen, structure, sequence, and organization thereof, and Documentation are protected by national copyright laws and international treaty provisions. This manual is subject to U.S. and other national export regulations. Nothing in this document is intended, nor does it, alter the legal obligations, responsibilities or relationship between yourself and Thomson Reuters as set out in the contract existing between us. Thomson Reuters Elektron Edge Programmer’s Guide ii 1 Table of Contents 1 Table of Contents ............................................................................................................................ iii 2 List of Figures.................................................................................................................................. -

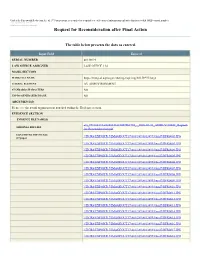

Request for Reconsideration After Final Action

Under the Paperwork Reduction Act of 1995 no persons are required to respond to a collection of information unless it displays a valid OMB control number. PTO Form 1960 (Rev 10/2011) OMB No. 0651-0050 (Exp 09/20/2020) Request for Reconsideration after Final Action The table below presents the data as entered. Input Field Entered SERIAL NUMBER 88138993 LAW OFFICE ASSIGNED LAW OFFICE 114 MARK SECTION MARK FILE NAME https://tmng-al.uspto.gov/resting2/api/img/88138993/large LITERAL ELEMENT AV AEROVIRONMENT STANDARD CHARACTERS NO USPTO-GENERATED IMAGE NO ARGUMENT(S) Please see the actual argument text attached within the Evidence section. EVIDENCE SECTION EVIDENCE FILE NAME(S) evi_971188172-20200131221057561772_._2020-01-31_AERO-VI1804T_Request- ORIGINAL PDF FILE for-Reconsideration.pdf CONVERTED PDF FILE(S) \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0002.JPG (27 pages) \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0003.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0004.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0005.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0006.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0007.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0008.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0009.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0010.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0011.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0012.JPG \\TICRS\EXPORT17\IMAGEOUT17\881\389\88138993\xml7\RFR0013.JPG -

Text and Context: Language Analytics in Finance

Text and Context: Language Analytics in Finance Text and Context: Language Analytics in Finance Sanjiv Ranjan Das Santa Clara University Leavey School of Business [email protected] Boston — Delft Foundations and Trends R in Finance Published, sold and distributed by: now Publishers Inc. PO Box 1024 Hanover, MA 02339 United States Tel. +1-781-985-4510 www.nowpublishers.com [email protected] Outside North America: now Publishers Inc. PO Box 179 2600 AD Delft The Netherlands Tel. +31-6-51115274 The preferred citation for this publication is S. R. Das. Text and Context: Language Analytics in Finance. Foundations and Trends R in Finance, vol. 8, no. 3, pp. 145–260, 2014. R This Foundations and Trends issue was typeset in LATEX using a class file designed by Neal Parikh. Printed on acid-free paper. ISBN: 978-1-60198-910-9 c 2014 S. R. Das All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, mechanical, photocopying, recording or otherwise, without prior written permission of the publishers. Photocopying. In the USA: This journal is registered at the Copyright Clearance Cen- ter, Inc., 222 Rosewood Drive, Danvers, MA 01923. Authorization to photocopy items for internal or personal use, or the internal or personal use of specific clients, is granted by now Publishers Inc for users registered with the Copyright Clearance Center (CCC). The ‘services’ for users can be found on the internet at: www.copyright.com For those organizations that have been granted a photocopy license, a separate system of payment has been arranged. -

Sentiment Analysis of News Headlines for Stock Trend Prediction

© 2020 IJRTI | Volume 5, Issue 12 | ISSN: 2456-3315 Sentiment Analysis of News Headlines For Stock Trend Prediction Oshi Gupta Student of Bachelor of Technology Department of Computer Science and Technology Symbiosis University of Applied Sciences, Indore, India Abstract: Stock market is the bone of fast emerging economies. Stock market data analysis needs the help of artificial intelligence and data mining techniques. The volatility of stock prices depends on gains or losses of certain companies. News articles are one of the most important factors which influence the stock market. This project is about taking non quantifiable data such as financial news articles about a company and predicting its future stock trend with news sentiment classification. Assuming that news articles have impact on stock market, this is an attempt to study relationship between news and stock trend. Index Terms: Text Mining, Sentiment analysis, Naive Bayes, Random Forest, Stock trends. 1. INTRODUCTION News has always been an important source of information to build perception of market investments. As the volumes of news and news sources are increasing rapidly, it’s becoming impossible for an investor or even a group of investors to find out relevant news from the big chunk of news available. But, it’s important to make a rational choice of investing timely in order to make maximum profit out of the investment plan. And having this limitation, computation comes into the place which automatically extracts news from all possible news sources, filter and aggregate relevant ones, analyse them in order to give them real time sentiments. Stock market is the bone of fast emerging economies such as India. -

Its Impact on Liquidity and Trading Review Authors

DRAFT DRAFT DRAFT Automated Analysis of News to Compute Market Sentiment: Its Impact on Liquidity and Trading Review Authors: 1 DRAFT DRAFT DRAFT CONTENTS 0. Abstract 1. Introduction 2. Consideration of asset classes for automated trading 3. Market micro structure and liquidity 4. Categorisation of trading activities 5. Automated news analysis and market sentiment 6. News analytics and market sentiment: impact on liquidity 7. News analytics and its application to trading 8. Discussions 9. References 2 DRAFT DRAFT DRAFT 0. Abstract Computer trading in financial markets is a rapidly developing field with a growing number of applications. Automated analysis of news and computation of market sentiment is a related applied research topic which impinges on the methods and models deployed in the former. In this review we have first explored the asset classes which are best suited for computer trading. We critically analyse the role of different classes of traders and categorise alternative types of automated trading. We present in a summary form the essential aspects of market microstructure and the process of price formation as this takes place in trading. We introduce alternative measures of liquidity which have been developed in the context of bid-ask of price quotation and explore its connection to market microstructure and trading. We review the technology and the prevalent methods for news sentiment analysis whereby qualitative textual news data is turned into market sentiment. The impact of news on liquidity and automated trading is critically examined. Finally we explore the interaction between manual and automated trading. 3 DRAFT DRAFT DRAFT 1. Introduction This report is prepared as a driver review study for the Foresight project: The Future of Computer Trading in Financial Markets. -

Download File

From Language to the Real World: Entity-Driven Text Analytics Boyi Xie Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2015 c 2015 Boyi Xie All Rights Reserved ABSTRACT From Language to the Real World: Entity-Driven Text Analytics Boyi Xie This study focuses on the modeling of the underlying structured semantic information in natural language text to predict real world phenomena. The thesis of this work is that a general and uniform representation of linguistic information that combines multiple levels, such as semantic frames and roles, syntactic dependency structure, lexical items and their sentiment values, can support challeng- ing classification tasks for NLP problems. The hypothesis behind this work is that it is possible to generate a document representation using more complex data structures, such as trees and graphs, to distinguish the depicted scenarios and semantic roles of the entity mentions in text, which can facil- itate text mining tasks by exploiting the deeper semantic information. The testbed for the document representation is entity-driven text analytics, a recent area of active research where large collection of documents are analyzed to study and make predictions about real world outcomes of the entity mentions in text, with the hypothesis that the prediction will be more successful if the representation can capture not only the actual words and grammatical structures but also the underlying semantic generalizations encoded in frame semantics, and the dependency relations among frames and words. The main contribution of this study includes the demonstration of the benefits of frame semantic features and how to use them in document representation. -

How Conflict and Corruption Impact Financial Markets

January 2018 Risk Systems That Read® Northfield Information Services Research Dan diBartolomeo After a series of research projects going back to 1997, Northfield has commercially introduced risk models augmented with quantified news flows for investors. News conditioned versions of our “near horizon” models became available to Northfield clients in January 2018. We believe this is the biggest step forward in risk modeling for asset management since the creation of the multi-factor risk model in the 1970s. News and Conditional Risk Models Almost all available risk models are “unconditional.” They are based on a sample of past history that is deemed relevant, possibly giving more weight to recent observations, or assuming a simple trend in volatility (e.g. GARCH). We recently surveyed industry practice and found models based on sample periods ranging from as short as sixty trading days to more than twenty years. Once the sample period is determined, the heroic assumption is made that the future will be like the past. This process omits everything we know about the present, and how the present is different from the past average conditions of the sample period. Using the information about the present to adjust the risk estimates has been standard in some Northfield models since 1997 and in all models since 2009. For our purposes, “News” is the set of information coming to investors that tell us how the present is different from the past. This definition implies that routine information affirming the “status quo” is not news irrespective of how it is delivered. We also recognize the extensive literature showing that investors respond differently to “announcements” (time of information release anticipated) than to “news” where both the content and timing are a surprise. -

Sache COMP/M.4726 – Thomson Corporation/Reuters Group

DE Die Veröffentlichung dieses Textes dient lediglich der Information. Eine Zusammenfassung dieser Entscheidung wird in allen Amtssprachen der Gemeinschaft im Amtsblatt der Europäischen Union veröffentlicht. Sache COMP/M.4726 – Thomson Corporation/Reuters Group Nur der englische Text ist verbindlich. VERORDNUNG (EG) Nr. 139/2004 FUSIONSKONTROLLVERFAHREN Artikel 8 Absatz 2 Datum: 19.2.2008 KOMMISSION DER EUROPÄISCHEN GEMEINSCHAFTEN Brüssel, den 19.2.2008 SG-Greffe(2008) D/200711 NICHTVERTRAULICHE FASSUNG ENTSCHEIDUNG DER KOMMISSION vom 19.2.2008 zur Feststellung der Vereinbarkeit eines Zusammenschlusses mit dem Gemeinsamen Markt und dem EWR-Abkommen (Sache Nr. COMP/M.4726 – Thomson Corporation/ Reuters Group) Entscheidung der Kommission vom 19.2.2008 zur Feststellung der Vereinbarkeit eines Zusammenschlusses mit dem Gemeinsamen Markt und dem EWR-Abkommen (Sache COMP/M.4726 – Thomson Corporation/ Reuters Group) (Nur der englische Text ist verbindlich) (Text von Bedeutung für den EWR) DIE KOMMISSION DER EUROPÄISCHEN GEMEINSCHAFTEN – gestützt auf den Vertrag zur Gründung der Europäischen Gemeinschaft, gestützt auf das Abkommen über den europäischen Wirtschaftsraum, insbesondere auf Artikel 57, gestützt auf die Verordnung (EG) Nr. 139/2004 des Rates vom 20. Januar 2004 über die Kontrolle von Unternehmenszusammenschlüssen1, insbesondere Artikel 8 Absatz 2, gestützt auf die Entscheidung der Kommission vom 8. Oktober 2007 zur Einleitung eines Verfahrens in dieser Sache, gestützt auf die Stellungnahme des Beratenden Ausschusses für Unternehmenszusammenschlüsse,