Apisonar: Mining API Usage Examples

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Unlocking Trapped Value in Data | Accenture

Unlocking trapped value in data: accelerating business outcomes with artificial intelligence. Actions to bridge the data and AI value gap. Enterprises need a holistic data strategy to harness the massive power of artificial intelligence (AI) in the cloud. By 2022, 90% of corporate strategies will explicitly cite data as a critical enterprise asset, according to Gartner [1]. The reason why? Fast-growing pressure on enterprises to be more responsive, customer obsessed and market relevant. These capabilities demand real-time decision-making, augmented with AI/machine learning (ML)-based insights. And that requires end-to-end data visibility, free from complexities in the underlying infrastructure. For enterprises still reliant on legacy systems, this can be particularly challenging. Irrespective of challenges, however, the direction is clear. According to IDC estimates, spend on AI platforms and applications by enterprises in Asia Pacific (excluding Japan and China) is set to increase by a five-year CAGR of 30%, rising from US$753.6 million in 2020 to US$2.2 billion in 2024 [2]. The need to invest strategically in AI/ML is higher than ever as CXOs move away from an experimental mindset and focus on breaking down data silos to extract full value from their data assets. These technologies are likely to have a significant impact on enterprises’ data strategies, making them increasingly integrated into CXOs’ growth objectives for the business. This is supported by research for Accenture’s Technology Vision 2021, which shows that the top three technology areas where enterprises are prioritizing investment over the next year are cloud (45%), AI (39%) and IoT (35%) [3].

Jeffrey Scudder Google Inc. March 28, 2007 Google Spreadsheets Automation Using Web Services

Google Spreadsheets Automation using Web Services. Jeffrey Scudder, Google Inc., March 28, 2007.

Overview
What is Google Spreadsheets?
• Short Demo
What is the Google Spreadsheets Data API?
• Motivations (Why an API?)
• Protocol design
• Atom Publishing Protocols
• GData
• List feed deconstructed
How do I use the Google Spreadsheets Data API?
• Authentication
• Longer Demo
Questions

What is Google Spreadsheets? Let’s take a look

What is Google Spreadsheets? Why not ask why
• Spreadsheets fits well with our mission…
– “Organize My Information… and…
– Make it Accessible and Useful…
– With whomever I choose (and nobody else, thanks)”
• In other words…
– Do-it-yourself Content Creation
– Accepted/Familiar Interface of Spreadsheets and Documents
– Accessibility from anywhere (…connected)
– Easy-to-use Collaboration
– Do-it-yourself Community Creation

What is the Google Spreadsheets Data API? Motivations
• Foster development of specific-use apps
• Allow users to create new UIs
• To extend features offered
• To integrate with 3rd party products
• To offer new vertical applications

What is the Google Spreadsheets Data API? Protocol design based on existing open standards
• Deciding on design principles
– Use a RESTful approach
– Reuse open standards
– Reuse design from other Google APIs
• The end result
– REST web service based on GData
– Data is in Atom XML and protocol based on the Atom Publishing Protocol (APP)
– GData is based on Atom and APP and adds authentication, query semantics, and more
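The protocol design summarized above (data carried as Atom XML by a REST web service) can be illustrated with a small sketch. It parses a made-up Atom feed with Python's standard library. The feed contents, `SAMPLE_FEED`, and `parse_feed` are assumptions for illustration only; they are not the actual response format or client library of the Google Spreadsheets Data API.

```python
import xml.etree.ElementTree as ET

# The Atom namespace, as defined by the Atom Syndication Format (RFC 4287).
ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Hypothetical feed: two entries standing in for spreadsheet rows.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Example list feed</title>
  <entry>
    <title>Row 1</title>
    <content type="text">name: Alice, hours: 8</content>
  </entry>
  <entry>
    <title>Row 2</title>
    <content type="text">name: Bob, hours: 6</content>
  </entry>
</feed>"""


def parse_feed(xml_text):
    """Return a list of (title, content) pairs, one per Atom entry."""
    root = ET.fromstring(xml_text)
    rows = []
    for entry in root.findall("atom:entry", ATOM_NS):
        title = entry.findtext("atom:title", default="", namespaces=ATOM_NS)
        content = entry.findtext("atom:content", default="", namespaces=ATOM_NS)
        rows.append((title, content))
    return rows


if __name__ == "__main__":
    for title, content in parse_feed(SAMPLE_FEED):
        print(title, "->", content)
```

Because the payload is ordinary Atom, any generic XML parser can read it, which is the practical benefit of reusing open standards that the slides point to.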

Vermont Wood Works Council

REPORT FOR APR 1, 2021 - APR 30, 2021 (GENERATED 4/30/2021). VERMONT WOOD - SEO & DIGITAL MARKETING REPORT.

Search Engine Visibility & Competitors
[Chart: number of organic keywords in top 10 by domain, May through Apr, for vermontwood.com, vermontfurnituremakers.com, madeinvermontmarketplace.c…, vermontwoodworkingschool.…]
[Chart: organic search engine visibility including competitors, May through Apr]

Domain | Organic visibility | Change from previous period
madeinvermontmarketplace.com | 6.91 | +0.47
vermontwood.com | 6.43 | -11.60
vermontfurnituremakers.com | 2.50 | -10.60
vermontwoodworkingschool.com | 1.56 | =
vtfpa.org | 0.13 | -12.91
vermontwoodlands.org | 0.09 | +89.73

Google Keyword Ranking Distribution
[Chart: number of keywords tracked and number of keywords in top 3, top 10, and top 20, compared with the previous period and previous year]

Google Keyword Rankings
Keyword | Organic position | Position change
vermont wooden toys | 4 | =
woodworkers vermont | 4 | =

Notes: The "Organic Position" means the item ranking on the Google search result page.

Query Expansion Based on Crowd Knowledge for Code Search

IEEE TRANSACTIONS ON SERVICES COMPUTING, VOL. 9, NO. X, XXXXX 2016. Query Expansion Based on Crowd Knowledge for Code Search. Liming Nie, He Jiang, Zhilei Ren, Zeyi Sun, and Xiaochen Li.

Abstract: As code search is a frequent developer activity in software development practices, improving the performance of code search is a critical task. In the text retrieval based search techniques employed in the code search, the term mismatch problem is a critical language issue for retrieval effectiveness. By reformulating the queries, query expansion provides effective ways to solve the term mismatch problem. In this paper, we propose Query Expansion based on Crowd Knowledge (QECK), a novel technique to improve the performance of code search algorithms. QECK identifies software-specific expansion words from the high quality pseudo relevance feedback question and answer pairs on Stack Overflow to automatically generate the expansion queries. Furthermore, we incorporate QECK in the classic Rocchio’s model, and propose QECK based code search method QECKRocchio. We conduct three experiments to evaluate our QECK technique and investigate QECKRocchio in a large-scale corpus containing real-world code snippets and a question and answer pair collection. The results show that QECK improves the performance of three code search algorithms by up to 64 percent in Precision, and 35 percent in NDCG. Meanwhile, compared with the state-of-the-art query expansion method, the improvement of QECKRocchio is 22 percent in Precision, and 16 percent in NDCG.

Index Terms: Code search, crowd knowledge, query expansion, information retrieval, question & answer pair

1 INTRODUCTION. Code search is a frequent developer activity in software development practices, which has been a part of software development for decades [47]. … Obviously, it is not an easy task to formulate a good query, which depends greatly on the experience of the developer and
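The abstract above builds on query expansion with pseudo relevance feedback in Rocchio's model. As a rough illustration of that general idea only, the sketch below adds the highest-weighted new terms from a set of feedback documents to a query. It is a simplified textbook-style Rocchio update (positive feedback only) and not the QECK or QECKRocchio method from the paper; the function name, the `alpha`/`beta` defaults, and the sample data are all assumptions.

```python
from collections import Counter


def rocchio_expand(query_terms, feedback_docs, alpha=1.0, beta=0.75, top_k=2):
    """Expand a query with the top_k best new terms from feedback documents.

    Each document is a list of tokens. A term's weight is
    alpha * (1 if it is in the query) + beta * (its mean frequency
    across the feedback documents).
    """
    weights = Counter()
    for term in query_terms:
        weights[term] += alpha
    for doc in feedback_docs:
        for term, tf in Counter(doc).items():
            weights[term] += beta * tf / len(feedback_docs)

    # Rank only terms that are not already in the query; break ties
    # alphabetically so the result is deterministic.
    candidates = [(t, w) for t, w in weights.items() if t not in query_terms]
    candidates.sort(key=lambda tw: (-tw[1], tw[0]))
    return list(query_terms) + [t for t, _ in candidates[:top_k]]


if __name__ == "__main__":
    query = ["read", "file"]
    # Hypothetical tokenized question-and-answer pairs used as feedback.
    docs = [
        ["read", "file", "bufferedreader", "java"],
        ["file", "inputstream", "bufferedreader"],
    ]
    print(rocchio_expand(query, docs))
```

In this toy run, `bufferedreader` occurs in both feedback documents, so it outranks the terms that occur in only one.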

Google Cheat Sheets [.Pdf]

GOOGLE | CHEAT SHEET. This two page Google Cheat Sheet lists all Google services and tools as well as background information. The Cheat Sheet offers a great reference to grasp of basic to advance Google query building concepts and ideas. Key for skill required to understand the underlying concepts: Novice, Intermediate, Expert.

Google Company Information
Public (NASDAQ: GOOG) and (LSE: GGEA)
Founded: Menlo Park, California (1998)
Location: Mountain View, California, USA
Key people: Eric E. Schmidt, Sergey Brin, Larry E. Page, George Reyes
Revenue: $6.138 Billion USD (2005)
Net Income: $1.465 Billion USD (2005)
Employees: 5,680 (2005)
Contact Address: 2400 E.

GOOGLE SERVICES
Google AdSense https://www.google.com/adsense/
Google AdWords https://adwords.google.com/
Google Analytics http://google.com/analytics/
Google Answers http://answers.google.com/
Google Base http://base.google.com/
Google Blog Search http://blogsearch.google.com/
Google Bookmarks http://www.google.com/bookmarks/
Google Books Search http://books.google.com/
Google Calendar http://google.com/calendar/
Google Catalogs http://catalogs.google.com/
Google Code http://code.google.com/
Google Code Search http://www.google.com/codesearch/
Google Deskbar http://deskbar.google.com/
Google Desktop http://desktop.google.com/
Google Directory http://www.google.com/dirhp
Google Earth http://earth.google.com/

Google domains
google.ae, google.com.af, google.com.ag, google.off.ai, google.am, google.com.ar, google.as, google.at, google.com.au, google.az, google.ba, google.com.bd, google.be, google.bg, google.com.bh, google.bi, google.com.bo, google.com.br, google.bs, google.co.bw, google.com.bz, google.ca, google.cd, google.cg, google.ch, google.ci, google.co.kr, google.kz, google.li, google.lk, google.co.ls, google.lt, google.lu, google.lv, google.com.ly, google.mn, google.ms, google.com.mt, google.mu, google.mw, google.com.mx, google.com.my, google.com.na, google.com.nf, google.com.ni, google.nl, google.no, google.com.np, google.nr, google.nu, google.co.nz, google.com.om, google.com.pa

Google's Open Strategy as Seen in OpenSocial

Google's Open Strategy as Seen in OpenSocial. Seasar Conference 2008 Autumn. Yoichiro (TANAKA Yoichiro). (C) 2008 Yoichiro Tanaka. All rights reserved. 08.9.6

Self-introduction
• 田中 洋一郎 (TANAKA Yoichiro)
– Mash up Award 3rd: winner in three categories at once
– Google API Expert (OpenSocial)
• Search for "天使やカイザーと呼ばれて" ("Called an Angel and a Kaiser")

Agenda
• Impression of Google
• What’s OpenSocial?
• Process to open
• Google now

Services produced by Google
http://www.google.co.jp/intl/ja/options/

APIs & Developer Tools
Android, Google Web Toolkit, Blogger Data API, Chromium, FeedBurner APIs, Gadgets API, Gmail Atom Feeds, Google Account Authentication API, Google AdSense API, Google AdSense for Audio, Google AdWords API, Google AJAX APIs, Google AJAX Feed API, Google AJAX Language API, Google AJAX Search API, Google Analytics, Google App Engine, Google Apps APIs, Google Base Data API, Google Book Search APIs, Google Calendar APIs and Tools, Google Chart API, Google Checkout API, Google Code Search, Google Code Search Data API, Google Custom Search API, Google Contacts Data API, Google Coupon Feeds, Google Desktop Gadget APIs, Google Desktop Search API, Google Documents List Google

App Search Optimisation Plan and Implementation. Case: Primesmith Oy (Jevelo)

App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo). Thuy Nguyen. Bachelor’s Thesis, Degree Programme in International Business, 2016.

Abstract, 19 August 2016. Author: Thuy Nguyen. Degree programme: International Business. Thesis title: App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo). Number of pages and appendix pages: 49 + 7.

This is a project-based thesis discussing the fundamentals of app search optimisation in modern business. Project plan and implementation were carried out for Jevelo, a B2C handmade jewellery company, within a three-month period, and thenceforth recommendations were given for future development. This thesis aims to approach new marketing techniques that play important roles in online marketing tactics. The main goal of this study is to accumulate best practices of app search optimisation and develop logical thinking when performing project elements and analysing results. The theoretical framework introduces an overview of facts and the innovative updates in the internet environment. They include factors of online marketing, mobile marketing, and app search optimisation, with the merger of search engine optimisation (SEO) and app store optimisation (ASO) being considered as an immediate action for digital marketers nowadays. The project of app search optimisation in chapter 4 covers the SEO implementation carried out from scratch, together with an ASO analysis and suggestions for Jevelo. Other marketing methods like search engine marketing, affiliate marketing, and content marketing, together with the customer analysis, are briefly described to support the theoretical aspects. The discussion section visualises the entire thesis process by summing up theoretical knowledge, providing project evaluation as well as a reflection of self-learning.

Seo-101-Guide-V7.Pdf

Copyright 2017 Search Engine Journal. Published by Alpha Brand Media. All Rights Reserved.

MAKING BUSINESSES VISIBLE

Consumer tracking information: Combine data from your web analytics. Adding web analytics data to a crawl will provide you with a detailed gap analysis and enable you to find URLs which have generated traffic but aren’t linked to, also known as orphans.

External link metrics: Uploading backlink data to a crawl will also identify non-indexable, redirecting, disallowed & broken pages being linked to. Do this by uploading backlinks from popular backlinks checker tools to track performance of the most linked-to content on your site.

Search Analytics: DeepCrawl’s Advanced Google Search Console Integration allows you to connect technical site performance insights with organic search information from Google Search Console’s Search Analytics report.

Crawler requests: Integrate summary data from any log file analyser tool into your crawl. Integrating log file data enables you to discover the pages on your site that are receiving attention from search engine bots as well as the frequency of these requests.

Monitor site health. Improve your UX. Migrate your site. Support mobile first. Unravel your site architecture. Store historic data. Internationalization. Complete competition analysis.

+44 (0) 207 947 9617, +1 929 294 9420, @deepcrawl. Free trial at: https://www.deepcrawl.com/free-trial

Table of Contents. Chapter 1:

Google Search Console

Google Search Console

Google Search Console is a powerful free tool to see how your website performs in Google search results and to help monitor the health of your website.

How does your site perform in Google Search results?
Search Analytics is the main tool in Search Console to see how your site performs in Google search results. Using the various filters on Search Analytics you can see:
• The top search queries people used to find your website
• Which countries your web traffic is coming from
• What devices visitors are using (desktop, mobile, or tablet)
• How many people saw your site in a search results list (measured as impressions)
• How many people clicked on your site from their search results
• Your website’s ranking in a search results list for a given term
For example, the search query used most often to find the SCLS website is ‘South Central Library System.’ When people search ‘South Central Library System’ www.scls.info is the first result in the list. As shown below, this July, 745 people used this search query and 544 people clicked on the search result to bring them to www.scls.info.

Monitoring your website health
Google Search Console also has tools to monitor your website’s health. The Security Issues tab lets you know if there are any signs that your site has been hacked and has information on how to recover. The Crawl Error tool lets you know if Google was unable to navigate parts of your website in order to index it for searching. If Google can’t crawl your website, it often means visitors can’t see it either, so it is a good idea to address any errors you find here.
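The July figures quoted above (745 searches for the query, 544 clicks) can be turned into a click-through rate with one division. The helper below is a minimal sketch; `click_through_rate` is a hypothetical name, and treating the 745 searches as impressions is an assumption made for this example rather than something the text states.

```python
def click_through_rate(clicks, impressions):
    """Return clicks divided by impressions, or 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


if __name__ == "__main__":
    # Figures from the example in the text: 544 clicks out of 745 searches.
    rate = click_through_rate(544, 745)
    print(f"{rate:.1%}")
```

With these numbers, roughly 73% of the searches led to a click through to www.scls.info.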

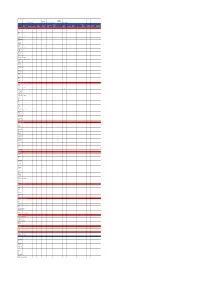

SNSW for Business Assisted Channels & Middle Office App Dev

[Table: technologies used by each SNSW team; the per-team "x" marks are omitted. Team columns as extracted: Assisted SNSW for Channels & Digital Channels Business Personal Transactions Middle Office MyService NSW a/c Transactions Digital App Dev Digital SNSW for My Community & Service Proof Of Auth & Licence Knowledge & IT Service Tech Design & Portal Mobile Apps Website Licence Business Project (Forms) Unify Identity Tell Gov Once Linking API Platform Test Team Delivery Development]

Tech Stacks/Language/Tools/Frameworks: Node/5, XML, React, NodeJS, Mongo DB - At, AngularJS, Drupal 7/8, Secure Logic PKI, HTML5, Post CSS, Bootstrap, Springboot, Kotlin, Style components (react), Postgres RDS, Apigee, Next.JS (react), Graph QL, Express, Form IO, Mongo DB/ Mongo Atlas, Mongo DB (form IO) hosted on atlas, Java, Angular 6, Angular 5, SQL server, Typescript, Mysql Aurora DB, Vue JS, Bulma CSS, Javascript ES6, Note JS, Oauth, Objective C, Swift, Redux, ASP.Net, Docker

Cloud & Infrastructure: Apigee, Firebase, PCF (hosting demo App), PCF, Google Play Store, Apple App Store, Google SafetyNet, Google Cloud Console, Firebase Cloud Messaging, Lambda, Cognito, ECS, ECR, ALB, S3, Jfrog, Route 53, Form IO, Google cloud (vendor hosted), Cloudfront, Docker, Docker/ Kubernetes, Postgres (RDS), Rabbit MQ (PCF), Secure Logic PKI, AWS Cloud, Dockerhub (DB snapshots)

Development Tools: Atom, Eclipse, Postman, VS Code, Rider (Jetbrain), Cloud 9, PHP Storm, Sublime, Sourcetree, Webstorm, Intelij

How Can I Get Deep App Screens Indexed for Google Search?

HOW CAN I GET DEEP APP SCREENS INDEXED FOR GOOGLE SEARCH?

Setting up app indexing for Android and iOS apps is pretty straightforward and well documented by Google. Conceptually, it is a three-part process:
1. Enable your app to handle deep links.
2. Add code to your corresponding Web pages that references deep links.
3. Optimize for private indexing.
These steps can be taken out of order if the app is still in development, but the second step is crucial. Without it, your app will be set up with deep links but not set up for Google indexing, so the deep links will not show up in Google Search.

NOTE: iOS app indexing is still in limited release with Google, so there is a special form submission and approval process even after you have added all the technical elements to your iOS app. That being said, the technical implementations take some time; by the time your company has finished, Google may have opened up indexing to all iOS apps, and this cumbersome approval process may be a thing of the past.

Steps For Google Deep Link Indexing
Step 1: Add Code To Your App That Establishes The Deep Links
A. Pick A URL Scheme To Use When Referencing Deep Screens In Your App
App URL schemes are simply a systematic way to reference the deep linked screens within an app, much like a Web URL references a specific page on a website. In iOS, developers are currently limited to using Custom URL Schemes, which are formatted in a way that is more natural for app design but different from Web.
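To make the idea of a custom URL scheme concrete, here is a minimal sketch of how a deep link might be broken into a target screen and its arguments. It is written in Python for illustration rather than as Android or iOS code, and the scheme `exampleapp`, the URL layout, and the function `parse_deep_link` are all hypothetical, not taken from Google's documentation.

```python
from urllib.parse import urlparse


def parse_deep_link(url, expected_scheme="exampleapp"):
    """Split a custom-scheme deep link into a screen name and its arguments.

    For "exampleapp://products/42" the screen is "products" and the
    arguments are ["42"].
    """
    parts = urlparse(url)
    if parts.scheme != expected_scheme:
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    # The first segment after "//" is parsed as the network location, so
    # join it with the path before splitting into segments.
    segments = [s for s in (parts.netloc + parts.path).split("/") if s]
    if not segments:
        raise ValueError("deep link does not name a screen")
    return {"screen": segments[0], "args": segments[1:]}


if __name__ == "__main__":
    print(parse_deep_link("exampleapp://products/42"))
```

The same screen-plus-arguments structure is what lets a Web page and an app screen refer to one piece of content under two different addresses.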

Leveraging Social Coding Websites DISSERTATION Submitted in Partial

UNIVERSITY OF CALIFORNIA, IRVINE. Beyond Similar Code: Leveraging Social Coding Websites. DISSERTATION submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in Software Engineering by Di Yang. Dissertation Committee: Professor Cristina V. Lopes, Chair; Professor James A. Jones; Professor Sam Malek. 2019. © 2019 Di Yang.

DEDICATION: To my loving husband, Zikang.

TABLE OF CONTENTS
LIST OF FIGURES
LIST OF TABLES
ACKNOWLEDGMENTS
CURRICULUM VITAE
ABSTRACT OF THE DISSERTATION
1 Introduction
1.1 Overview
1.1.1 Background
1.1.2 Research Questions
1.2 Thesis Statement
1.3 Methodology and Key Findings
2 Usability Analysis of Stack Overflow Code Snippets
2.1 Introduction
2.2 Related Work
2.3 Usability Rates
2.3.1 Snippets Processing
2.3.2 Findings
2.3.3 Error Messages
2.3.4 Heuristic Repairs for Java and C# Snippets
2.4 Qualitative Analysis
2.5 Google Search Results
2.6 Conclusion
3 File-level Duplication Analysis of GitHub
3.1 Introduction
3.2 Related Work
3.3 Analysis Pipeline
3.3.1 Tokenization
3.3.2 Database
3.3.3 SourcererCC
3.4 Corpus
3.5 Quantitative Analysis
3.5.1 File-Level Analysis
3.5.2 File-Level Analysis Excluding Small Files
3.6 Mixed Method Analysis
3.6.1 Most Duplicated Non-Trivial Files
3.6.2 File Duplication at Different Levels