From Random Fields to Networks

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

CITY MOOD How Does Your City Feel?

CITY MOOD: how does your city feel? Luisa Fabrizi. Interaction Design, One-year Master, 15 credits. Master Thesis, August 2014. Supervisor: Jörn Messeter. Thesis Project I, Interaction Design Master 2014, Malmö University. Index: 01. Index; 02. Abstract; 03. Introduction; 04. Methodology; 05. Theoretical Framework; 06. Design Process; 07. General Conclusions; 08. Further Implementations; 09. Appendix. 02. -

Parameters Estimation for Asymmetric Bifurcating Autoregressive Processes with Missing Data

Electronic Journal of Statistics, ISSN: 1935-7524. Parameters estimation for asymmetric bifurcating autoregressive processes with missing data. Benoîte de Saporta, Université de Bordeaux, GREThA CNRS UMR 5113, IMB CNRS UMR 5251 and INRIA Bordeaux Sud Ouest team CQFD, France; Anne Gégout-Petit, Université de Bordeaux, IMB, CNRS UMR 525 and INRIA Bordeaux Sud Ouest team CQFD, France; and Laurence Marsalle, Université de Lille 1, Laboratoire Paul Painlevé, CNRS UMR 8524, France. Abstract: We estimate the unknown parameters of an asymmetric bifurcating autoregressive process (BAR) when some of the data are missing. To this end, we model the observed data by a two-type Galton-Watson process consistent with the binary tree structure of the data. Under independence between the process leading to the missing data and the BAR process and suitable assumptions on the driven noise, we establish the strong consistency of our estimators on the set of non-extinction of the Galton-Watson process, via a martingale approach. We also prove a quadratic strong law and the asymptotic normality. AMS 2000 subject classifications: Primary 62F12, 62M09; secondary 60J80, 92D25, 60G42. Keywords and phrases: least squares estimation, bifurcating autoregressive process, missing data, Galton-Watson process, joint model, martingales, limit theorems. arXiv:1012.2012v3 [math.PR] 27 Sep 2011. 1. Introduction. Bifurcating autoregressive processes (BAR) generalize autoregressive (AR) processes when the data have a binary tree structure. Typically, they are involved in modeling cell lineage data, since each cell in one generation gives birth to two offspring in the next one. -

A University of Sussex PhD Thesis Available Online via Sussex

A University of Sussex PhD thesis. Available online via Sussex Research Online: http://sro.sussex.ac.uk/ This thesis is protected by copyright which belongs to the author. This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the Author. The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the Author. When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given. Please visit Sussex Research Online for more information and further details. NON-STATIONARY PROCESSES AND THEIR APPLICATION TO FINANCIAL HIGH-FREQUENCY DATA. Mailan Trinh. A thesis submitted for the degree of Doctor of Philosophy, University of Sussex, March 2018. SUMMARY: The thesis is devoted to non-stationary point process models as generalizations of the standard homogeneous Poisson process. The work can be divided in two parts. In the first part, we introduce a fractional non-homogeneous Poisson process (FNPP) by applying a random time change to the standard Poisson process. We characterize the FNPP by deriving its non-local governing equation. We further compute moments and covariance of the process and discuss the distribution of the arrival times. Moreover, we give both finite-dimensional and functional limit theorems for the FNPP and the corresponding fractional non-homogeneous compound Poisson process. The limit theorems are derived by using martingale methods, regular variation properties and Anscombe's theorem. -

Network Traffic Modeling

Chapter in The Handbook of Computer Networks, Hossein Bidgoli (ed.), Wiley, to appear 2007. Network Traffic Modeling. Thomas M. Chen, Southern Methodist University, Dallas, Texas. OUTLINE: 1. Introduction 1.1. Packets, flows, and sessions 1.2. The modeling process 1.3. Uses of traffic models 2. Source Traffic Statistics 2.1. Simple statistics 2.2. Burstiness measures 2.3. Long range dependence and self similarity 2.4. Multiresolution timescale 2.5. Scaling 3. Continuous-Time Source Models 3.1. Traditional Poisson process 3.2. Simple on/off model 3.3. Markov modulated Poisson process (MMPP) 3.4. Stochastic fluid model 3.5. Fractional Brownian motion 4. Discrete-Time Source Models 4.1. Time series 4.2. Box-Jenkins methodology 5. Application-Specific Models 5.1. Web traffic 5.2. Peer-to-peer traffic 5.3. Video 6. Access Regulated Sources 6.1. Leaky bucket regulated sources 6.2. Bounding-interval-dependent (BIND) model 7. Congestion-Dependent Flows 7.1. TCP flows with congestion avoidance 7.2. TCP flows with active queue management 8. Conclusions. KEY WORDS: traffic model, burstiness, long range dependence, policing, self similarity, stochastic fluid, time series, Poisson process, Markov modulated process, transmission control protocol (TCP). ABSTRACT: From the viewpoint of a service provider, demands on the network are not entirely predictable. Traffic modeling is the problem of representing our understanding of dynamic demands by stochastic processes. Accurate traffic models are necessary for service providers to properly maintain quality of service. Many traffic models have been developed based on traffic measurement data. This chapter gives an overview of a number of common continuous-time and discrete-time traffic models. -

On Conditionally Heteroscedastic AR Models with Thresholds

Statistica Sinica 24 (2014), 625-652. doi: http://dx.doi.org/10.5705/ss.2012.185 ON CONDITIONALLY HETEROSCEDASTIC AR MODELS WITH THRESHOLDS. Kung-Sik Chan, Dong Li, Shiqing Ling and Howell Tong. University of Iowa, Tsinghua University, Hong Kong University of Science & Technology and London School of Economics & Political Science. Abstract: Conditional heteroscedasticity is prevalent in many time series. By viewing conditional heteroscedasticity as the consequence of a dynamic mixture of independent random variables, we develop a simple yet versatile observable mixing function, leading to the conditionally heteroscedastic AR model with thresholds, or a T-CHARM for short. We demonstrate its many attributes and provide comprehensive theoretical underpinnings with efficient computational procedures and algorithms. We compare, via simulation, the performance of T-CHARM with the GARCH model. We report some experiences using data from economics, biology, and geoscience. Key words and phrases: Compound Poisson process, conditional variance, heavy tail, heteroscedasticity, limiting distribution, quasi-maximum likelihood estimation, random field, score test, T-CHARM, threshold model, volatility. 1. Introduction. We can often model a time series as the sum of a conditional mean function, the drift or trend, and a conditional variance function, the diffusion. See, e.g., Tong (1990). The drift attracted attention from very early days, although the importance of the diffusion did not go entirely unnoticed, with an example in ecological populations as early as Moran (1953); a systematic modelling of the diffusion did not seem to attract serious attention before the 1980s. For discrete-time cases, our focus, as far as we are aware, it is in the econometric and finance literature that the modelling of the conditional variance has been treated seriously, although Tong and Lim (1980) did include conditional heteroscedasticity. -

Playing God? Towards Machines That Deny Their Maker

Lecture with Prof. Rosalind Picard, Massachusetts Institute of Technology (MIT): Playing God? Towards Machines that Deny Their Maker. 22 April 2016, 14:15, UniS, lecture hall A003 (Schanzeneckstrasse 1, Bern). Hosts: CAMPUS live, Christian student association (www.campuslive.ch/bern); VBG Bern, Christian university group (www.bern.vbg.net); Institut für Philosophie, Länggassstrasse 49a, 3000 Bern 9 (www.philosophie.unibe.ch). "Today, technology is acquiring the capability to sense and respond skilfully to human emotion, and in some cases to act as if it has emotional processes that make it more intelligent. I'll give examples of the potential for good with this technology in helping people with autism, epilepsy, and mental health challenges such as anxiety and depression. However, the possibilities are much larger: A few scientists want to build computers that are vastly superior to humans, capable of powers beyond reproducing their own kind. What do we want to see built? And, how might we make sure that new affective technologies make human lives better?" Professor Rosalind W. Picard, Sc.D., is founder and director of the Affective Computing Research Group at the Massachusetts Institute of Technology (MIT) Media Lab, where she also chairs MIT's Mind+Hand+Heart initiative. Picard has co-founded two businesses: Empatica, Inc., creating wearable sensors and analytics to improve health, and Affectiva, Inc., delivering software to measure and communicate emotion through facial expression analysis. She has authored or co-authored over 200 scientific articles, together with the book Affective Computing, which was instrumental in giving rise to the field by that name. -

Multivariate ARMA Processes

LECTURE 10: Multivariate ARMA Processes. A vector sequence $y(t)$ of $n$ elements is said to follow an $n$-variate ARMA process of orders $p$ and $q$ if it satisfies the equation

$$A_0 y(t) + A_1 y(t-1) + \cdots + A_p y(t-p) = M_0 \varepsilon(t) + M_1 \varepsilon(t-1) + \cdots + M_q \varepsilon(t-q), \tag{1}$$

wherein $A_0, A_1, \ldots, A_p, M_0, M_1, \ldots, M_q$ are matrices of order $n \times n$ and $\varepsilon(t)$ is a disturbance vector of $n$ elements determined by serially-uncorrelated white-noise processes that may have some contemporaneous correlation. In order to signify that the $i$th element of the vector $y(t)$ is the dependent variable of the $i$th equation, for every $i$, it is appropriate to have units for the diagonal elements of the matrix $A_0$. These represent the so-called normalisation rules. Moreover, unless it is intended to explain the value of $y_i$ in terms of the remaining contemporaneous elements of $y(t)$, then it is natural to set $A_0 = I$. It is also usual to set $M_0 = I$. Unless a contrary indication is given, it will be assumed that $A_0 = M_0 = I$. It is assumed that the disturbance vector $\varepsilon(t)$ has $E\{\varepsilon(t)\} = 0$ for its expected value. On the assumption that $M_0 = I$, the dispersion matrix, denoted by $D\{\varepsilon(t)\} = \Sigma$, is an unrestricted positive-definite matrix of variances and of contemporaneous covariances. However, if the restriction is imposed that $D\{\varepsilon(t)\} = I$, then it may be assumed that $M_0 \neq I$ and that $D\{M_0 \varepsilon(t)\} = M_0 M_0' = \Sigma$. The equations of (1) can be written in summary notation as

$$A(L)\, y(t) = M(L)\, \varepsilon(t), \tag{2}$$

where $L$ is the lag operator, which has the effect that $L x(t) = x(t-1)$, and where $A(z) = A_0 + A_1 z + \cdots + A_p z^p$ and $M(z) = M_0 + M_1 z + \cdots + M_q z^q$ are matrix-valued polynomials assumed to be of full rank. -
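To make the recursion in equation (1) concrete, here is a minimal simulation sketch under the normalisation $A_0 = M_0 = I$, so that $y(t) = -\sum_i A_i y(t-i) + \varepsilon(t) + \sum_j M_j \varepsilon(t-j)$. The function name `simulate_varma`, the Gaussian disturbances, the zero pre-sample values, and the example matrices are all illustrative assumptions and are not taken from the lecture.

```python
import numpy as np

def simulate_varma(A, M, Sigma, T, seed=0):
    """Simulate an n-variate ARMA(p, q) path with A0 = M0 = I.

    A = [A1, ..., Ap] and M = [M1, ..., Mq] are lists of n x n arrays.
    Disturbances are drawn as N(0, Sigma) (an assumption: the text only
    requires serially uncorrelated white noise). Pre-sample values of
    y and eps are taken to be zero.
    """
    rng = np.random.default_rng(seed)
    n = Sigma.shape[0]
    eps = rng.multivariate_normal(np.zeros(n), Sigma, size=T)
    y = np.zeros((T, n))
    for t in range(T):
        y[t] = eps[t]
        for i, Ai in enumerate(A, start=1):   # autoregressive terms
            if t - i >= 0:
                y[t] -= Ai @ y[t - i]
        for j, Mj in enumerate(M, start=1):   # moving-average terms
            if t - j >= 0:
                y[t] += Mj @ eps[t - j]
    return y, eps

# Hypothetical bivariate ARMA(1, 1) example.
A1 = np.array([[-0.5, 0.1], [0.0, -0.3]])
M1 = np.array([[0.4, 0.0], [0.2, 0.1]])
Sigma = np.array([[1.0, 0.3], [0.3, 1.0]])
y, eps = simulate_varma([A1], [M1], Sigma, T=200)
```

With the sign convention of (1), the autoregressive matrices enter the recursion with a minus sign, so stability here depends on the eigenvalues of $-A_1$ (0.5 and 0.3 in this example).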

AUTOREGRESSIVE MODEL with PARTIAL FORGETTING WITHIN RAO-BLACKWELLIZED PARTICLE FILTER Kamil Dedecius, Radek Hofman

AUTOREGRESSIVE MODEL WITH PARTIAL FORGETTING WITHIN RAO-BLACKWELLIZED PARTICLE FILTER. Kamil Dedecius, Radek Hofman. To cite this version: Kamil Dedecius, Radek Hofman. AUTOREGRESSIVE MODEL WITH PARTIAL FORGETTING WITHIN RAO-BLACKWELLIZED PARTICLE FILTER. Communications in Statistics - Simulation and Computation, Taylor & Francis, 2011, 41 (05), pp.582-589. 10.1080/03610918.2011.598992. hal-00768970. HAL Id: hal-00768970, https://hal.archives-ouvertes.fr/hal-00768970. Submitted on 27 Dec 2012. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Communications in Statistics - Simulation and Computation. For Peer Review Only. Manuscript ID: LSSP-2011-0055. Manuscript Type: Original Paper. Date Submitted by the Author: 09-Feb-2011. Complete List of Authors: Dedecius, Kamil; Institute of Information Theory and Automation, Academy of Sciences of the Czech Republic, Department of Adaptive Systems. Hofman, Radek; Institute of Information Theory and Automation, Academy of Sciences of the Czech Republic, Department of Adaptive Systems. Keywords: particle filters, Bayesian methods, recursive estimation. We are concerned with Bayesian identification and prediction of a nonlinear discrete stochastic process. The fact that a nonlinear process can be approximated by a piecewise linear function advocates the use of adaptive linear models. -

Uniform Noise Autoregressive Model Estimation

Uniform Noise Autoregressive Model Estimation. L. P. de Lima, J. C. S. de Miranda. Abstract: In this work we present a methodology for the estimation of the coefficients of an autoregressive model where the random component is assumed to be uniform white noise. The uniform noise hypothesis makes MLE become equivalent to a simple consistency requirement on the possible values of the coefficients given the data. In the case of the autoregressive model of order one, $X(t+1) = aX(t) + \epsilon(t)$ with i.i.d. $\epsilon(t) \sim U[\alpha, \beta]$, the estimator $\hat{a}$ of the coefficient $a$ can be written down analytically. The performance of the estimator is assessed via simulation. Keywords: Autoregressive model, Estimation of parameters, Simulation, Uniform noise. 1 Introduction. Autoregressive models have been extensively studied and one can find a vast literature on the subject. However, the majority of these works deal with autoregressive models where the innovations are assumed to be normal random variables, or vectors. Comparatively few works are dedicated to these models for the case of uniform innovations. 2 Estimator construction. Let us consider the real valued autoregressive model of order one: $X(t+1) = aX(t) + \epsilon(t+1)$, where $\epsilon(t)$, $t \in \mathbb{N}$, are i.i.d. real random variables. Our aim is to estimate the real valued coefficient $a$ given an observation of the process, i.e., a sequence $(X(t))_{t \in [1:N] \subset \mathbb{N}}$. We will suppose $a \in A \subset \mathbb{R}$. A standard way to accomplish this is maximum likelihood estimation. Let us assume that the noise probability law is such that it admits a probability density. -
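The "consistency requirement" mentioned in the abstract can be illustrated for the order-one model. If $\alpha$ and $\beta$ are known, the uniform likelihood is constant on the set of values $a$ for which every residual $X(t+1) - aX(t)$ lies in $[\alpha, \beta]$, and zero elsewhere, so the maximisers form an interval. The sketch below computes that interval; it is not the authors' estimator. Known noise bounds, the helper name `feasible_interval`, and the choice of the interval midpoint as a point estimate are all assumptions made for illustration.

```python
import numpy as np

def feasible_interval(x, alpha, beta):
    """Return (lo, hi): all a with alpha <= x[t+1] - a*x[t] <= beta for every t.

    Each observation with x[t] != 0 gives one lower and one upper bound on a;
    the direction of the inequalities flips when x[t] is negative.
    """
    x = np.asarray(x, dtype=float)
    prev, nxt = x[:-1], x[1:]
    lo, hi = -np.inf, np.inf
    pos, neg = prev > 0, prev < 0
    if pos.any():
        lo = max(lo, np.max((nxt[pos] - beta) / prev[pos]))
        hi = min(hi, np.min((nxt[pos] - alpha) / prev[pos]))
    if neg.any():
        lo = max(lo, np.max((nxt[neg] - alpha) / prev[neg]))
        hi = min(hi, np.min((nxt[neg] - beta) / prev[neg]))
    return lo, hi

# Simulated data with hypothetical parameter values.
rng = np.random.default_rng(0)
a_true, alpha, beta, N = 0.6, -1.0, 1.0, 500
x = np.zeros(N)
for t in range(N - 1):
    x[t + 1] = a_true * x[t] + rng.uniform(alpha, beta)

lo, hi = feasible_interval(x, alpha, beta)
a_hat = 0.5 * (lo + hi)  # midpoint: one arbitrary choice among the maximisers
```

By construction the true coefficient is consistent with the data, so it lies in the returned interval up to floating-point rounding; the interval typically narrows as more observations are added.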

Membership Information

ACM, 1515 Broadway, New York, NY 10036-5701, USA. The CHI 2002 Conference is sponsored by ACM's Special Interest Group on Computer-Human Interaction (ACM SIGCHI). ACM, the Association for Computing Machinery, is a major force in advancing the skills and knowledge of Information Technology (IT) professionals and students throughout the world. ACM serves as an umbrella organization offering its 78,000 members a variety of forums in order to fulfill its members' needs, the delivery of cutting-edge technical information, the transfer of ideas from theory to practice, and opportunities for information exchange. Providing high quality products and services, world-class journals and magazines; dynamic special interest groups; numerous "main event" conferences; tutorials; workshops; local special interest groups and chapters; and electronic forums, ACM is the resource for lifelong learning in the rapidly changing IT field. The scope of SIGCHI consists of the study of the human-computer interaction process and includes research, design, development, and evaluation efforts for interactive computer systems. The focus of SIGCHI is on how people communicate and interact with a broadly-defined range of computer systems. SIGCHI serves as a forum for the exchange of ideas among computer scientists, human factors scientists, psychologists, social scientists, system designers, and end users. Over 4,500 professionals work together toward common goals and objectives. Membership Information Sponsored by ACM's Special Interest Group on Computer-Human -

Download a PDF of This Issue

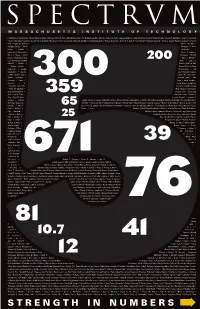

Hal Abelson • Edward Adams • Morris Adelman • Edward Adelson • Khurram Afridi • Anuradha Agarwal • Alfredo Alexander-Katz • Marilyne Andersen • Polina Olegovna Anikeeva • Anuradha M. Annaswamy • Timothy Antaya • George E. Apostolakis • Robert C. Armstrong • Elaine V. Backman • Lawrence Bacow • Emilio Baglietto • Hari Balakrishnan • Marc Baldo • Ronald G. Ballinger • George Barbastathis • William A. Barletta • Steven R. H. Barrett • Paul I. Barton • Moungi G. Bawendi • Martin Z. Bazant • Geoffrey S. Beach • Janos M. Beer • Angela M. Belcher • Moshe Ben-Akiva • Hansjoerg Berger • Karl K. Berggren • A. Nihat Berker • Dimitri P. Bertsekas • Edmundo D. Blankschtein • Duane S. Boning • Mary C. Boyce • Kirkor Bozdogan • Richard D. Braatz • Rafael Luis Bras • John G. Brisson • Leslie Bromberg • Elizabeth Bruce • Joan E. Bubluski • Markus J. Buehler • Cullen R. Buie • Vladimir Bulovic • Mayank Bulsara • Tonio Buonassisi • Jacopo Buongiorno • Oral Buyukozturk • Jianshu Cao • Christopher Caplice • JoAnn Carmin • John S. Carroll • W. Craig Carter • Frederic Casalegno • Gerbrand Ceder • Ivan Celanovic • Sylvia T. Ceyer • Ismail Chabini • Anantha P. Chandrakasan • Gang Chen • Sow-Hsin Chen • Wai K. Cheng • Yet-Ming Chiang • Sallie W. Chisholm • Nazli Choucri • Chryssostomidis Chryssostomos • Shaoyan Chu • Jung-Hoon Chun • Joel P. Clark • Robert E. Cohen • Daniel R. Cohn • Clark K. Colton • Patricia Connell • Stephen R. Connors • Chathan Cooke • Charles L. Cooney • Edward F. Crawley • Mary Cummings • Christopher C. Cummins • Kenneth R. Czerwinski • Munther A. Dahleh • Rick Lane Danheiser • Ming Dao • Richard de Neufville • Olivier L. de Weck • Edward Francis DeLong • Erik Demaine • Martin Demaine • Laurent Demanet • Michael J. Demkowicz • John M. Deutch • Srini Devadas • Mircea Dinca • Patrick S. Doyle • Elisabeth M. Drake • Mark Drela • Catherine L. Drennan • Michael J. Driscoll • Steven Dubowsky • Esther Duflo • Fredo Durand • Thomas Eagar • Richard S. -

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome. PRIMARY INVESTIGATORS: Matthew Goodwin, The Groden Center; Rosalind Picard, Massachusetts Institute of Technology Media Laboratory; Rana el Kaliouby, Massachusetts Institute of Technology Media Laboratory; Alea Teeters, Massachusetts Institute of Technology Media Laboratory; June Groden, The Groden Center. DESCRIPTION: Evaluate the scientific and clinical significance of using a wearable camera system (Self-Cam) to improve recognition of emotions from real-world faces in young adults with Asperger syndrome (AS) and high-functioning autism (HFA). The study tests a group of 10 young adults diagnosed with AS/HFA who are asked to wear Self-Cam three times a week for 10 weeks, and use a computerized, interactive facial expression analysis system to review and analyze their videos on a weekly basis. A second group of 10 age-, sex-, and IQ-matched young adults with AS/HFA will use Mind Reading, a systematic DVD guide for teaching emotion recognition. These two groups will be tested pre- and post-intervention on recognition of emotion from faces at three levels of generalization: videos of themselves, videos of their interaction partner, and videos of other participants (i.e., people they are less familiar with). A control group of 10 age- and sex-matched typically developing adults will also be tested to establish performance differences in emotion recognition abilities across groups. This study will use a combination of behavioral reports, direct observation, and pre- and post-intervention questionnaires to assess Self-Cam's acceptance by persons with autism.
We hypothesize that the typically developing control group will perform better on emotion recognition than both groups with AS/HFA at baseline, and that the Self-Cam group, whose intervention teaches emotion recognition using real-life videos, will perform better than the Mind Reading group, who will learn emotion recognition from a standard stock of emotion videos.