The Reasonable Coder

Petros Terzis, PhD Student, Centre for Information Rights, University of Winchester, UK


Recommended publications

-

Air India Flight 182

Smith AAR AI 182 John Barry Smith www.corazon.com [email protected] Copyright 2001 All Rights Reserved AIRCRAFT ACCIDENT REPORT Air India Flight 182 1 Smith AAR AI 182 Report on the Accident to Boeing 747-237B VT-EFO, Air India Flight 182, Off Cork, Ireland on 23 June 1985 by John Barry Smith, Independent Aircraft Accident Investigator Abstract: Air India Flight 182, a Boeing 747-237B, registration VT-EFO, was on a flight from Mirabel airport, Montreal, Canada, to Heathrow airport, London, UK, when it disappeared from the radar scope at a position of latitude 51 degrees 0 minutes North and longitude 12 degrees 50 minutes West at 0714 Greenwich Mean Time (GMT), 23 June 1985, and the pieces crashed into the ocean about 110 miles west of Cork, Ireland. There were no survivors among the 329 passengers and crew members. There was unanimous official opinion among authorities that an inflight breakup caused by an explosion in the forward cargo compartment occurred. Based on the direct, tangible and circumstantial evidence of four similar accidents as described in six aircraft accident reports and using the benefit of hindsight, the conclusion of this investigator and author of this report is that the probable cause of the accident to Air India Flight 182 was faulty wiring shorting on the door unlatch motor causing the forward cargo door to inadvertently rupture open in flight probably at one or both of the midspan latches leading to an explosion of explosive decompression in the forward cargo compartment and subsequent aircraft breakup. Contents: 1. Air India Flight 182 Glossary Acronyms References and Source Materials Definitions Formatting Style Introduction 2. -

THE PHILOSOPHY of STEVEN SODERBERGH the Philosophy of Popular Culture

THE PHILOSOPHY OF STEVEN SODERBERGH The Philosophy of Popular Culture Th e books published in the Philosophy of Popular Culture series will illuminate and explore philosophical themes and ideas that occur in popular culture. Th e goal of this series is to demonstrate how philosophical inquiry has been reinvigorated by increased scholarly interest in the intersection of popular culture and philosophy, as well as to explore through philosophical analysis beloved modes of entertainment, such as movies, TV shows, and music. Philosophical concepts will be made accessible to the general reader through examples in popular culture. Th is series seeks to publish both established and emerging scholars who will engage a major area of popular culture for philosophical interpretation and examine the philosophical underpinnings of its themes. Eschewing ephemeral trends of philosophical and cultural theory, authors will establish and elaborate on connections between traditional philosophical ideas from important thinkers and the ever-expanding world of popular culture. Series Editor Mark T. Conard, Marymount Manhattan College, NY Books in the Series Th e Philosophy of Stanley Kubrick, edited by Jerold J. Abrams Football and Philosophy, edited by Michael W. Austin Tennis and Philosophy, edited by David Baggett Th e Philosophy of the Coen Brothers, edited by Mark T. Conard Th e Philosophy of Film Noir, edited by Mark T. Conard Th e Philosophy of Martin Scorsese, edited by Mark T. Conard Th e Philosophy of Neo-Noir, edited by Mark T. Conard Th e Philosophy of Horror, edited by Th omas Fahy Th e Philosophy of Th e X-Files, edited by Dean A. -

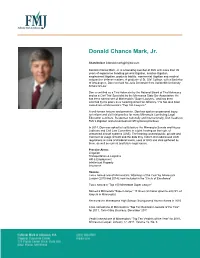

Donald Chance Mark, Jr

Donald Chance Mark, Jr. Shareholder | [email protected] Donald Chance Mark, Jr. is a founding member of FMJ with more than 40 years of experience handling general litigation, aviation litigation, employment litigation, products liability, commercial litigation and medical malpractice defense matters. A graduate of St. Olaf College, with a Bachelor of Arts degree, Don received his Juris Doctorate from Vanderbilt University School of Law. Don is certified as a Trial Advocate by the National Board of Trial Advocacy and as a Civil Trial Specialist by the Minnesota State Bar Association. He has been named one of Minnesota’s “Super Lawyers,” and has been selected by his peers as a “Leading American Attorney.” He has also been named one of Minnesota’s “Top 100 Lawyers.” A well-known lecturer and presenter, Don has spoken on personal injury, tort reform and civil trial practice for many Minnesota Continuing Legal Education seminars. Respected nationally and internationally, Don heads up FMJ’s litigation and unmanned aircraft systems practices. In 2014, Don was asked to testify before the Minnesota Senate and House Judiciary and Civil Law Committee in a joint hearing on the topic of unmanned aircraft systems (UAS). The hearing covered public, private and commercial usage of UAS and the data they collect. Don addressed UAS regulations at state and federal levels, uses of UAS and data gathered by them, as well as current and future legal issues. Practice Areas: Litigation Products Liability Transportation & Logistics HR & Employment Intellectual -

The Terrorist Is a Star!: Regulating Media Coverage of Publicity- Seeking Crimes

Federal Communications Law Journal Volume 60 Issue 3 Article 3 6-2008 The Terrorist Is A Star!: Regulating Media Coverage of Publicity- Seeking Crimes Michelle Ward Ghetti Southern University Law Center Follow this and additional works at: https://www.repository.law.indiana.edu/fclj Part of the Administrative Law Commons, Communications Law Commons, Constitutional Law Commons, First Amendment Commons, and the Legislation Commons Recommended Citation Ghetti, Michelle Ward (2008) "The Terrorist Is A Star!: Regulating Media Coverage of Publicity-Seeking Crimes," Federal Communications Law Journal: Vol. 60 : Iss. 3 , Article 3. Available at: https://www.repository.law.indiana.edu/fclj/vol60/iss3/3 This Article is brought to you for free and open access by the Law School Journals at Digital Repository @ Maurer Law. It has been accepted for inclusion in Federal Communications Law Journal by an authorized editor of Digital Repository @ Maurer Law. For more information, please contact [email protected]. The Terrorist Is A Star!: Regulating Media Coverage of Publicity-Seeking Crimes Michelle Ward Ghetti* I. P REFA CE ...................................................................................482 II. INTRODUCTION ......................................................................... 487 III. THE PROBLEM OF MEDIA COVERAGE OF PUBLICITY-SEEKING CRIMES ................................................... 490 A . Intimidation ...................................................................... 495 B. Im itation.......................................................................... -

A History of Flight Operations Rules for Common Carriers

AIAA SciTech Forum 10.2514/6.2018-1616 8–12 January 2018, Kissimmee, Florida 2018 AIAA Aerospace Sciences Meeting Extraordinary Care: A History of Flight Operations Rules for Common Carriers Donald L Wood1 and Timothy T Takahashi2 Arizona State Universtiy, Tempe, AZ, 85287-6106 In this work, we cover how the legal concept of the “Common Carrier” applies to commercial aircraft design and operations. We trace the history of this concept from antiquity to the present, specifically detailing the change history regarding Federal Regulation of Aircraft. We note that the Civil Aeronautics Act of 1938 was a milestone event; since these laws were amended, scheduled commercial aircraft (both design and operation) regulations became more stringent than those used in “general aviation.” We also document how the focus of litigation and additional regulation has shifted from fundamental performance and safety (fuel reserves, runway requirements, and obstacle avoidance) to protecting the passenger from incidental injury (trips, slips and falls). I. Introduction OMMERCIAL AIR TRAVEL plays a substantial role in United States political economy. Beginning with C rickety, canvas wrapped, wood and wire contraptions, commercial passenger aircraft have matured into the high performance jets that are capable of crossing vast oceans in a matter of hours. Fare paying customers enjoy the convenience offered by this expedient and safe form of travel by the millions each year. In fact, the U.S. Bureau of Transportation Statistics noted that 2015 resulted in nearly 900 million passengers traveling by commercial air carriers, a new all-time high. This relatively safe travel environment is the result of many a lesson learned in the air travel industry, often at the cost of bloodshed and innocent lives lost. -

K0572 Walter W

THE STATE HISTORCIAL SOCIETY OF MISSOURI RESEARCH CENTER-KANSAS CITY K0572 Walter W. Davis (1922-2011) Papers 1948-2007 1 cubic foot Davis was a flight engineer with Trans World Airlines (TWA). Includes newsletters, publications, clippings, handbooks, and reports, and employee agreements, an employee grievance pamphlet, a retirement handbook, and a copy of a legal Motion for Intervention filed by Flight Engineers BIOGRAPHY: Walter W. Davis was born in Hemple, Missouri. He graduated from Brookfield, Missouri High School, and then studied at the Missouri Aviation Institute in Kansas City, Missouri. Davis was first employed by Curtis Wright in St. Louis, Missouri, working on the early manufacture of the C46. He moved to Kansas City and worked on the B25’s, progressing to Tulsa, Oklahoma and 4- engine B24’s. Davis entered the U.S. Navy in 1944 and served as an Aviation Machinist Mate until 1946. He joined TWA in 1946 and retired in 1984 as a flight engineer. Davis flew both domestic and international flights, on all airplanes requiring a Flight Engineer C54/747. In 1976 Walter Davis was awarded Flight Engineer of the Year from the Chicago domicile. After his retirement, Davis became involved in the restoration of the Lockheed Constellation “Connie” in Kansas City. During the 15 years he worked with the “Connie”, he was made “Designated Flight Engineer” by the F.A.A. He conducted yearly recurrent classes and personal instruction to new flight engineers for the “Connie.” In 1996, Davis received the Charles Taylor Master Mechanics award, presented to those with 50 years aircraft mechanic experience. -

Dear All, As Requested, My Humble Info...Amardeep Kaur

From: "AMARDEEP KAUR" <[email protected]> Date: March 12, 2001 12:15:08 AM PST To: [email protected], [email protected], [email protected], [email protected], [email protected], [email protected], [email protected] Subject: Re:Flight Plan Dear All, As requested, my humble info...... Amardeep Kaur Age: 26 Highest Qualification : Law Degree from the University of Leicester, UK. Called to the Malaysian Bar in 1998. Currently employed by the Kuala Lumpur Stock Exchange in a Legal Department. Office Add : Malaysian Central Depository 6th Floor, Exchange Square Bukit Kewangan 50200 Kuala Lumpur Malaysia Am moving houses soon, hence office add is more permanent. Cell no : (+6012) 297-0677 deep Santokh Singh <[email protected]> 03/11/01 06:54PM >>> Crew I completely understand Parmjit's situation - something which is difficult for those who are unfamiliar with recent happenings amongst the Sikh community. The Sikh psyche has been under attack since the late 1970s. The culmination was the pinning of the blame of an airliner crash on our Nation. Parmjit will continue to help in whatever way he can, which he feels will not endanger his family. Given time, we will all recover our confidence and trust. Santokh Singh Maan Born 10-8-1944 in Agra, India. Schooling in Singapore. Flying training in 1964, AST, Perth, Scotland. Co-pilot 1966 - 1971 Malaysia Singapore Airlines 1966 DC3, F27 1967 De Havilland Comet 4 1968 - 1971 Boeing 707 Captain 1972 - 1986 Singapore Airlines 1972 F27 1973 Boeing 737 (Instructor) 1974 Boeing 707 (Instructor) 1976 - 1986 Boeing 747 (Line Instructor) 1986 Migrated to the Netherlands. -

Recent Developments in Aviation Cases David L

Journal of Air Law and Commerce Volume 47 | Issue 1 Article 2 1981 Recent Developments in Aviation Cases David L. Campbell Darrell K. Cherry Follow this and additional works at: https://scholar.smu.edu/jalc Recommended Citation David L. Campbell et al., Recent Developments in Aviation Cases, 47 J. Air L. & Com. 1 (1981) https://scholar.smu.edu/jalc/vol47/iss1/2 This Article is brought to you for free and open access by the Law Journals at SMU Scholar. It has been accepted for inclusion in Journal of Air Law and Commerce by an authorized administrator of SMU Scholar. For more information, please visit http://digitalrepository.smu.edu. RECENT DEVELOPMENTS IN AVIATION CASES DAVID L. CAMPBELL* DARRELL K. CHERRY** I. INTRODUCTION H AVING REVIEWED several hundred decisions since the report of 1980, the principal impression as to any overall conclusion in the trend of jurisprudence is the rather phenom- enal success that the defense seems to have obtained in the last year in aviation litigation. This has been a particularly good year for manufacturers, and component manufacturers, with several successful motions for summary judgment. Simi- larly, there has been continued success for defendants in the enforcement of contractual waivers between parties of rela- tively equal status. As this article will show, while there has been an occasional deviation, the Second Circuit has recently followed the Fifth and Ninth Circuits in important decisions in such contractual enforcements. The year 1980 also proved to be ripe for cases involving im- plied causes of action arising out of aviation statutes. While * D. -

Donald Chance Mark, Jr

Donald Chance Mark, Jr. Shareholder | [email protected] Donald Chance Mark, Jr. is a founding member of FMJ with more than 35 years of experience handling general litigation, aviation litigation, employment litigation, products liability, commercial litigation and medical malpractice defense matters. A graduate of St. Olaf College, with a Bachelor of Arts degree, Don received his Juris Doctorate from Vanderbilt University School of Law. Don is certified as a Trial Advocate by the National Board of Trial Advocacy and as a Civil Trial Specialist by the Minnesota State Bar Association. He has been named one of Minnesota’s “Super Lawyers,” and has been selected by his peers as a “Leading American Attorney.” He has also been named one of Minnesota’s “Top 100 Lawyers.” A well-known lecturer and presenter, Don has spoken on personal injury, tort reform and civil trial practice for many Minnesota Continuing Legal Education seminars. Respected nationally and internationally, Don heads up FMJ’s litigation and unmanned aircraft systems practices. In 2014, Don was asked to testify before the Minnesota Senate and House Judiciary and Civil Law Committee in a joint hearing on the topic of unmanned aircraft systems (UAS). The hearing covered public, private and commercial usage of UAS and the data they collect. Don addressed UAS regulations at state and federal levels, uses of UAS and data gathered by them, as well as current and future legal issues. Practice Areas: Litigation Transportation & Logistics HR & Employment Intellectual Property Insurance -

Raid on Hijacked Plane Kills Dozens SPORTS Most Passengers on the Plaae, Com- the Body from the Plane Onto the Andeered Saturday Over Greece with 97 Tarmac

Jets win in OT; Giants also triumph Social Security Cloudy Highs around 50. Game Rain likely. 4B Complete forecast The Register pag«ZA Vol. 108 No. 78 YOUR HOMETOWN' NEWSPAPER SINCE 1878 MONDAY, NOVEMBER 25, 1985 ?5 CENTS INSIDE Raid on hijacked plane kills dozens SPORTS Most passengers on the plaae, com- the body from the plane onto the andeered Saturday over Greece with 97 tarmac. Tht Associated Press people aboard and diverted to Malta, Gala) earlier told authorities by VALLETTA, Malta - Egyptian com- were Egyptians or Greeks. One of the radio that the hijackers had killed mando* stormed a hijacked Egyptalr three American passengers was killed seven people. jetliner last night and shots, explosions and the other two were wounded and As the commandos stormed the and a fire during the assault killed as thrown from the plane by the hijackers plane, Galal killed the hijack leader many as 90 people, a government before the commando assault with an axe, Mifsud said. Both the pilot spokesman said. Malta's state-run television said and the co-pilot were wounded in the "There are about 90 dead by bullet about SO people were on the jetliner struggle. or fire," spokesman Paul Mlfsud told when the commandos attacked. It said Joel Levy, the deputy U.S. Embassy reporters two hoars after the assault. IS wounded people were taken to chief, told reporters the commandos He Mid the hijackers hurled hand hospitals. attacked at 8:20 p.m. (2:20 p.m. EST), grenades at the passengers when they Mlfsud Hid Prime Minister Carmelo about 24 hours after the jetliner was realised the Boeing 737 was being Mlfsud Bonnici authorized the assault seized while en route from Athens, stormed, and the resulting fire de- because "the situation was getting out Greece, to Cairo, Egypt. -

Donald Chance Mark, Jr

Donald Chance Mark, Jr. Shareholder | [email protected] Donald Chance Mark, Jr. is a founding member of FMJ with more than 35 years of experience handling general litigation, aviation litigation, employment litigation, products liability, commercial litigation and medical malpractice defense matters. A graduate of St. Olaf College, with a Bachelor of Arts degree, Don received his Juris Doctorate from Vanderbilt University School of Law. Don is certified as a Trial Advocate by the National Board of Trial Advocacy and as a Civil Trial Specialist by the Minnesota State Bar Association. He has been named one of Minnesota’s “Super Lawyers,” and has been selected by his peers as a “Leading American Attorney.” He has also been named one of Minnesota’s “Top 100 Lawyers.” A well-known lecturer and presenter, Don has spoken on personal injury, tort reform and civil trial practice for many Minnesota Continuing Legal Education seminars. Respected nationally and internationally, Don heads up FMJ’s litigation and unmanned aircraft systems practices. In 2014, Don was asked to testify before the Minnesota Senate and House Judiciary and Civil Law Committee in a joint hearing on the topic of unmanned aircraft systems (UAS). The hearing covered public, private and commercial usage of UAS and the data they collect. Don addressed UAS regulations at state and federal levels, uses of UAS and data gathered by them, as well as current and future legal issues. Practice Areas: Litigation Products Liability Transportation & Logistics HR & Employment Intellectual -

Wrestlers Self, but the Process of Gaining 9

~-------------------------------------------- 1 Apartheid - page 6 VOL XX, NO. 59 TUESDAY, NOVEMBER 26, 1985 ~- . an independent student newspaper serving Notre Dame and Saint Mary's Police hunt Egypt ·claims for suspects rescue effort in base store on airplane car bombing was justified As8oclakd Press Associated Press FRANKFURT, West Germany - VALLETTA, Malta - Egypt said yes Two men believed to be carrying terday it sent commandos storming Moroccan passports became prime into a hijacked jetliner to avert a suspects yesterday in the hunt for massacre, but the hijackers those who bombed a U.S. military responded with fire grenades that shopping center, West German aut turned the plane into a blazing coffin h~rities reported. for scores of passengers. Sunday's car bombing wounded Nine of the 59 victims were 35 people, ·almost all of them U.S. children. One of the five hijackers civilians and servicemen. Three survived the assault on the Egyptair remained hospitalized yesterday, jet and underwent surgery at a one a serviceman who had been hospital, said Paul Mifsud, the Mal listed in serious condition but was tese government spokesman. upgraded to good condition yester Prime Minister Carmelo Mifsud day. Bonnici of Malta said he approved Officials have refused to release the raid because "we wanted to the names of the injured. show we would not give in." Authorities first had said the at "The Egyptian forces assured us tack looked like the work of West that this would be a quick operation German leftist terrorists. Later they ... and that the assault would come said foreigners might have been to a good ending," he said.