Openspiel: a Framework for Reinforcement Learning in Games

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Determinants of Efficient Behavior in Coordination Games

The Determinants of Efficient Behavior in Coordination Games Pedro Dal B´o Guillaume R. Fr´echette Jeongbin Kim* Brown University & NBER New York University Caltech May 20, 2020 Abstract We study the determinants of efficient behavior in stag hunt games (2x2 symmetric coordination games with Pareto ranked equilibria) using both data from eight previous experiments on stag hunt games and data from a new experiment which allows for a more systematic variation of parameters. In line with the previous experimental literature, we find that subjects do not necessarily play the efficient action (stag). While the rate of stag is greater when stag is risk dominant, we find that the equilibrium selection criterion of risk dominance is neither a necessary nor sufficient condition for a majority of subjects to choose the efficient action. We do find that an increase in the size of the basin of attraction of stag results in an increase in efficient behavior. We show that the results are robust to controlling for risk preferences. JEL codes: C9, D7. Keywords: Coordination games, strategic uncertainty, equilibrium selection. *We thank all the authors who made available the data that we use from previous articles, Hui Wen Ng for her assistance running experiments and Anna Aizer for useful comments. 1 1 Introduction The study of coordination games has a long history as many situations of interest present a coordination component: for example, the choice of technologies that require a threshold number of users to be sustainable, currency attacks, bank runs, asset price bubbles, cooper- ation in repeated games, etc. In such examples, agents may face strategic uncertainty; that is, they may be uncertain about how the other agents will respond to the multiplicity of equilibria, even when they have complete information about the environment. -

Lecture 4 Rationalizability & Nash Equilibrium Road

Lecture 4 Rationalizability & Nash Equilibrium 14.12 Game Theory Muhamet Yildiz Road Map 1. Strategies – completed 2. Quiz 3. Dominance 4. Dominant-strategy equilibrium 5. Rationalizability 6. Nash Equilibrium 1 Strategy A strategy of a player is a complete contingent-plan, determining which action he will take at each information set he is to move (including the information sets that will not be reached according to this strategy). Matching pennies with perfect information 2’s Strategies: HH = Head if 1 plays Head, 1 Head if 1 plays Tail; HT = Head if 1 plays Head, Head Tail Tail if 1 plays Tail; 2 TH = Tail if 1 plays Head, 2 Head if 1 plays Tail; head tail head tail TT = Tail if 1 plays Head, Tail if 1 plays Tail. (-1,1) (1,-1) (1,-1) (-1,1) 2 Matching pennies with perfect information 2 1 HH HT TH TT Head Tail Matching pennies with Imperfect information 1 2 1 Head Tail Head Tail 2 Head (-1,1) (1,-1) head tail head tail Tail (1,-1) (-1,1) (-1,1) (1,-1) (1,-1) (-1,1) 3 A game with nature Left (5, 0) 1 Head 1/2 Right (2, 2) Nature (3, 3) 1/2 Left Tail 2 Right (0, -5) Mixed Strategy Definition: A mixed strategy of a player is a probability distribution over the set of his strategies. Pure strategies: Si = {si1,si2,…,sik} σ → A mixed strategy: i: S [0,1] s.t. σ σ σ i(si1) + i(si2) + … + i(sik) = 1. If the other players play s-i =(s1,…, si-1,si+1,…,sn), then σ the expected utility of playing i is σ σ σ i(si1)ui(si1,s-i) + i(si2)ui(si2,s-i) + … + i(sik)ui(sik,s-i). -

Graph Traversals

Graph Traversals CS200 - Graphs 1 Tree traversal reminder Pre order A A B D G H C E F I In order B C G D H B A E C F I Post order D E F G H D B E I F C A Level order G H I A B C D E F G H I Connected Components n The connected component of a node s is the largest set of nodes reachable from s. A generic algorithm for creating connected component(s): R = {s} while ∃edge(u, v) : u ∈ R∧v ∉ R add v to R n Upon termination, R is the connected component containing s. q Breadth First Search (BFS): explore in order of distance from s. q Depth First Search (DFS): explores edges from the most recently discovered node; backtracks when reaching a dead- end. 3 Graph Traversals – Depth First Search n Depth First Search starting at u DFS(u): mark u as visited and add u to R for each edge (u,v) : if v is not marked visited : DFS(v) CS200 - Graphs 4 Depth First Search A B C D E F G H I J K L M N O P CS200 - Graphs 5 Question n What determines the order in which DFS visits nodes? n The order in which a node picks its outgoing edges CS200 - Graphs 6 DepthGraph Traversalfirst search algorithm Depth First Search (DFS) dfs(in v:Vertex) mark v as visited for (each unvisited vertex u adjacent to v) dfs(u) n Need to track visited nodes n Order of visiting nodes is not completely specified q if nodes have priority, then the order may become deterministic for (each unvisited vertex u adjacent to v in priority order) n DFS applies to both directed and undirected graphs n Which graph implementation is suitable? CS200 - Graphs 7 Iterative DFS: explicit Stack dfs(in v:Vertex) s – stack for keeping track of active vertices s.push(v) mark v as visited while (!s.isEmpty()) { if (no unvisited vertices adjacent to the vertex on top of the stack) { s.pop() //backtrack else { select unvisited vertex u adjacent to vertex on top of the stack s.push(u) mark u as visited } } CS200 - Graphs 8 Breadth First Search (BFS) n Is like level order in trees A B C D n Which is a BFS traversal starting E F G H from A? A. -

Evolutionary Game Theory: ESS, Convergence Stability, and NIS

Evolutionary Ecology Research, 2009, 11: 489–515 Evolutionary game theory: ESS, convergence stability, and NIS Joseph Apaloo1, Joel S. Brown2 and Thomas L. Vincent3 1Department of Mathematics, Statistics and Computer Science, St. Francis Xavier University, Antigonish, Nova Scotia, Canada, 2Department of Biological Sciences, University of Illinois, Chicago, Illinois, USA and 3Department of Aerospace and Mechanical Engineering, University of Arizona, Tucson, Arizona, USA ABSTRACT Question: How are the three main stability concepts from evolutionary game theory – evolutionarily stable strategy (ESS), convergence stability, and neighbourhood invader strategy (NIS) – related to each other? Do they form a basis for the many other definitions proposed in the literature? Mathematical methods: Ecological and evolutionary dynamics of population sizes and heritable strategies respectively, and adaptive and NIS landscapes. Results: Only six of the eight combinations of ESS, convergence stability, and NIS are possible. An ESS that is NIS must also be convergence stable; and a non-ESS, non-NIS cannot be convergence stable. A simple example shows how a single model can easily generate solutions with all six combinations of stability properties and explains in part the proliferation of jargon, terminology, and apparent complexity that has appeared in the literature. A tabulation of most of the evolutionary stability acronyms, definitions, and terminologies is provided for comparison. Key conclusions: The tabulated list of definitions related to evolutionary stability are variants or combinations of the three main stability concepts. Keywords: adaptive landscape, convergence stability, Darwinian dynamics, evolutionary game stabilities, evolutionarily stable strategy, neighbourhood invader strategy, strategy dynamics. INTRODUCTION Evolutionary game theory has and continues to make great strides. -

Lecture Notes

GRADUATE GAME THEORY LECTURE NOTES BY OMER TAMUZ California Institute of Technology 2018 Acknowledgments These lecture notes are partially adapted from Osborne and Rubinstein [29], Maschler, Solan and Zamir [23], lecture notes by Federico Echenique, and slides by Daron Acemoglu and Asu Ozdaglar. I am indebted to Seo Young (Silvia) Kim and Zhuofang Li for their help in finding and correcting many errors. Any comments or suggestions are welcome. 2 Contents 1 Extensive form games with perfect information 7 1.1 Tic-Tac-Toe ........................................ 7 1.2 The Sweet Fifteen Game ................................ 7 1.3 Chess ............................................ 7 1.4 Definition of extensive form games with perfect information ........... 10 1.5 The ultimatum game .................................. 10 1.6 Equilibria ......................................... 11 1.7 The centipede game ................................... 11 1.8 Subgames and subgame perfect equilibria ...................... 13 1.9 The dollar auction .................................... 14 1.10 Backward induction, Kuhn’s Theorem and a proof of Zermelo’s Theorem ... 15 2 Strategic form games 17 2.1 Definition ......................................... 17 2.2 Nash equilibria ...................................... 17 2.3 Classical examples .................................... 17 2.4 Dominated strategies .................................. 22 2.5 Repeated elimination of dominated strategies ................... 22 2.6 Dominant strategies .................................. -

Econstor Wirtschaft Leibniz Information Centre Make Your Publications Visible

A Service of Leibniz-Informationszentrum econstor Wirtschaft Leibniz Information Centre Make Your Publications Visible. zbw for Economics Banerjee, Abhijit; Weibull, Jörgen W. Working Paper Evolution and Rationality: Some Recent Game- Theoretic Results IUI Working Paper, No. 345 Provided in Cooperation with: Research Institute of Industrial Economics (IFN), Stockholm Suggested Citation: Banerjee, Abhijit; Weibull, Jörgen W. (1992) : Evolution and Rationality: Some Recent Game-Theoretic Results, IUI Working Paper, No. 345, The Research Institute of Industrial Economics (IUI), Stockholm This Version is available at: http://hdl.handle.net/10419/95198 Standard-Nutzungsbedingungen: Terms of use: Die Dokumente auf EconStor dürfen zu eigenen wissenschaftlichen Documents in EconStor may be saved and copied for your Zwecken und zum Privatgebrauch gespeichert und kopiert werden. personal and scholarly purposes. Sie dürfen die Dokumente nicht für öffentliche oder kommerzielle You are not to copy documents for public or commercial Zwecke vervielfältigen, öffentlich ausstellen, öffentlich zugänglich purposes, to exhibit the documents publicly, to make them machen, vertreiben oder anderweitig nutzen. publicly available on the internet, or to distribute or otherwise use the documents in public. Sofern die Verfasser die Dokumente unter Open-Content-Lizenzen (insbesondere CC-Lizenzen) zur Verfügung gestellt haben sollten, If the documents have been made available under an Open gelten abweichend von diesen Nutzungsbedingungen die in der dort Content Licence (especially Creative Commons Licences), you genannten Lizenz gewährten Nutzungsrechte. may exercise further usage rights as specified in the indicated licence. www.econstor.eu Ind ustriens Utred n i ngsi nstitut THE INDUSTRIAL INSTITUTE FOR ECONOMIC AND SOCIAL RESEARCH A list of Working Papers on the last pages No. -

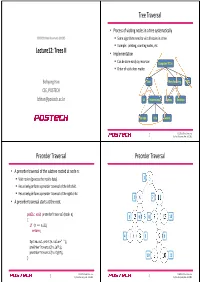

Lecture12: Trees II Tree Traversal Preorder Traversal Preorder Traversal

Tree Traversal • Process of visiting nodes in a tree systematically CSED233: Data Structures (2013F) . Some algorithms need to visit all nodes in a tree. Example: printing, counting nodes, etc. Lecture12: Trees II • Implementation . Can be done easily by recursion Computers”R”Us . Order of visits does matter. Bohyung Han Sales Manufacturing R&D CSE, POSTECH [email protected] US International Laptops Desktops Europe Asia Canada CSED233: Data Structures 2 by Prof. Bohyung Han, Fall 2013 Preorder Traversal Preorder Traversal • A preorder traversal of the subtree rooted at node n: . Visit node n (process the node's data). 1 . Recursively perform a preorder traversal of the left child. Recursively perform a preorder traversal of the right child. 2 7 • A preorder traversal starts at the root. public void preOrderTraversal(Node n) 3 6 8 12 { if (n == null) return; 4 5 9 System.out.print(n.value+" "); preOrderTraversal(n.left); preOrderTraversal(n.right); 10 11 } CSED233: Data Structures CSED233: Data Structures 3 by Prof. Bohyung Han, Fall 2013 4 by Prof. Bohyung Han, Fall 2013 Inorder Traversal Inorder Traversal • A preorder traversal of the subtree rooted at node n: . Recursively perform a preorder traversal of the left child. 6 . Visit node n (process the node's data). Recursively perform a preorder traversal of the right child. 4 11 • An iorder traversal starts at the root. public void inOrderTraversal(Node n) 2 5 7 12 { if (n == null) return; 1 3 9 inOrderTraversal(n.left); System.out.print(n.value+" "); inOrderTraversal(n.right); } 8 10 CSED233: Data Structures CSED233: Data Structures 5 by Prof. -

Introduction to Game Theory Lecture 1: Strategic Game and Nash Equilibrium

Game Theory and Rational Choice Strategic-form Games Nash Equilibrium Examples (Non-)Strict Nash Equilibrium . Introduction to Game Theory Lecture 1: Strategic Game and Nash Equilibrium . Haifeng Huang University of California, Merced Shanghai, Summer 2011 . • Rational choice: the action chosen by a decision maker is better or at least as good as every other available action, according to her preferences and given her constraints (e.g., resources, information, etc.). • Preferences (偏好) are rational if they satisfy . Completeness (完备性): between any x and y in a set, x ≻ y (x is preferred to y), y ≻ x, or x ∼ y (indifferent) . Transitivity(传递性): x ≽ y and y ≽ z )x ≽ z (≽ means ≻ or ∼) ) Say apple ≻ banana, and banana ≻ orange, then apple ≻ orange Game Theory and Rational Choice Strategic-form Games Nash Equilibrium Examples (Non-)Strict Nash Equilibrium Rational Choice and Preference Relations • Game theory studies rational players’ behavior when they engage in strategic interactions. • Preferences (偏好) are rational if they satisfy . Completeness (完备性): between any x and y in a set, x ≻ y (x is preferred to y), y ≻ x, or x ∼ y (indifferent) . Transitivity(传递性): x ≽ y and y ≽ z )x ≽ z (≽ means ≻ or ∼) ) Say apple ≻ banana, and banana ≻ orange, then apple ≻ orange Game Theory and Rational Choice Strategic-form Games Nash Equilibrium Examples (Non-)Strict Nash Equilibrium Rational Choice and Preference Relations • Game theory studies rational players’ behavior when they engage in strategic interactions. • Rational choice: the action chosen by a decision maker is better or at least as good as every other available action, according to her preferences and given her constraints (e.g., resources, information, etc.). -

Efficient Stackless Hierarchy Traversal on Gpus with Backtracking in Constant Time

High Performance Graphics (2016) Ulf Assarsson and Warren Hunt (Editors) Efficient Stackless Hierarchy Traversal on GPUs with Backtracking in Constant Time Nikolaus Binder† and Alexander Keller‡ NVIDIA Corporation 1 1 1 2 3 2 3 2 3 4 5 6 7 4 5 6 7 4 5 6 7 10 11 10 11 10 11 22 23 22 23 22 23 a) b) c) 44 45 44 45 44 45 Figure 1: Backtracking from the current node with key 22 to the next node with key 3, which has been postponed most recently, by a) iterating along parent and sibling references, b) using references to uncle and grand uncle, c) using a stack, or as we propose, a perfect hash directly mapping the key for the next node to its address without using a stack. The heat maps visualize the reduction in the number of backtracking steps. Abstract The fastest acceleration schemes for ray tracing rely on traversing a bounding volume hierarchy (BVH) for efficient culling and use backtracking, which in the worst case may expose cost proportional to the depth of the hierarchy in either time or state memory. We show that the next node in such a traversal actually can be determined in constant time and state memory. In fact, our newly proposed parallel software implementation requires only a few modifications of existing traversal methods and outperforms the fastest stack-based algorithms on GPUs. In addition, it reduces memory access during traversal, making it a very attractive building block for ray tracing hardware. Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Picture/Image Generation—Ray Trac- ing 1. -

Introduction to Game Theory I

Introduction to Game Theory I Presented To: 2014 Summer REU Penn State Department of Mathematics Presented By: Christopher Griffin [email protected] 1/34 Types of Game Theory GAME THEORY Classical Game Combinatorial Dynamic Game Evolutionary Other Topics in Theory Game Theory Theory Game Theory Game Theory No time, doesn't contain differential equations Notion of time, may contain differential equations Games with finite or Games with no Games with time. Modeling population Experimental / infinite strategy space, chance. dynamics under Behavioral Game but no time. Games with motion or competition Theory Generally two player a dynamic component. Games with probability strategic games Modeling the evolution Voting Theory (either induced by the played on boards. Examples: of strategies in a player or the game). Optimal play in a dog population. Examples: Moves change the fight. Chasing your Determining why Games with coalitions structure of a game brother across a room. Examples: altruism is present in or negotiations. board. The evolution of human society. behaviors in a group of Examples: Examples: animals. Determining if there's Poker, Strategic Chess, Checkers, Go, a fair voting system. Military Decision Nim. Equilibrium of human Making, Negotiations. behavior in social networks. 2/34 Games Against the House The games we often see on television fall into this category. TV Game Shows (that do not pit players against each other in knowledge tests) often require a single player (who is, in a sense, playing against The House) to make a decision that will affect only his life. Prize is Behind: 1 2 3 Choose Door: 1 2 3 1 2 3 1 2 3 Switch: Y N Y N Y N Y N Y N Y N Y N Y N Y N Win/Lose: L W W L W L W L L W W L W L W L L W The Monty Hall Problem Other games against the house: • Blackjack • The Price is Right 3/34 Assumptions of Multi-Player Games 1. -

MA/CSSE 473 Day 15

MA/CSSE 473 Day 15 BFS Topological Sort Combinatorial Object Generation MA/CSSE 473 Day 15 • HW 6 due tomorrow, HW 7 Friday, Exam next Tuesday • HW 8 has been updated for this term. Due Oct 8 • ConvexHull implementation problem due Day 27 (Oct 21); assignment is available now – Individual assignment (I changed my mind, but I am giving you 3.5 weeks to do it) • Schedule page now has my projected dates for all remaining reading assignments and written assignments – Topics for future class days and details of assignments 9-17 still need to be updated for this term • Student Questions • DFS and BFS • Topological Sort • Combinatorial Object Generation - Intro Q1 1 Recap: Pseudocode for DFS Graph may not be connected, so we loop. Backtracking happens when this loop ends (no more unmarked neighbors) Analysis? Q2 Notes on DFS • DFS can be implemented with graphs represented as: – adjacency matrix: Θ(|V| 2) – adjacency list: Θ(| V|+|E|) • Yields two distinct ordering of vertices: – order in which vertices are first encountered (pushed onto stack) – order in which vertices become dead-ends (popped off stack) • Applications: – check connectivity, finding connected components – Is this graph acyclic? – finding articulation points, if any (advanced) – searching the state-space of problem for (optimal) solution (AI) Q3 2 Breadth-first search (BFS) • Visits graph vertices by moving across to all the neighbors of last visited vertex. Vertices closer to the start are visited early • Instead of a stack, BFS uses a queue • Level-order tree traversal is -

The Conservation Game ⇑ Mark Colyvan A, , James Justus A,B, Helen M

Biological Conservation 144 (2011) 1246–1253 Contents lists available at ScienceDirect Biological Conservation journal homepage: www.elsevier.com/locate/biocon Special Issue Article: Adaptive management for biodiversity conservation in an uncertain world The conservation game ⇑ Mark Colyvan a, , James Justus a,b, Helen M. Regan c a Sydney Centre for the Foundations of Science, University of Sydney, Sydney, NSW 2006, Australia b Department of Philosophy, Florida State University, Tallahassee, FL 32306, USA c Department of Biology, University of California Riverside, Riverside, CA 92521, USA article info abstract Article history: Conservation problems typically involve groups with competing objectives and strategies. Taking effec- Received 16 December 2009 tive conservation action requires identifying dependencies between competing strategies and determin- Received in revised form 20 September 2010 ing which action optimally achieves the appropriate conservation goals given those dependencies. We Accepted 7 October 2010 show how several real-world conservation problems can be modeled game-theoretically. Three types Available online 26 February 2011 of problems drive our analysis: multi-national conservation cooperation, management of common-pool resources, and games against nature. By revealing the underlying structure of these and other problems, Keywords: game-theoretic models suggest potential solutions that are often invisible to the usual management pro- Game theory tocol: decision followed by monitoring, feedback and revised decisions. The kind of adaptive manage- Adaptive management Stag hunt ment provided by the game-theoretic approach therefore complements existing adaptive management Tragedy of the commons methodologies. Games against nature Ó 2010 Elsevier Ltd. All rights reserved. Common-pool resource management Multi-national cooperation Conservation management Resource management Prisoners’ dilemma 1.