The Future of Truth and Misinformation Online: Experts Are Evenly Split on Whether the Coming Decade Will See a Reduction in False and Misleading Narratives Online

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Solution for Elder Bullying

“LIKE MEAN GIRLS, BUT EVERYONE IS EIGHTY”: A SOLUTION FOR ELDER BULLYING Brittany Wiegand* Bullying has long been an adolescent issue. With the elderly population ever-growing, however, so too does the incidence of elder bullying. Bullying behaviors often occur in small group settings with members who interact regularly, such as in schools. Senior living communities also fit this description. Bullies in senior communities may engage in verbal and even physical abuse; in the worst cases, bullying can be fatal. Instances of bullying among older adults are likely to increase in frequency as this population grows. This Note evaluates current federal and state laws that bullying victims might use to seek redress. It argues that current state laws should be amended to include seniors by aligning laws with research-based definitions of bullying. This Note also recommends providing a private right of action and implementing research-based programming in communal living centers. I. Introduction Flipped tables and false accusations: two unlikely scenes from a nursing home. Though one might expect something like this to occur at a middle school, each is only a minor component of one woman’s experience in an elderly living community where she faced a torrent of physical and verbal abuse from other residents due to her sexual orientation.1 Brittany Wiegand is the Editor‐in‐Chief 2019–2020, Member 2018–2019, The Elder Law Journal; J.D. 2020, University of Illinois, Urbana‐Champaign; M.A. 2011, Curriculum & Instruction, Louisiana State University; B.A. -

Words That Work: It's Not What You Say, It's What People Hear

"Frank Luntz understands the power of words to move public opinion and communicate big ideas. Any Democrat who writes off his analysis and decades of experience just because he works for the other side is making a big mistake. His lessons don't have a party label. The only question is, where's our Frank Luntz?" Why are some people so much better than others at talking their way into a job or out of trouble? What makes some advertising jingles cut through the clutter of our crowded memories? What's behind winning campaign slogans and career-ending political blunders? Why do some speeches resonate and endure while others are forgotten moments after they are given? The answers lie in the way words are used to influence and motivate, the way they connect thought and emotion. And no person knows more about the intersection of words and deeds than language architect and public-opinion guru Dr. Frank Luntz. In Words That Work, Dr. Luntz not only raises the curtain on the craft of effective language, but also offers priceless insight on how to find and use the right words to get what you want out of life. -

Fake News and Propaganda: a Critical Discourse Research Perspective

Open Information Science 2019; 3: 197–208 Research article Iulian Vamanu* Fake News and Propaganda: A Critical Discourse Research Perspective https://doi.org/10.1515/opis-2019-0014 Received September 25, 2018; accepted May 9, 2019 Abstract: Having been invoked as a disturbing factor in recent elections across the globe, fake news has become a frequent object of inquiry for scholars and practitioners in various fields of study and practice. My article draws intellectual resources from Library and Information Science, Communication Studies, Argumentation Theory, and Discourse Research to examine propagandistic dimensions of fake news and to suggest possible ways in which scientific research can inform practices of epistemic self-defense. Specifically, the article focuses on a cluster of fake news of potentially propagandistic import, employs a framework developed within Argumentation Theory to explore ten ways in which fake news may be used as propaganda, and suggests how Critical Discourse Research, an emerging cluster of theoretical and methodological approaches to discourses, may provide people with useful tools for identifying and debunking fake news stories. My study has potential implications for further research and for literacy practices. In particular, it encourages empirical studies of its guiding premise that people who became familiar with certain research methods are less susceptible to fake news. It also contributes to the design of effective research literacy practices. Keywords: post-truth, literacy, scientific research, discourse studies, persuasion “Don’t be so overly dramatic about it, Chuck. You’re saying it’s a falsehood [...] Sean Spicer, our press secretary, gave alternative facts to that.” (Kellyanne Conway, Counselor to the U.S. -

Leaving Reality Behind Etoy Vs Etoys Com Other Battles to Control Cyberspace By: Adam Wishart Regula Bochsler ISBN: 0066210763 See Detail of This Book on Amazon.Com

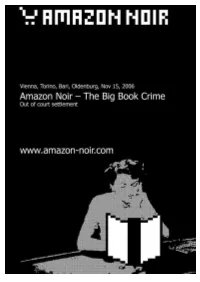

Leaving Reality Behind etoy vs eToys com other battles to control cyberspace By: Adam Wishart Regula Bochsler ISBN: 0066210763 See detail of this book on Amazon.com Book served by AMAZON NOIR (www.amazon-noir.com) project by: PAOLO CIRIO paolocirio.net UBERMORGEN.COM ubermorgen.com ALESSANDRO LUDOVICO neural.it Page 1 discovering a new toy "The new artist protests, he no longer paints." -Dadaist artist Tristan Tzara, Zh, 1916 On the balmy evening of June 1, 1990, fleets of expensive cars pulled up outside the Zurich Opera House. Stepping out and passing through the pillared porticoes was a Who's Who of Swiss society-the head of state, national sports icons, former ministers and army generals-all of whom had come to celebrate the sixty-fifth birthday of Werner Spross, the owner of a huge horticultural business empire. As one of Zurich's wealthiest and best-connected men, it was perhaps fitting that 650 of his "close friends" had been invited to attend the event, a lavish banquet followed by a performance of Romeo and Juliet. Defiantly greeting the guests were 200 demonstrators standing in the square in front of the opera house. Mostly young, wearing scruffy clothes and sporting punky haircuts, they whistled and booed, angry that the opera house had been sold out, allowing itself for the first time to be taken over by a rich patron. They were also chanting slogans about the inequity of Swiss society and the wealth of Spross's guests. The glittering horde did its very best to ignore the disturbance. The protest had the added significance of being held on the tenth anniversary of the first spark of the city's most explosive youth revolt of recent years, The Movement. -

Dog Whistling Far-Right Code Words: the Case of 'Culture

Information, Communication & Society ISSN: (Print) (Online) Journal homepage: https://www.tandfonline.com/loi/rics20 Dog whistling far-right code words: the case of ‘culture enricher’ on the Swedish web Mathilda Åkerlund To cite this article: Mathilda Åkerlund (2021): Dog whistling far-right code words: the case of ‘culture enricher’ on the Swedish web, Information, Communication & Society, DOI: 10.1080/1369118X.2021.1889639 To link to this article: https://doi.org/10.1080/1369118X.2021.1889639 © 2021 The Author(s). Published by Informa UK Limited, trading as Taylor & Francis Group Published online: 23 Feb 2021. Mathilda Åkerlund, Sociology Department, Umeå University, Umeå, Sweden ABSTRACT This paper uses the Swedish, once neo-Nazi expression culture enricher (Swedish: kulturberikare) as a case study to explore how covert and coded far-right discourse is mainstreamed, over time and across websites. A sample of 2,336 uses of the expression between 1999 and 2020 were analysed using critical discourse analysis. The findings illustrate how the expression works like a ‘dog whistle’ by enabling users to discretely self-identify with an imagined in-group of discontent white ‘Swedes’, while simultaneously showing opposition to the priorities of a generalised ‘establishment’. ARTICLE HISTORY Received 21 August 2020; Accepted 31 January 2021 KEYWORDS Far-right; mainstreaming; dog whistling; critical discourse analysis; coded language use -

Deception, Disinformation, and Strategic Communications: How One Interagency Group Made a Major Difference by Fletcher Schoen and Christopher J

STRATEGIC PERSPECTIVES 11 Deception, Disinformation, and Strategic Communications: How One Interagency Group Made a Major Difference by Fletcher Schoen and Christopher J. Lamb Center for Strategic Research Institute for National Strategic Studies National Defense University The Institute for National Strategic Studies (INSS) is National Defense University’s (NDU’s) dedicated research arm. INSS includes the Center for Strategic Research, Center for Complex Operations, Center for the Study of Chinese Military Affairs, Center for Technology and National Security Policy, Center for Transatlantic Security Studies, and Conflict Records Research Center. The military and civilian analysts and staff who comprise INSS and its subcomponents execute their mission by conducting research and analysis, publishing, and participating in conferences, policy support, and outreach. The mission of INSS is to conduct strategic studies for the Secretary of Defense, Chairman of the Joint Chiefs of Staff, and the Unified Combatant Commands in support of the academic programs at NDU and to perform outreach to other U.S. Government agencies and the broader national security community. Cover: Kathleen Bailey presents evidence of forgeries to the press corps. Credit: The Washington Times Institute for National Strategic Studies Strategic Perspectives, No. 11 Series Editor: Nicholas Rostow National Defense University Press Washington, D.C. June 2012 Opinions, conclusions, and recommendations expressed or implied within are solely those of the contributors and do not necessarily represent the views of the Defense Department or any other agency of the Federal Government. -

Information Warfare, International Law, and the Changing Battlefield

ARTICLE INFORMATION WARFARE, INTERNATIONAL LAW, AND THE CHANGING BATTLEFIELD Dr. Waseem Ahmad Qureshi* ABSTRACT The advancement of technology in the contemporary era has facilitated the emergence of information warfare, which includes the deployment of information as a weapon against an adversary. This is done using a number of tactics such as the use of media and social media to spread propaganda and disinformation against an adversary as well as the adoption of software hacking techniques to spread viruses and malware into the strategically important computer systems of an adversary either to steal confidential data or to damage the adversary’s security system. Due to the intangible nature of the damage caused by the information warfare operations, it becomes challenging for international law to regulate the information warfare operations. The unregulated nature of information operations allows information warfare to be used effectively by states and nonstate actors to gain advantage over their adversaries. Information warfare also enhances the lethality of hybrid warfare. Therefore, it is the need of the hour to arrange a new convention or devise a new set of rules to regulate the sphere of information warfare to avert the potential damage that it can cause to international peace and security. Table of contents: Abstract; I. Introduction; II. What Is Information Warfare? -

The Biological Basis of Human Irrationality

DOCUMENT RESUME ED 119 041 CG 010 346 AUTHOR Ellis, Albert TITLE The Biological Basis of Human Irrationality. PUB DATE 31 Aug 75 NOTE 42p.; Paper presented at the Annual Meeting of the American Psychological Association (83rd, Chicago, Illinois, August 30-September 2, 1975) Reproduced from best copy available EDRS PRICE MF-$0.83 HC-$2.06 Plus Postage DESCRIPTORS *Behavioral Science Research; *Behavior Patterns; *Biological Influences; Individual Psychology; *Psychological Patterns; *Psychological Studies; Psychotherapy; Speeches IDENTIFIERS *Irrationality ABSTRACT If we define irrationality as thought, emotion, or behavior that leads to self-defeating consequences or that significantly interferes with the survival and happiness of the organism, we find that literally hundreds of major irrationalities exist in all societies and in virtually all humans in those societies. These irrationalities persist despite people's conscious determination to change; many of them oppose almost all the teachings of the individuals who follow them; they persist among highly intelligent, educated, and relatively undisturbed individuals; when people give them up, they usually replace them with other, sometimes just as extreme, irrationalities; people who strongly oppose them in principle nonetheless perpetuate them in practice; sharp insight into them or their origin hardly removes them; many of them appear to stem from autistic invention; they often seem to flow from deep-seated and almost ineradicable tendencies toward human fallibility, overgeneralization, wishful thinking, gullibility, prejudice, and short-range hedonism; and they appear at least in part tied up with physiological, hereditary, and constitutional processes. Although we can as yet make no certain or unqualified claim for the biological basis of human irrationality, such a claim now has enough evidence behind it to merit serious consideration. -

“The Future of Free Speech, Trolls, Anonymity and Fake News Online.” Pew Research Center, March 2017

NUMBERS, FACTS AND TRENDS SHAPING THE WORLD FOR RELEASE MARCH 29, 2017 BY Lee Rainie, Janna Anderson, and Jonathan Albright FOR MEDIA OR OTHER INQUIRIES: Lee Rainie, Director, Internet, Science and Technology research Prof. Janna Anderson, Director, Elon University’s Imagining the Internet Center Asst. Prof. Jonathan Albright, Elon University Dana Page, Senior Communications Manager 202.419.4372 www.pewresearch.org RECOMMENDED CITATION: Rainie, Lee, Janna Anderson and Jonathan Albright. “The Future of Free Speech, Trolls, Anonymity and Fake News Online.” Pew Research Center, March 2017. Available at: http://www.pewinternet.org/2017/03/29/the-future-of-free-speech-trolls-anonymity-and-fake-news-online/ About Pew Research Center Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping America and the world. It does not take policy positions. The Center conducts public opinion polling, demographic research, content analysis and other data-driven social science research. It studies U.S. politics and policy; journalism and media; internet, science, and technology; religion and public life; Hispanic trends; global attitudes and trends; and U.S. social and demographic trends. All of the Center’s reports are available at www.pewresearch.org. Pew Research Center is a subsidiary of The Pew Charitable Trusts, its primary funder. For this project, Pew Research Center worked with Elon University’s Imagining the Internet Center, which helped conceive the research as well as collect and analyze the data. © Pew Research Center 2017 The Future of Free Speech, Trolls, Anonymity and Fake News Online The internet supports a global ecosystem of social interaction. -

The Impact of Disinformation on Democratic Processes and Human Rights in the World

STUDY Requested by the DROI subcommittee The impact of disinformation on democratic processes and human rights in the world Authors: Carme COLOMINA, Héctor SÁNCHEZ MARGALEF, Richard YOUNGS European Parliament coordinator: Policy Department for External Relations EN Directorate General for External Policies of the Union PE 653.635 - April 2021 ABSTRACT Around the world, disinformation is spreading and becoming a more complex phenomenon based on emerging techniques of deception. Disinformation undermines human rights and many elements of good quality democracy; but counter-disinformation measures can also have a prejudicial impact on human rights and democracy. COVID-19 compounds both these dynamics and has unleashed more intense waves of disinformation, allied to human rights and democracy setbacks. Effective responses to disinformation are needed at multiple levels, including formal laws and regulations, corporate measures and civil society action. While the EU has begun to tackle disinformation in its external actions, it has scope to place greater stress on the human rights dimension of this challenge. In doing so, the EU can draw upon best practice examples from around the world that tackle disinformation through a human rights lens. This study proposes steps the EU can take to build counter-disinformation more seamlessly into its global human rights and democracy policies. -

Protecting Children in Virtual Worlds Without Undermining Their Economic, Educational, and Social Benefits

Protecting Children in Virtual Worlds Without Undermining Their Economic, Educational, and Social Benefits Robert Bloomfield* Benjamin Duranske** Abstract Advances in virtual world technology pose risks for the safety and welfare of children. Those advances also alter the interpretations of key terms in applicable laws. For example, in the Miller test for obscenity, virtual worlds constitute places, rather than "works," and may even constitute local communities from which standards are drawn. Additionally, technological advances promise to make virtual worlds places of such significant social benefit that regulators must take care to protect them, even as they protect children who engage with them. Table of Contents I. Introduction; II. Developing Features of Virtual Worlds: A. Realism in Physical and Visual Modeling; B. User-Generated Content; C. Social Interaction; D. Environmental Integration; E. Physical Integration; F. Economic Integration. * Johnson Graduate School of Management, Cornell University. This Article had its roots in Robert Bloomfield’s presentation at -

Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm

Saint Louis University Journal of Health Law & Policy, Volume 12, Issue 1: Public Health Law in the Era of Alternative Facts, Isolationism, and the One Percent, Article 7, 2018. Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm Kimberly Cogdell Grainger, North Carolina Central University Follow this and additional works at: https://scholarship.law.slu.edu/jhlp Part of the Health Law and Policy Commons Recommended Citation Kimberly C. Grainger, Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm, 12 St. Louis U. J. Health L. & Pol'y (2018). Available at: https://scholarship.law.slu.edu/jhlp/vol12/iss1/7 This Symposium Article is brought to you for free and open access by Scholarship Commons. It has been accepted for inclusion in Saint Louis University Journal of Health Law & Policy by an authorized editor of Scholarship Commons. For more information, please contact Susie Lee. SAINT LOUIS UNIVERSITY SCHOOL OF LAW POLITICAL RHETORIC AND MINORITY HEALTH: INTRODUCING THE RHETORIC-POLICY-HEALTH PARADIGM KIMBERLY COGDELL GRAINGER* ABSTRACT Rhetoric is a persuasive device that has been studied for centuries by philosophers, thinkers, and teachers. In the political sphere of the Trump era, the bombastic, social media driven dissemination of rhetoric creates the perfect space to increase its effect. Today, there are clear examples of how rhetoric influences policy. This Article explores the link between divisive political rhetoric and policies that negatively affect minority health in the U.S. The rhetoric-policy-health (RPH) paradigm illustrates the connection between rhetoric and health. Existing public health policy research related to Health in All Policies and the social determinants of health combined with rhetorical persuasive tools create the foundation for the paradigm.