Managing Large-Scale Probabilistic Databases

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Oracle Database VLDB and Partitioning Guide, 11g Release 2 (11.2) E25523-01

Oracle® Database VLDB and Partitioning Guide, 11g Release 2 (11.2), E25523-01, September 2011. Copyright © 2008, 2011, Oracle and/or its affiliates. All rights reserved. Contributors: Hermann Baer, Eric Belden, Jean-Pierre Dijcks, Steve Fogel, Lilian Hobbs, Paul Lane, Sue K. Lee, Diana Lorentz, Valarie Moore, Tony Morales, Mark Van de Wiel. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007).

Data Warehouse Fundamentals for Storage Professionals – What You Need to Know EMC Proven Professional Knowledge Sharing 2011

Data Warehouse Fundamentals for Storage Professionals – What You Need To Know. EMC Proven Professional Knowledge Sharing 2011. Bruce Yellin, Advisory Technology Consultant, EMC Corporation, [email protected]

Table of Contents: Introduction; Data Warehouse Background; What Is a Data Warehouse?; Data Mart Defined; Schemas and Data Models; Data Warehouse Design – Top Down or Bottom Up?; Extract, Transformation and Loading (ETL); Why You Build a Data Warehouse: Business Intelligence; Technology to the Rescue?; RASP - Reliability, Availability, Scalability and Performance; Data Warehouse Backups

Probabilistic Databases

Probabilistic Databases. Dan Suciu, University of Washington; Dan Olteanu, University of Oxford; Christopher Ré, University of Wisconsin-Madison; and Christoph Koch, EPFL. Synthesis Lectures on Data Management, Morgan & Claypool Publishers. Series ISSN: 2153-5418. Series Editor: M. Tamer Özsu, University of Waterloo.

Probabilistic databases are databases where the value of some attributes or the presence of some records are uncertain and known only with some probability. Applications in many areas such as information extraction, RFID and scientific data management, data cleaning, data integration, and financial risk assessment produce large volumes of uncertain data, which are best modeled and processed by a probabilistic database. This book presents the state of the art in representation formalisms and query processing techniques for probabilistic data. It starts by discussing the basic principles for representing large probabilistic databases, by decomposing them into tuple-independent tables, block-independent-disjoint tables, or U-databases. Then it discusses two classes of techniques for query evaluation on probabilistic databases. In extensional query evaluation, the entire probabilistic inference can be pushed into the database engine and, therefore, processed as effectively as the evaluation of standard SQL queries. The relational queries that can be evaluated this way are called safe queries. In intensional query evaluation, the probabilistic inference is performed over a propositional formula called lineage expression: every relational query can be evaluated this way, but the data complexity dramatically depends on the query being evaluated, and can be #P-hard. The book also discusses some advanced topics in probabilistic data management such as top-k query processing, sequential probabilistic databases, indexing and materialized views, and Monte Carlo databases.
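As a rough illustration of the tuple-independent tables mentioned in this abstract, the sketch below enumerates every possible world of a tiny table and sums the probabilities of the worlds in which a Boolean query holds. The table contents, the function names, and the query are hypothetical and chosen only for illustration; they are not taken from the book, and brute-force enumeration is exponential in the number of tuples, so this is not how a real system would evaluate queries.

```python
from itertools import product

# Hypothetical tuple-independent table: each tuple is present independently
# of the others with the given marginal probability (illustrative data only).
SENSOR_READINGS = {
    ("room1", "hot"): 0.5,
    ("room2", "hot"): 0.4,
    ("room3", "cold"): 0.9,
}


def possible_worlds(table):
    """Yield (world, probability) for every subset of the table's tuples."""
    tuples = list(table)
    for mask in product([False, True], repeat=len(tuples)):
        world = {t for t, keep in zip(tuples, mask) if keep}
        prob = 1.0
        for t, keep in zip(tuples, mask):
            # Independence: multiply p for present tuples, 1 - p for absent ones.
            prob *= table[t] if keep else 1.0 - table[t]
        yield world, prob


def query_probability(table, query):
    """Sum the probabilities of the worlds in which the Boolean query is true."""
    return sum(p for world, p in possible_worlds(table) if query(world))


def some_room_is_hot(world):
    """Boolean query: does the world contain at least one 'hot' reading?"""
    return any(state == "hot" for _, state in world)


brute_force = query_probability(SENSOR_READINGS, some_room_is_hot)

# For this simple query, tuple independence gives the same number directly:
# 1 minus the probability that both 'hot' tuples are absent.
closed_form = 1.0 - (1.0 - 0.5) * (1.0 - 0.4)
```

Both `brute_force` and `closed_form` come out at about 0.7. The closed form only needs the marginal probabilities, which hints at why some queries can be evaluated inside the engine without enumerating worlds.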

Adaptive Schema Databases

Adaptive Schema Databases. William Spoth (University at Buffalo), Bahareh Sadat Arab (Illinois Inst. Tech.), Eric S. Chan (Oracle), Dieter Gawlick (Oracle), Adel Ghoneimy (Oracle), Boris Glavic (Illinois Inst. Tech.), Beda Hammerschmidt (Oracle), Oliver Kennedy (University at Buffalo), Seokki Lee (Illinois Inst. Tech.), Zhen Hua Liu (Oracle), Xing Niu (Illinois Inst. Tech.), Ying Yang (University at Buffalo). {wmspoth|okennedy|yyang25}@buffalo.edu {barab|slee195|xniu7}@hawk.iit.edu [email protected] {eric.s.chan|dieter.gawlick|adel.ghoneimy|beda.hammerschmidt|zhen.liu}@oracle.com

ABSTRACT The rigid schemas of classical relational databases help users in specifying queries and inform the storage organization of data. However, the advantages of schemas come at a high upfront cost through schema and ETL process design. In this work, we propose a new paradigm where the database system takes a more active role in schema development and data integration. We refer to this approach as adaptive schema databases (ASDs). An ASD ingests semi-structured or unstructured data directly using a pluggable combination of extraction and data integration techniques. Over time it discovers and adapts schemas for the ingested data using information provided by data integration and infor- ...

... in unstructured or semi-structured form, then an ETL (i.e., Extract, Transform, and Load) process needs to be designed to translate the input data into relational form. Thus, classical relational systems require a lot of upfront investment. This makes them unattractive when upfront costs cannot be amortized, such as in workloads with rapidly evolving data or where individual elements of a schema are queried infrequently. Furthermore, in settings like data exploration, schema design simply takes too long to be practical. Schema-on-query is an alternative approach popularized by NoSQL and Big Data systems that avoids the upfront investment in schema design by performing data extraction and integration at query-time.

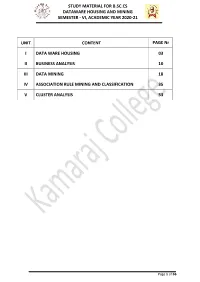

Study Material for B.Sc.Cs Dataware Housing and Mining Semester - Vi, Academic Year 2020-21

STUDY MATERIAL FOR B.SC.CS DATAWARE HOUSING AND MINING, SEMESTER - VI, ACADEMIC YEAR 2020-21

Units: I. Data Warehousing; II. Business Analysis; III. Data Mining; IV. Association Rule Mining and Classification; V. Cluster Analysis.

UNIT I: DATA WAREHOUSING

Data warehousing components

Overall architecture: Data warehouse architecture is based on a relational database management system server that functions as the central repository (a central location in which data is stored and managed) for informational data. In the data warehouse architecture, operational data and processing are kept separate from data warehouse processing. The central information repository is surrounded by a number of key components. These key components are designed to make the entire environment (i) functional, (ii) manageable, and (iii) accessible both by the operational systems that source data into the warehouse and by end-user query and analysis tools.

The source data for the warehouse comes from the operational applications. As data enters the data warehouse, it is transformed into an integrated structure and format. The transformation process may involve conversion, summarization, filtering, and condensation of data. Because data within the data warehouse contains a large historical component, the data warehouse must be capable of holding and managing large volumes of data and different data structures for the same database over time.

Data warehouse database: The central data warehouse database is a foundation for the data warehousing environment.

Finding Interesting Itemsets Using a Probabilistic Model for Binary Databases

Finding interesting itemsets using a probabilistic model for binary databases. Tijl De Bie, University of Bristol, Department of Engineering Mathematics, Queen’s Building, University Walk, Bristol, BS8 1TR, UK. [email protected]

ABSTRACT A good formalization of interestingness of a pattern should satisfy two criteria: it should conform well to intuition, and it should be computationally tractable to use. The focus has long been on the latter, with the development of frequent pattern mining methods. However, it is now recognized that more appropriate measures than frequency are required. In this paper we report results in this direction for itemset mining in binary databases. In particular, we introduce a probabilistic model that can be fitted efficiently to any binary database, and that has a compact and explicit representation. We then show how this model enables the formalization of an intuitive and tractable interestingness measure ...

... elegance and efficiency of the algorithms to search for frequent patterns. Unfortunately, the frequency of a pattern is only loosely related to interestingness. The output of frequent pattern mining methods is usually an immense bag of patterns that are not necessarily interesting, and often highly redundant with each other. This has hampered the uptake of FPM and FIM in data mining practice. Recent research has shifted focus to the search for more useful formalizations of interestingness that match practical needs more closely, while still being amenable to efficient algorithms. As FIM is arguably the simplest special case of frequent pattern mining, it is not surprising that most of the recent work has focussed on itemset patterns, see e.g.

Public 1 Agenda

© 2013 SAP AG. All rights reserved. Public.

Agenda: Welcome. Agenda items: Introduction to Dobler Consulting; SAP IQ Roadmap – What to Expect; Q&A. Presenters: Courtney Claussen (SAP IQ Product Management) and Peter Dobler (CEO, Dobler Consulting). Closing.

Introduction to Dobler Consulting: Dobler Consulting is a leading information technology and database services company, offering a broad spectrum of services to its clients: acting as a Trusted Adviser and providing license sales, architectural review and design consulting, optimization services, high-availability review and enablement, training and cross-training, and ongoing support and preventative maintenance. Founded in 2000, the Tampa consulting firm specializes in SAP/Sybase, Microsoft SQL Server, and Oracle. Visit us online at www.doblerconsulting.com, or contact us at 813 322 3240, or [email protected].

Your Data is Your DNA. Dobler Consulting Focus Areas: Strategic Database Consulting SAP VAR D&T DBA Database Managed Training Services Programs. Cross-Platform Expertise: SAP Sybase®, SQL Server®, Oracle®.

What’s Ahead: ISUG-TECH Annual Conference, April 14-17, Atlanta. Register at http://my.isug.com/conference/registration. Early Bird ending 2/28/14 (free hotel room with registration). SAPPHIRENOW Annual Conference, June 3-5, Orlando. Come visit our kiosk in the exhibition hall.

SAP IQ Roadmap, Dobler Events Webinar. Courtney Claussen / SAP IQ Product Management, February 27, 2014. Disclaimer: This presentation outlines our general product direction and should not be relied on in making a purchase decision.

Open-World Probabilistic Databases

Open-World Probabilistic Databases. İsmail İlkan Ceylan and Adnan Darwiche and Guy Van den Broeck, Computer Science Department, University of California, Los Angeles. [email protected],fdarwiche,[email protected]

Abstract: Large-scale probabilistic knowledge bases are becoming increasingly important in academia and industry alike. They are constantly extended with new data, powered by modern information extraction tools that associate probabilities with database tuples. In this paper, we revisit the semantics underlying such systems. In particular, the closed-world assumption of probabilistic databases, that facts not in the database have probability zero, clearly conflicts with their everyday use. To address this discrepancy, we propose an open-world probabilistic database semantics, which relaxes the probabilities of open facts to intervals. While still assuming a finite domain, this semantics can provide meaningful answers when ...

... techniques, from part-of-speech tagging until relation extraction (Mintz et al. 2009). For knowledge-base completion – the task of deriving new facts from existing knowledge – statistical relational learning algorithms make use of embeddings (Bordes et al. 2011; Socher et al. 2013) or probabilistic rules (Wang, Mazaitis, and Cohen 2013; De Raedt et al. 2015). In both settings, the output is a predicted fact with its probability. It is therefore required to define probabilistic semantics for knowledge bases. The classical and most-basic model is that of tuple-independent probabilistic databases (PDBs) (Suciu et al. 2011), which indeed underlies many of these systems (Dong et al. 2014; Shin et al. 2015).
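To make the contrast between closed-world and open-world semantics concrete, here is a minimal sketch of how relaxing open facts to intervals turns a single query probability into a pair of bounds. It assumes, purely for illustration, that every fact missing from the database may have any probability in the interval from 0 to a threshold `open_bound`, that all facts are independent, and that the query simply asks whether at least one relevant fact holds. The function names and the threshold are hypothetical and are not taken from the paper.

```python
def or_probability(probs):
    """Probability that at least one of several independent facts holds."""
    none_hold = 1.0
    for p in probs:
        none_hold *= 1.0 - p
    return 1.0 - none_hold


def open_world_interval(known_probs, num_open_facts, open_bound):
    """Bounds on an 'at least one fact holds' query under the assumed semantics.

    known_probs    -- probabilities of the facts stored in the database
    num_open_facts -- number of relevant facts absent from the database
    open_bound     -- assumed upper end of the interval for each open fact
    """
    # Lower bound: every open fact at probability 0 (the closed-world answer).
    lower = or_probability(known_probs)
    # Upper bound: every open fact at its largest allowed probability. This is
    # valid here because the query probability never decreases when a fact
    # becomes more likely.
    upper = or_probability(list(known_probs) + [open_bound] * num_open_facts)
    return lower, upper


low, high = open_world_interval([0.5, 0.4], num_open_facts=2, open_bound=0.1)
```

With these illustrative numbers, `low` is about 0.7 and `high` is about 0.757. Setting the extremes of each interval only yields correct bounds for queries whose probability is monotone in every fact, which is why the sketch restricts itself to such a query.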

Database Machines in Support of Very Large Databases

Rochester Institute of Technology, RIT Scholar Works, Theses, 1-1-1988. Database machines in support of very large databases. Mary Ann Kuntz. Follow this and additional works at: https://scholarworks.rit.edu/theses

Recommended Citation: Kuntz, Mary Ann, "Database machines in support of very large databases" (1988). Thesis. Rochester Institute of Technology. Accessed from

This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected].

Rochester Institute of Technology, School of Computer Science. Database Machines in Support of Very Large Databases, by Mary Ann Kuntz. A thesis submitted to the Faculty of the School of Computer Science in partial fulfillment of the requirements for the degree of Master of Science in Computer Systems Management. Approved by: Professor Henry A. Etlinger, Professor Peter G. Anderson, Professor Jeffrey Lasky. Title of Thesis: Database Machines In Support of Very Large Databases. I, Mary Ann Kuntz, hereby deny permission to reproduce my thesis in whole or in part. Date: October 14, 1988. Mary Ann Kuntz.

Abstract: Software database management systems were developed in response to the needs of early data processing applications. Database machine research developed as a result of certain performance deficiencies of these software systems.

Requirements for XML Document Database Systems

Requirements for XML Document Database Systems. Airi Salminen, Dept. of Computer Science and Information Systems, University of Jyväskylä, Jyväskylä, Finland, +358-14-2603031, [email protected]. Frank Wm. Tompa, Department of Computer Science, University of Waterloo, Waterloo, ON, Canada, +1-519-888-4567 ext. 4675, [email protected].

ABSTRACT The shift from SGML to XML has created new demands for managing structured documents. Many XML documents will be transient representations for the purpose of data exchange between different types of applications, but there will also be a need for effective means to manage persistent XML data as a database. In this paper we explore requirements for an XML database management system. The purpose of the paper is not to suggest a single type of system covering all necessary features. Instead the purpose is to initiate discussion of the requirements arising from document collections, to offer a context in which to evaluate current and future solutions, and to encourage the development of proper models and systems for XML database management.

On the other hand, XML will also be used in ways SGML and HTML were not, most notably as the data exchange format between different applications. As was the situation with dynamically created HTML documents, in the new areas there is not necessarily a need for persistent storage of XML documents. Often, however, document storage and the capability to present documents to a human reader as they are or were transmitted is important to preserve the communications among different parties in the form understood and agreed to by them. Effective means for the management of persistent XML data as a database are needed. We define an XML document database (or more generally an XML database, since every XML database must manage documents) to be a collection of XML documents

A Compositional Query Algebra for Second-Order Logic and Uncertain Databases

A Compositional Query Algebra for Second-Order Logic and Uncertain Databases. Christoph Koch, Department of Computer Science, Cornell University, Ithaca, NY, USA. [email protected]

ABSTRACT World-set algebra is a variable-free query language for uncertain databases. It constitutes the core of the query language implemented in MayBMS, an uncertain database system. This paper shows that world-set algebra captures exactly second-order logic over finite structures, or equivalently, the polynomial hierarchy. The proofs also imply that world-set algebra is closed under composition, a previously open problem.

1. INTRODUCTION Developing suitable query languages for uncertain databases is a substantial research challenge that is only currently starting to get addressed. Conceptually, an uncertain database is a finite set of possible worlds, each one a relational database.

... guages for querying possible worlds. Indeed, second-order quantifiers are the essence of what-if reasoning in databases. World-set algebra seems to be a strong candidate for a core algebra for forming query plans and optimizing and executing them in uncertain database management systems. It was left open in previous work whether world-set algebra is closed under composition, or whether definitions are adding to the expressive power of the language. Compositionality is a desirable and rather commonplace property of query algebras, but in the case of WSA it seems rather unlikely to hold. The reason for this is that the algebra contains an uncertainty-introduction operation that on the level of possible worlds is nondeterministic. First materializing a view and subsequently using it multiple times in the query is semantically quite different from composing the query with the view and thus obtaining several copies of the view def-

Answering Queries from Statistics and Probabilistic Views

Answering Queries from Statistics and Probabilistic Views. Nilesh Dalvi, Dan Suciu, University of Washington, Seattle, WA, USA.

1 Introduction. Systems integrating dozens of databases, in the scientific domain or in a large corporation, need to cope with a wide variety of imprecisions, such as: different representations of the same object in different sources; imperfect and noisy schema alignments; contradictory information across sources; constraint violations; or insufficient evidence to answer a given query. If standard query semantics were applied to such data, all but the most trivial queries will return an empty answer. We believe that probabilistic databases are the right paradigm to model all types of imprecisions in a uniform and principled way. A probabilistic database is a probability distribution on all instances [5, 4, 15, 12, 11, 8]. Their early motivation was to model imprecisions at the tuple level: tuples are not known with certainty to belong to the database, or represent noisy measurements, etc.

... paper we will assume the Local As View (LAV) data integration paradigm [17, 16], which consists of defining a global mediated schema $\bar{R}$, then expressing each local source $i$ as a view $v_i(\bar{R})$ over the global schema. Users are allowed to ask queries over the global, mediated schema, $q(\bar{R})$, however the data is given as instances $J_1, \ldots, J_m$ of the local data sources. In our model all instances are probabilistic, both the local instances and the global instance. Statistics are given explicitly over the global schema $\bar{R}$, and the probabilities are given explicitly over the local sources, hence over the views. We make the Open World Assumption throughout the paper.

1.1 Example: Using Statistics. Suppose we integrate two sources, one showing which employee works for what department