Leveraging Browser Fingerprinting for Web Authentication Tompoariniaina Nampoina Andriamilanto

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Application Development with Tocollege.Net

CYAN YELLOW MAGENTA BLACK PANTONE 123 C BOOKS FOR PROFESSIONALS BY PROFESSIONALS® THE EXPERT’S VOICE® IN WEB DEVELOPMENT Companion eBook Available Covers Pro Web 2.0 Application GWT 1.5 Pro Development with GWT 2.0 Web Dear Reader, This book is for developers who are ready to move beyond small proof-of-concept Pro sample applications and want to look at the issues surrounding a real deploy- ment of GWT. If you want to see what the guts of a full-fledged GWT application look like, this is the book for you. GWT 1.5 is a game-changing technology, but it doesn’t exist in a bubble. Real deployments need to connect to your database, enforce authentication, protect against security threats, and allow good search engine optimization. To show you all this, we’ll look at the code behind a real, live web site called Application Development with ToCollege.net. This application specializes in helping students who are applying Web 2.0 to colleges; it allows them to manage their application processes and compare the rankings that they give to schools. It’s a slick application that’s ready for you to sign up for and use. Application Development This book will give you a walking tour of this modern Web 2.0 start-up’s code- base. The included source code will provide a functional demonstration of how to merge together the modern Java stack including Hibernate, Spring Security, Spring MVC 2.5, SiteMesh, and FreeMarker. This fully functioning application is better than treasure if you’re a developer trying to wire GWT into a Maven build environment who just wants to see some code that makes it work. -

Move Beyond Passwords Index

Move Beyond Passwords Index The quest to move beyond passwords 4 Evaluation of current authentication method 6 Getting started with passwordless authentication 8 Early results to going passwordless 9 Common approaches to going passwordless 11 Email magic links 11 Factor sequencing 12 Webauthn 14 Planning for a passwordless future 16 Move Beyond Passwords 2 Introduction Traditional authentication using a username and password has been the foundation of digital identity and security for over 50 years. But with the ever-growing number of user accounts, there are a number of new issues: the burden on end users to remember multiple passwords, support costs, and most importantly, the security risks posed by compromised credentials. These new challenges are now outweighing the usefulness of passwords. The case for eliminating passwords from the authentication experience is getting more compelling every day. Emerging passwordless security standards, elevated consumer and consumer-like experience expectations, and ballooning costs have moved eliminating passwords from a theoretical concept to a real possibility. In this whitepaper, we will explore the case for going passwordless for both customer and employee authentication, and map out steps that organizations can take on their journey to true passwordless authentication. Move Beyond Passwords 3 The quest to move beyond passwords Understanding the need for passwordless authentication starts with understanding the challenges presented by passwords. The core challenges with passwords can be broken down into the following areas: Poor Account Security Passwords have spawned a whole category of security/identity-driven attacks — compromised passwords due to credential breaches, phishing, password spraying attacks, or poor password hygiene can result in account takeover attacks (ATO). -

Cybersecurity in a Digital Era.Pdf

Digital McKinsey and Global Risk Practice Cybersecurity in a Digital Era June 2020 Introduction Even before the advent of a global pandemic, executive teams faced a challenging and dynamic environ- ment as they sought to protect their institutions from cyberattack, without degrading their ability to innovate and extract value from technology investments. CISOs and their partners in business and IT functions have had to think through how to protect increasingly valuable digital assets, how to assess threats related to an increasingly fraught geopolitical environment, how to meet increasingly stringent customer and regulatory expectations and how to navigate disruptions to existing cybersecurity models as companies adopt agile development and cloud computing. We believe there are five areas for CIOs, CISOs, CROs and other business leaders to address in particular: 1. Get a strategy in place that will activate the organization. Even more than in the past cybersecurity is a business issue – and cybersecurity effectiveness means action not only from the CISO organiza- tion, but also from application development, infrastructure, product development, customer care, finance, human resources, procurement and risk. A successful cybersecurity strategy supports the business, highlights the actions required from across the enterprise – and perhaps most importantly captures the imagination of the executive in how it can manage risk and also enable business innovation. 2. Create granular, analytic risk management capabilities. There will always be more vulnerabilities to address and more protections you can consider than you will have capacity to implement. Even companies with large and increasing cybersecurity budgets face constraints in how much change the organization can absorb. -

Implementing a Web Application for W3C Webauthn Protocol Testing †

proceedings Proceedings Implementing a Web Application for W3C WebAuthn Protocol Testing † Martiño Rivera Dourado 1,* , Marcos Gestal 1,2 and José M. Vázquez-Naya 1,2 1 Grupo RNASA-IMEDIR, Departamento de Computación, Facultade de Informática, Universidade da Coruña, Elviña, 15071 A Coruña, Spain; [email protected] (M.G.); [email protected] (J.M.V.-N.) 2 Centro de Investigación CITIC, Universidade da Coruña, Elviña, 15071 A Coruña, Spain * Correspondence: [email protected] † Presented at the 3rd XoveTIC Conference, A Coruña, Spain, 8–9 October 2020. Published: 18 August 2020 Abstract: During the last few years, the FIDO Alliance and the W3C have been working on a new standard called WebAuthn that aims to substitute the obsolete password as an authentication method by using physical security keys instead. Due to its recent design, the standard is still changing and so are the needs for protocol testing. This research has driven the development of a web application that supports the standard and gives extensive information to the user. This tool can be used by WebAuthn developers and researchers, helping them to debug concrete use cases with no need for an ad hoc implementation. Keywords: WebAuthn; authentication; testing 1. Introduction Authentication is one of the most critical parts of an application. It is a security service that aims to guarantee the authenticity of an identity. This can be done by using several security mechanisms but currently, without a doubt, the most common is the username and password method. Although this method is easy for a user to conceptually understand, it constitutes many security problems. -

8 Steps for Effectively Deploying

8 Steps for Effectively Deploying MFA Table of Contents The value of MFA 3 1. Educate your users 4 2. Consider your MFA policies 5 3. Plan and provide for a variety of access needs 7 4. Think twice about using SMS for OTP 10 5. Check compliance requirements carefully 11 6. Plan for lost devices 12 7. Plan to deploy MFA to remote workers 14 8. Phase your deployment: Be prepared to review and revise 16 8 Steps for Effectively Deploying MFA 2 The value of MFA Multi-factor authentication (MFA) has never been more important. With the growing number of data breaches and cybersecurity threats—and the steep financial and reputational costs that come with them—organizations need to prioritize MFA deployment for their workforce and customers alike. Not doing so could spell disaster; an invitation for bad actors to compromise accounts and breach your systems. Adopting modern MFA means implementing a secure, simple, and context-aware solution that ensures that only the right people have access to the right resources. It adds a layer of security, giving your security team, your employees, and your customers peace of mind. Unfortunately, while the benefits are clear, implementing MFA can be a complex project. In our Multi-factor Authentication Deployment Guide, we’ve outlined eight steps that you can take to better enable your MFA deployment: Educate your users Consider your MFA policies Plan and provide for a variety of access needs Think twice about using SMS for OTP Check compliance requirements carefully Plan for lost devices Plan to deploy MFA to remote workers Phase your deployment: be prepared to review and revise In this eBook, we’ll take a deeper dive into each of these elements, giving you tactical advice and best practices for how to implement each step as you get ready to roll out Okta MFA. -

It's All About Visibility

8 It’s All About Visibility This chapter looks at the critical tasks for getting your message found on the web. Now that we’ve discussed how to prepare a clear targeted message using the right words (Chapter 5, “The Audience Is Listening (What Will You Say?)”), we describe how online visibility depends on search engine optimization (SEO) “eat your broccoli” basics, such as lightweight and crawlable website code, targeted content with useful labels, and inlinks. In addition, you can raise the visibility of your website, products, and services online through online advertising such as paid search advertising, outreach through social websites, and display advertising. 178 Part II Building the Engine Who Sees What and How Two different tribes visit your website: people, and entities known as web spiders (or crawlers or robots). People will experience your website differently based on their own characteristics (their visual acuity or impairment), their browser (Internet Explorer, Chrome, Firefox, and so on), and the machine they’re using to view your website (a TV, a giant computer monitor, a laptop screen, or a mobile phone). Figure 8.1 shows a page on a website as it appears to website visitors through a browser. Figure 8.1 Screenshot of a story page on Model D, a web magazine about Detroit, Michigan, www.modeldmedia.com. What Search Engine Spiders See The web spiders are computer programs critical to your business because they help people who don’t know about your website through your marketing efforts find it through the search engines. The web spiders “crawl” through your website to learn about what it contains and carry information back to the gigantic servers behind the search engines, so that the search engine can provide relevant results to people searching for your product or service. -

Mfaproxy: a Reverse Proxy for Multi-Factor Authentication

Iowa State University Capstones, Theses and Creative Components Dissertations Fall 2019 MFAProxy: A reverse proxy for multi-factor authentication Alan Schmitz Follow this and additional works at: https://lib.dr.iastate.edu/creativecomponents Part of the Digital Communications and Networking Commons Recommended Citation Schmitz, Alan, "MFAProxy: A reverse proxy for multi-factor authentication" (2019). Creative Components. 425. https://lib.dr.iastate.edu/creativecomponents/425 This Creative Component is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Creative Components by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. MFAProxy: A reverse proxy for multi-factor authentication by Alan Schmitz A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE Major: Information Assurance Program of Study Committee: Doug Jacobson, Major Professor The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this Creative Component. The Graduate College will ensure this Creative Component is globally accessible and will not permit alterations after a degree is conferred. Iowa State University Ames, Iowa 2019 Copyright c Alan Schmitz, 2019. All rights reserved. ii TABLE OF CONTENTS Page LIST OF FIGURES . iii ABSTRACT . iv CHAPTER 1. INTRODUCTION . .1 CHAPTER 2. BACKGROUND . .3 2.1 Passwords and PINs . .3 2.2 Short Message Service . .4 2.3 One-Time Passwords . .5 2.4 U2F and WebAuthn . -

![Google Cheat Sheets [.Pdf]](https://docslib.b-cdn.net/cover/9906/google-cheat-sheets-pdf-999906.webp)

Google Cheat Sheets [.Pdf]

GOOGLE | CHEAT SHEET Key for skill required Novice This two page Google Cheat Sheet lists all Google services and tools as to understand the Intermediate well as background information. The Cheat Sheet offers a great reference underlying concepts to grasp of basic to advance Google query building concepts and ideas. Expert CHEAT SHEET GOOGLE SERVICES Google domains google.co.kr Google Company Information google.ae google.kz Public (NASDAQ: GOOG) and google.com.af google.li (LSE: GGEA) Google AdSense https://www.google.com/adsense/ google.com.ag google.lk google.off.ai google.co.ls Founded Google AdWords https://adwords.google.com/ google.am google.lt Menlo Park, California (1998) Google Analytics http://google.com/analytics/ google.com.ar google.lu google.as google.lv Location Google Answers http://answers.google.com/ google.at google.com.ly Mountain View, California, USA Google Base http://base.google.com/ google.com.au google.mn google.az google.ms Key people Google Blog Search http://blogsearch.google.com/ google.ba google.com.mt Eric E. Schmidt Google Bookmarks http://www.google.com/bookmarks/ google.com.bd google.mu Sergey Brin google.be google.mw Larry E. Page Google Books Search http://books.google.com/ google.bg google.com.mx George Reyes Google Calendar http://google.com/calendar/ google.com.bh google.com.my google.bi google.com.na Revenue Google Catalogs http://catalogs.google.com/ google.com.bo google.com.nf $6.138 Billion USD (2005) Google Code http://code.google.com/ google.com.br google.com.ni google.bs google.nl Net Income Google Code Search http://www.google.com/codesearch/ google.co.bw google.no $1.465 Billion USD (2005) Google Deskbar http://deskbar.google.com/ google.com.bz google.com.np google.ca google.nr Employees Google Desktop http://desktop.google.com/ google.cd google.nu 5,680 (2005) Google Directory http://www.google.com/dirhp google.cg google.co.nz google.ch google.com.om Contact Address Google Earth http://earth.google.com/ google.ci google.com.pa 2400 E. -

Strong Authentication Based on Mobile Application

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Helsingin yliopiston digitaalinen arkisto STRONG AUTHENTICATION BASED ON MOBILE APPLICATION Helsingin yliopisto Faculty of Science Department of Computer Science Master's thesis Spring 2020 Harri Salminen Supervisor: Valtteri Niemi Tiedekunta - Fakultet - Faculty Osasto - Avdelning - Department Faculty of Science The Department of Computer Science Tekijä - Författare - Author Harri Salminen Työn nimi - Arbetets titel Title STRONG AUTHENTICATION BASED ON MOBILE APPLICATION Oppiaine - Läroämne – Subject Computer Science Työn laji/ Ohjaaja - Arbetets art/Handledare - Aika - Datum - Month and Sivumäärä - Sidoantal - Number of Level/Instructor year pages Master's thesis / Valtteri Niemi June, 2020 58 Tiivistelmä - Referat - Abstract The user authentication in online services has evolved over time from the old username and password-based approaches to current strong authentication methodologies. Especially, the smartphone app has become one of the most important forms to perform the authentication. This thesis describes various authentication methods used previously and discusses about possible factors that generated the demand for the current strong authentication approach. We present the concepts and architectures of mobile application based authentication systems. Furthermore, we take closer look into the security of the mobile application based authentication approach. Mobile apps have various attack vectors that need to be taken under consideration when designing an authentication system. Fortunately, various generic software protection mechanisms have been developed during the last decades. We discuss how these mechanisms can be utilized in mobile app environment and in the authentication context. The main idea of this thesis is to gather relevant information about the authentication history and to be able to build a view of strong authentication evolution. -

The Top 10 Ddos Attack Trends

WHITE PAPER The Top 10 DDoS Attack Trends Discover the Latest DDoS Attacks and Their Implications Introduction The volume, size and sophistication of distributed denial of service (DDoS) attacks are increasing rapidly, which makes protecting against these threats an even bigger priority for all enterprises. In order to better prepare for DDoS attacks, it is important to understand how they work and examine some of the most widely-used tactics. What Are DDoS Attacks? A DDoS attack may sound complicated, but it is actually quite easy to understand. A common approach is to “swarm” a target server with thousands of communication requests originating from multiple machines. In this way the server is completely overwhelmed and cannot respond anymore to legitimate user requests. Another approach is to obstruct the network connections between users and the target server, thus blocking all communication between the two – much like clogging a pipe so that no water can flow through. Attacking machines are often geographically-distributed and use many different internet connections, thereby making it very difficult to control the attacks. This can have extremely negative consequences for businesses, especially those that rely heavily on its website; E-commerce or SaaS-based businesses come to mind. The Open Systems Interconnection (OSI) model defines seven conceptual layers in a communications network. DDoS attacks mainly exploit three of these layers: network (layer 3), transport (layer 4), and application (layer 7). Network (Layer 3/4) DDoS Attacks: The majority of DDoS attacks target the network and transport layers. Such attacks occur when the amount of data packets and other traffic overloads a network or server and consumes all of its available resources. -

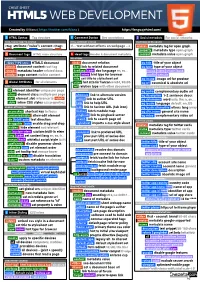

HTML5 Cheatsheet 2019

CHEAT SHEET HTML5 WEB DEVELOPMENT Created by @Manz ( https://twitter.com/Manz ) https://lenguajehtml.com/ S HTML Syntax Tag structure C Comment Syntax Dev annotations S Social metadata For social networks HTML TAG/ATTRIBUTE SYNTAX HTML COMMENT SYNTAX FACEBOOK OPEN GRAPH <tag attribute="value"> content </tag> <!-- text without effects on webpage --> <meta> metadata tag for open graph property metadata type open graph D Document tags HTML main structure H Head tags Header & document metadata content metadata value open graph MAIN TAGS RELATIONS REQUIRED METADATA PROPERTIES <!DOCTYPE html> HTML5 document <link> document relation og:title title of your object <html> document content root tag href link to related document og:type type of your object <head> metadata header related docs hreflang code doc language en, es... music video article book <body> page content visible content type mime hint type for browser profile website title set title to stylesheet set og:image image url for preview G Global Attributes for all elements sizes hint size for favicon 64x64, 96x96 og:url canonical & absolute url DOM / STYLE ATTRIBUTES rel relation type with other document OPTIONAL METADATA PROPERTIES id element identifier unique per page BASIC RELATION og:audio complementary audio url class element class multiple per page alternate link to alternate version og:description 1-2 sentence descr. slot element slot reference to <slot> author link to author URL og:determiner word auto, the, a, an, ... style inline CSS styles css properties help link to help URL -

How Can I Get Deep App Screens Indexed for Google Search?

HOW CAN I GET DEEP APP SCREENS INDEXED FOR GOOGLE SEARCH? Setting up app indexing for Android and iOS apps is pretty straightforward and well documented by Google. Conceptually, it is a three-part process: 1. Enable your app to handle deep links. 2. Add code to your corresponding Web pages that references deep links. 3. Optimize for private indexing. These steps can be taken out of order if the app is still in development, but the second step is crucial. Without it, your app will be set up with deep links but not set up for Google indexing, so the deep links will not show up in Google Search. NOTE: iOS app indexing is still in limited release with Google, so there is a special form submission and approval process even after you have added all the technical elements to your iOS app. That being said, the technical implementations take some time; by the time your company has finished, Google may have opened up indexing to all iOS apps, and this cumbersome approval process may be a thing of the past. Steps For Google Deep Link Indexing Step 1: Add Code To Your App That Establishes The Deep Links A. Pick A URL Scheme To Use When Referencing Deep Screens In Your App App URL schemes are simply a systematic way to reference the deep linked screens within an app, much like a Web URL references a specific page on a website. In iOS, developers are currently limited to using Custom URL Schemes, which are formatted in a way that is more natural for app design but different from Web.