Brian P. Bresnahan Harvard Thesis 2018

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Bitmovin's “Video Developer Report 2018”

Welcome to the 2018 Video Developer Report! First and foremost, I’d like to thank everyone for making the 2018 Video Developer Report possible! In its second year, the report is wider in both scope and reach. With 456 survey submissions from over 67 countries, the report aims to provide a snapshot of the state of video technology in 2018, as well as a view of what will be important in the next 12 months. This report would not be possible without the great support and participation of the video developer community. Thank you for your dedication to figuring it out and to making streaming video work despite the challenges of limited bandwidth and a fragmented consumer device landscape. We hope this report provides you with insights into what your peers are working on and the pain points that we are all experiencing. We have already learned a lot and are looking forward to the 2019 Video Developer Survey a year from now! Best regards, Stefan Lederer, CEO, Bitmovin. Key findings: In 2018, H.264/AVC dominates video codec usage globally, used by 92% of developers in the survey.

Video Developer Report 2017: Welcome to Bitmovin’s Video Developer Report!

Video Developer Report 2017: Welcome to Bitmovin’s Video Developer Report! First and foremost, I’d like to thank everyone for making the 2017 Video Developer Survey possible! Without the great support and participation of the video developer community, we would not be able to create this report and share insights into how developers around the globe work with video. With 380 survey submissions from over 50 countries, this report aims to give an overview of the status of video technology in 2017, as well as a view of what will be important to video developers in the next 12 months. We hope this report provides helpful information. We have already learned a lot, and are looking forward to the 2018 Video Developer Survey a year from now! Best regards, Stefan Lederer, CEO, Bitmovin. Key findings: Apple HLS is the dominant format in video streaming in 2017, but the format people are looking to in the next 12 months is MPEG-DASH. HEVC is the video codec most people are planning to use in the next 12 months, but VP9 and AV1 are also growing in popularity. Software encoders are the most popular encoders, used either on-premise or in the cloud. Cloud encoding is more popular in North America than in Europe. More than a third monetize their online video with subscription models. 45% use some form of encryption or DRM protection. Half of the developers with ad requirements are using a Server-Side Ad Insertion solution. HTML5 is now by far the dominant platform for video playback.

Notice of Opposition Opposer Information Applicant Information Goods/Services Affected by Opposition

Trademark Trial and Appeal Board Electronic Filing System. http://estta.uspto.gov. ESTTA Tracking number: ESTTA1098521. Filing date: 11/30/2020. IN THE UNITED STATES PATENT AND TRADEMARK OFFICE BEFORE THE TRADEMARK TRIAL AND APPEAL BOARD. Notice of Opposition: Notice is hereby given that the following party opposes registration of the indicated application. Opposer Information: Name: Corporacion de Radio y Television Espanola, S.A; Granted to Date of previous extension: 11/28/2020; Address: AVDA RADIO TELEVISION 4, EDIF PRADO DEL R, POZUELO DE ALARCON, MADRID, 28223, SPAIN; Attorney information: TODD A. SULLIVAN, HAYES SOLOWAY PC, 175 CANAL STREET, MANCHESTER, NH 03101, UNITED STATES; Primary Email: [email protected]; Secondary Email(s): [email protected]; 603-668-1400; Docket Number: Isern 20.01. Applicant Information: Application No.: 88647258; Publication date: 09/29/2020; Opposition Filing Date: 11/30/2020; Opposition Period Ends: 11/28/2020; Applicant: HONER, JODI, 20845 CHENEY DR, TOPANGA, CA 90290, UNITED STATES. Goods/Services Affected by Opposition: Class 038. First Use: 0. First Use In Commerce: 0. All goods and services in the class are opposed, namely: Transmission of interactive television programs; Transmission of interactive television program guides and listings; Transmission of streaming television programs, namely, streaming of television programs on the internet; Transmission of sound, video and information from webcams, video cameras or mobile phones, all featuring live or recorded materials; Television transmission services; Television broadcasting;

2020 Bitmovin Video Developer Report

2020 Bitmovin Video Developer Report, www.bitmovin.com. Contents: Welcome; Key Findings; Methodology. The State of the Streaming Industry: The biggest challenges; Impact of Covid-19; Innovation. Video Workflows: Encoder; Video Codecs; Audio Codecs; Streaming Formats; Packaging; CDN solution; Per-Title Encoding; AI/ML; Player codebase; Platforms and Devices. Business Insights: Monetization; DRM and content protection; Advertising; Low Latency; Analytics. The annual Bitmovin Video Developer Report is one of the many resources we are pleased to present, and it has become an industry standard for video streaming. Traditionally, we reveal the results in person at IBC. However, in these unprecedented times, our fourth installment will have to make do with a printed or digital version and a virtual presentation. Participation in the 2020 Report increased 46% from 2019, with a record-breaking 792 respondents, twice as many participants as our first Report in 2017! We believe this also reflects the industry's growth and the important role video streaming plays today. Thank you to all who participated this year. We are grateful for your feedback and contributions. Without you, it would not be possible to create such a thorough picture of the industry year after year. Welcome: What a year 2020 has been so far! I believe it’s fair to say that we’ve all been feeling the strain of trying to adjust to and master “the new normal.” Despite the many uncertainties 2020 has brought, one thing remains consistent, and that’s Bitmovin’s commitment to provide you with comprehensive content offerings that feature the state of the video

The Streaming Television Industry: Mature Or Still Growing?

The Streaming Television Industry: Mature or Still Growing? Johannes H. Snyman, Metropolitan State University of Denver; Debora J. Gilliard, Metropolitan State University of Denver. This paper is about the streaming television industry. It begins by defining the industry and provides a brief history of the television industry in general, from the first transatlantic television signal in 1928 to 2018. It then concentrates on the streaming television industry, which started in 2007, when Netflix first streamed movies over the internet. The main section of the paper focuses on Michael Porter’s theory of the industry life cycle to determine the current stage of the industry. A research question is developed, proposing that the industry is in the growth phase. The number of paid subscribers of the top streaming providers in 2018 is then used to address the research question. The results indicate that the industry is in the growth phase. The final section provides an outlook for the industry’s future. Keywords: Streaming TV Industry, OTT TV, Growth Industry. INTRODUCTION: The television industry is rapidly changing, moving toward “over-the-top,” or streaming, television. Cord cutting is occurring as millennials and traditional viewers discontinue and unbundle their television, internet, and landline telephone services (Snyman & Gilliard, 2018). Millennials are keeping their monthly expenses down by subscribing to streaming services that provide the content they are interested in watching, and binge-watching of serial television shows is the new norm (Prastien, 2019). “Over-the-top” (OTT) television and “online video distribution” (OVD) are terms that originally referred to the streaming television services provided by Netflix, Hulu and Amazon.

BBC Research and Development White Paper List Colorimetric And

BBC Research and Development White Paper List

| Ref Number | Main author | Title | Year | Month | White Paper link | Keywords |
|---|---|---|---|---|---|---|
| WHP001 | J. Middleton | The calibration of VHF/UHF field strength measuring equipment | 2001 | April | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP001.pdf | VHF/UHF field strength, calibration |
| WHP002 | J. Middleton | The validation of interference reduction techniques used in planning the UK DTT network | 2001 | July | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP002.pdf | Interference reduction, DTT network planning |
| WHP003 | T. Everest | Multiplex coverage equalisation | 2001 | August | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP003.pdf | digital television, planning RTS Award submission |
| WHP004 | J.H. Stott | Cumulative effects of distributed interferers | 2001 | August | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP004.pdf | DSL, PLT, PLC, emissions, broadcasting, aircraft safety, cumulative interference |
| WHP005 | R. Walker | Acoustic modelling - approximations to the real world | 2001 | September | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP005.pdf | Acoustic modelling, sound propagation |
| WHP006 | M.R. Ellis | Digital Radio - Common problems in DAB receivers | 2001 | November | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP006.pdf | Digital Radio; DAB; Digital Audio Broadcasting; receiver; common problems |
| WHP007 | O. Grau | Applications of depth METADATA | 2001 | September | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP007.pdf | METADATA, depth sensing |
| WHP008 | A. Roberts | How to recognise video image sources | 2001 | October | http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP008.pdf | video image recognition |
| WHP009 | R. | | | | | |

Dynamic Adaptive Streaming Over HTTP

Dynamic Adaptive Streaming over HTTP. Dajie Wang. Helsinki, November 7, 2016. University of Helsinki, Faculty of Science, Department of Computer Science. Subject: Computer Science. Date: November 7, 2016. Number of pages: 49 pages + 2 appendices. Abstract: This thesis summarises the adaptive bitrate streaming technology called Dynamic Adaptive Streaming over HTTP, also known as MPEG-DASH, developed by the Moving Picture Experts Group (MPEG). The thesis introduces and summarises the MPEG-DASH standard, including the content of the standard, the profiles from MPEG and the DASH Industry Forum, and an evaluation of the standard. It then analyses the MPEG-DASH system and reviews related research papers, organised into three parts that follow the workflow of the whole system: the Media Presentation Description (MPD) file and video segments hosted on the server, the network infrastructure, and DASH clients. Finally, the thesis discusses the adoption of MPEG-DASH in different industries, including broadband, broadcast, mobile and 3D. Keywords: dash, streaming, mpeg. Contents: 1 Introduction; 2 MPEG-DASH Standard; 2.1 The ISO/IEC 23009-1 Standard; 2.1.1 Format of MPD file
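The abstract describes a DASH system in which the server hosts a Media Presentation Description (MPD) file and video segments, and the client decides which version to fetch. As a rough illustration only, the Python sketch below parses a hypothetical, heavily trimmed MPD with the standard library and applies a simple throughput-based selection rule. The sample manifest, the function names, and the 0.8 safety factor are assumptions for this example, not taken from the thesis; segment addressing (BaseURL, SegmentTemplate and so on) is omitted, and MPEG-DASH itself leaves the client's adaptation logic open to implementers.

```python
import xml.etree.ElementTree as ET

# Namespace used by MPEG-DASH MPD documents.
DASH_NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical, heavily trimmed MPD used only for illustration (no segment info).
SAMPLE_MPD = """<?xml version="1.0"?>
<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT10S" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-on-demand:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="360p" bandwidth="800000" width="640" height="360"/>
      <Representation id="720p" bandwidth="2500000" width="1280" height="720"/>
      <Representation id="1080p" bandwidth="5000000" width="1920" height="1080"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_xml):
    """Return (id, bandwidth in bit/s) for every Representation, lowest bandwidth first."""
    root = ET.fromstring(mpd_xml)
    reps = [
        (rep.get("id"), int(rep.get("bandwidth")))
        for rep in root.iterfind(".//mpd:Representation", DASH_NS)
    ]
    return sorted(reps, key=lambda r: r[1])


def pick_representation(reps, throughput_bps, safety=0.8):
    """Hypothetical rule: highest bandwidth that fits within safety * measured throughput."""
    budget = throughput_bps * safety
    chosen = reps[0]  # fall back to the lowest rung if nothing fits
    for rep_id, bandwidth in reps:
        if bandwidth <= budget:
            chosen = (rep_id, bandwidth)
    return chosen


if __name__ == "__main__":
    reps = list_representations(SAMPLE_MPD)
    print(reps)
    print(pick_representation(reps, throughput_bps=4_000_000))
```

With a measured throughput of 4 Mbit/s, the rule budgets 3.2 Mbit/s and selects the 720p representation. Many real players also take the buffer level into account; this sketch uses throughput alone for brevity.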

The Effects of the Cord-Cutting Counterpublic

Utrecht University. The Effects of the Cord-Cutting Counterpublic: How the Dynamics of Resistance and Resilience between the Cord-Cutters and the Television Distribution Industry Are Redefining Television. Master Thesis, Eleonora Maria Mazzoli (4087186), RMA Media and Performance Studies. Words: 37,600. Supervisor: Prof. Dr. William Uricchio. Second Reader: Prof. Dr. Eggo Müller. Academic Year: 2014-2015. Cover picture by TechgenMag.com. © 2015 by Eleonora Maria Mazzoli. All rights reserved. To my parents, Donatella and Piergiovanni, and to my little sister, Elena. “La televisiun, la g'ha na forsa de leun; la televisiun, la g'ha paura de nisun.” (“Television is as strong as a lion; Television fears nobody.”) Enzo Jannacci, La Televisiun, 1975. Table of Contents: Abstract; Preface: Television as A Cross-Media Techno-Cultural Form; 1. Introduction - Cord-Cutting 101: A Revolution Has Begun; 1.1. Changing Habits in a Changing Ecosystem; 1.2. The Question of the Cord-Cutting Counterpublic; 1.3. Methodology: Sources, Approach and Structure; 1.4. Relevance; 2. The Cutters within a Cross-Media Television Ecosystem; 2.1. Re-working of the U.S. Television Distribution Platforms; 2.2. Legitimating Newfound Watching Behaviours; 2.3. Proactive Viewers, Cord-Killers, Digital Pirates: Here Come the Cord-Cutters; 3.

How Software-Defined Servers Will Drive the Future of Infrastructure and Operations

March 2020. How Software-Defined Servers Will Drive the Future of Infrastructure and Operations. In this issue: Introduction; How Software-Defined Servers Will Drive the Future of Infrastructure and Operations; Research from Gartner: Top 10 Technologies That Will Drive the Future of Infrastructure and Operations; About TidalScale. Introduction: Sixteen years ago, InformationWeek published a prescient call to arms for building an intelligent IT infrastructure. “The mounting complexity of today’s IT infrastructure,” cautioned the author, is having a “draining effect…on IT resources.”[1] If the need for flexible, on-demand IT infrastructure was obvious back in 2004, imagine where we find ourselves today. Businesses now run on data. They analyze it to uncover opportunities, identify efficiencies, and define their competitive advantage. But that dependency comes with real-world challenges, particularly with data volumes doubling every year[2] and IoT data growth outpacing enterprise data by 50X.[3] Talk about “mounting complexity.” The “draining effect” on IT resources observed 16 years ago is hitting IT operations where they live, both in their ability to meet SLAs and in their efforts to do more within limited budgets. Legacy platforms fail to keep up with growing and unpredictable workloads. Traditional approaches to scaling force IT departments into the same old system sizing, purchasing, and deployment cycles that can last months, even years. Today’s CIOs are right to ask: If my largest servers can’t handle my SAP HANA, Oracle Database,

Today's and Future Challenges with New Forms

TODAY’S AND FUTURE CHALLENGES WITH NEW FORMS OF CONTENT LIKE 360°, AR, AND VR. Stefan Lederer, Bitmovin, Inc. MPEG Workshop: Global Media Technology Standards for an Immersive Age. Contact: +1 650 4438956, [email protected], @bitmovin, www.bitmovin.com; 301 Howard Street, Suite 1800, San Francisco, CA 94105, USA; Schleppeplatz 7, 9020 Klagenfurt, Austria, Europe. © bitmovin, Inc. | Confidential | Patents Pending. FULL-STACK VIDEO INFRASTRUCTURE. END-TO-END 360° INFRASTRUCTURE. CUSTOMER USE CASES. VIDEO CODING FOR 360° VIDEO? No special techniques for coding in the spherical domain are widely available yet • Encoding in the rectangular domain • Therefore we need the described projections to rectangular layouts • Equirectangular projection • Cube projection • Pyramid, equal-area projection and more • Traditional video codecs are used: AVC / HEVC (/ VP8 / VP9). 360° VIDEO – WHAT’S OUT THERE? Primarily: progressive MP4 for 360° • 1080p to 4K videos, using H.264 (some VP9) • Why? • Browser/OS restrictions, e.g., on iPhone • Application has no access to frames • Without adaptive streaming, the result is buffering and poor QoE. 360° VIDEO – WHAT’S OUT THERE? Current trends? MPEG-DASH / HLS • Logical next step to use adaptive streaming • Especially for high bitrate/resolution content. Challenges: • Device coverage & issues • Desktop browsers, mobile Web, smartphone apps, VR headsets, TVs, casting devices, etc. • 360° rendering and access to frames is different on all platforms • Lack of frame access, DASH/HLS support, etc. • Overlays and ads • How to position/communicate/integrate different types of ads • DRM protection • No access to decoded frame • See also next slides
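The slides note that 360° video is encoded in the rectangular domain after projecting the sphere onto a rectangular layout, with equirectangular projection as the first option. A minimal Python sketch of that mapping follows. It assumes a hypothetical axis convention (+z straight ahead, +x right, +y up) and yaw/pitch in degrees; real players and projection formats may define axes and orientation differently, and the function names are illustrative, not a Bitmovin or MPEG API.

```python
import math


def direction_to_yaw_pitch(dx, dy, dz):
    """Convert a 3D viewing direction to (yaw, pitch) in degrees.

    Assumed convention (hypothetical): +z is ahead, +x is right, +y is up.
    """
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    yaw = math.degrees(math.atan2(dx, dz))  # -180..180, 0 = straight ahead
    # Clamp to [-1, 1] so rounding error can never push asin out of its domain.
    pitch = math.degrees(math.asin(max(-1.0, min(1.0, dy / norm))))  # positive = up
    return yaw, pitch


def equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Map (yaw, pitch) to integer (x, y) in a width x height equirectangular frame.

    Yaw (longitude) maps linearly to x and pitch (latitude) linearly to y,
    so yaw = 0, pitch = 0 lands in the centre of the frame.
    """
    u = (yaw_deg + 180.0) / 360.0   # 0.0 at the left edge, 1.0 at the right edge
    v = (90.0 - pitch_deg) / 180.0  # 0.0 at the top (pitch +90), 1.0 at the bottom
    x = min(int(u * width), width - 1)    # clamp yaw = +180 onto the last column
    y = min(int(v * height), height - 1)  # clamp pitch = -90 onto the last row
    return x, y


if __name__ == "__main__":
    yaw, pitch = direction_to_yaw_pitch(1.0, 0.0, 0.0)  # looking right
    print(yaw, pitch, equirect_pixel(yaw, pitch, 3840, 1920))
```

Because every row of an equirectangular frame spans the full 360° of yaw, rows near the poles cover far less of the sphere than rows near the equator; this oversampling is commonly cited as a motivation for alternative layouts such as the cube and equal-area projections listed above.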

Transcoding and Streaming-As-A-Service For

Transcoding and Streaming-as-a-Service for improved Video Quality on the Web. Christian Timmerer (Alpen-Adria-Universität Klagenfurt / bitmovin GmbH, Universitätsstraße 65-67, 9020 Klagenfurt, Austria); Daniel Weinberger, Martin Smole, Reinhard Grandl, Christopher Müller, Stefan Lederer (bitmovin GmbH, Lakeside B01, 9020 Klagenfurt, Austria). ABSTRACT: Real-time entertainment applications such as audio and video streaming are responsible for the majority of today’s Internet traffic. This traffic is transported over the top of the existing infrastructure, and interoperability is achieved with MPEG-DASH. However, standards like MPEG-DASH only provide the format definition, and the actual behavior of the corresponding implementations is left open to (industry) competition. In this demo paper, we present our cloud encoding service and HTML5 adaptive streaming player enabling the highest quality (i.e., no stalls

… delivery ecosystems. Interestingly, the trend analysis in [1] shows that by 2019, traffic originating from wireless/mobile devices will exceed the traffic from wired/stationary devices. The goal of making video a “first class citizen” on the Internet (and consequently the Web) has become reality thanks to improved network capacities and capabilities, more efficient (video) codecs (e.g., AVC, HEVC), powerful end-user devices (e.g., computers, laptops, smart devices (TVs, tablets, phones, watches)), and corresponding Web technologies (e.g., HTML5 and associated recommendations).

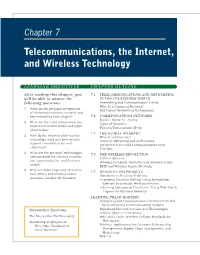

Telecommunications, the Internet, and Wireless Technology

Chapter 7: Telecommunications, the Internet, and Wireless Technology. LEARNING OBJECTIVES: After reading this chapter, you will be able to answer the following questions: 1. What are the principal components of telecommunications networks and key networking technologies? 2. What are the main telecommunications transmission media and types of networks? 3. How do the Internet and Internet technology work, and how do they support communication and e-business? 4. What are the principal technologies and standards for wireless networking, communication, and Internet access? 5. Why are radio frequency identification (RFID) and wireless sensor networks valuable for business? CHAPTER OUTLINE: 7.1 Telecommunications and Networking in Today’s Business World: Networking and Communication Trends; What Is a Computer Network?; Key Digital Networking Technologies. 7.2 Communications Networks: Signals: Digital vs. Analog; Types of Networks; Physical Transmission Media. 7.3 The Global Internet: What Is the Internet?; Internet Addressing and Architecture; Internet Services and Communications Tools; The Web. 7.4 The Wireless Revolution: Cellular Systems; Wireless Computer Networks and Internet Access; RFID and Wireless Sensor Networks. 7.5 Hands-On MIS Projects: Management Decision Problems; Improving Decision Making: Using Spreadsheet Software to Evaluate Wireless Services; Achieving Operational Excellence: Using Web Search Engines for Business Research. LEARNING TRACK MODULES: Computing and Communications Services Provided by Commercial Communications Vendors. Interactive Sessions: