Unit and Integration Testing of Java: JVM Behavior-Driven Development Testing Frameworks Vs

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

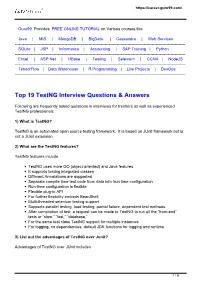

Top 19 TestNG Interview Questions & Answers

https://career.guru99.com/

Following are frequently asked questions in interviews for freshers as well as experienced TestNG professionals.

1) What is TestNG?
TestNG is an automated open source testing framework. It is based on the JUnit framework but is not a JUnit extension.

2) What are the TestNG features?
TestNG features include:
- TestNG uses more OO (object-oriented) and Java features
- It supports testing integrated classes
- Different annotations are supported
- Compile-time test code is kept separate from data info and run-time configuration
- Run-time configuration is flexible
- Flexible plug-in API
- For further flexibility, it embeds BeanShell
- Multi-threaded Selenium testing support
- Supports parallel testing, …

Proof of Concept (Poc) Selenium Web Driver Based Automation Framework

International Journal of Advance Engineering and Research Development, Volume 4, Issue 7, July 2017. e-ISSN (O): 2348-4470, p-ISSN (P): 2348-6406. Scientific Journal of Impact Factor (SJIF): 4.72.

Proof of concept (PoC) Selenium WebDriver based automation framework. B Ajith Kumar, Master of Science (Information Technology), Department of Mathematics, College of Engineering Guindy (CEG), Anna University.

Abstract: To control test execution time. Software testing is a process of executing a program or application with the intent of finding software bugs. It can also be stated as the process of validating and verifying that a software program, application, or product meets the business and technical requirements that guided its design and development, and works as expected.

Key words: software testing, automation testing, Selenium, Selenium WebDriver, agile testing, TestNG.

Test automation: In software testing, test automation is the use of special software (separate from the software being tested) to control the execution of tests and the comparison of actual outcomes with predicted outcomes. Test automation can automate some repetitive but necessary tasks in a formalized testing process already in place, or add additional testing that would be difficult to perform manually.

Agile testing: A software testing practice that follows the principles of agile software development is called agile testing. Agile is an iterative development methodology in which requirements evolve through collaboration between the customer and self-organizing teams, and which aligns development with customer needs.

Selenium automation tool: Selenium has the support of some of the largest browser vendors, who have taken (or are taking) steps to make Selenium a native part of their browser. …

Taming Functional Web Testing with Spock and Geb

Peter Niederwieser, Gradleware. Creator, Spock; Contributor, Geb.

The Actors: Spock, Geb, Page Objects

Spock: “Spock is a testing and specification framework for Java and Groovy applications. What makes it stand out from the crowd is its beautiful and highly expressive specification language. Thanks to its JUnit runner, Spock is compatible with most IDEs, build tools, and continuous integration servers. Spock is inspired from JUnit, RSpec, jMock, Mockito, Groovy, Scala, Vulcans, and other fascinating life forms.”

Spock (ctd.):
- http://spockframework.org
- ASL2 licence
- Serving mankind since 2008
- Latest releases: 0.7, 1.0-SNAPSHOT
- Java + Groovy
- JUnit compatible
- Loves Geb

Geb: “Geb is a browser automation solution. It brings together the power of WebDriver, the elegance of jQuery content selection, the robustness of Page Object modelling and the expressiveness of the Groovy language. It can be used for scripting, scraping and general automation, or equally as a functional/web/acceptance testing solution via integration with testing frameworks such as Spock, JUnit & TestNG.”

Geb (ctd.):
- http://gebish.org
- ASL2 license
- Serving mankind since 2009
- Latest releases: 0.7.2, 1.0-SNAPSHOT
- Java + Groovy
- Use with any test framework
- Loves Spock
- First-class page objects

Page Objects: “The Page Object pattern represents the screens of your web app as a series of objects. Within your web app's UI, there are areas that your tests interact with. A Page Object simply models these as objects within the test code. This reduces the amount of duplicated code and means that if the UI changes, the fix need only be applied in one place. …
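The Page Object idea quoted in this excerpt can be illustrated with a minimal sketch. It is written in plain Java rather than Geb or WebDriver so that it runs without a browser; the `Browser` interface, the `FakeBrowser` stand-in, the `LoginPage` class, and all element ids are hypothetical names invented for illustration and are not taken from the slides or from any library.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal browser abstraction; a real test would use WebDriver or Geb instead.
interface Browser {
    void type(String fieldId, String text);
    void click(String buttonId);
    String textOf(String elementId);
}

// Page Object: the only place that knows the login screen's element ids.
// If the UI changes, only this class needs to be updated, not every test.
class LoginPage {
    private final Browser browser;

    LoginPage(Browser browser) {
        this.browser = browser;
    }

    LoginPage loginAs(String user, String password) {
        browser.type("username", user);
        browser.type("password", password);
        browser.click("submit");
        return this;
    }

    String greeting() {
        return browser.textOf("greeting");
    }
}

// In-memory stand-in (an assumption of this sketch) so the example runs without a real browser.
class FakeBrowser implements Browser {
    private final Map<String, String> fields = new HashMap<>();
    private String greeting = "";

    @Override
    public void type(String fieldId, String text) {
        fields.put(fieldId, text);
    }

    @Override
    public void click(String buttonId) {
        if ("submit".equals(buttonId)) {
            greeting = "Hello, " + fields.get("username");
        }
    }

    @Override
    public String textOf(String elementId) {
        return "greeting".equals(elementId) ? greeting : "";
    }
}
```

A test then talks only to `LoginPage`, for example `new LoginPage(browser).loginAs("ada", "secret").greeting()`, and never touches element ids directly.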

Pragmatic Unit Testing in Java 8 with JUnit

www.it-ebooks.info

Early praise for Pragmatic Unit Testing in Java 8 with JUnit

Langr, Hunt, and Thomas demonstrate, with abundant detailed examples, how unit testing with JUnit works in the real world. Beyond just showing simple isolated examples, they address the hard issues: things like mock objects, databases, multithreading, and getting started with automated unit testing. Buy this book and keep it on your desk, as you’ll want to refer to it often.
➤ Mike Cohn, author of Succeeding with Agile, Agile Estimating and Planning, and User Stories Applied

Working from a realistic application, Jeff gives us the reasons behind unit testing, the basics of using JUnit, and how to organize your tests. This is a super upgrade to an already good book. If you have the original, you’ll find valuable new ideas in this one. And if you don’t have the original, what’s holding you back?
➤ Ron Jeffries, www.ronjeffries.com

Rational, balanced, and devoid of any of the typical religious wars surrounding unit testing. Top-drawer stuff from Jeff Langr.
➤ Sam Rose

This book is an excellent resource for those new to the unit testing game. Experienced developers should also at the very least get familiar with the very helpful acronyms.
➤ Colin Yates, Principal Architect, QFI Consulting, LLP

We've left this page blank to make the page numbers the same in the electronic and paper books. We tried just leaving it out, but then people wrote us to ask about the missing pages. …

Comparative Analysis of JUnit and TestNG Framework

International Research Journal of Engineering and Technology (IRJET), Volume: 05, Issue: 05, May 2018. www.irjet.net. e-ISSN: 2395-0056, p-ISSN: 2395-0072.

Comparative Analysis of JUnit and TestNG framework. Manasi Patil (Student, Dept. of MCA, VES Institute of Technology, Maharashtra, India) and Mona Deshmukh (Professor, Dept. of MCA, VES Institute of Technology, Maharashtra, India).

Abstract: Testing is an important phase in the SDLC. Testing can be manual or automated. Nowadays automation testing is widely used to find defects and to ensure the correctness and completeness of the software. Open source frameworks such as Robot Framework, JUnit, Spock, NUnit, TestNG, Jasmine, Mocha, etc. can be used for automation testing. This paper compares JUnit and TestNG based on their features and functionalities.

Key Words: JUnit, TestNG, Automation Framework, Automation Testing, Selenium TestNG, Selenium JUnit

1. INTRODUCTION: Testing software manually is tedious work and takes a lot of time and effort. Automation saves a lot of time and money; it also increases the test coverage …

… It is more flexible than JUnit and supports parametrization, parallel execution and data driven testing. Table 1 compares different functionalities of the TestNG and JUnit frameworks.

Table 1: Functionality, TestNG vs JUnit (columns: Functionality, TestNG, JUnit; rows visible in the excerpt: Support Annotations, Support Test Suite Initialization, Support for Test Groups) …

Java Test Driven Development with TestNG

"Charting the Course ... to Your Success!"

Java Test Driven Development with TestNG: Course Summary

Description: Test Driven Development (TDD) has become a standard best practice for developers, especially those working in an Agile development environment. TDD is more than just automated unit testing; it is a team and individual development discipline that, when followed correctly, increases the productivity of both individual developers and entire teams. From the programmer’s perspective, TDD has another benefit: it allows programmers to eliminate the tedious tasks of debugging and reworking code so that they can focus on the creative work of designing and writing code. It makes programming fun again.

The course integrates two primary learning streams. The first is how to effectively implement TDD in a production or development environment and how to integrate TDD practices with other practices such as software craftsmanship, agile design practices, continuous integration, and best practices in object-oriented programming and Java development. The second learning stream is an in-depth, hands-on deep dive into Java TDD tools such as mocking libraries, matching libraries, and the TestNG framework itself. TestNG (Test Next Generation) is a test framework inspired by JUnit and NUnit but with additional features and functionality.

The class is designed to be about 50% hands-on labs and exercises, about 25% theory, and 25% instructor-led hands-on learning where students code along with the instructor.

Topics: The TDD process - “red, green, …

Smart BDD Testing Using Cucumber and JaCoCo

www.hcltech.com

Smart BDD Testing Using Cucumber and JaCoCo. Business Assurance & Testing. Whitepaper, April 2015.

Author: Arish Arbab is a Software Engineer at HCL Singapore Pte Limited, with expertise in Agile GUI/API automation methodologies.

Table of contents: Introduction; Problem Faced; Solution Approach; Benefits; Improvements; Applicability to Other Projects; Upcoming Features; References; Appreciations Received; About HCL.

© 2015, HCL Technologies. Reproduction prohibited. This document is protected under copyright by the author, all rights reserved.

INTRODUCTION: Behavioral Driven Development (BDD) testing uses natural language to describe the “desired behavior” of the system in a way that can be understood by the developer, the tester, and the customer. It is a synthesis and refinement of practices stemming from TDD and ATDD. It describes behaviors in a single notation that is directly accessible to domain experts, testers, and developers, which improves communication. It focuses on implementing only those behaviors that contribute most directly to the business outcomes, which optimizes the scenarios.

PROBLEM FACED: With the traditional automation approach, a summary report of automated tests handed to the business analysts is all but guaranteed to be met with a blank expression. This makes it tricky to prove that the tests are correct: do they match the requirement and, if the requirement changes, which tests need to change to reflect this? The whole idea behind BDD is to write tests in plain English, describing the behaviors of the thing that you are testing. …

Programming-In-Scala.Pdf

Cover · Overview · Contents · Discuss · Suggest · Glossary · Index

Programming in Scala. Martin Odersky, Lex Spoon, Bill Venners. Artima Press, Mountain View, California.

Programming in Scala, First Edition, Version 6

Martin Odersky is the creator of the Scala language and a professor at EPFL in Lausanne, Switzerland. Lex Spoon worked on Scala for two years as a post-doc with Martin Odersky. Bill Venners is president of Artima, Inc.

Artima Press is an imprint of Artima, Inc. P.O. Box 390122, Mountain View, California 94039

Copyright © 2007, 2008 Martin Odersky, Lex Spoon, and Bill Venners. All rights reserved.

First edition published as PrePrint™ eBook 2007. First edition published 2008. Produced in the United States of America.

12 11 10 09 08 5 6 7 8 9

ISBN-10: 0-9815316-1-X
ISBN-13: 978-0-9815316-1-8

No part of this publication may be reproduced, modified, distributed, stored in a retrieval system, republished, displayed, or performed, for commercial or noncommercial purposes or for compensation of any kind without prior written permission from Artima, Inc. All information and materials in this book are provided "as is" and without warranty of any kind. The term “Artima” and the Artima logo are trademarks or registered trademarks of Artima, Inc. All other company and/or product names may be trademarks or registered trademarks of their owners.

to Nastaran - M.O.
to Fay - L.S.
to Siew - B.V. …

Bringing Test-Driven Development to Web Service Choreographies

Journal of Systems and Software • October 2014 • http://www.sciencedirect.com/science/article/pii/S016412121400209X

Bringing Test-Driven Development to Web Service Choreographies

Felipe Besson, Paulo Moura, Fabio Kon (Department of Computer Science, University of São Paulo, {besson, pbmoura, kon}@ime.usp.br); Dejan Milojicic (Hewlett Packard Laboratories, Palo Alto, USA, [email protected])

Abstract: Choreographies are a distributed approach for composing web services. Compared to orchestrations, which use a centralized scheme for distributed service management, the interaction among the choreographed services is collaborative with decentralized coordination. Despite the advantages, choreography development, including the testing activities, has not yet evolved sufficiently to support the complexity of the large distributed systems. This substantially impacts the robustness of the products and overall adoption of choreographies. The goal of the research described in this paper is to support the Test-Driven Development (TDD) of choreographies to facilitate the construction of reliable, decentralized distributed systems. To achieve that, we present Rehearsal, a framework supporting the automated testing of choreographies at development-time. In addition, we present a choreography development methodology that guides the developer on applying TDD using Rehearsal. To assess the framework and the methodology, we conducted an exploratory study with developers, whose result was that Rehearsal was considered very helpful for the application of TDD and that the methodology helped the development of robust choreographies.

Keywords: Automated testing, Test-Driven Development, Web service choreographies

I. Introduction: Service-Oriented Architecture (SOA) is a set of principles and methods that uses services as the building blocks for the development of distributed applications. …

Unit Testing Essentials Using JUnit 5 and Mockito

Trivera Technologies: IT Training, Coaching, Courseware. Collaborate. Educate. Accelerate! www.triveratech.com

Unit Testing Essentials using JUnit 5 and Mockito (with Best Practices). Explore Unit Testing, JUnit, Best Practices, Testing Apps with Native IDE Support, Debugging, Using Mockito & More.

Course Snapshot
- Course: Unit Testing Essentials using JUnit 5 and Mockito (TT3526)
- Duration: 3 days
- Audience & Skill-Level: This is an introduction to Unit Testing course for experienced Java developers.
- Language / Tools: This course uses JUnit 5 and Mockito. This course is also available for JUnit 4, or JUnit 5 and EasyMock.
- Hands-on Learning: This course is approximately 50% hands-on, combining expert lecture, real-world demonstrations and group discussions with machine-based practical labs and exercises. Student machines are required.
- Delivery Options: This course is available for onsite private classroom presentation, live online virtual presentation, or can be presented in a flexible blended learning format for combined onsite and remote attendees. Please also ask about our Self-Paced / Video or QuickSkills / Short Course options.
- Public Schedule: This course has active dates on our live-online open enrollment Public Schedule.
- Customizable: This course agenda, topics and labs can be further adjusted to target your specific training skills objectives, tools and learning goals. Please inquire for details.

Overview: JUnit 5 and EasyMock make it possible to write higher-quality Java code. These powerful tools are designed to support robust, predictable and automated testing development in the Java enterprise application arena. Unit Testing Essentials using JUnit 5 and Mockito is a three-day, hands-on unit testing course geared for experienced developers who need to get up and running with essential unit testing skills using JUnit, Mockito, and other tools. …

Testen Mit Mockito.Pdf

Testing with Mockito. http://www.vogella.com/tutorials/Mockito/article.html

Why test? Would this work without testing it beforehand?

Definition of tests
- A software test checks and evaluates software against the requirements defined for its use and measures its quality. The findings are used to detect and fix software defects. Tests during software development serve to put the software into operation with as few defects as possible.

Kinds of tests
- Unit test: tests the individual methods of a class
- Integration test: tests several classes or modules together
- System test: tests the system as a whole (usually a GUI test)
- Acceptance test: test by the customer of whether the product is usable
- Regression test: evidence that, after a change to the system under test, previously run tests still pass
- Field test: test during actual use
- Load test
- Stress test
- Smoke tests: (random test) not systematic; only functionality is tested

Definitions
- SUT: system under test
- CUT: class under test

TDD - Test Driven Development
- Test-Driven Development with Mockito, Packt 2013
- JUnit.org

Hamcrest
- Hamcrest is a library of matchers, which can be combined to create flexible expressions of intent in tests. They've also been used for other purposes.
- http://hamcrest.org/
- https://github.com/hamcrest/JavaHamcrest
- http://www.leveluplunch.com/java/examples/hamcrest-collection-matchers-junit-testing/
- http://grepcode.com/file/repo1.maven.org/maven2/org.hamcrest/hamcrest-library/1.3/org/hamcrest/Matchers.java

Test Doubles

Why test doubles?
- A unit test should test a class in isolation. Side effects from other classes or the system should be eliminated if possible. The achievement of this desired goal is typically complicated by the fact that Java classes usually depend on other classes. …
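The point about isolation can be made concrete with a hand-written test double. The following is a minimal sketch in plain Java, deliberately without Mockito or JUnit so that it stays self-contained; `MailSender`, `WelcomeService`, and `RecordingMailSender` are hypothetical names chosen for illustration and do not come from the slides.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborator that the class under test depends on.
interface MailSender {
    void send(String recipient, String message);
}

// Class under test (CUT): it depends on the MailSender interface,
// not on a concrete mail server, so the dependency can be replaced in a test.
class WelcomeService {
    private final MailSender sender;

    WelcomeService(MailSender sender) {
        this.sender = sender;
    }

    void welcome(String user) {
        sender.send(user, "Welcome, " + user + "!");
    }
}

// Hand-written test double: records calls instead of sending real mail,
// which removes the side effect from the unit test.
class RecordingMailSender implements MailSender {
    final List<String> sent = new ArrayList<>();

    @Override
    public void send(String recipient, String message) {
        sent.add(recipient + ": " + message);
    }
}
```

In a unit test, `WelcomeService` is constructed with a `RecordingMailSender`, and the assertions inspect the `sent` list. A mocking library such as Mockito generates doubles of this kind so they do not have to be written by hand.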

Mastering Software Testing with Junit 5: Comprehensive Guide to Develop

Mastering Software Testing with JUnit 5: Comprehensive guide to develop high quality Java applications. Boni García. BIRMINGHAM - MUMBAI

Copyright © 2017 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

First published: October 2017. Production reference: 1231017. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78728-573-6. www.packtpub.com

Credits
- Author: Boni García
- Reviewers: Luis Fernández Muñoz, Ashok Kumar S
- Commissioning Editor: Smeet Thakkar
- Acquisition Editor: Nigel Fernandes
- Content Development Editor: Mohammed Yusuf Imaratwale
- Technical Editor: Ralph Rosario
- Copy Editor: Charlotte Carneiro
- Project Coordinator: Ritika Manoj
- Proofreader: Safis Editing
- Indexer: Aishwarya Gangawane
- Graphics: Jason Monteiro
- Production Coordinator: Shraddha Falebhai

About the Author: Boni García has held a PhD in information and communications technology from the Technical University of Madrid (UPM) in Spain since 2011.