Memory Reclamation Strategies for Lock-Free and Concurrently-Readable Data Structures

Recommended publications

(12) Patent Application Publication (10) Pub. No.: US 2004/0107227 A1 Michael (43) Pub

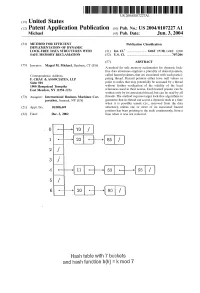

US 2004/0107227 A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2004/0107227 A1 Michael (43) Pub. Date: Jun. 3, 2004 (54) METHOD FOR EFFICIENT IMPLEMENTATION OF DYNAMIC LOCK-FREE DATA STRUCTURES WITH SAFE MEMORY RECLAMATION (75) Inventor: Maged M. Michael, Danbury, CT (US) Correspondence Address: F. CHAU & ASSOCIATES, LLP, Suite 501, 1900 Hempstead Turnpike, East Meadow, NY 11554 (US) (73) Assignee: International Business Machines Corporation, Armonk, NY (US) (21) Appl. No.: 10/308,449 (22) Filed: Dec. 3, 2002 Publication Classification (51) Int. Cl.: G06F 17/30; G06F 12/00 (52) U.S. Cl.: 707/206 (57) ABSTRACT A method for safe memory reclamation for dynamic lock-free data structures employs a plurality of shared pointers, called hazard pointers, that are associated with each participating thread. Hazard pointers either have null values or point to nodes that may potentially be accessed by a thread without further verification of the validity of the local references used in their access. Each hazard pointer can be written only by its associated thread, but can be read by all threads. The method requires target lock-free algorithms to guarantee that no thread can access a dynamic node at a time when it is possibly unsafe (i.e., removed from the data structure), unless one or more of its associated hazard pointers has been pointing to the node continuously, from a time when it was not removed. [Front-page figure: hash table with 7 buckets and hash function h(k) = k mod 7.] [Sheet 1 of 8, figure labels: primary storage, operating system, secondary storage, I/O services, application programs.]
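The hazard-pointer rule described in the abstract above can be sketched in C++ roughly as follows. This is a minimal illustration, not the patent's implementation: the names `Node`, `g_hazard`, `protect`, `clear`, and `scan`, the single hazard pointer per thread, and the fixed thread count `kMaxThreads` are all assumptions made for the example.

```cpp
#include <algorithm>
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <vector>

struct Node { int value; };

constexpr std::size_t kMaxThreads = 4;  // hypothetical fixed thread count

// One hazard pointer per thread: written only by its owner, read by all.
// Static storage plus `{}` leaves every slot null initially.
std::array<std::atomic<Node*>, kMaxThreads> g_hazard{};

// Publish a hazard pointer for the node currently stored in `src`.
// The re-read confirms the node was still reachable after publication,
// so the hazard pointer covers it "from a time when it was not removed".
Node* protect(std::size_t tid, const std::atomic<Node*>& src) {
    assert(tid < kMaxThreads);
    Node* p = src.load();
    for (;;) {
        g_hazard[tid].store(p);
        Node* again = src.load();
        if (again == p) return p;
        p = again;  // src changed in between; publish the new value and retry
    }
}

// Drop this thread's protection once it no longer dereferences the node.
void clear(std::size_t tid) {
    assert(tid < kMaxThreads);
    g_hazard[tid].store(nullptr);
}

// Free every retired node that no hazard pointer references and keep the
// rest for a later scan. Returns the number of nodes freed.
std::size_t scan(std::vector<Node*>& retired) {
    std::size_t freed = 0;
    auto it = retired.begin();
    while (it != retired.end()) {
        Node* candidate = *it;
        bool hazardous = std::any_of(
            g_hazard.begin(), g_hazard.end(),
            [candidate](const std::atomic<Node*>& h) { return h.load() == candidate; });
        if (hazardous) {
            ++it;
        } else {
            delete candidate;
            it = retired.erase(it);
            ++freed;
        }
    }
    return freed;
}
```

In this sketch a reader calls `protect` before dereferencing a shared node and `clear` afterwards, while a thread that has unlinked a node appends it to its own `retired` list and calls `scan` from time to time. The default sequentially consistent atomics keep the publish-then-recheck step ordered; weaker orderings would need explicit fences.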

Practical Parallel Data Structures

Practical Parallel Data Structures. Shahar Timnat. Technion - Computer Science Department - Ph.D. Thesis PHD-2015-06 - 2015. Research Thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Submitted to the Senate of the Technion - Israel Institute of Technology, Sivan 5775, Haifa, June 2015. This research was carried out under the supervision of Prof. Erez Petrank, in the Faculty of Computer Science. Some results in this thesis have been published as articles by the author and research collaborators in conferences during the course of the author's doctoral research period, the most up-to-date versions of which being: Keren Censor-Hillel, Erez Petrank, and Shahar Timnat. Help! In Proceedings of the 34th Annual ACM Symposium on Principles of Distributed Computing, PODC 2015, Donostia-San Sebastian, Spain, July 21-23, 2015. Erez Petrank and Shahar Timnat. Lock-free data-structure iterators. In Distributed Computing - 27th International Symposium, DISC 2013, Jerusalem, Israel, October 14-18, 2013. Proceedings, pages 224-238, 2013. Shahar Timnat, Anastasia Braginsky, Alex Kogan, and Erez Petrank. Wait-free linked-lists. In Principles of Distributed Systems, 16th International Conference, OPODIS 2012, Rome, Italy, December 18-20, 2012. Proceedings, pages 330-344, 2012. Shahar Timnat, Maurice Herlihy, and Erez Petrank. A practical transactional memory interface. In Euro-Par 2015 Parallel Processing - 21st International Conference, Vienna, Austria, August 24-28, 2015.

Contention Resistant Non-Blocking Priority Queues

Contention resistant non-blocking priority queues. Lars Frydendal Bonnichsen. Kongens Lyngby 2012, IMM-MSC-2012-21. Technical University of Denmark, Informatics and Mathematical Modelling, Building 321, DK-2800 Kongens Lyngby, Denmark. Phone +45 45253351, Fax +45 45882673, [email protected], www.imm.dtu.dk. Table of contents: 1 Abstract; 2 Acknowledgments; 3 Introduction (3.1 Contributions, 3.2 Outline); 4 Background (4.1 Terminology, 4.2 Prior work, 4.3 Summary); 5 Concurrent building blocks

Efficient and Practical Non-Blocking Data Structures

Thesis for the degree of Doctor of Philosophy. Efficient and Practical Non-Blocking Data Structures. HÅKAN SUNDELL. Department of Computing Science, Chalmers University of Technology and Göteborg University, SE-412 96 Göteborg, Sweden. Göteborg, 2004. ISBN 91-7291-514-5. © HÅKAN SUNDELL, 2004. Doktorsavhandlingar vid Chalmers tekniska högskola, Ny serie nr 2196, ISSN 0346-718X. Technical report 30D, ISSN 1651-4971. School of Computer Science and Engineering, Department of Computing Science, Chalmers University of Technology and Göteborg University, SE-412 96 Göteborg, Sweden. Telephone + 46 (0)31-772 1000. Cover: A skip list data structure with concurrent inserts and deletes. Chalmers Reproservice, Göteborg, Sweden, 2004. Abstract: This thesis deals with how to design and implement efficient, practical and reliable concurrent data structures. The design method using mutual exclusion incurs serious drawbacks, whereas the alternative non-blocking techniques avoid those problems and also admit improved parallelism. However, designing non-blocking algorithms is a very complex task, and a majority of the algorithms in the literature are either inefficient, impractical or both. We have studied how information available in real-time systems can improve and simplify non-blocking algorithms. We have designed new methods for recycling of buffers as well as time-stamps, and have applied them on known non-blocking algorithms for registers, snapshots and priority queues. We have designed, to the best of our knowledge, the first practical lock-free algorithm of a skip list data structure. Using our skip list construction we have designed a lock-free algorithm of the priority queue abstract data type, as well as a lock-free algorithm of the dictionary abstract data type.

On the Design and Implementation of an Efficient Lock-Free Scheduler

On the Design and Implementation of an Efficient Lock-Free Scheduler. Florian Negele¹, Felix Friedrich¹, Suwon Oh², and Bernhard Egger². ¹ Department of Computer Science, ETH Zürich, Zürich, Switzerland {negelef,felix.friedrich}@inf.ethz.ch ² Department of Computer Science and Engineering, Seoul National University, Seoul, Korea {suwon,bernhard}@csap.snu.ac.kr Abstract. Schedulers for symmetric multiprocessing (SMP) machines use sophisticated algorithms to schedule processes onto the available processor cores. Hardware-dependent code and the use of locks to protect shared data structures from simultaneous access lead to poor portability, the difficulty to prove correctness, and a myriad of problems associated with locking such as limiting the available parallelism, deadlocks, starvation, interrupt handling, and so on. In this work we explore what can be achieved in terms of portability and simplicity in an SMP scheduler that achieves similar performance to state-of-the-art schedulers. By strictly limiting ourselves to only lock-free data structures in the scheduler, the problems associated with locking vanish altogether. We show that by employing implicit cooperative scheduling, additional guarantees can be made that allow novel and very efficient implementations of memory-efficient unbounded lock-free queues. Cooperative multitasking has the additional benefit that it provides an extensive hardware independence. It even allows the scheduler to be used as a runtime library for applications running on top of standard operating systems. In a comparison against Windows Server and Linux running on up to 64 cores we analyze the performance of the lock-free scheduler and show that it matches or even outperforms the performance of these two state-of-the-art schedulers in a variety of benchmarks.

High Performance Dynamic Lock-Free Hash Tables and List-Based Sets

High Performance Dynamic Lock-Free Hash Tables and List-Based Sets. Maged M. Michael, IBM Thomas J. Watson Research Center, P.O. Box 218, Yorktown Heights NY 10598 USA [email protected] ABSTRACT Lock-free (non-blocking) shared data structures promise more robust performance and reliability than conventional lock-based implementations. However, all prior lock-free algorithms for sets and hash tables suffer from serious drawbacks that prevent or limit their use in practice. These drawbacks include size inflexibility, dependence on atomic primitives not supported on any current processor architecture, and dependence on highly-inefficient or blocking memory management techniques. Building on the results of prior researchers, this paper presents the first CAS-based lock-free list-based set algorithm that is compatible with all lock-free memory management methods. [...] based shared objects suffer significant performance degradation when faced with the inopportune delay of a thread while holding a lock, for instance due to preemption. While the lock holder is delayed, other active threads that need access to the locked shared object are prevented from making progress until the lock is released by the delayed thread. A lock-free (also called non-blocking) implementation of a shared object guarantees that if there is an active thread trying to perform an operation on the object, some operation, by the same or another thread, will complete within a finite number of steps regardless of other threads' actions [8]. Lock-free objects are inherently immune to priority inversion and deadlock, and offer robust performance, even with indefinite thread delays and failures. Shared sets (also called dictionaries) are the building blocks
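The lock-free progress guarantee quoted above is usually obtained with a compare-and-swap (CAS) retry loop. The sketch below uses a simple stack rather than the paper's list-based set, purely to keep the example short; `StackNode` and `LockFreeStack` are hypothetical names, and popped nodes are deliberately leaked because freeing them safely is exactly the memory reclamation problem these publications address.

```cpp
#include <atomic>
#include <cassert>
#include <optional>

struct StackNode {
    int value;
    StackNode* next;
};

// Illustrative CAS-based stack. A failed CAS generally means another
// thread's CAS succeeded (compare_exchange_weak may also fail spuriously),
// so some operation keeps completing even if this thread has to retry.
class LockFreeStack {
public:
    void push(int v) {
        StackNode* n = new StackNode{v, head_.load()};
        // On failure, compare_exchange_weak stores the current head into
        // n->next, so the loop simply retries with the fresh value.
        while (!head_.compare_exchange_weak(n->next, n)) {
        }
    }

    std::optional<int> pop() {
        StackNode* old = head_.load();
        // Reading old->next is safe here only because nodes are never freed.
        while (old != nullptr && !head_.compare_exchange_weak(old, old->next)) {
        }
        if (old == nullptr) return std::nullopt;
        // Deliberately not deleted: another thread may still be reading
        // `old`. Reclaiming it needs a scheme such as hazard pointers.
        return old->value;
    }

private:
    std::atomic<StackNode*> head_{nullptr};
};
```

No thread ever waits on a lock held by a delayed thread in this sketch. The cost is the leaked nodes: as soon as `pop` is allowed to free or reuse a node, the loop needs protection against use-after-free and ABA.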

Verifying Concurrent Memory Reclamation Algorithms with Grace

Verifying Concurrent Memory Reclamation Algorithms with Grace. Alexey Gotsman, Noam Rinetzky, and Hongseok Yang. 1 IMDEA Software Institute, 2 Tel-Aviv University, 3 University of Oxford. Abstract. Memory management is one of the most complex aspects of modern concurrent algorithms, and various techniques proposed for it, such as hazard pointers, read-copy-update and epoch-based reclamation, have proved very challenging for formal reasoning. In this paper, we show that different memory reclamation techniques actually rely on the same implicit synchronisation pattern, not clearly reflected in the code, but only in the form of assertions used to argue its correctness. The pattern is based on the key concept of a grace period, during which a thread can access certain shared memory cells without fear that they get deallocated. We propose a modular reasoning method, motivated by the pattern, that handles all three of the above memory reclamation techniques in a uniform way. By explicating their fundamental core, our method achieves clean and simple proofs, scaling even to realistic implementations of the algorithms without a significant increase in proof complexity. We formalise the method using a combination of separation logic and temporal logic and use it to verify example instantiations of the three approaches to memory reclamation. 1 Introduction. Non-blocking synchronisation is a style of concurrent programming that avoids the blocking inherent to lock-based mutual exclusion. Instead, it uses low-level synchronisation techniques, such as compare-and-swap operations, that lead to more complex algorithms, but provide a better performance in the presence of high contention among threads.
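The grace-period idea in the abstract above can be illustrated with a simplified epoch-style scheme. The sketch below is an assumption-laden illustration, not the paper's formalisation or any particular library's algorithm: `g_epoch`, `g_local`, `enter`, `leave`, `retire`, `reclaim`, and `kThreads` are hypothetical names, and the value 0 is reserved to mean "thread is outside any critical section".

```cpp
#include <algorithm>
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

constexpr std::size_t kThreads = 4;  // hypothetical fixed thread count

std::atomic<unsigned long> g_epoch{1};  // global epoch, starts at 1
// Per-thread announcement: 0 = idle, otherwise the epoch seen on entry.
std::array<std::atomic<unsigned long>, kThreads> g_local{};

void enter(std::size_t tid) {
    assert(tid < kThreads);
    g_local[tid].store(g_epoch.load());
}

void leave(std::size_t tid) {
    assert(tid < kThreads);
    g_local[tid].store(0);
}

struct Retired {
    int* ptr;
    unsigned long epoch;  // global epoch at the moment of retirement
};

// Call only after `p` has been unlinked from the shared structure.
// Bumping the epoch separates readers that may still hold `p` (announced
// epoch <= tag) from readers that entered later and cannot reach it.
void retire(std::vector<Retired>& limbo, int* p) {
    limbo.push_back({p, g_epoch.fetch_add(1)});
}

// A node's grace period has elapsed once every thread is idle or announced
// an epoch newer than the node's tag. Returns the number of nodes freed.
std::size_t reclaim(std::vector<Retired>& limbo) {
    unsigned long oldest = std::numeric_limits<unsigned long>::max();
    for (const auto& slot : g_local) {
        unsigned long e = slot.load();
        if (e != 0) oldest = std::min(oldest, e);
    }
    std::size_t freed = 0;
    auto it = limbo.begin();
    while (it != limbo.end()) {
        if (it->epoch < oldest) {
            delete it->ptr;
            it = limbo.erase(it);
            ++freed;
        } else {
            ++it;
        }
    }
    return freed;
}
```

Each thread is assumed to keep its own `limbo` list and to wrap every access to shared nodes between `enter` and `leave`. One stalled reader blocks reclamation of everything retired after it entered, which is the usual trade-off of epoch-style schemes compared with hazard pointers.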

Non-Blocking Data Structures Handling Multiple Changes Atomically Niloufar Shafiei a Dissertation Submitted to the Faculty of Gr

NON-BLOCKING DATA STRUCTURES HANDLING MULTIPLE CHANGES ATOMICALLY. NILOUFAR SHAFIEI. A DISSERTATION SUBMITTED TO THE FACULTY OF GRADUATE STUDIES IN PARTIAL FULFILMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY. GRADUATE PROGRAM IN DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING, YORK UNIVERSITY, TORONTO, ONTARIO, JULY 2015. © NILOUFAR SHAFIEI, 2015. Abstract: Here, we propose a new approach to design non-blocking algorithms that can apply multiple changes to a shared data structure atomically using Compare&Swap (CAS) instructions. We applied our approach to two data structures, doubly-linked lists and Patricia tries. In our implementations, only update operations perform CAS instructions; operations other than updates perform only reads of shared memory. Our doubly-linked list implements a novel specification that is designed to make it easy to use as a black box in a concurrent setting. In our doubly-linked list implementation, each process accesses the list via a cursor, which is an object in the process's local memory that is located at an item in the list. Our specification describes how updates affect cursors and how a process gets feedback about other processes' updates at the location of its cursor. We provide a detailed proof of correctness for our list implementation. We also give an amortized analysis for our list implementation, which is the first upper bound on amortized time complexity that has been proved for a concurrent doubly-linked list. In addition, we evaluate our list algorithms on a multi-core system empirically to show that they are scalable in practice. Our non-blocking Patricia trie implementation stores a set of keys, represented as bit strings, and allows processes to concurrently insert, delete and find keys.

Distributed Computing with Modern Shared Memory

Thesis no. 7141. Distributed Computing with Modern Shared Memory. Presented on 20 November 2020 to the School of Computer and Communication Sciences, Distributed Computing Laboratory, Doctoral Program in Computer and Communication Sciences, for the degree of Doctor of Science (Docteur ès Sciences) by Mihail Igor ZABLOTCHI. Accepted on the recommendation of the jury: Prof. R. Urbanke, jury president; Prof. R. Guerraoui, thesis director; Prof. H. Attiya, examiner; Prof. N. Shavit, examiner; Prof. J.-Y. Le Boudec, examiner. 2020. “Where we going man?” “I don’t know but we gotta go.” (Jack Kerouac, On the Road) To my grandmother Momo, who got me started on my academic adventure and taught me that learning can be fun. Acknowledgements: I am first and foremost grateful to my advisor, Rachid Guerraoui. Thank you for believing in me, for encouraging me to trust my own instincts, and for opening so many doors for me. Looking back, it seems like you always made sure I had the perfect conditions to do my best possible work. Thank you also for all the things you taught me: how to get at the heart of a problem, how to stay optimistic despite all signs to the contrary, how to be pragmatic while also chasing beautiful problems. I am grateful to my other supervisors and mentors: Yvonne Anne Pignolet and Ettore Ferranti for my internship at ABB Research, Maurice Herlihy for the time at Brown University and at Oracle Labs, Dahlia Malkhi and Ittai Abraham for my internship at VMware, Virendra Marathe and Alex Kogan for my internship at Oracle Labs and the subsequent collaboration, Marcos Aguilera for the collaboration on the RDMA work, and Aleksandar Dragojevic for the internship at Microsoft Research.