NASA Program Assessment Process Study

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Record Store Day 2020 (GSA) - 18.04.2020 | (as of 05.03.2020)

Columns: Distributor | Artist | Title | Format | Contents | Label | Genre | Article number | UPC/EAN | AT+CH (yes/no/via whom?), each followed by its Info text.

375Media | Ace Of Base | The Sign | 7" | 1 | !K7 | Pop | SI 174427 | 730003726071 | D
Info: Exclusive Record Store Day version pressed on 7" picture disc! Top song on Billboard's 1994 Year End Chart.

375MEDIA | ACID MOTHERS TEMPLE | NAM MYO HO REN GE KYO (RSD PINK VINYL) | LP | 2 | SPACE AGE RECORDINGS | PSYDEL | 139791 | 5023693106519 | AT: 375 / CH: Irascible
Info: [ENG] Pink heavyweight 180 gram audiophile double vinyl LP. Not previously released on vinyl. 'Nam Myo Ho Ren Ge Kyo' was first released on CD only in 2007 by Ace Fu Records and now re-mastered by John Rivers at Woodbine Street Studio especially for vinyl.

375MEDIA | BAKER, CHET | MR. B | LP | 1 | TIDAL WAVES MUSIC | JAZZ | 139267 | 0752505992549 | AT: 375 / CH: Irascible
Info: Out of print on vinyl since 1984, FIRST official vinyl reissue since 1984. Chet Baker (1929 - 1988) was an American jazz trumpeter, actor and vocalist who needs little introduction. This reissue was remastered by Peter Brussee (Herman Brood) and features the original album cover shot by Hans Harzheim (Pharoah Sanders, Coltrane & Sun Ra). Also included are the original liner notes from jazz writer Wim Van Eyle and two bonus tracks that were not on the original vinyl release. This reissue comes as a deluxe 180g vinyl edition with obi strip, released exclusively for Record Store Day (UK & Europe) 2020.

Info: * Record Store Day 2020 Exclusive Release. * Features new artwork. * LP pressed on pink vinyl & housed in a gatefold jacket. Limited to 500 copies // "Last Tango in Paris" is a 1972 film directed by Bernardo Bertolucci; sax player Gato Barbieri realized the soundtrack.

Singing Accent Americanisation in the Light of Frequency Effects: Lot Unrounding and Price Monophthongisation in Focus

Research in Language, 2018, vol. 16:2 DOI: 10.2478/rela-2018-0008

SINGING ACCENT AMERICANISATION IN THE LIGHT OF FREQUENCY EFFECTS: LOT UNROUNDING AND PRICE MONOPHTHONGISATION IN FOCUS

MONIKA KONERT-PANEK, University of Warsaw

Abstract: The paper investigates – within the framework of usage-based phonology – the significance of lexical frequency effects in singing accent Americanisation. The accent of Joe Elliott of a British band, Def Leppard, is analysed with regard to LOT unrounding and PRICE monophthongisation. Both auditory and acoustic methods are employed; PRAAT is used to provide acoustic verification of the auditory analysis whenever isolated vocal tracks are available. The statistical significance of the obtained results is verified by means of a chi-square test. In both analysed cases the percentage of frequent words undergoing the change is higher compared with infrequent ones and in the case of PRICE monophthongisation the result is statistically significant, which suggests that word frequency may affect singing style variation.

Keywords: frequency effects, LOT unrounding, popular music, PRICE monophthongisation, singing accent, usage-based phonology

1. Introduction: The phenomenon of style-shifting involved in pop singing in general and the Americanisation of British singing accent in particular have been investigated from various theoretical perspectives (Trudgill 1983, Simpson 1999, Beal 2009, Gibson and Bell 2012 among others). Depending on the theoretical standpoint, the notions of identity, reference style or default accent have been brought to light and assigned major explanatory power. Trudgill (1983) in his seminal paper on the sociolinguistics of British pop-song pronunciation interprets the emulation of the American accent as a symbolic tribute to the origins of popular music and provides the list of six characteristic features of this stylisation (1), two of which ((1c) and (1d)) are addressed in the present paper.

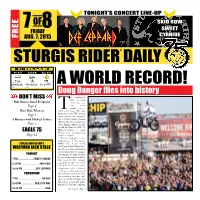

A World Record!

STURGIS RIDER DAILY ® FREE. FRIDAY, AUG. 7, 2015. Fri 8/7, Sat 8/8, Sun 8/9. TONIGHT'S CONCERT LINE-UP OF SKID ROW, SWEET CYANIDE.

A WORLD RECORD! Doug Danger flies into history

The undisputed king of stunt men? Sure, certain names might come to mind at that phrase. But since yesterday, at 6:03 PM, the only name people are mentioning is Doug Danger. Because that was the time on the clock when Danger jumped 22 cars aboard Evel Knievel's XR-750 Harley-Davidson, a stunt Knievel once attempted but failed to complete. The feat took place in the amphitheater at the Sturgis Buffalo Chip as part of the Evel Knievel Thrill Show. Danger, who has been performing motorcycle jumps for decades, was inspired by Knievel when he was young and got to know him later in life. Danger regarded this stunt not as a way to best his hero but as a favor, completing a task for a friend. Danger is fully cognizant of the potential peril of his chosen profession and he's realistic; he knows firsthand the flip side of a successful jump. But he felt solid and confident (Continued on Page 2)

DON'T MISS: Bob Hansen Award Recipients, Page 4. Rat's Hole Winners, Page 5. 5 Minutes with Michael Lichter, Page 3. EAGLE 75, Page 12.

STURGIS BUFFALO CHIP'S WOLFMAN JACK STAGE. TONIGHT: 7 PM SWEET CYANIDE; 8:30 PM SKID ROW; 10:30 PM DEF LEPPARD. TOMORROW: 7 PM NICNOS; 8:30 PM ADELITAS WAY; 10:30 PM WAR.

November 1983

VOL. 7, NO. 11 CONTENTS Cover Photo by Lewis Lee FEATURES PHIL COLLINS Don't let Phil Collins' recent success as a singer fool you: he wants everyone to know that he's still as interested as ever in being a drummer. Here, he discusses the percussive side of his life, including his involvement with Genesis, his work with Robert Plant, and his dual drumming with Chester Thompson. by Susan Alexander 8 NDUGU LEON CHANCLER As a drummer, Ndugu has worked with such artists and groups as Herbie Hancock, Michael Jackson, and Weather Report. As a producer, his credits include Santana, Flora Purim, and George Duke. As articulate as he is talented, Ndugu describes his life, his drumming, and his musical philosophies. 14 by Robin Tolleson INSIDE SABIAN by Chip Stern 18 JOE LABARBERA Joe LaBarbera is a versatile drummer whose career spans a broad spectrum of experience ranging from performing with pianist Bill Evans to most recently appearing with Tony Bennett. In this interview, LaBarbera discusses his early life as a member of a musical family and the influences that have made him a "lyrical" drummer. This accomplished musician also describes the personal standards that have allowed him to maintain a stable life-style while pursuing a career as a jazz musician. 24 by Katherine Alleyne & Judith Sullivan McIntosh STRICTLY TECHNIQUE UP AND COMING COLUMNS Double Paradiddles Around the Def Leppard's Rick Allen Drumset 56 EDUCATION by Philip Bashe by Stanley Ellis 102 ON THE MOVE ROCK PERSPECTIVES LISTENER'S GUIDE Thunder Child 60 A Beat Study by Paul T.

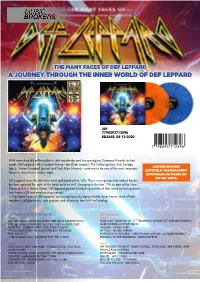

A Journey Through the Inner World of Def Leppard

THE MANY FACES OF DEF LEPPARD: A JOURNEY THROUGH THE INNER WORLD OF DEF LEPPARD. 2LP 7798093712896. RELEASE: 04-12-2020. LIMITED EDITION GATEFOLD TRANSPARENT ORANGE/BLUE MARBLED 180 GR. VINYL.

With more than 65 million albums sold worldwide and two prestigious Diamond Awards to their credit, Def Leppard with its current line-up (Joe Elliott, vocals; Phil Collen, guitar; Rick Savage, bass; Vivian Campbell, guitar; and Rick Allen, drums) continue to be one of the most important forces in rock music. In many ways, Def Leppard were the definitive hard rock band of the '80s. There were groups that rocked harder, but few captured the spirit of the times quite as well. Emerging in the late '70s as part of the New Wave of British Heavy Metal, Def Leppard gained a following outside of that scene by toning down their heavy riffs and emphasizing melody. In The Many Faces of Def Leppard, we are going to dig deep into the lesser known work of their members, collaborations, side projects and of course, their hit-filled catalog.

LP1 - THE MANY FACES OF DEF LEPPARD, Side A: ALL JOIN HANDS - ROADHOUSE FEAT. PETE WILLIS & FRANK NOON; I WILL BE THERE - GOMAGOG FEAT. PETE WILLIS & JANICK GERS; DOIN' FINE - CARMINE APPICE FEAT. VIVIAN CAMPBELL; ON A LONELY ROAD - THE FROG & THE RICK VITO BAND; OVER MY DEAD BODY - MAN RAZE FEAT.

LP2 - THE SONGS, Side A: POUR SOME SUGAR ON ME - LEE THOMPSON, JOHNNY DEE, RICHARD KENDRICK, MARKO PUKKILA & HOWIE SIMON; HYSTERIA - DANIEL FLORES; LET IT GO - WICKED GARDEN FEAT. JOE ELLIOTT; ROCK ROCK TIL YOU DROP - CHRIS POLAND, JOE VIERS & RICHARD KENDRICK

Def Leppard “Hysteria” By: Kyle Harman

Hysteria would be a fitting name for the album, thought Def Leppard drummer Rick Allen. Following the horrific 1984 car accident that cost him his left arm, it was the perfect title to describe the worldwide media coverage that followed the band between 1984 and 1987. The album was the last to feature lead guitarist Steve Clark, who died of an overdose in 1991. In the prime years of glam metal in the late '80s, with the grunge era about to begin, Def Leppard delivered the band's best-selling album to date, selling 25 million copies worldwide and 12 million in the US alone. The three-year recording process was painstaking: between Allen's accident and producers coming and going, the band became a perfect example of how patience sometimes truly does pay off. A unique recording process took place in which each member recorded his parts separately, allowing each to focus on his own performance. The original intent was to make a rock version of Michael Jackson's Thriller, with every song on the album a possible hit single. The end result was impressive, to say the least, with seven singles released from the one album. The sessions varied widely in length: "Animal" took two and a half years to reach its final form, whereas "Pour Some Sugar On Me" was finished within two weeks.

Hello America Free

FREE HELLO AMERICA PDF J. G. Ballard, Ben Marcus | 256 pages | 21 Mar 2012 | HarperCollins Publishers | 9780007287031 | English | London, United Kingdom

Hello America. Writer: Lenine El-Ramli. After being suspended from the People's Council, Bakhit and Adeela travel to New York at the request of Bakhit's cousin Nawfal, who is a wealthy man living with his wife and two kids. Bakhit and Adeela notice cultural differences as they try to earn a decent living in America and get married after years of waiting.

Cast overview: Adel Emam Bakhit Sherine Adeela Osama Abbas Nawfal Rushdi El Mahdi Frank Enas Mekky Ezzat Diaa Abdel Khalek Shokry Hanem Mohamed Bakhit's Mother Ayman Mohmed Sam Sayed Gabr NaNa Marlene Jackson Sitona Barbara Sunny Lane

Adrenalized : Life, Def Leppard and Beyond Kindle

ADRENALIZED : LIFE, DEF LEPPARD AND BEYOND PDF, EPUB, EBOOK Philip Collen | 256 pages | 19 May 2016 | Transworld Publishers Ltd | 9780552170451 | English | London, United Kingdom Adrenalized : Life, Def Leppard and Beyond PDF Book It amazes me no one will do that! It may not be everyone's cup of tea, but I, for one, found his take on fame and fortune refreshing. Could Phil Collen become your personal coach then? Harry Crisswell July 18, at pm Reply. I thought the guys were gonna hate it, but I showed it to them anyway — and they really liked it. Wow, these are beautiful! That's what happened to Rep. You can get away with a lot less, just by tweaking it here and there. I'm a huge Def Leppard fan. Leading with a foot-tapping bass beat, "Man Enough" has a jangly rhythm track that uses guitars sparingly. There is beauty in all of it. I lost 6 pounds in a week, and I wasn't actually trying to lose weight. They convey genuine strain and regret about a lost love, alongside a serene acoustic guitar. Sammy Helen! I knew that Collen started in a band named girl with Phil Lewis who went on the L. Def Leppard On Through the Night. Still, the band received a good amount of grief for releasing something so polished in a time when alternative bands were beginning to invade the airwaves. I also didn't appreciate that he blames this evil on religion, greed, blah blah blah, all while admitting his profession is part of the problem. -

LN PR Template

KISS & DEF LEPPARD - THE WORLD'S BIGGEST ROCK BANDS SET TO TOUR THIS SUMMER

- Tickets On Sale Starting Friday, March 21 Through Live Nation Mobile App and at LiveNation.com -

LOS ANGELES – March 17, 2014 – This summer two of the world's greatest rock bands, KISS and DEF LEPPARD, are set to deliver a massive tour dedicated to fans who wanna rock and roll all night! These two legendary rock bands spanning two continents announced today that they will launch the tour as KISS celebrates their 40th year in music. The summer's biggest hit-fueled rock tour, promoted exclusively by Live Nation, will storm through 40-plus cities throughout North America, kicking off on Monday, June 23 in West Valley City, UT at the USANA Amphitheater. Tickets go on sale starting on Friday, March 21 through the Live Nation mobile app and at www.livenation.com.

KISS and Def Leppard jointly decided to support our heroes and honor their dedicated fans in the armed services by partnering with numerous military organizations for the tour. Partnerships will include the USO, Hiring Our Heroes, Project Resiliency/The Raven Drum Foundation, Augusta Warrior Project and the Wounded Warrior Project. All military personnel will be given exclusive access to discounted tickets for the tour with their own pre-sale through Military.com/Monster.com while also participating in the tour launch announcement featuring a live streamed Q&A with band members from KISS and Def Leppard in Los Angeles, California at the House Of Blues on Monday, March 17. Military charities will receive a portion of ticket sales with the money raised being split amongst all the non-profit organizations participating with the summer tour.

Def Leppard in 19 Formation: Steve Clark, Phil Collen, Joe Elliott, Rick Savage, and Rick Allen

Def Leppard in 19 formation: Steve Clark, Phil Collen, Joe Elliott, Rick Savage, and Rick Allen (from left)

DEF LEPPARD: THEY FORGED A NEW POP-METAL SOUND WITH MULTIPLATINUM RESULTS. BY ROBERT BURKE WARREN

Missing his bus that autumn day in Sheffield, England, 1977, may have inconvenienced 18-year-old wannabe rocker Joe Elliott, but it set in motion a series of events that would lead to his fronting one of the biggest bands in history: Def Leppard. An undeniable eighties juggernaut still vital today, Def Leppard stand as one of a handful of rock bands to reach the RIAA's "Double Diamond" status, i.e., ten million units of two or more original studio albums sold in the United States. Their club-mates: Van Halen, Led Zeppelin, Pink Floyd, the Eagles, and the Beatles. But while the band's journey includes these and many other stratospheric highs of rock stardom, it also features some unimaginable lows – legendary dues paying, it seems, for all those dreams come true.

Looking back to the murky beginnings, Elliott recalled passing 19-year-old local guitar player Pete Willis on that unplanned walk home from the bus stop. It was, he said, "a sliding-doors moment." Elliott had just taken up guitar and asked if Willis' new metal band, Atomic Mass – which included drummer

Photo captions: Pete Willis, Allen, Elliott, Clark, and Savage (clockwise from bottom), before a gig at Atlanta's Fox Theatre, 1981. Above: Elliott surprised by Union Jack-ed fans, c.

Def Leppard Announce Select Fall 20/20 Vision Tour Dates with Very Special Guests Zz Top

DEF LEPPARD ANNOUNCE SELECT FALL 20/20 VISION TOUR DATES WITH VERY SPECIAL GUESTS ZZ TOP

16-City Tour to Kick Off on September 21st. Tickets On Sale To General Public Starting Friday, February 21 at LiveNation.com

(NEW YORK, NY - February 13, 2020) – Legendary British rock & roll icons, and 2019 Rock & Roll Hall of Fame® inductees, Def Leppard, announce select 20/20 Vision fall tour dates with very special guests ZZ Top. Produced by Live Nation, this new leg of dates will immediately follow the group's massively successful summer stadium tour with Motley Crue, which has already sold 1.1 million tickets. The 20/20 Vision tour will kick off on September 21st at Times Union Center in Albany, NY. Please see full tour routing below.

Tickets go on sale to the general public beginning Friday, February 21st at 10am local time at LiveNation.com. Citi is the official presale credit card of the 20/20 Vision Tour. As such, Citi cardmembers will have access to purchase presale tickets beginning Tuesday, February 18th at 10am local time until Thursday, February 20th at 10pm local time through Citi Entertainment®. For complete presale details visit www.citientertainment.com.

Def Leppard front man Joe Elliott says of the tour, "What a year this is going to be! First, sold out stadiums, then we get to go on tour with the mighty ZZ Top! Having been an admirer of the band for a lifetime it's gonna be a real pleasure to finally do some shows together...maybe some of us will get to go for a spin with Billy in one of those fancy cars ..." "We're excited about hitting the road with Def Leppard this fall; we've been fans of theirs since forever," says the aforementioned Billy F Gibbons.

Gonzo283 Bagladies.Pub

Subscribe to Gonzo Weekly http://eepurl.com/r-VTD Subscribe to Gonzo Daily http://eepurl.com/OvPez Gonzo Facebook Group https://www.facebook.com/groups/287744711294595/ Gonzo Weekly on Twitter https://twitter.com/gonzoweekly Gonzo Multimedia (UK) http://www.gonzomultimedia.co.uk/ Gonzo Multimedia (USA) http://www.gonzomultimedia.com/

Dear friends,

Welcome to another issue of this increasingly peculiar little magazine. On a number of occasions I have recounted the story of how my old friend, Rob Ayling (who was only just a school leaver when I first met him, but is now MD of Gonzo Multimedia, one of the most interesting media companies in the world), asked me to start a record company newsletter. Looking a gift horse in the mouth, as I so often do, I instead mooted the idea of doing a weekly magazine. To my immense pleasure, Rob agreed, and therefore the rod for my own back which you are currently reading, was hammered firmly into place over five years ago.

I always remember where I was on a specific day in February, 1991, and an article in last week's issue brought that memory spiralling back into the front of my conscience. It was Jeremy's account of a gig by Ferocious Dog that did it for me, or rather it was one of the photographs that accompanied the article. Because there, as large as life, was a bloke called Les Carter, whom I first met back in the days when he was more unusually named as 'Fruitbat'. He was half of a duo named Carter the Unstoppable Sex Machine, who were, at the time, the blue-eyed boys of British rock journalism.

I had first heard of them during the summer of 1989, at a notorious rock festival in Cornwall, called the Treworgey Tree Fayre, which has gone down in history as aforementioned Leslie George Carter.