ENAR 2013 Spring Meeting March 10–13

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

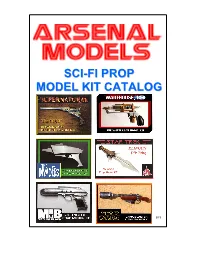

Sci-Fi Prop Model Kit Catalog

SCI-FI PROP MODEL KIT CATALOG 2/13 Welcome to the Arsenal Models sci-fi prop model kit catalog. We specialize in screen-accurate prop replica kits from your favorite science fiction films and TV shows. Prop kits shown are 1:1 scale highly detailed replicas of actual props used in the films or shows. Many are casts of actual screen-used prop weapons. Others are based on the actual weapons used by the prop makers with the proper modifications. All kits are cast in heavy solid white durable resin and come in full color boxes picturing the finished prop. Instructions and any hardware or labels required are also included. Since these are resin garage kits, a little kit building experience is helpful for assembling and finishing the prop, but not necessary. Remember, although the finished props look very authentic, these are only resin prop guns and cannot be made to fire anything. Please look over the entire catalog as items from a particular series can appear throughout. Ordering instructions are on the back page of the catalog. Thanks for looking! Battlestar Galactica Colonial Warrior Blaster This is a replica of the sidearm used by the Colonial Warriors in the classic 70s show. Kit is one piece solid white resin and measures about twelve inches long. Comes in photo box with instructions: $45.00 Farscape Pulse Pistol This kit is the pulse pistol from the hit Sci-fi Channel series Farscape. This is an exact replica of the screen used props from the filming of the show. Includes main body and side scope. -

Table of Contents

PART I. Introduction
  A. Overview
  B. Historical Background
PART II. The Study
  A. Background
  B. Independence
  C. The Scope of the Monitoring
  D. Methodology
    1. Rationale and Definitions of Violence
    2. The Monitoring Process
    3. The Weekly Meetings
    4. Criteria
  E. Operating Premises and Stipulations
PART III. Findings in Broadcast Network Television
  A. Prime Time Series
    1. Programs with Frequent Issues
    2. Programs with Occasional Issues
    3. Interesting Violence Issues in Prime Time Series
    4. Programs that Deal with Violence Well
  B. Made for Television Movies and Mini-Series
    1. Leading Examples of MOWs and Mini-Series that Raised Concerns
    2. Other Titles Raising Concerns about Violence
    3. Issues Raised by Made-for-Television Movies and Mini-Series
  C. Theatrical Motion Pictures on Broadcast Network Television
    1. Theatrical Films that Raise Concerns
    2. Additional Theatrical Films that Raise Concerns
    3. Issues Arising out of Theatrical Films on Television
  D. On-Air Promotions, Previews, Recaps, Teasers and Advertisements
  E. Children’s Television on the Broadcast Networks
PART IV. Findings in Other Television Media
  A. Local Independent Television Programming and Syndication
  B. Public Television
  C. Cable Television
    1. Home Box Office (HBO)
    2. Showtime
    3. The Disney Channel
    4. Nickelodeon
    5. Music Television (MTV)
    6. TBS (The Atlanta Superstation)
    7. The USA Network
    8. Turner Network Television (TNT)
  D. -

Schneider-Kreuznach Advertisement Feature

SCHNEIDER-KREUZNACH ADVERTISEMENT FEATURE

Starring role

Crescenzo Notarile decided to boldly go for Schneider-Kreuznach True-Streak filters when shooting a legendary sci-fi TV series

When a top Hollywood filmmaker calls his filters his “cinematographic brush” that he uses on many high-budget productions, you know they offer something very special. When Crescenzo Notarile landed the dream job of shooting the Star Trek: Discovery TV series, he knew he could rely on his trusty set of Schneider-Kreuznach True-Streak filters to make something that would live long and prosper.

“Like a painter constructs his strokes of paint with the bristles of his brush, I like to think in the same way by constructing the strokes of light via certain filters,” he says.

With an incredible back catalogue of work that includes TV and movie projects like Crazy and Timecop, plus music videos for the likes of Michael Jackson, Pink Floyd and ZZ Top, Notarile has the eye to pick equipment that allows him to put his own creative stamp on his projects.

“I previously used the True-Streak filters on a show I did called CSI: Crime [...]

[...] Carr, and lots of LED lights programmed into a dimmer board with hundreds of channels individually available to fine-tune the look.

“Each set cost hundreds of thousands of dollars, some cost millions, and took many [...]

[...] lenses gives the ultimate in long flares. It’s a look that also works very well on standard lenses, giving an anamorphic-style look. It’s no surprise they were voted as best Lens Accessory in the Pro Moviemaker Gear of the Year Awards.

IMAGE: Notarile on-set using a Schneider-Kreuznach True-Streak filter – a winner in our Gear of the Year Awards -

Juuma Houkan Accele Brid Ace Wo Nerae! Acrobat Mission

3X3 EYES - JUUMA HOUKAN ACCELE BRID ACE WO NERAE! ACROBAT MISSION ACTRAISER HOURAI GAKUEN NO BOUKEN! - TENKOUSEI SCRAMBLE AIM FOR THE ACE! ALCAHEST THE AMAZING SPIDER-MAN - LETHAL FOES ANGELIQUE ARABIAN NIGHTS - SABAKU NO SEIREI-O ASHITA NO JOE CYBERNATOR BAHAMUT LAGOON BALL BULLET GUN BASTARD!! BATTLE SOCCER - FIELD NO HASHA ANCIENT MAGIC - BAZOO! MAHOU SEKAI BING BING! BINGO BISHOUJO SENSHI SAILOR MOON - ANOTHER STORY SAILOR MOON R BISHOUJO SENSHI SAILOR MOON SUPER S - FUWA FUWA PANIC BRANDISH 2 - THE PLANET BUSTER BREATH OF FIRE II - SHIMEI NO KO BS CHRONO TRIGGER - MUSIC LIBRARY CAPTAIN TSUBASA III - KOUTEI NO CHOUSEN CAPTAIN TSUBASA V - HASH NO SHOUGOU CAMPIONE CARAVAN SHOOTING COLLECTION CHAOS SEED - FUUSUI KAIROKI CHOU MAHOU TAIRIKU WOZZ CHRONO TRIGGER CLOCK TOWER CLOCKWERX CRYSTAL BEANS FROM DUNGEON EXPLORER CU-ON-PA SFC CYBER KNIGHT CYBER KNIGHT II - CHIKYUU TEIKOKU NO YABOU CYBORG 009 DAI 3 JI SUPER ROBOT WARS DAI 4 JI SUPER ROBOT WARS DAIKAIJ MONOGATARI DARK HALF DARK LAW - THE MEANING OF DEATH DER LANGRISSER DIGITAL DEVIL STORY 2 - SHIN MEGAMI TENSEI II DONALD DUCK NO MAHOU NO BOUSHI DORAEMON 4 DO RE MI FANTASY - MILON NO DOKIDOKI DAIBOUKEN DOSSUN! GANSEKI BATTLE DR. MARIO DRAGON BALL Z - HYPER DIMENSION DRAGON BALL Z - CHOU SAIYA DENSETSU DRAGON BALL Z - SUPER BUTOUDEN DRAGON BALL Z - SUPER BUTOUDEN 3 DRAGON BALL Z - SUPER GOKUDEN - TOTSUGEKI HEN DRAGON BALL Z - SUPER GOKUDEN - KAKUSEI HEN DRAGON BALL Z - SUPER SAIYA DENSETSU DRAGON QUEST I AND II DRAGON QUEST III - SOSHITE DENSETU E... DRAGON QUEST V - TENKUU NO HANAYOME -

Tobacco Product Placement and Its Reporting to the Federal Trade Commission

Tobacco product placement and its reporting to the Federal Trade Commission

Jonathan R. Polansky, Onbeyond LLC, Fairfax, California
Stanton A. Glantz, PhD, University of California, San Francisco

This publication is available at www.escholarship.org/uc/item/7kd981j3

July 2016

Summary of findings

The historical record strongly suggests that asking tobacco companies to report their product placement activities and expenditures did not capture all activity in this area. This report compares expenditures for product placement described in internal documents from American Tobacco, Brown & Williamson, Liggett & Myers, Philip Morris and RJ Reynolds tobacco companies with reports the companies were required to submit to the US Federal Trade Commission in the “endorsements and testimonials” category of cigarette promotion and advertising. During that time, in their internal documents, American Tobacco, Brown & Williamson, Philip Morris and RJ Reynolds, or their contracted product placement agents, listed 750 motion pictures as engaged for product placement, 600 of which were released widely to theaters (Appendix). Substantial discrepancies exist between product placement spending described in the internal industry records and the spending reported to the Federal Trade Commission in the “endorsements and testimonials” category. Nearly half (47 percent; $2.3 million of about $5 million) of spending for on-screen product placement -

TIMECOP-AE Towards Innovative Methods for Combustion Prediction in Aero-Engines

TIMECOP-AE Towards Innovative MEthods for COmbustion Prediction in Aero-Engines Author : Dr. Thomas LEDERLIN Combustion Engineer Turbomeca S.A. Methods Department BP17 64511 Bordes Cedex – FRANCE Email : [email protected] Tel : (33) 5 59 12 50 65 Introduction The aim of the FP6 TIMECOP-AE project (2006-2010) was to improve the necessary combustion prediction methods that enable the development of practical advanced combustion systems for future engines, with reduced emission levels and fuel consumption. Predictive tools are required to be able to reduce NOx emissions, to decrease the development time and costs of new combustion systems and to improve the operability of lean-burn combustion systems. Most promising approaches to satisfy future emission levels regulations are based on lean combustion technology. However, lean combustion compromises combustor operability, including ignition, altitude re-light, pull-away, weak extinction performance and thermo-acoustic instability behaviour. Therefore it is of prime importance to evaluate the behaviour of the flame during these transient phases in the design stage and modelling tools are required. Without these tools the development of advanced combustion systems relies on many costly and time consuming rig tests. The high-fidelity simulations proposed in TIMECOP-AE are therefore a way to increase our competitiveness. During the last years big advances have been made in the field of reactive Large Eddy Simulation (LES) with gaseous fuels. This approach gives promising results with respect to turbulence modelling and can be used to model unsteady processes. Within the framework of TIMECOP-AE, the LES tools have gained a new critical capability: modelling of the liquid fuel combustion process for conventional and low-emission combustors, over a wide range of operating conditions. -

HAVERFORD-Spring-Summer-2019.Pdf

The Magazine of Haverford College
SPRING/SUMMER 2019

DANIEL DAE KIM ’90: Bringing Change to Hollywood
Thesis Statement: The singular experience of the senior capstone project
Pipeline to the MLB: Fords make their way into the front office
Tobias Iaconis ’93: Long road to the big screen

Editor: Eils Lotozo
Class News Editor: Mara Miller Johnson ’10
Giving Back Editor: Pat Laws
Photography Editor: Patrick Montero
Graphic Design: Tracey Diehl, Eye D Communications
Director of News and Social Media: Rebecca Raber
Assistant Vice President for College Communications: Chris Mills ’82
Vice President for Institutional Advancement: Ann West Figueredo ’84
Contributing Writers: Karen Brooks, Charles Curtis ’04, Brian Glaser, Mara Miller Johnson ’10, Ryan Jones, Lini S. Kadaba, Steve McGovern, Natalie Pompilio, Anne Stein
College Communications editorial assistants: Michael Weber ’19, Allison Wise ’20
Contributing Photographers: Noel Besuzzi, Holden Blanco ’17, Stephanie Alvarez Ewens, Dan Z. Johnson
College Communications photography team: Alexandra Iglesia ’21, Ruiming Li ’22, Cole Sansom ’19, Lily Xu ’19

DEPARTMENTS
Inbox
View From Founders
In Season
Main Lines
Academix
Ford Games
Mixed Media
Roads Taken and Not Taken
Giving Back/Notes From the Alumni Association
Class News/Obituaries
Then and Now

On the cover: Daniel Dae Kim ’90. Photo by Mendy Greenwald. Back cover photo: The nature trail. Photo by Patrick Montero. Haverford magazine is printed on recycled paper that contains 30% post-consumer waste fiber.

FEATURES
TELL US MORE: Tobias Iaconis ’93: On the Big Screen
Our Thesis Statement: Haverford is rare among its peers for not only offering, but requiring, a senior thesis project for any student majoring at the College. -

2005 Minigame Multicart 32 in 1 Game Cartridge 3-D Tic-Tac-Toe Acid Drop Actionauts Activision Decathlon, the Adventure A

2005 Minigame Multicart 32 in 1 Game Cartridge 3-D Tic-Tac-Toe Acid Drop Actionauts Activision Decathlon, The Adventure Adventures of TRON Air Raid Air Raiders Airlock Air-Sea Battle Alfred Challenge (France) (Unl) Alien Alien Greed Alien Greed 2 Alien Greed 3 Allia Quest (France) (Unl) Alligator People, The Alpha Beam with Ernie Amidar Aquaventure Armor Ambush Artillery Duel AStar Asterix Asteroid Fire Asteroids Astroblast Astrowar Atari Video Cube A-Team, The Atlantis Atlantis II Atom Smasher A-VCS-tec Challenge AVGN K.O. Boxing Bachelor Party Bachelorette Party Backfire Backgammon Bank Heist Barnstorming Base Attack Basic Math BASIC Programming Basketball Battlezone Beamrider Beany Bopper Beat 'Em & Eat 'Em Bee-Ball Berenstain Bears Bermuda Triangle Berzerk Big Bird's Egg Catch Bionic Breakthrough Blackjack BLiP Football Bloody Human Freeway Blueprint BMX Air Master Bobby Is Going Home Boggle Boing! Boulder Dash Bowling Boxing Brain Games Breakout Bridge Buck Rogers - Planet of Zoom Bugs Bugs Bunny Bump 'n' Jump Bumper Bash BurgerTime Burning Desire (Australia) Cabbage Patch Kids - Adventures in the Park Cakewalk California Games Canyon Bomber Carnival Casino Cat Trax Cathouse Blues Cave In Centipede Challenge Challenge of.... Nexar, The Championship Soccer Chase the Chuckwagon Checkers Cheese China Syndrome Chopper Command Chuck Norris Superkicks Circus Atari Climber 5 Coco Nuts Codebreaker Colony 7 Combat Combat Two Commando Commando Raid Communist Mutants from Space CompuMate Computer Chess Condor Attack Confrontation Congo Bongo -

The Canadianization of the American Media Landscape Eric Weeks

Document generated on 09/26/2021 3:27 a.m.

International Journal of Canadian Studies / Revue internationale d’études canadiennes

Where is There? The Canadianization of the American Media Landscape
Eric Weeks

Culture – Natures in Canada / Culture – natures au Canada
Number 39-40, 2009
URI: https://id.erudit.org/iderudit/040824ar
DOI: https://doi.org/10.7202/040824ar

Article abstract
An increasing number of American film and television productions are filmed in Canada. This paper argues that while the Canadianization of the American media landscape may make financial sense, the trend actually causes harm to the cultural landscape of both countries. This study examines reasons contributing to the tendency to move production to Canada and how recent events have impacted Hollywood's northern migration. Further, "authentic" representations of place on the big and small screens are explored, as are the effects that economic runaway productions (those films and television programs that relocate production due to lower costs found elsewhere) have on a national cultural identity and audience reception, American and Canadian alike.

Publisher(s): Conseil international d'études canadiennes
ISSN 1180-3991 (print), 1923-5291 (digital)

Cite this article: Weeks, E. (2009). Where is There? The Canadianization of the American Media Landscape. International Journal of Canadian Studies / Revue internationale d’études canadiennes, (39-40), 83–107. https://doi.org/10.7202/040824ar

All rights reserved © Conseil international d'études canadiennes, 2009. This document is protected by copyright law. Use of the services of Érudit (including reproduction) is subject to its terms and conditions, which can be viewed online. https://apropos.erudit.org/en/users/policy-on-use/ This article is disseminated and preserved by Érudit. -

Dark Horse Comics

Dark Horse Comics By Steve Duin Born of a maxed-out credit card and a passion for creator rights, Dark Horse Comics of Milwaukie, Oregon, has transformed the creative landscape in the Pacific Northwest, turning the Portland area into the country's largest community of cartoonists and graphic artists outside of New York. Dark Horse was founded in 1986 by Mike Richardson, who had opened a small chain of comic-book stores in the early 1980s on the strength of that credit card. Frustrated that the market had little to offer other than the usual super-hero fantasies, Richardson and Randy Stradley decided to publish comics aimed at more mature readers and—in a novel break with industry traditions—offer the creators full ownership of the material. Paul Chadwick's Concrete was the company's first breakthrough hit, but it was the arrival of writer/artist Frank Miller in 1990 that elevated Dark Horse from a minor publishing house to a major force in the industry. After redesigning Batman in The Dark Knight Returns, for DC Comics (one of the seminal events in modern comics history), Miller wanted to take future projects to a publisher where he would have greater creative freedom and a larger share of the profits. He eventually brought Give Me Liberty and the commercial blockbuster Sin City to Dark Horse. The Oregon-based company has, by and large, remained the industry's number three publisher, behind Marvel and DC, ever since. On the strength of Richardson's energy and instincts, Dark Horse pioneered comics based on licensed projects—including Star Wars, Buffy the Vampire Slayer, Aliens, and Terminator—and has dramatically popularized Japanese manga. -

ROSS BERRYMAN, ACS ASC Director of Photography (Partial List)

ROSS BERRYMAN, ACS ASC Director Of Photography (partial list) http://www.rossberrymandp.com Television/Streaming: FOR ALL MANKIND (Season 2, Eps. 203, 204, 207, 208) Exec Producers: Ron Moore, Ben Nedivi, Maril Davis, Apple TV Matt Wolpert Alternating DP Co-Exec Producers: Steve Oster, David Weddle, Nichole Beattie Director: Andrew Stanton MAGNUM P.I. (Ep.203) Exec Producers: Peter Lenkov, Bryan Spicer CBS Director: Carlos Bernard FOR ALL MANKIND (Season 1, Eps. 103, 104, 107, 108) Exec Producers: Ron Moore, Ben Nedivi, Maril Davis, Apple TV Matt Wolpert Alternating DP Co-Exec Producers: Steve Oster, David Weddle, Nichole Beattie Director: Allen Coulter, Meera Menon MR. MERCEDES (Season 2, Eps. 205: “Andale,” 206: “Proximity”) Producers: David E. Kelley, Jack Bender, Stephen King Audience Network Directors: Peter Weller (#205), Laura Innis (#206) GONE (Season 1) Producers: Kim Moses, Matt Lopez, Barry O’Brien NBC International UPM: David Brightbill Directors: Thomas Carter, Jan Eliasberg, Brad Turner GRIMM (Seasons 5 & 6) Producers: Sean Hayes, Todd Milliner, NBC David Greenwalt, Jim Kouf Directors: various NASHVILLE (Season 1) Producers: Dee Johnson, Loucas George ABC Callie Khouri, R.J. Cutler, Steve Buchanan Directors: various AMISH GRACE (MOW) Director: Gregg Champion Lifetime Producers: Larry Thompson, Kyle Clark DOLLHOUSE (Season 1) Producers: Joss Whedon Fox Television Directors: various UGLY BETTY (Seasons 1, 2) Producers: Silvia Horta, Ben Silverman, ABC TV/Touchstone Teri Weinberg, Alice West, Marco Pennette Directors: various THE POSEIDON ADVENTURE (MOW) Director: John Putch NBC/Hallmark Producers: Larry Levinson, Mary Church ANGEL (Seasons 3, 4 & 5) Producers: Joss Whedon, David Greenwalt, Fox Television David Fury, Jeffrey Bell, Kelly Manners Directors: various EARLY EDITION (Seasons 3, 4 & 5) Producers: Jeff Melvoin, Gary Nelson CBS/TriStar Directors: various 1 4929 Wilshire Blvd., Ste. -

Melissa Stubbs Stunt Coord

MELISSA STUBBS STUNT COORD. / PERFORMER FIGHT CHOREOGRAPHY HEIGHT: 5’6 SAG 00456628 DGA MEMBER WEIGHT: 117 lbs. UBCP/AFTRA HAIR: BLONDE (or whatever color you want) JONIES 818 980-2123 EYES: GREEN STUNT COORDINATOR CREDITS *THE LAST SAMURAI (FEATURE) WARNER BROS. ASSISTANT STUNT COORD ALSO 2ND UNIT STUNT COORDINATOR DIR. EDWARD ZWICK *X-MEN II (FEATURE) 20TH CENTURY FOX DIR. BRYAN SINGER DIR.2ND U. BRIAN SMRZ GARY JENSON MAIN UNIT MELISSA STUBBS 2ND UNIT NEW YORK MINUTE WARNER BROS. DENISE DI NOVI, CHRIS SACANI (Also 2ND Unit Director) ALLISON GREENSPAN, DIR. DENNIE GORDON BALLISTIC: ECKS Vs. SEVER (FEATURE) WARNER BROS. PROD: ANDREW SUGARMAN PROD.BARBARA KELLY DIR: KAOS DOUBLE JEOPARDY (FEATURE) PARAMOUNT GLENN RANDALL 2ND. UNIT DIRECTOR ALONG CAME A SPIDER (FEATURE) PARAMOUNT DIR. LEI TAMAHOURIE MISSION TO MARS (DISNEY) DIR. BRIAN DE PALMA PRODUCER: SAM MERCER WITH JEFF HABBERSTAD THE LAST STOP (FEATURE) New City Productions PRODUCER. COLLEEN NYSTEDT ROMEO MUST DIE (FEATURE) WARNER BROS. CONRAD PALMISANO 2ND UNIT DIRECTOR SPOOKY HOUSE (FEATURE) CO-COORD WITH JACOB RUPP P.M. M. MCCARTHY DARK ANGEL (SERIES) FOX PROD: JAMES CAMERON TIME COP (FEATURE) TIMECOP INC./LARGO ENT. MATCH COORD. WITH GLENN RANDALL DIR. PETER HYMES SUSPICIOUS RIVER (FEATURE) DIR: LYNNE STOPKEWICH P.M. LOUISE VALGARSON CHAOS 2004 CONVERGENCE” - (FEATURE) RAMPAGE ENT. P.M. DIANN PATRICK O’CONNER WRONGFULLY ACCUSED (FEATURE) COVER DAYS P.M. WARREN CARR THE COLOUR OF COURAGE (MOW) COVER DAYS P.M. RON MCLOUD POLICE ACADEMY (TV SERIES) FOX FOR WARNER BROS. P.M. JIM MARGELLES KISS THE GIRLS (FEATURE) TRISTAR WITH VICTOR PAUL TRICKS (FEATURE) BRAVING ALASKA (SHOWTIME) CBS MICHAEL JAFFIIE PROD.