Music Playlist Recommender System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Legitimizing Pay to Play: Marketizing Radio Content Through a Responsive Auction Mechanism

LEGITIMIZING PAY TO PLAY: MARKETIZING RADIO CONTENT THROUGH A RESPONSIVE AUCTION MECHANISM

Alon Rotem*

I. INTRODUCTION, 130
II. RADIO REGULATION BACKGROUND / HISTORY, 131
    A. Government Enforced Public Interest Standards, 131
    B. Marketization of the Public Interest Doctrine, 133
    C. The Impact of the 1996 Telecommunications Act on License Renewals, 134
III. PAYOLA RULES, 135
    A. Payola Rules Impact on the Recording Industry, 136
    B. A Brief History of Payola Transgressions, 137
    C. Fallout from Recent Payola Prosecution, 139
    D. Modern Payola Rules Ambiguity, 139
IV. IMPACT OF TECHNOLOGY AND AUCTIONS ON BROADCAST SCARCITY, 140
    A. The Rise and Evolution of Technology-Driven Auctions, 141
    B. Applying the Auction Mechanism to Radio Content Programming, 141

* J.D. Candidate 2007, University of California, Berkeley, Boalt Hall School of Law. B.S. Managerial Economics, 2001, University of California, Davis. I would like to thank my wife, Nicole, parents, Doron and Batsheva, and brothers, Tommy and Jonathan for their love, support, and encouragement. Additionally, I would like to thank Professor Howard Shelanski for his wisdom and guidance in the "Telecommunications Law & Policy" class for which this comment was written. Special thanks to Paul Cohune, who has generously devoted his time to editing this and virtually every paper I have written in the last 10 years, to Zach Katz for sharing his profound knowledge of the music industry, and to my future colleagues at Ropes & Gray, LLP. I am also very grateful for the assistance of the editors of the UCLA Entertainment Law Review. Mr. Rotem welcomes comments at alon.rotem@gmail.com.

Jeff Smith Head of Music, BBC Radio 2 and 6 Music Media Masters – August 16, 2018 Listen to the Podcast Online, Visit

Jeff Smith, Head of Music, BBC Radio 2 and 6 Music
Media Masters, August 16, 2018
Listen to the podcast online, visit www.mediamasters.fm

Welcome to Media Masters, a series of one to one interviews with people at the top of the media game. Today, I’m here in the studios of BBC 6 Music and joined by Jeff Smith, the man who has chosen the tracks that we’ve been listening to on the radio for years. Now head of music for BBC Radio 2 and BBC Radio 6 Music, Jeff spent most of his career in music. Previously he was head of music for Radio 1 in the late 90s, and has since worked at Capital FM and Napster. In his current role, he is tasked with shaping music policy for two of the BBC’s most popular radio stations, as the technology of how we listen to music is transforming. Jeff, thank you for joining me.

Pleasure.

Jeff, Radio 2 has a phenomenal 15 million listeners. How do you ensure that the music selection appeals to such a vast audience?

It’s a challenge, obviously, to keep that appeal across the board with those listeners, but it appears to be working. As you say, we’re attracting 15.4 million listeners every week, and I think it’s because I try to keep a balance of the best of the best new music, with classic tracks from a whole range of eras, way back to the 60s and 70s. So I think it’s that challenge of just making that mix work and making it work in terms of daytime, and not only just keeping a kind of core audience happy, but appealing to a new audience who would find that exciting and fun to listen to.

Dade County Radio December 2012 Playlist

# No Title Artist Length Count
1 Miami Bass FEST III CHICO 1:18 228
2 FreddieMcGregor_Da1Drop 0:07 126
3 dadecountyradio_kendrick DROP 0:05 121
4 DROP 0:20 104
5 daoneradiodrop 0:22 90
6 Black Jesus @thegame 4:52 52
7 In The End (new 2013) @MoneyRod305 ft @LeoBre 3:53 49
8 M.I.A. Feat. @Wale (Dirty) @1Omarion 3:57 48
9 Up (Remix) K Kutta, LoveRance, 50 Cent 2:43 47
10 Drop-DadeCounty Patrice 0:10 47
11 My Hood (Feat Mannie Fresh) B.G. 3:58 46
12 SCOOBY DOO JT MONEY 4:32 45
13 Stay High _Official Music Video_ Fweago Squad (Rob Flamez) Feat Stikkmann 3:58 45
14 They Got Us (Prod. By Big K.R.I.T.) Big K.R.I.T. 3:27 44
15 You Da One Rihanna 3:22 44
16 Love Me Or Not (Radio Version) @MoneyRod305 Ft @Casely 4:04 44
17 Haters Ball Greeezy 3:14 44
18 Playa A** S*** J.T. Money 4:35 44
19 Ni**as In Paris Jay-Z & Kanye West 3:39 43
20 Myself Bad Guy 3:26 43
21 Jook with me (dirty) Ballgreezy 3:46 42
22 Ro_&_Pat_Black_-_Soldier 05_Ridah_Redd_feat._Z 3:37 42
23 Go @JimmyDade 3:49 42
24 Keepa @Fellaonyac 4:43 41
25 Gunplay-Pop Dat Freestyle Gunplay 1:47 41
26 I Need That Nipsey Hussle ft Dom Kennedy 2:47 40
27 Harsh Styles P 4:18 40
28 American Dreamin' Jay-Z 4:48 40
29 Tears Of Joy (Ft.

Peter Seidel's Milkboy Art Show

Friends’ Central School, FOCUS, Volume XXXVI Issue III
1101 City Avenue, Wynnewood, Pennsylvania 19096
November 2010 Edition

In This Issue....
Page 2: Q/A with Kristen O’Dore
Page 3: Powerhouse 2010 Review
Page 4: Senior Sibling Preview
Page 6: Boys’ and Girls’ Basketball Preview
Page 6: Phoenix in the Phast Lane

Peter Seidel’s Milkboy Art Show
By Alex Flick ‘12

As students, we are often so caught up with our own lives that we forget our teachers have their own life goals, separate from our education. But as many of us know, Mr. Seidel, our creative art teacher, is an accomplished artist as well. His recent exhibition, at Milkboy Coffee on Lancaster Ave. in Ardmore, has attracted the eyes of many visitors, all very interested in his work.

This is Mr. Seidel’s second show at Milk Boy. His first show, during April of 2008, was so popular that the owner asked him back. His show featured work done [...] the visual imagery of light when it’s seen through colored plastic or water. He’s been interested in this subject for almost 15 years. Mr. Seidel has received positive feedback from visitors, and of course he says he feels great about it. Although he also said that he would be happy with any reaction, positive or negative.

In the future, Mr. Seidel hopes to have more exhibits. But he does not know where or when these will be. However, I hope that when they do happen, we manage to take time out of our schedule to support his work.

8123 Songs, 21 Days, 63.83 GB

Page 1 of 247
Music: 8123 songs, 21 days, 63.83 GB

Name | Artist
The A Team | Ed Sheeran
A-List (Radio Edit) XMIXR | Sisqo feat. Waka Flocka Flame
A.D.I.D.A.S. (Clean Edit) | Killer Mike ft Big Boi
Aaroma (Bonus Version) | Pru
About A Girl | The Academy Is...
About The Money (Radio Edit) XMIXR | T.I. feat. Young Thug
About The Money (Remix) (Radio Edit) XMIXR | T.I. feat. Young Thug, Lil Wayne & Jeezy
About Us [Pop Edit] | Brooke Hogan ft. Paul Wall
Absolute Zero (Radio Edit) XMIXR | Stone Sour
Absolutely (Story Of A Girl) | Ninedays
Absolution Calling (Radio Edit) XMIXR | Incubus
Acapella | Karmin
Acapella | Kelis
Acapella (Radio Edit) XMIXR | Karmin
Accidentally in Love | Counting Crows
According To You (Top 40 Edit) | Orianthi
Act Right (Promo Only Clean Edit) | Yo Gotti Feat. Young Jeezy & YG
Act Right (Radio Edit) XMIXR | Yo Gotti ft Jeezy & YG
Actin Crazy (Radio Edit) XMIXR | Action Bronson
Actin' Up (Clean) | Wale & Meek Mill f./French Montana
Actin' Up (Radio Edit) XMIXR | Wale & Meek Mill ft French Montana
Action Man | Hafdís Huld
Addicted | Ace Young
Addicted | Enrique Iglsias
Addicted | Saving abel
Addicted | Simple Plan
Addicted To Bass | Puretone
Addicted To Pain (Radio Edit) XMIXR | Alter Bridge
Addicted To You (Radio Edit) XMIXR | Avicii
Addiction | Ryan Leslie Feat. Cassie & Fabolous
Addresses (Radio Edit) XMIXR | T.I.
Adore You (Radio Edit) XMIXR | Miley Cyrus
Adorn | Miguel
Adorn | Miguel
Adorn (Radio Edit) XMIXR | Miguel
Adorn (Remix) | Miguel f./Wiz Khalifa
Adorn (Remix) (Radio Edit) XMIXR | Miguel ft Wiz Khalifa
Adrenaline (Radio Edit) XMIXR | Shinedown
Adrienne | Calling, The
Adult Swim (Radio Edit) XMIXR | DJ Spinking feat.

Hip Hop As Cultural Capital: Remixing Bourdieu's Theory to Affirm Cultural Wealth

Language, Literature, and Interdisciplinary Studies (LLIDS)
ISSN: 2547-0044
http://ellids.com/archives/2019/03/2.3-Turner.pdf
CC Attribution-No Derivatives 4.0 International License
http://ellids.com/

Hip Hop as Cultural Capital: Remixing Bourdieu's Theory to Affirm Cultural Wealth
Milanika Turner

Hierarchical societies reproduce themselves through cultural resources that generate social value. The values attached to these social and cultural resources are determined from the top social strata and then disseminated throughout society via mediums like education. Pitched as a supplement to mainstream pedagogy, hip-hop based education (HHBE) uses rap songs and lyrics as curricular resources to teach a variety of disciplines. HHBE has been inserted into teaching practice since the 1990s to enhance English language arts analyses (Hall 343; Morrell and Duncan-Andrade 89) in addition to providing context and relevancy for humanities and social science curricula (Stovall 587). However, the pedagogical potential of HHBE has thus far used hip hop lyrics as an accessory to reinforce the transmission of dominant social values through education instead of utilizing the inherent value of hip hop as its own form of social and cultural capital. Applying Yosso’s conceptualization of community cultural wealth to rap music provides evidence of the social value of hip hop for education and broader society even after decades of negative characterizations of the genre.

Despite its popularity in the United States of America, hip hop culture is misunderstood by many. The popular discourse on rap music is unjustly negative. Throughout history black popular music has been blamed for the propagation of a number of social ills including the deterioration of families, urban decline, and juvenile delinquency.

Playlists from the Matrix — Combining Audio and Metadata in Semantic Embeddings

PLAYLISTS FROM THE MATRIX — COMBINING AUDIO AND METADATA IN SEMANTIC EMBEDDINGS

Kyrill Pugatschewski (1, 2), Thomas Köllmer (1), Anna M. Kruspe (1)
1 Fraunhofer IDMT, Ilmenau, Germany
2 University of Saarland, Saarbrücken, Germany

ABSTRACT

We present a hybrid approach to playlist generation which combines audio features and metadata. Three different matrix factorization models have been implemented to learn embeddings for audio, tags and playlists as well as factors shared between tags and playlists. For training data, we crawled Spotify for playlists created by tastemakers, assuming that popular users create collections with high track cohesion. Playlists generated using the three different models are presented and discussed.

1. INTRODUCTION

The overabundance of digital music makes manual compilation of good playlists a difficult process.

2. DATASET

2.1 Crawling

The Spotify Web API has been chosen as source of playlist information. We focused on playlists created by tastemakers, popular users such as labels and radio stations, assuming that their track listings have higher cohesiveness in comparison to user collections of their favorite songs. Extracted data includes 30-second preview clips, genres and high-level audio features such as danceability and instrumentalness.

In total, we crawled 11,031 playlists corresponding to 4,302,062 distinct tracks. For 1,147 playlists, we have preview clips for their 54,745 distinct tracks. 165 playlists are curated by tastemakers, corresponding to 6,605 tracks.

2.2 Preprocessing

To use the high-level audio features within our model, we

United States District Court Eastern District Of

Case 2:13-cv-05463-KDE-SS Document 1 Filed 08/16/13 Page 1 of 118

UNITED STATES DISTRICT COURT
EASTERN DISTRICT OF LOUISIANA

PAUL BATISTE D/B/A ARTANG PUBLISHING LLC, Plaintiff,

VERSUS

FAHEEM RASHEED NAJM, P/K/A T-PAIN, KHALED BIN ABDUL KHALED, P/K/A DJ KHALED, WILLIAM ROBERTS II, P/K/A RICK ROSS, ANTOINE MCCOLISTER P/K/A ACE HOOD, 4 BLUNTS LIT AT ONCE PUBLISHING, ATLANTIC RECORDING CORPORATION, BYEFALL MUSIC LLC, BYEFALL PRODUCTIONS, INC., CAPITOL RECORDS LLC, CASH MONEY RECORDS, INC., EMI APRIL MUSIC, INC., EMI BLACKWOOD MUSIC, INC., FIRST-N-GOLD PUBLISHING, INC., FUELED BY RAMEN, LLC, NAPPY BOY, LLC, NAPPY BOY ENTERPRISES, LLC, NAPPY BOY PRODUCTIONS, LLC, NAPPY BOY PUBLISHING, LLC, NASTY BEAT MAKERS PRODUCTIONS, INC., PHASE ONE NETWORK, INC., PITBULL’S LEGACY PUBLISHING, RCA RECORDS, RCA/JIVE LABEL GROUP, SONGS OF UNIVERSAL, INC., SONY MUSIC ENTERTAINMENT DIGITAL LLC, SONY/ATV MUSIC PUBLISHING, LLC, SONY/ATV SONGS, LLC, SONY/ATV TUNES, LLC, THE ISLAND DEF JAM MUSIC GROUP, TRAC-N-FIELD ENTERTAINMENT, LLC, UMG RECORDINGS, INC., UNIVERSAL MUSIC – MGB SONGS, UNIVERSAL MUSIC – Z TUNES LLC, UNIVERSAL MUSIC CORPORATION, UNIVERSAL MUSIC PUBLISHING, INC., UNIVERSAL-POLYGRAM INTERNATIONAL PUBLISHING, INC., WARNER-TAMERLANE PUBLISHING CORP., WB MUSIC CORP., and ZOMBA RECORDING LLC, Defendants.

CIVIL ACTION NO.
SECTION: “ ”
JUDGE
MAGISTRATE

COMPLAINT

Plaintiff Paul Batiste, doing business as Artang Publishing, LLC, by and through his attorneys Koeppel Traylor LLC, alleges and complains as follows:

INTRODUCTION

1. This is an action for copyright infringement.

TELLIN' STORIES: the Art of Building & Maintaining Artist Legacies

TELLIN’ STORIES: The Art of Building & Maintaining Artist Legacies

Introduction

“A legacy has to be proactive and reactive at the same time and their greatest successes lie in simultaneously looking back to guide the way forward...”

Legacies in music are a life’s work – constantly evolving and surprising audiences.

It does not follow, however, that just because you have built a legacy in your career that it is set in stone forever. Legacies are ongoing work and they have to be worked at, refined and maintained. Existing audiences have to be held onto and new audiences have to be continually brought on board.

Equally, it does not follow that everything an artist and their team does will add to or expand that legacy. Sometimes it can have no impact and sometimes it can have a detrimental impact. It is a high-wire act and a bad decision can unspool a lifetime of work, an image becomes frozen or the artists (and their art) are painted into a corner.

When an artist passes away, that work they achieved in their lifetime has to be carried on by the artist’s estate. Increasingly they have to be worked and developed just like current living artists. Streaming and social media have made that always-on approach more straightforward, but every step has to be carefully calculated and remain true to the original vision of that artist.

Contents
02 Introduction
04 Filing systems: the art of archive management
12 Inventory

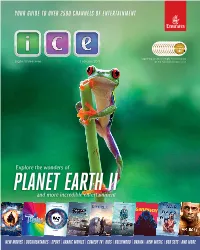

Your Guide to Over 2500 Channels of Entertainment

YOUR GUIDE TO OVER 2500 CHANNELS OF ENTERTAINMENT

Digital Widescreen, February 2017
Voted World’s Best Inflight Entertainment for the 12th consecutive year!

Explore the wonders of PLANET EARTH II and more incredible entertainment

NEW MOVIES | DOCUMENTARIES | SPORT | ARABIC MOVIES | COMEDY TV | KIDS | BOLLYWOOD | DRAMA | NEW MUSIC | BOX SETS | AND MORE

ENTERTAINMENT
An extraordinary experience... Wherever you’re going, whatever your mood, you’ll find over 2500 channels of the world’s best inflight entertainment to explore on today’s flight.

496 movies: THE LATEST MOVIES you can’t miss, from page 16

Information… Track the progress of your flight, keep up with news and other useful features.
Communication… Stay connected with in-seat* phone, SMS and email, plus Wi-Fi and mobile roaming on select flights.
Entertainment… Experience Emirates’ award-winning selection of movies, TV, music and games.

STAY CONNECTED
Connect to the OnAir Wi-Fi network on all A380s and most Boeing 777s

...AT YOUR FINGERTIPS
Move around using the games controller pad on your handset and select using the green game button.
Choose a channel: go straight to your chosen programme by typing the channel number into your handset, or use the onscreen channel entry pad.
1 Swipe left and right like a tablet. Tap the arrows onscreen to scroll.
2 Create and access your own playlist using Favourites.
3 Search for movies, TV shows, music and system features.
4 Tap Settings to adjust volume and brightness.

Português, Español, Deutsch, 日本語, Français, Русский, Polski, 中文
Many movies are available in up to eight languages.

Classical Revival in 2020

THE CLASSICAL REVIVAL IN 2020
A report by BPI, Deezer and the Royal Philharmonic Orchestra

INTRODUCTION

Deezer, BPI and the Royal Philharmonic (RPO) present their first ever collaborative report which brings together streaming data with consumer opinion research. Using Deezer’s streaming data, the report highlights the demographic profile of Classical listeners across a range of countries and whether that has changed during lockdown. The market research data (provided by the RPO) reports on consumer attitudes to music during the period of home isolation. The RPO has seen online and social media audience engagement soar during lockdown and it has premiered several online concerts. All the organisations involved in this report share a commitment to broadening the range and appeal of Classical music to a broader and younger audience.

Please address any questions to: Chris Green, Hannah Wright, or Guy Bellamy (RPO).

KEY FINDINGS

• In a one-year period (April 2019-2020), there was a 17% increase of Classical listeners on Deezer worldwide.
• In the last year, almost a third (31%) of Deezer’s Classical listeners in the UK were under 35 years old, a much younger age profile than those who typically purchase Classical music.
• RPO’s research found that under 35s were the most likely age group to have listened to orchestral music during lockdown (59%, compared to a national average of 51%).
• There were a greater number of female listeners in the UK listening to Deezer’s Classical playlists during lockdown.
• Classical fans preferred mood-based music during lockdown.

2011-01-08A-Billboard-Page-0060

AIRPLAY SALES DATA MONITORED BY COMPILED BY JAN niclscn nickel) 8 Billboard BDS SoundScan MAINSTREAM 1281B HIP -NOP° TITLE IMPRINT / DISTRIBUTING LABEL #1 MICHAEL JACKSON 1 2 ASTON MARTIN MUSIC WHAT'S 1 1 #1 MY NAME? YOU ARE 2WKS MICHAEL MJJ w13 1 10 1 1 /EPIC 66773/SONY MUSIC O S 15 .1, WRIBYMEBMFBINDEBMBEMMdYS W RIHANNA FEAT. DRAKE (SRP /DEF JAM/IOJMG) CHARLIE WILSON (P MUSIC /JIVE/JLG) JAMIE FOXX NO HANDS ONLY GIRL (IN THE WORLD) WHEN A WOMAN LOVES BEST NIGHT OF MY LIFE J 54860 /RMG WAIL FLOCKA FLAME (1017 BRICK SOUAL/ASOJJ4 WARNER BROS) RIHANNA (SRP/DEF JAM/IDJMG) R. KELLY (JIVE /JLG) EMINEM Ell © 8 28 GG ...RIGHT THRU ME NO HANDS CANT BE FRIENDS MîOYBIY YAR'SMOY /PfTERWOHWIE15WPE 014111Á6A MDO MBW (YOUNG NUGYAA91 MOEV /UFW SAL MOTO714IJMRG) WAKA FLOCEAFLAME (1017 BREI< SOIWYASYWNVWARNER BROS.) TREY SONGS (SONGBOOK/ATLANTIC) NICKI MINAJ MIN ..WHAT'S MY NAME? BLACK AND YELLOW SOMETIMES I CRY MMRBLI1'KING MONEY/CASHMOEYAEAEMAL NEIMDAN 015021 AARG 4 I I 4 RIHANNA FEAT. DRAKE (SRP /DEF JAM /IDJMG) WIZ KHALIFA (ROSTRUM /ATLANTIC) ERIC BENET (REPRISE /WARNER BROS.) KEYSHIA COLE 5 NEW CANT BE FRIENDS BOTTOMS UP I'M DOING ME CALLING ALL HEARTS GEFFEN 015108 /IGA ... TREY SONGS (SONGBOOK/ATLANTIC) TREY SONGS FEAT. NICKI MINAJ (SONGBOOK/ATLANTIC) FANTASIA (S /19/J /RMG) RIHANNA MAKE A MOVIE RIGHT ABOVE IT I 6 SHARE MY LIFE LOUD SRP /DEF JAM 014927/IDJMG 6 TWISTA FEAT. CHRIS BROWN (GMG /CAPITOL) LIL WAYNE FEAT. DRAKE (CASH MONEY/UNIVERSAL MOTOWN) KEM (UNIVERSAL MOTOWN/UMRG) KERI HILSON 7 NEW LAY IT DOWN GRENADE EMERGENCY NO BOYS ALLOWED MOSLEY/ZONE 44NTERSCOPE 01508BlGA LLOYD (YOUNG- GOLOIE/ZONE 4 /INTERSCOPE) EMI BRUNO MARS (ELEKTRA/ATLANTIC) TANK (MOGAME /SONG DYNASTY /ATLANTIC) ..NO BS LIKE A G6 .