Evaluating the [In]Security of Web Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Development of Web Based Application for Visualisation of Railway Condition Data

Development of Web Based Application for Visualisation of Railway Condition Data Abiola Famureva Master Programme in Mobile Systems 2020 Luleå University of Technology Department of Civil, Environmental and Natural Resources Engineering Development of Web Based Application for Visualisation of Railway Condition Data by Abiola Famurewa Master of Science Mobile systems Department of Computer Science, Electrical and Space Engineering Luleå University of Technology Supervisor Dr. Josef Hallberg August, 2014 PREFACE This master thesis was carried out at Damill AB between 2013 and early 2014. It is submitted for the partial fulfillment of the requirement of Master Degree in Computer Science and Engineering at Luleå University of Technology. The central focus and contribution of the thesis is the development of web application which is suitable for visualizing railway condition data for different stakeholders. My sincere gratitude goes to God for his divine support and goodness towards me all through the programme especially during the thesis work. I would like to thank my examiner Prof. Christer Åhlund and my supervisor Dr. Josef Hallberg for the chance to work with them and their encouragement which inspires me to complete the thesis. I would like appreciate my external supervisor Mr. Dan Larsson and the entire members of staff of Damill AB for giving creating a good working environment and giving me the technical support during the course of the thesis work. The technical support of Jon Öberg in the collection and structuring of the data is appreciated I would also like to express my special gratitude to my dear husband Stephen and lovely children, Jeremiah and Joanna for their encouragement, patience and support to make this final thesis possible. -

The Most Powerful Way to Develop Web Applications!

The most powerful way to develop Web applications! CodeCharge reduced the time it took to develop applications Whether you’re developing data-driven interactive Web sites or enterprise from months to weeks! My latest site is over 56,000 lines of Internet and Intranet systems, CodeCharge Studio provides the fastest way to code with less than 5% of it hand-coded! build your applications. —Dan Safar, Insight Consulting Group CodeCharge has enabled us to deliver eye-popping database- driven Web sites in a fraction of the time other tools take. Powerful Web RAD and Web Reporting Tool —Frank Rocco CodeCharge Studio incorporates a powerful code generation engine wrapped in a full-featured IDE. You simply specify your application’s user I have intermediate skill in ColdFusion and SQL, entry-level skill in PHP, and almost no skill with ASP, yet I have been able interactions, logic and data sources through intuitive point-and-click wizards, to generate successfully applications in all three languages and CodeCharge automatically generates clean, customizable, industrial with ease. strength applications and Web reports CodeCharge works with all databases —Kelly D. Carter and supports all major server technologies: PHP, ASP.NET (C# & VB), ASP (VBScript), Java (JSP or Servlets), ColdFusion 4.0 and Perl. I have had compliments from my peers on how neat and readable my code is. Little do they know, CodeCharge did it all for me. Shhh! —D.B. Code generation sets CodeCharge apart CodeCharge Studio is the fi rst visual RAD tool that employs a code- generation engine to automatically create database-driven Web applications. -

Buyers Guide Product Listings

BUYERS GUIDE PRODUCT LISTINGS Visual Studio Magazine Buyers’ Guide Product Listings The 2009 Visual Studio Magazine Buyers’ Guide listings comprise more than 700 individual products and services, ranging from developer tooling and UI components to Web hosting and instructor-led training. Included for each product is contact and pricing information. Keep in mind that many products come in multiple SKUs and with varied license options, so it’s always a good idea to contact vendors directly for specific pricing. The developer tools arena is a vast and growing space. As such, we’re always on the prowl for new tools and vendors. Know of a product our readers might want to learn more about? E-mail us at [email protected]. BUG & FEATURE TRACKING Gemini—CounterSoft Starts at $1189 • countersoft.com • +44 (0)1753 824000 Rational ClearQuest—IBM Rational Software $1,810 • ibm.com/rational • 888-426-3774 IssueNet Intercept—Elsinore Technologies Call for price • elsitech.com • 866-866-0034 FogBugz 7.0—Fog Creek Software $199 • fogcreek.com • 888-364-2849; 212-279-2076 SilkPerformer—Borland Call for price • borland.com • 800-632-2864; 512-340-2200 OnTime 2009 Professional—Axosoft Starts at $795 for five users • axosoft.com • 800-653-0024; SourceOffSite 4.2—SourceGear 480-362-1900 $239 • sourcegear.com • 217-356-0105 Alexsys Team 2.10—Alexsys Surround SCM 2009—Seapine Software Starts at $145 • alexcorp.com • 888-880-2539; 781-279-0170 Call for price • seapine.com • 888-683-6456; 513-754-1655 AppLife DNA—Kinetic Jump Software TeamInspector—Borland -

Php|Architect the Magazine for PHP Professionals Writing Secure PHP Code Make Your Applications Safer

JANUARY 2003 - Volume II - Issue 1 php|architect The Magazine For PHP Professionals Writing Secure PHP Code Make your applications safer IonCube PHP Accelerator 1.3.3 Reviewed for you: CodeCharge Studio 1.0 Exclusive ZEND Interview Plus: Using the .NET Assembly with PHP Writing A Web-based PDF Viewer Taming Full-Text Search with MySQL This copy is registered to: Jakob Breivik Grimstveit Accessing the Windows API and Other DLLs [email protected] Implementing Database Persistence Layers The designers of PHP offerfer youyou thethe fullfull spectrumspectrum ofof PHPPHP solutionssolutions Serve More. With Less. Zend Performance Suite Reliable Performance Management for PHP Visit www.zend.com for evaluation version and ROI calculator Technologies Ltd. TABLE OF CONTENTS php|architect Departments Features 10 | Implementing Database 4 | EDITORIAL RANTS Persistence Layers in PHP by Shawn Bedard 5 | NEW STUFF 19 | Accessing the Windows API and other Dynamic Link Libraries X 6 | PHP-WIN by David Jorm CodeCharge Studio 1.0 30 | Taming Full-Text Search with E MySQL 58 | REVIEWS by Leon Vismer EX D C ionCube PHP Accelerator LU 37 | The Zend of Computer SI VE Programming: Small Business N Heaven 68 | TIPS & TRICKS I by Marco Tabini by John Holmes 42 | Using The .NET Assembly through COM in PHP 71 | BOOK REVIEWS by Jayesh Jain 50 | Writing Secure PHP Code 73 | exit(0); by Theo Spears Let’s Call it the Unknown Language 62 | Writing a Web-based PDF Viewer by Marco Tabini January 2003 · PHP Architect · www.phparch.com 3 EDITORIAL There is nothing like a "trial by With this issue, I think we have php|architect fire" to make or break your day. -

Thematic Cycle 2: Software Development Table of Contents

THEMATIC CYCLE 2: SOFTWARE DEVELOPMENT THEMATIC CYCLE 2: SOFTWARE DEVELOPMENT TABLE OF CONTENTS 1. SDLC (software development life cycle) tutorial: what is, phases, model -------------------- 4 What is Software Development Life Cycle (SDLC)? --------------------------------------------------- 4 A typical Software Development Life Cycle (SDLC) consists of the following phases: -------- 4 Types of Software Development Life Cycle Models: ------------------------------------------------- 6 2. What is HTML? ----------------------------------------------------------------------------------------------- 7 HTML is the standard markup language for creating Web pages. -------------------------------- 7 Example of a Simple HTML Document---------------------------------------------------------------- 7 Exercise: learn html using notepad or textedit ---------------------------------------------------- 11 Example: ---------------------------------------------------------------------------------------------------- 13 The <!DOCTYPE> Declaration explained ------------------------------------------------------------ 13 HTML Headings ---------------------------------------------------------------------------------------------- 13 Example: ---------------------------------------------------------------------------------------------------- 13 HTML Paragraphs ------------------------------------------------------------------------------------------- 14 Example: ---------------------------------------------------------------------------------------------------- -

5.1. Codecharge

國 立 交 通 大 學 資訊科學系 碩 士 論 文 適用於 Visual Studio .NET 上之 多使用者介面產生器 A Multi User-Interface Generation Plug-in for Visual Studio .NET 研 究 生:高啟涵 指導教授:袁賢銘 教授 適用於 Visual Studio .NET 上之多使用者介面產生器 A Multi User-Interface Generation Plug-in for Visual Studio .NET 研 究 生:高啟涵 Student:Chi-Han Kao 指導教授:袁賢銘 Advisor:Shyan-Ming Yuan 國 立 交 通 大 學 資 訊 科 學系 碩 士 論 文 A Thesis Submitted to Institute of Computer and Information Science College of Electrical Engineering and Computer Science National Chiao Tung University in partial Fulfillment of the Requirements for the Degree of Master in Computer and Information Science June 2005 Hsinchu, Taiwan, Republic of China 中華民國九十四年六月 ii 適用於 Visual Studio .NET 上之多使用者介面產生器 學生: 高啟涵 指導教授: 袁賢銘 國立交通大學資訊科學系﹙研究所﹚碩士班 摘要 隨著手機的多樣化,各種設備間的規格也漸趨差異。就程式設計師而言, 相同功能的程式,為了在不同平台上執行,便必須以不同的程式語言重新撰寫。 這樣的行為對於程式開發者而言,是重覆而不必要的。因此,我們希望提供一 個轉換的工具,將撰寫好的程式,轉換成特定語言的版本。 在本篇論文中,我們選擇將轉換的功能嵌入到 Microsoft 所開發的 Visual Studio .NET。這是一套在 .NET 平台下,開發程式的整合性開發工具 (Integrated Development Environment),我們希望使用者利用 Visual Studio .NET 本身的開發環境,拖拉使用者介面呈現的方法,而後我們的轉換工 具便可以產生相對應的 C#、J2ME、XHTML-MP 和 WML 版本的使用者介面,節省程 式設計師的開發時間。所以,從程式開發者的角度而言,只要將我們的轉換工 具安裝到 Visual Studio .NET 中,使用一樣的方式作使用者介面的開發,而後 便可以得到不同版本的使用者介面,節省在不同平台上開發程式的時間。 iii A Multi User-Interface Generation Plug-in for Visual Studio .NET Student: Chi-Han Kao Advisor: Shyan-Ming Yuan Department of Computer and Information Science National Chiao Tung University Abstract With the variety of the mobile devices, specifications between devices have become more and more different. From the point view of programmers, in order to execute the application with the same function on the different platform, programmers have to rewrite the program in another language. -

CCS Developer Magazine

Vol OCTOBER 2004 VOLUME NO. 1 ISSUE NO. 3 DataObjx L.L.C. Website: www.dataobjx.net DataObjx L.L.C. Meriden, CT USA Office: 860/919-2536 Email: [email protected] Website: www.dataobjx.net DataObjx L.L.C. Extra: Building A In This Issue Document Management Dynamic Sub Menus - In this article we examine a technique that will enable you to System - Part 1 for rapidly create and integrate a sub-menu system for the various sections of your website. This method allows for dynamic link generation and allows you to create as many separate PHP Included In sub menus as you want. This Issue. Building A Document Management System Part 1 – PHP - Included in this issue is the conversion of “Part 1” of the Document Management System. Translated By Sixto Santos. If you’re a PHP developer, download Issue #1 in conjunction with this article and code. Dynamically Formatting Row Colors – PHP -In this article the technique used to dynamically alter the color of a row (php) is discussed. Read this article in conjunction with Issue #1. Producing Excel Spreadsheet Using The PHP Common Interface -In this article Fernando Sibaja explains how you can produce excel spreadsheets for any table or query using the PHP Common Interface. Need More Answers? Building A Document Management System – Part 3 -We wrap up our three (3) part Click here to access the Code series on building a document management system. Together we’ll integrate Check-In and Charge Studio Forums Check-Out capability plus the ability to un-do a check out. -

9397 Microway Update #24 Aprmay08.Indd

UPDATE April / May 2008 NEW! CONTENTS Functional Index page 22 DEVELOPER 3rdRail . 17 C++Builder 2007 . 16 Visual Studio 2008 Officially Launched! new technology for developing rich interactive Chart FX . 3 As most would already know by now, Microsoft applications for the Web). Take a look at CodeCharge Studio 4.0 . 9 the products under the Developer category CodeGear RAD Studio 2007. 16 “officially” launched Visual Studio 2008 in February. ComponentOne Studio Enterprise 2008 . 4 in this edition. DataWindow. NET 2.5 . 20 What is not so well known is that our MSDN Delphi/400 & Delphi/400 for PHP . 16 InstallShield 2008 Express . 14 customers have enjoyed access to the final release DB2, AS400 and Series-i products InstallShield 2008 . 11 of Visual Studio 2008 since November 2007, and MicroWay has products to assist with data JBuilder 2008. 17 JGear LiveSource. 17 have saved $000’s by ordering under a licensing replication, real-time XML translation and to make Keystone Training - .NET Complete . 2 scheme known as the “Open Business License”. integration and development faster for Windows LEADTOOLS Document Imaging Suite . 3 Nalpeiron Licensing Service . 24 If you don’t have an MSDN subscription, or are based developers & administrators working with NetAdvantage for .NET + WPF . 6 getting close to renewal time, you should check AS400/Series-i based data. See the Hit Ritmo and PrimalScript 2007 . 8 RadControls for ASP.NET . 8 out the benefits and huge savings on VS2008 and DBmoto products, as well as CodeGear’s new Ritmo/DB2 . 24 MSDN presented on page 7. MicroWay is the Delphi 400 in this edition. -

Codecharge 3.0

PRODUCT REVIEW: CodeCharge 3.0 PRODUCT REVIEW CodeCharge 3.0 Yet Another IDE? by PETER B. MacINTYRE his month I am reviewing another IDE for PHP. PHP: 4+, 5+ (MySQL - plus other ODBC databases) I wanted to look at this one, because I have a PRODUCT VERSION: 3.0 need to find the “Perfect PHP IDE,” and I have O/S: Windows 95, 98, ME, NT4, 2000 or XP yet to find one. There are limitations to this one as well: the first one being that it is Windows- Tonly. The plus side (if you consider it a plus) is that it PRICING: $279.95 (non-perpetual, per user peryear) can generate code for many web development platforms, $499.95 US (perpetual, per user) namely ASP.NET (C# & VB), ASP (VBScript), Java (JSP or Servlets), ColdFusion 4.0, PHP, and Perl. Naturally, this LINK: http://www.yessoftware.com/index2.php review will focus on the PHP side of the fence. Keeping with tradition, here is what the yessoftware web site has to say about the product: Getting Started The install was clean and uneventful. Many ODBC drivers CodeCharge Studio is the most productive solution for are installed at the same time as well as a few sample MS- visually creating database-driven Web applications with Access database files. Icons are created on the desktop minimal amount of coding. The support for virtually all and you are ready to explore. Figure 1 shows one of the databases, web servers and web technologies makes screens that are involved in the installation process. -

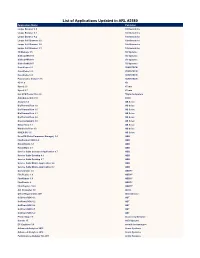

List of Applications Updated in ARL #2580

List of Applications Updated in ARL #2580 Application Name Publisher Loupe Browser 4.0 10x Genomics Loupe Browser 4.1 10x Genomics Loupe Browser 4.2 10x Genomics Loupe Cell Browser 2.0 10x Genomics Loupe Cell Browser 3.0 10x Genomics Loupe Cell Browser 3.1 10x Genomics 3D Manage 1.5 3D Systems GibbsCAM 2015 3D Systems GibbsCAM 2016 3D Systems GibbsCAM 2017 3D Systems CaseViewer 2.1 3DHISTECH CaseViewer 2.2 3DHISTECH CaseViewer 2.3 3DHISTECH Pannoramic Viewer 1.15 3DHISTECH 4D 11.0 4D Sync2 2.6 4Team Sync2 2.7 4Team Hot CPU Tester Pro 4.4 7Byte Computers AutoQuoterX II 2.10 80/20 Analyst 1.4 AB Sciex BioPharmaView 1.0 AB Sciex BioPharmaView 1.5 AB Sciex BioPharmaView 2.1 AB Sciex BioPharmaView 3.0 AB Sciex DiscoveryQuant 3.0 AB Sciex MasterView 1.1 AB Sciex MetabolitePilot 1.5 AB Sciex SCIEX OS 1.5 AB Sciex DrivePM (Drive Parameter Manager) 1.4 ABB FlexPendant SDK 6.0 ABB RobotStudio 5.1 ABB RobotWare 5.1 ABB Service Suite Compose Application 9.7 ABB Service Suite Desktop 9.6 ABB Service Suite Desktop 9.7 ABB Service Suite Mobile Application 9.6 ABB Service Suite Mobile Application 9.7 ABB Comparator 2.0 ABBYY FineReader 1.0 ABBYY FineReader 5.0 ABBYY FineReader 9 ABBYY FlexiCapture 12.0 ABBYY AVI Converter 1.6 Abdio Office Regenerator 2011 Abstradrome SciChart SDK 4.2 ABT SciChart SDK 5.2 ABT SciChart SDK 5.4 ABT SciChart SDK 6.1 ABT SciChart SDK 6.2 ABT Photo Snap 7.9 Accessory Software Canvas 15 ACD Systems EC-Engineer 3.0 acontis technologies Advanced Analytics 2013 Acorn Systems Advanced Analytics 2016 Acorn Systems Performance Analyzer -

Artisteer 2.0 User Manual

1 page artisteer.com | page 1 2 page Table of Contents Table of Contents ............................................................................................................................................. 2 What is Artisteer? ............................................................................................................................................. 4 What’s new in Artisteer 2 .................................................................................................................................. 5 Product Comparison ......................................................................................................................................... 6 Quick Start Guide ............................................................................................................................................. 7 Downloading Artisteer ................................................................................................................................... 7 System Requirements ................................................................................................................................... 7 Installing Artisteer .......................................................................................................................................... 8 Activating the software .................................................................................................................................. 9 How to work with the Artisteer Interface ........................................................................................................ -

UPDATE 2008 Volume 2

UPDATE 2008 Volume 2 NEW! CONTENTS Functional Index page 22 DEVELOPER Aspose.Words for .NET . .7 CodeCharge Studio . 8 Welcome to the latest MicroWay Update. Framework 3.5 and Visual Studio 2008 support ComponentOne Studio for WPF...........................9 plus a host of other enhancements, and also DBMoto..............................................3 As usual there’s many new products and DataWindow.NET .....................................17 AdminStudio 9. See Page 11 for details. DeepSea Obfuscator . .3 Enhanced File Transfer Server . .6 versions in this edition. Microsoft TechEd attendees will notice amongst Expression Professional Subscription . .5 a variety of exclusive specials that MicroWay is InstallAnywhere 2008 . .14 I want to draw your attention to the product called offering at our stand, that there is an extra special InstallShield 2009 . 13 ReSharper. This product is receiving rave reviews InstallShield 2009 Express . .14 deal on PrimalScript. We’re pleased to announce Leadtools Document Imaging Suite . 5 from many C#, VB.NET, and ASP.NET developers. that in conjunction with Sapien the makers of Nalpeiron Licensing Service .............................8 Basically they say that once you use it, you wonder NetAdvantage ICONS ..................................10 PrimalScript, we’re extending this special offer to NetAdvantage for Windows Forms .......................15 how you got along without it. There is a trial version all of our customers. See below for a saving of PowerBuilder ........................................17 available from our website, so I encourage you to PrimalScript 2007 Enterprise . 1 $115 off the price plus an additional free Platinum RadControls for ASP.NET ...............................17 give it a try. ReSharper 4.0 . .24 Support valued at $295. PrimalScript is a great tool Spread for ASP.NET 4..................................10 ReSharper 4.0 provides solution-wide error for anyone doing scripting or code editing.